1085 Repositories

Python transformer-entity-tracking Libraries

a library for audio and music analysis

aubio aubio is a library to label music and sounds. It listens to audio signals and attempts to detect events. For instance, when a drum is hit, at wh

A very simple framework for state-of-the-art Natural Language Processing (NLP)

A very simple framework for state-of-the-art NLP. Developed by Humboldt University of Berlin and friends. Flair is: A powerful NLP library. Flair allo

Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image classification, in Pytorch

Transformer in Transformer Implementation of Transformer in Transformer, pixel level attention paired with patch level attention for image c

Implementation of E(n)-Transformer, which extends the ideas of Welling's E(n)-Equivariant Graph Neural Network to attention

E(n)-Equivariant Transformer (wip) Implementation of E(n)-Equivariant Transformer, which extends the ideas from Welling's E(n)-Equivariant G

A time tracking application

GTimeLog GTimeLog is a simple app for keeping track of time. Contents Installing Documentation Resources Credits Installing GTimeLog is packaged for D

Pytorch Code for "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation"

Medical-Transformer Pytorch Code for the paper "Medical Transformer: Gated Axial-Attention for Medical Image Segmentation" About this repo: This repo

Implementation of SETR model, Original paper: Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers.

SETR - Pytorch Since the original paper (Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers.) has no official

3D Reconstruction Software

Meshroom is a free, open-source 3D Reconstruction Software based on the AliceVision Photogrammetric Computer Vision framework. Learn more details abou

A multi-entity Transformer for multi-agent spatiotemporal modeling.

baller2vec This is the repository for the paper: Michael A. Alcorn and Anh Nguyen. baller2vec: A Multi-Entity Transformer For Multi-Agent Spatiotempor

Official PyTorch implementation of Joint Object Detection and Multi-Object Tracking with Graph Neural Networks

This is the official PyTorch implementation of our paper: "Joint Object Detection and Multi-Object Tracking with Graph Neural Networks". Our project website and video demos are here.

Implementation of self-attention mechanisms for general purpose. Focused on computer vision modules. Ongoing repository.

Self-attention building blocks for computer vision applications in PyTorch Implementation of self attention mechanisms for computer vision in PyTorch

A command line utility for tracking a stock market portfolio. Primarily featuring high resolution braille graphs.

A command line stock market / portfolio tracker originally insipred by Ericm's Stonks program, featuring unicode for incredibly high detailed graphs even in a terminal.

Summarization, translation, sentiment-analysis, text-generation and more at blazing speed using a T5 version implemented in ONNX.

Summarization, translation, Q&A, text generation and more at blazing speed using a T5 version implemented in ONNX. This package is still in alpha stag

Named-entity recognition using neural networks. Easy-to-use and state-of-the-art results.

NeuroNER NeuroNER is a program that performs named-entity recognition (NER). Website: neuroner.com. This page gives step-by-step instructions to insta

Bidirectional LSTM-CRF and ELMo for Named-Entity Recognition, Part-of-Speech Tagging and so on.

anaGo anaGo is a Python library for sequence labeling(NER, PoS Tagging,...), implemented in Keras. anaGo can solve sequence labeling tasks such as nam

Textpipe: clean and extract metadata from text

textpipe: clean and extract metadata from text textpipe is a Python package for converting raw text in to clean, readable text and extracting metadata

Kashgari is a production-level NLP Transfer learning framework built on top of tf.keras for text-labeling and text-classification, includes Word2Vec, BERT, and GPT2 Language Embedding.

Kashgari Overview | Performance | Installation | Documentation | Contributing 🎉 🎉 🎉 We released the 2.0.0 version with TF2 Support. 🎉 🎉 🎉 If you

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sockeye This package contains the Sockeye project, an open-source sequence-to-sequence framework for Neural Machine Translation based on Apache MXNet

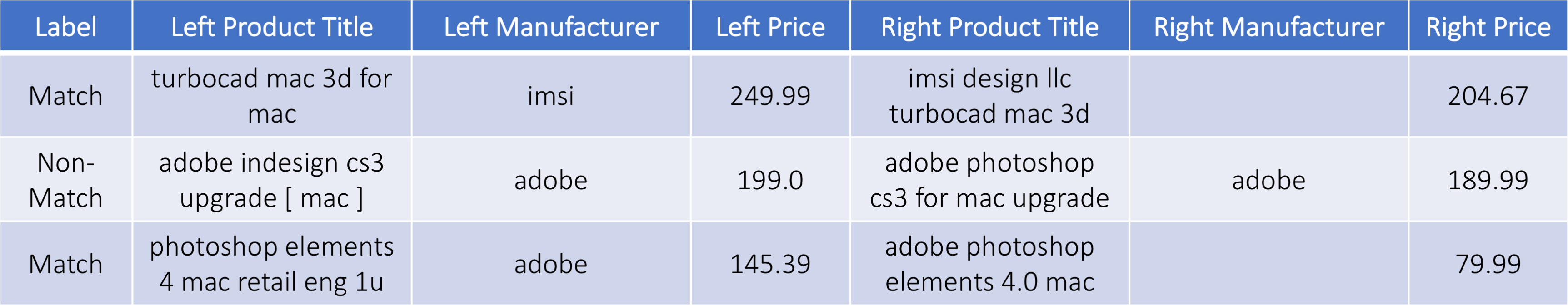

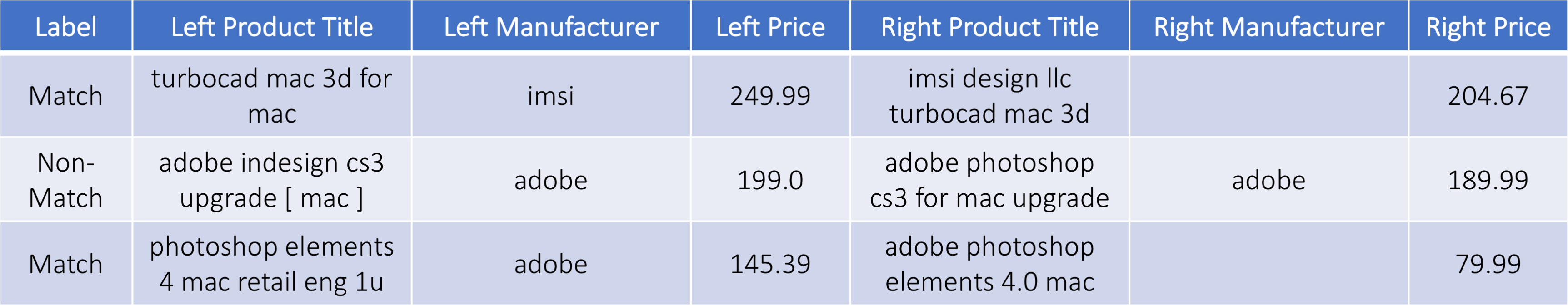

Python package for performing Entity and Text Matching using Deep Learning.

DeepMatcher DeepMatcher is a Python package for performing entity and text matching using deep learning. It provides built-in neural networks and util

Snips Python library to extract meaning from text

Snips NLU Snips NLU (Natural Language Understanding) is a Python library that allows to extract structured information from sentences written in natur

Code for the paper "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer"

T5: Text-To-Text Transfer Transformer The t5 library serves primarily as code for reproducing the experiments in Exploring the Limits of Transfer Lear

Official Stanford NLP Python Library for Many Human Languages

Stanza: A Python NLP Library for Many Human Languages The Stanford NLP Group's official Python NLP library. It contains support for running various ac

State of the Art Natural Language Processing

Spark NLP: State of the Art Natural Language Processing Spark NLP is a Natural Language Processing library built on top of Apache Spark ML. It provide

An open source library for deep learning end-to-end dialog systems and chatbots.

DeepPavlov is an open-source conversational AI library built on TensorFlow, Keras and PyTorch. DeepPavlov is designed for development of production re

:id: A python library for accurate and scalable fuzzy matching, record deduplication and entity-resolution.

Dedupe Python Library dedupe is a python library that uses machine learning to perform fuzzy matching, deduplication and entity resolution quickly on

A very simple framework for state-of-the-art Natural Language Processing (NLP)

A very simple framework for state-of-the-art NLP. Developed by Humboldt University of Berlin and friends. IMPORTANT: (30.08.2020) We moved our models

🤗Transformers: State-of-the-art Natural Language Processing for Pytorch and TensorFlow 2.0.

State-of-the-art Natural Language Processing for PyTorch and TensorFlow 2.0 🤗 Transformers provides thousands of pretrained models to perform tasks o

💫 Industrial-strength Natural Language Processing (NLP) in Python

spaCy: Industrial-strength NLP spaCy is a library for advanced Natural Language Processing in Python and Cython. It's built on the very latest researc

TransGAN: Two Transformers Can Make One Strong GAN

[Preprint] "TransGAN: Two Transformers Can Make One Strong GAN", Yifan Jiang, Shiyu Chang, Zhangyang Wang

Implementation of TabTransformer, attention network for tabular data, in Pytorch

Tab Transformer Implementation of Tab Transformer, attention network for tabular data, in Pytorch. This simple architecture came within a hair's bread

🌍💉 Global COVID-19 vaccination data at the regional level.

COVID-19 vaccination data at subnational level. To ensure its officiality, the source data is carefully verified.

Authors implementation of LieTransformer: Equivariant Self-Attention for Lie Groups

LieTransformer This repository contains the implementation of the LieTransformer used for experiments in the paper LieTransformer: Equivariant self-at

This is a Pytorch implementation of the paper: Self-Supervised Graph Transformer on Large-Scale Molecular Data.

This is a Pytorch implementation of the paper: Self-Supervised Graph Transformer on Large-Scale Molecular Data.

Implementation of the paper NAST: Non-Autoregressive Spatial-Temporal Transformer for Time Series Forecasting.

Non-AR Spatial-Temporal Transformer Introduction Implementation of the paper NAST: Non-Autoregressive Spatial-Temporal Transformer for Time Series For

Summarization, translation, sentiment-analysis, text-generation and more at blazing speed using a T5 version implemented in ONNX.

Summarization, translation, Q&A, text generation and more at blazing speed using a T5 version implemented in ONNX. This package is still in alpha stag

Named-entity recognition using neural networks. Easy-to-use and state-of-the-art results.

NeuroNER NeuroNER is a program that performs named-entity recognition (NER). Website: neuroner.com. This page gives step-by-step instructions to insta

Bidirectional LSTM-CRF and ELMo for Named-Entity Recognition, Part-of-Speech Tagging and so on.

anaGo anaGo is a Python library for sequence labeling(NER, PoS Tagging,...), implemented in Keras. anaGo can solve sequence labeling tasks such as nam

Textpipe: clean and extract metadata from text

textpipe: clean and extract metadata from text textpipe is a Python package for converting raw text in to clean, readable text and extracting metadata

Kashgari is a production-level NLP Transfer learning framework built on top of tf.keras for text-labeling and text-classification, includes Word2Vec, BERT, and GPT2 Language Embedding.

Kashgari Overview | Performance | Installation | Documentation | Contributing 🎉 🎉 🎉 We released the 2.0.0 version with TF2 Support. 🎉 🎉 🎉 If you

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sockeye This package contains the Sockeye project, an open-source sequence-to-sequence framework for Neural Machine Translation based on Apache MXNet

Python package for performing Entity and Text Matching using Deep Learning.

DeepMatcher DeepMatcher is a Python package for performing entity and text matching using deep learning. It provides built-in neural networks and util

Snips Python library to extract meaning from text

Snips NLU Snips NLU (Natural Language Understanding) is a Python library that allows to extract structured information from sentences written in natur

Code for the paper "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer"

T5: Text-To-Text Transfer Transformer The t5 library serves primarily as code for reproducing the experiments in Exploring the Limits of Transfer Lear

Official Stanford NLP Python Library for Many Human Languages

Stanza: A Python NLP Library for Many Human Languages The Stanford NLP Group's official Python NLP library. It contains support for running various ac

State of the Art Natural Language Processing

Spark NLP: State of the Art Natural Language Processing Spark NLP is a Natural Language Processing library built on top of Apache Spark ML. It provide

An open source library for deep learning end-to-end dialog systems and chatbots.

DeepPavlov is an open-source conversational AI library built on TensorFlow, Keras and PyTorch. DeepPavlov is designed for development of production re

:id: A python library for accurate and scalable fuzzy matching, record deduplication and entity-resolution.

Dedupe Python Library dedupe is a python library that uses machine learning to perform fuzzy matching, deduplication and entity resolution quickly on

A very simple framework for state-of-the-art Natural Language Processing (NLP)

A very simple framework for state-of-the-art NLP. Developed by Humboldt University of Berlin and friends. IMPORTANT: (30.08.2020) We moved our models

🤗Transformers: State-of-the-art Natural Language Processing for Pytorch and TensorFlow 2.0.

State-of-the-art Natural Language Processing for PyTorch and TensorFlow 2.0 🤗 Transformers provides thousands of pretrained models to perform tasks o

💫 Industrial-strength Natural Language Processing (NLP) in Python

spaCy: Industrial-strength NLP spaCy is a library for advanced Natural Language Processing in Python and Cython. It's built on the very latest researc

A Python application for tracking, reporting on timing and complexity in Python code

A command-line application for tracking, reporting on complexity of Python tests and applications. wily [a]: quick to think of things, having a very g

NLP Core Library and Model Zoo based on PaddlePaddle 2.0

PaddleNLP 2.0拥有丰富的模型库、简洁易用的API与高性能的分布式训练的能力,旨在为飞桨开发者提升文本建模效率,并提供基于PaddlePaddle 2.0的NLP领域最佳实践。

Implementation of Feedback Transformer in Pytorch

Feedback Transformer - Pytorch Simple implementation of Feedback Transformer in Pytorch. They improve on Transformer-XL by having each token have acce

1st Place Solution to ECCV-TAO-2020: Detect and Represent Any Object for Tracking

Instead, two models for appearance modeling are included, together with the open-source BAGS model and the full set of code for inference. With this code, you can achieve around mAP@23 with TAO test set (based on our estimation).

Transformer-based Text Auto-encoder (T-TA) using TensorFlow 2.

T-TA (Transformer-based Text Auto-encoder) This repository contains codes for Transformer-based Text Auto-encoder (T-TA, paper: Fast and Accurate Deep

Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch

DALL-E in Pytorch Implementation / replication of DALL-E, OpenAI's Text to Image Transformer, in Pytorch. It will also contain CLIP for ranking the ge

Implementation of Bottleneck Transformer in Pytorch

Bottleneck Transformer - Pytorch Implementation of Bottleneck Transformer, SotA visual recognition model with convolution + attention that outperforms

Jittor implementation of PCT:Point Cloud Transformer

PCT: Point Cloud Transformer This is a Jittor implementation of PCT: Point Cloud Transformer.

Trankit is a Light-Weight Transformer-based Python Toolkit for Multilingual Natural Language Processing

Trankit: A Light-Weight Transformer-based Python Toolkit for Multilingual Natural Language Processing Trankit is a light-weight Transformer-based Pyth

Multiple-Object Tracking with Transformer

TransTrack: Multiple-Object Tracking with Transformer Introduction TransTrack: Multiple-Object Tracking with Transformer Models Training data Training

TimeTagger is a web-based time-tracking solution that can be run locally or on a server

TimeTagger is a web-based time-tracking solution that can be run locally or on a server. In the latter case, you'll want to add authentication, and also be aware of the license restrictions.

Chinese NewsTitle Generation Project by GPT2.带有超级详细注释的中文GPT2新闻标题生成项目。

GPT2-NewsTitle 带有超详细注释的GPT2新闻标题生成项目 UpDate 01.02.2021 从网上收集数据,将清华新闻数据、搜狗新闻数据等新闻数据集,以及开源的一些摘要数据进行整理清洗,构建一个较完善的中文摘要数据集。 数据集清洗时,仅进行了简单地规则清洗。

Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting

Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting This is the origin Pytorch implementation of Informer in the followin

Implementation of the Point Transformer layer, in Pytorch

Point Transformer - Pytorch Implementation of the Point Transformer self-attention layer, in Pytorch. The simple circuit above seemed to have allowed

Graph Transformer Architecture. Source code for

Graph Transformer Architecture Source code for the paper "A Generalization of Transformer Networks to Graphs" by Vijay Prakash Dwivedi and Xavier Bres

Big Bird: Transformers for Longer Sequences

BigBird, is a sparse-attention based transformer which extends Transformer based models, such as BERT to much longer sequences. Moreover, BigBird comes along with a theoretical understanding of the capabilities of a complete transformer that the sparse model can handle.

Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

Segmentation Transformer Implementation of Segmentation Transformer in PyTorch, a new model to achieve SOTA in semantic segmentation while using trans

Explainability for Vision Transformers (in PyTorch)

Explainability for Vision Transformers (in PyTorch) This repository implements methods for explainability in Vision Transformers

Joint detection and tracking model named DEFT, or ``Detection Embeddings for Tracking.

DEFT: Detection Embeddings for Tracking DEFT: Detection Embeddings for Tracking, Mohamed Chaabane, Peter Zhang, J. Ross Beveridge, Stephen O'Hara

PhoNLP: A BERT-based multi-task learning toolkit for part-of-speech tagging, named entity recognition and dependency parsing

PhoNLP is a multi-task learning model for joint part-of-speech (POS) tagging, named entity recognition (NER) and dependency parsing. Experiments on Vietnamese benchmark datasets show that PhoNLP produces state-of-the-art results, outperforming a single-task learning approach that fine-tunes the pre-trained Vietnamese language model PhoBERT for each task independently.

Implementation of SE3-Transformers for Equivariant Self-Attention, in Pytorch.

SE3 Transformer - Pytorch Implementation of SE3-Transformers for Equivariant Self-Attention, in Pytorch. May be needed for replicating Alphafold2 resu

Implementation of Lie Transformer, Equivariant Self-Attention, in Pytorch

Lie Transformer - Pytorch (wip) Implementation of Lie Transformer, Equivariant Self-Attention, in Pytorch. Only the SE3 version will be present in thi

Pytorch implementation of PCT: Point Cloud Transformer

PCT: Point Cloud Transformer This is a Pytorch implementation of PCT: Point Cloud Transformer.

CCKS-Title-based-large-scale-commodity-entity-retrieval-top1

- 基于标题的大规模商品实体检索top1 一、任务介绍 CCKS 2020:基于标题的大规模商品实体检索,任务为对于给定的一个商品标题,参赛系统需要匹配到该标题在给定商品库中的对应商品实体。 输入:输入文件包括若干行商品标题。 输出:输出文本每一行包括此标题对应的商品实体,即给定知识库中商品 ID,

CrossNER: Evaluating Cross-Domain Named Entity Recognition (AAAI-2021)

CrossNER is a fully-labeled collected of named entity recognition (NER) data spanning over five diverse domains (Politics, Natural Science, Music, Literature, and Artificial Intelligence) with specialized entity categories for different domains.

Framework for fine-tuning pretrained transformers for Named-Entity Recognition (NER) tasks

NERDA Not only is NERDA a mesmerizing muppet-like character. NERDA is also a python package, that offers a slick easy-to-use interface for fine-tuning

PyTorch implementation of "Conformer: Convolution-augmented Transformer for Speech Recognition" (INTERSPEECH 2020)

PyTorch implementation of Conformer: Convolution-augmented Transformer for Speech Recognition. Transformer models are good at capturing content-based

Graph neural network message passing reframed as a Transformer with local attention

Adjacent Attention Network An implementation of a simple transformer that is equivalent to graph neural network where the message passing is done with

Wrapper around the UPS API for creating shipping labels and fetching a package's tracking status.

ClassicUPS: A Useful UPS Library ClassicUPS is an Apache2 Licensed wrapper around the UPS API for creating shipping labels and fetching a package's tr

Official Stanford NLP Python Library for Many Human Languages

Stanza: A Python NLP Library for Many Human Languages The Stanford NLP Group's official Python NLP library. It contains support for running various ac

An open source library for deep learning end-to-end dialog systems and chatbots.

DeepPavlov is an open-source conversational AI library built on TensorFlow, Keras and PyTorch. DeepPavlov is designed for development of production re

Named-entity recognition using neural networks. Easy-to-use and state-of-the-art results.

NeuroNER NeuroNER is a program that performs named-entity recognition (NER). Website: neuroner.com. This page gives step-by-step instructions to insta

Snips Python library to extract meaning from text

Snips NLU Snips NLU (Natural Language Understanding) is a Python library that allows to extract structured information from sentences written in natur

:id: A python library for accurate and scalable fuzzy matching, record deduplication and entity-resolution.

Dedupe Python Library dedupe is a python library that uses machine learning to perform fuzzy matching, deduplication and entity resolution quickly on

💫 Industrial-strength Natural Language Processing (NLP) in Python

spaCy: Industrial-strength NLP spaCy is a library for advanced Natural Language Processing in Python and Cython. It's built on the very latest researc