1620 Repositories

Python transformers-models Libraries

In this project, RandomOverSampler and SMOTE algorithms were used to perform oversampling, ClusterCentroids algorithm was used to undersampling, SMOTEENN algorithm was applied as a combinatorial approach of over- and undersampling of credit card credit dataset from LendingClub. Machine learning models - BalancedRandomForestClassifier and EasyEnsembleClassifier were used to predict credit risk.

Overview of Credit Card Analysis In this project, RandomOverSampler and SMOTE algorithms were used to perform oversampling, ClusterCentroids algorithm

Under the hood working of transformers, fine-tuning GPT-3 models, DeBERTa, vision models, and the start of Metaverse, using a variety of NLP platforms: Hugging Face, OpenAI API, Trax, and AllenNLP

Transformers-for-NLP-2nd-Edition @copyright 2022, Packt Publishing, Denis Rothman Contact me for any question you have on LinkedIn Get the book on Ama

![[CVPR 2022]](https://github.com/VITA-Group/Diverse-ViT/raw/main/Figs/Diversity_overview.png)

[CVPR 2022] "The Principle of Diversity: Training Stronger Vision Transformers Calls for Reducing All Levels of Redundancy" by Tianlong Chen, Zhenyu Zhang, Yu Cheng, Ahmed Awadallah, Zhangyang Wang

The Principle of Diversity: Training Stronger Vision Transformers Calls for Reducing All Levels of Redundancy Codes for this paper: [CVPR 2022] The Pr

This repository contains the code and models necessary to replicate the results of paper: How to Robustify Black-Box ML Models? A Zeroth-Order Optimization Perspective

Black-Box-Defense This repository contains the code and models necessary to replicate the results of our recent paper: How to Robustify Black-Box ML M

This repository contains the code and models necessary to replicate the results of paper: How to Robustify Black-Box ML Models? A Zeroth-Order Optimization Perspective

Black-Box-Defense This repository contains the code and models necessary to replicate the results of our recent paper: How to Robustify Black-Box ML M

Implementation of the state-of-the-art vision transformers with tensorflow

ViT Tensorflow This repository contains the tensorflow implementation of the state-of-the-art vision transformers (a category of computer vision model

PyTorch Implementation of DiffGAN-TTS: High-Fidelity and Efficient Text-to-Speech with Denoising Diffusion GANs

DiffGAN-TTS - PyTorch Implementation PyTorch implementation of DiffGAN-TTS: High

Neural-Machine-Translation - Implementation of revolutionary machine translation models

Neural Machine Translation Framework: PyTorch Repository contaning my implementa

Covid19-Forecasting - An interactive website that tracks, models and predicts COVID-19 Cases

Covid-Tracker This is an interactive website that tracks, models and predicts CO

Transformers-regression - Regression Bugs Are In Your Model! Measuring, Reducing and Analyzing Regressions In NLP Model Updates

Regression Free Model Update Code for the paper: Regression Bugs Are In Your Mod

Python code for ICLR 2022 spotlight paper EViT: Expediting Vision Transformers via Token Reorganizations

Expediting Vision Transformers via Token Reorganizations This repository contain

Federated Learning - Including common test models for federated learning, like CNN, Resnet18 and lstm, controlled by different parser

Federated_Learning 💻 This projest include common test models for federated lear

Using PyTorch Perform intent classification using three different models to see which one is better for this task

Using PyTorch Perform intent classification using three different models to see which one is better for this task

icepickle is to allow a safe way to serialize and deserialize linear scikit-learn models

icepickle It's a cooler way to store simple linear models. The goal of icepickle is to allow a safe way to serialize and deserialize linear scikit-lea

PyTorch implementation for the paper Pseudo Numerical Methods for Diffusion Models on Manifolds

Pseudo Numerical Methods for Diffusion Models on Manifolds (PNDM) This repo is the official PyTorch implementation for the paper Pseudo Numerical Meth

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers Authors: Jaemin Cho, Abhay Zala, and Mohit Bansal (

Code To Tune or Not To Tune? Zero-shot Models for Legal Case Entailment.

COLIEE 2021 - task 2: Legal Case Entailment This repository contains the code to reproduce NeuralMind's submissions to COLIEE 2021 presented in the pa

Source code of our work: "Benchmarking Deep Models for Salient Object Detection"

SALOD Source code of our work: "Benchmarking Deep Models for Salient Object Detection". In this works, we propose a new benchmark for SALient Object D

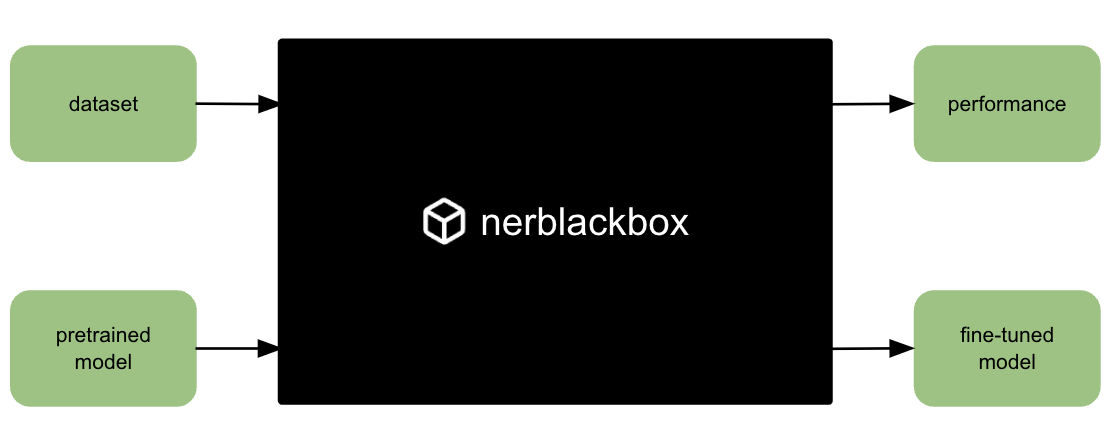

A python package to fine-tune transformer-based models for named entity recognition (NER).

nerblackbox A python package to fine-tune transformer-based language models for named entity recognition (NER). Resources Source Code: https://github.

TorchMD-Net provides state-of-the-art graph neural networks and equivariant transformer neural networks potentials for learning molecular potentials

TorchMD-net TorchMD-Net provides state-of-the-art graph neural networks and equivariant transformer neural networks potentials for learning molecular

This is the replication package for paper submission: Towards Training Reproducible Deep Learning Models.

This is the replication package for paper submission: Towards Training Reproducible Deep Learning Models.

Convert BART models to ONNX with quantization. 3X reduction in size, and upto 3X boost in inference speed

fast-Bart Reduction of BART model size by 3X, and boost in inference speed up to 3X BART implementation of the fastT5 library (https://github.com/Ki6a

KoRean based ELECTRA pre-trained models (KR-ELECTRA) for Tensorflow and PyTorch

KoRean based ELECTRA (KR-ELECTRA) This is a release of a Korean-specific ELECTRA model with comparable or better performances developed by the Computa

BASH - Biomechanical Animated Skinned Human

We developed a method animating a statistical 3D human model for biomechanical analysis to increase accessibility for non-experts, like patients, athletes, or designers.

JFB: Jacobian-Free Backpropagation for Implicit Models

JFB: Jacobian-Free Backpropagation for Implicit Models

CATE: Computation-aware Neural Architecture Encoding with Transformers

CATE: Computation-aware Neural Architecture Encoding with Transformers Code for paper: CATE: Computation-aware Neural Architecture Encoding with Trans

Datasets and pretrained Models for StyleGAN3 ...

Datasets and pretrained Models for StyleGAN3 ... Dear arfiticial friend, this is a collection of artistic datasets and models that we have put togethe

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers Authors: Jaemin Cho, Abhay Zala, and Mohit Bansal (

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers This repo contains our codes for the paper "No Parameters Left Behind: Sensitivity Gu

SGPT: Multi-billion parameter models for semantic search

SGPT: Multi-billion parameter models for semantic search This repository contains code, results and pre-trained models for the paper SGPT: Multi-billi

Blender 3.1 Alpha (and later) PLY importer that correctly loads point clouds (and all PLY models as point clouds)

import-ply-as-verts Blender 3.1 Alpha (and later) PLY importer that correctly loads point clouds (and all PLY models as point clouds) Latest News Mand

Rank-One Model Editing for Locating and Editing Factual Knowledge in GPT

Rank-One Model Editing (ROME) This repository provides an implementation of Rank-One Model Editing (ROME) on auto-regressive transformers (GPU-only).

Pretrained Japanese BERT models

Pretrained Japanese BERT models This is a repository of pretrained Japanese BERT models. The models are available in Transformers by Hugging Face. Mod

The source code for Generating Training Data with Language Models: Towards Zero-Shot Language Understanding.

SuperGen The source code for Generating Training Data with Language Models: Towards Zero-Shot Language Understanding. Requirements Before running, you

Explore extreme compression for pre-trained language models

Code for paper "Exploring extreme parameter compression for pre-trained language models ICLR2022"

The pyrelational package offers a flexible workflow to enable active learning with as little change to the models and datasets as possible

pyrelational is a python active learning library developed by Relation Therapeutics for rapidly implementing active learning pipelines from data management, model development (and Bayesian approximation), to creating novel active learning strategies.

A demo project to elaborate how Machine Learn Models are deployed on production using Flask API

This is a salary prediction website developed with the help of machine learning, this makes prediction of salary on basis of few parameters like interview score, experience test score.

Posterior temperature optimized Bayesian models for inverse problems in medical imaging

Posterior temperature optimized Bayesian models for inverse problems in medical imaging Max-Heinrich Laves*, Malte Tölle*, Alexander Schlaefer, Sandy

Code Repository for "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound Classification and Detection"

Hierarchical Token Semantic Audio Transformer Introduction The Code Repository for "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound

Source code for "Understanding Knowledge Integration in Language Models with Graph Convolutions"

Graph Convolution Simulator (GCS) Source code for "Understanding Knowledge Integration in Language Models with Graph Convolutions" Requirements: PyTor

Image-based Navigation in Real-World Environments via Multiple Mid-level Representations: Fusion Models Benchmark and Efficient Evaluation

Image-based Navigation in Real-World Environments via Multiple Mid-level Representations: Fusion Models Benchmark and Efficient Evaluation This reposi

Event queue (Equeue) dialect is an MLIR Dialect that models concurrent devices in terms of control and structure.

Event Queue Dialect Event queue (Equeue) dialect is an MLIR Dialect that models concurrent devices in terms of control and structure. Motivation The m

Transformers provides thousands of pretrained models to perform tasks on different modalities such as text, vision, and audio.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Official Implementation of "Transformers Can Do Bayesian Inference"

Official Code for the Paper "Transformers Can Do Bayesian Inference" We train Transformers to do Bayesian Prediction on novel datasets for a large var

Source code for EquiDock: Independent SE(3)-Equivariant Models for End-to-End Rigid Protein Docking (ICLR 2022)

Source code for EquiDock: Independent SE(3)-Equivariant Models for End-to-End Rigid Protein Docking (ICLR 2022) Please cite "Independent SE(3)-Equivar

This repository provides an efficient PyTorch-based library for training deep models.

An Efficient Library for Training Deep Models This repository provides an efficient PyTorch-based library for training deep models. Installation Make

Trafffic prediction analysis using hybrid models - Machine Learning

Hybrid Machine learning Model Clone the Repository Create a new Directory as assests and download the model from the below link Model Link To Start th

Tensorflow2 Keras-based Semantic Segmentation Models Implementation

Tensorflow2 Keras-based Semantic Segmentation Models Implementation

NeuralForecast is a Python library for time series forecasting with deep learning models

NeuralForecast is a Python library for time series forecasting with deep learning models. It includes benchmark datasets, data-loading utilities, evaluation functions, statistical tests, univariate model benchmarks and SOTA models implemented in PyTorch and PyTorchLightning.

Natural language processing summarizer using 3 state of the art Transformer models: BERT, GPT2, and T5

NLP-Summarizer Natural language processing summarizer using 3 state of the art Transformer models: BERT, GPT2, and T5 This project aimed to provide in

A U-Net combined with a variational auto-encoder that is able to learn conditional distributions over semantic segmentations.

Probabilistic U-Net + **Update** + An improved Model (the Hierarchical Probabilistic U-Net) + LIDC crops is now available. See below. Re-implementatio

/samples.jpg)

A collection of pre-trained StyleGAN2 models trained on different datasets at different resolution.

Awesome Pretrained StyleGAN2 A collection of pre-trained StyleGAN2 models trained on different datasets at different resolution. Note the readme is a

A curated list of Generative Deep Art projects, tools, artworks, and models

Generative Deep Art A curated list of Generative Deep Art projects, tools, artworks, and models Inbox Get started with making AI art in 2022 – deeplea

YOLOv7 - Framework Beyond Detection

🔥🔥🔥🔥 YOLO with Transformers and Instance Segmentation, with TensorRT acceleration! 🔥🔥🔥

Semantic Segmentation Suite in TensorFlow

Semantic Segmentation Suite in TensorFlow. Implement, train, and test new Semantic Segmentation models easily!

This project aims at providing a concise, easy-to-use, modifiable reference implementation for semantic segmentation models using PyTorch.

Semantic Segmentation on PyTorch (include FCN, PSPNet, Deeplabv3, Deeplabv3+, DANet, DenseASPP, BiSeNet, EncNet, DUNet, ICNet, ENet, OCNet, CCNet, PSANet, CGNet, ESPNet, LEDNet, DFANet)

Human segmentation models, training/inference code, and trained weights, implemented in PyTorch

Human-Segmentation-PyTorch Human segmentation models, training/inference code, and trained weights, implemented in PyTorch. Supported networks UNet: b

Machine Learning Models were applied to predict the mass of the brain based on gender, age ranges, and head size.

Brain Weight in Humans Variations of head sizes and brain weights in humans Kaggle dataset obtained from this link by Anubhab Swain. Image obtained fr

The official code repo of "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound Classification and Detection"

Hierarchical Token Semantic Audio Transformer Introduction The Code Repository for "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound

This is an early in-development version of training CLIP models with hivemind.

A transformer that does not hog your GPU memory This is an early in-development codebase: if you want a stable and documented hivemind codebase, look

Constrained Language Models Yield Few-Shot Semantic Parsers

Constrained Language Models Yield Few-Shot Semantic Parsers This repository contains tools and instructions for reproducing the experiments in the pap

This GitHub repo consists of Code and Some results of project- Diabetes Treatment using Gold nanoparticles. These Consist of ML Models used for prediction Diabetes and further the basic theory and working of Gold nanoparticles.

GoldNanoparticles This GitHub repo consists of Code and Some results of project- Diabetes Treatment using Gold nanoparticles. These Consist of ML Mode

spaCy-wrap: For Wrapping fine-tuned transformers in spaCy pipelines

spaCy-wrap: For Wrapping fine-tuned transformers in spaCy pipelines spaCy-wrap is minimal library intended for wrapping fine-tuned transformers from t

Codes and models for the paper "Learning Unknown from Correlations: Graph Neural Network for Inter-novel-protein Interaction Prediction".

GNN_PPI Codes and models for the paper "Learning Unknown from Correlations: Graph Neural Network for Inter-novel-protein Interaction Prediction". Lear

In this workshop we will be exploring NLP state of the art transformers, with SOTA models like T5 and BERT, then build a model using HugginFace transformers framework.

Transformers are all you need In this workshop we will be exploring NLP state of the art transformers, with SOTA models like T5 and BERT, then build a

Optical character recognition for Japanese text, with the main focus being Japanese manga

Manga OCR Optical character recognition for Japanese text, with the main focus being Japanese manga. It uses a custom end-to-end model built with Tran

DocEnTr: An end-to-end document image enhancement transformer

DocEnTR Description Pytorch implementation of the paper DocEnTr: An End-to-End Document Image Enhancement Transformer. This model is implemented on to

Deep ViT Features as Dense Visual Descriptors

dino-vit-features [paper] [project page] Official implementation of the paper "Deep ViT Features as Dense Visual Descriptors". We demonstrate the effe

Training DiffWave using variational method from Variational Diffusion Models.

Variational DiffWave Training DiffWave using variational method from Variational Diffusion Models. Quick Start python train_distributed.py discrete_10

Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners

DART Implementation for ICLR2022 paper Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners. Environment [email protected] Use pi

BaseCls BaseCls 是一个基于 MegEngine 的预训练模型库,帮助大家挑选或训练出更适合自己科研或者业务的模型结构

BaseCls BaseCls 是一个基于 MegEngine 的预训练模型库,帮助大家挑选或训练出更适合自己科研或者业务的模型结构。 文档地址:https://basecls.readthedocs.io 安装 安装环境 BaseCls 需要 Python = 3.6。 BaseCls 依赖 M

Goal of the project : Detecting Temporal Boundaries in Sign Language videos

MVA RecVis course final project : Goal of the project : Detecting Temporal Boundaries in Sign Language videos. Sign language automatic indexing is an

NaturalCC is a sequence modeling toolkit that allows researchers and developers to train custom models

NaturalCC NaturalCC is a sequence modeling toolkit that allows researchers and developers to train custom models for many software engineering tasks,

smc.covid is an R package related to the paper A sequential Monte Carlo approach to estimate a time varying reproduction number in infectious disease models: the COVID-19 case by Storvik et al

smc.covid smc.covid is an R package related to the paper A sequential Monte Carlo approach to estimate a time varying reproduction number in infectiou

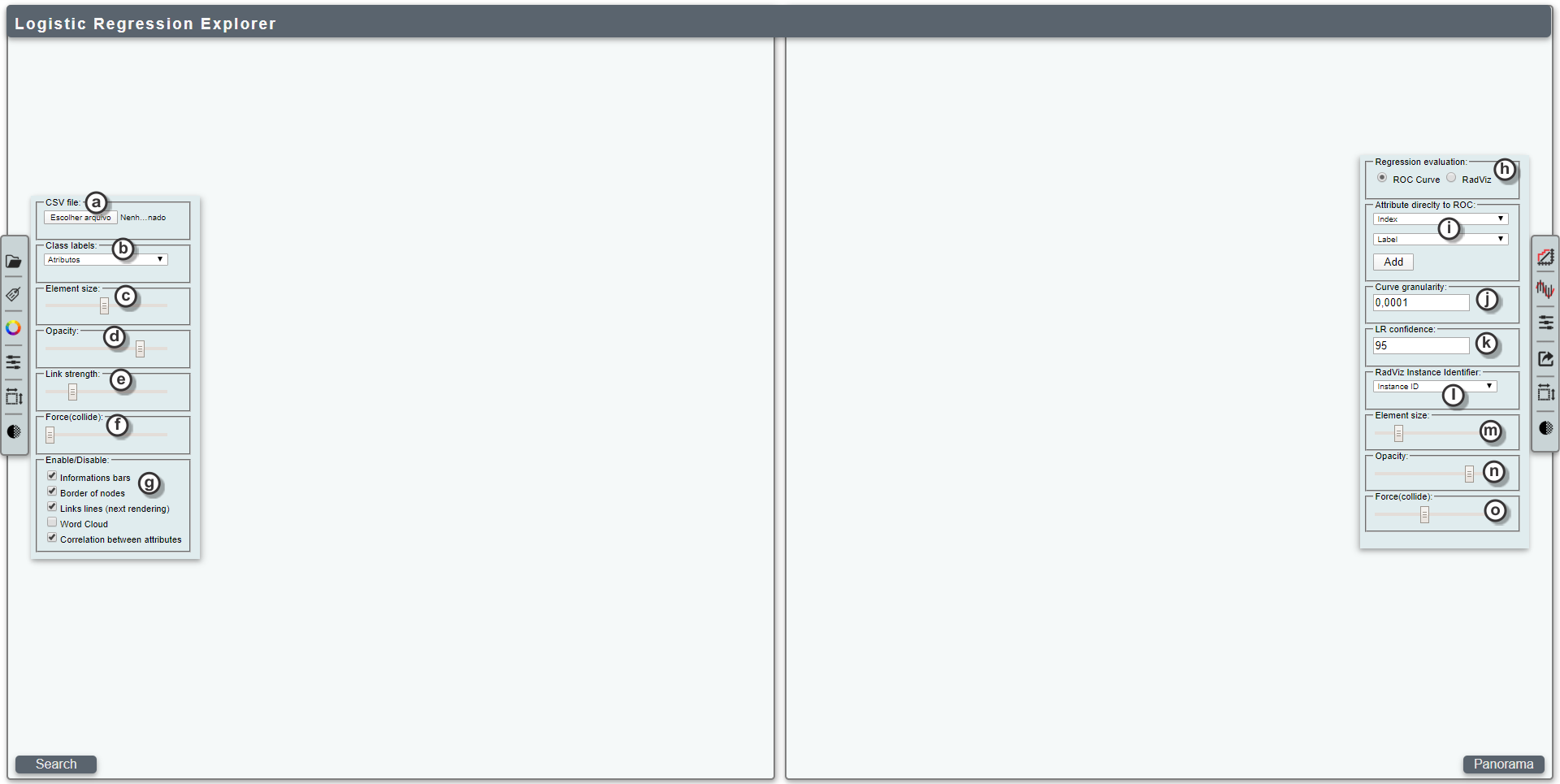

An Approach to Explore Logistic Regression Models

User-centered Regression An Approach to Explore Logistic Regression Models This tool applies the potential of Attribute-RadViz in identifying correlat

A PyTorch implementation for our paper "Dual Contrastive Learning: Text Classification via Label-Aware Data Augmentation".

Dual-Contrastive-Learning A PyTorch implementation for our paper "Dual Contrastive Learning: Text Classification via Label-Aware Data Augmentation". Y

Leaf: Multiple-Choice Question Generation

Leaf: Multiple-Choice Question Generation Easy to use and understand multiple-choice question generation algorithm using T5 Transformers. The applicat

Improved Fitness Optimization Landscapes for Sequence Design

ReLSO Improved Fitness Optimization Landscapes for Sequence Design Description Citation How to run Training models Original data source Description In

Official repository for the paper "On Evaluation Metrics for Graph Generative Models"

On Evaluation Metrics for Graph Generative Models Authors: Rylee Thompson, Boris Knyazev, Elahe Ghalebi, Jungtaek Kim, Graham Taylor This is the offic

This is the source code for the experiments related to the paper Unsupervised Audio Source Separation Using Differentiable Parametric Source Models

Unsupervised Audio Source Separation Using Differentiable Parametric Source Models This is the source code for the experiments related to the paper Un

Pytorch implementation of the paper DocEnTr: An End-to-End Document Image Enhancement Transformer.

DocEnTR Description Pytorch implementation of the paper DocEnTr: An End-to-End Document Image Enhancement Transformer. This model is implemented on to

Vision transformers (ViTs) have found only limited practical use in processing images

CXV Convolutional Xformers for Vision Vision transformers (ViTs) have found only limited practical use in processing images, in spite of their state-o

PyTorch implementation of our paper How robust are discriminatively trained zero-shot learning models?

How robust are discriminatively trained zero-shot learning models? This repository contains the PyTorch implementation of our paper How robust are dis

Check out the StyleGAN repo and place it in the same directory hierarchy as the present repo

Variational Model Inversion Attacks Kuan-Chieh Wang, Yan Fu, Ke Li, Ashish Khisti, Richard Zemel, Alireza Makhzani Most commands are in run_scripts. W

Main Results on ImageNet with Pretrained Models

This repository contains Pytorch evaluation code, training code and pretrained models for the following projects: SPACH (A Battle of Network Structure

Title: Graduate-Admissions-Predictor

The purpose of this project is create a predictive model capable of identifying the probability of a person securing an admit based on their personal profile parameters. Simplified visualisations have been created for understanding the data. 80% accuracy was achieved on the test set.

Predict the spans of toxic posts that were responsible for the toxic label of the posts

toxic-spans-detection An attempt at the SemEval 2021 Task 5: Toxic Spans Detection. The Toxic Spans Detection task of SemEval2021 required participant

Easy to use and customizable SOTA Semantic Segmentation models with abundant datasets in PyTorch

Semantic Segmentation Easy to use and customizable SOTA Semantic Segmentation models with abundant datasets in PyTorch Features Applicable to followin

Twitter bot that uses NLP models to summarize news articles referenced in a user's twitter timeline

Twitter-News-Summarizer Twitter bot that uses NLP models to summarize news articles referenced in a user's twitter timeline 1.) Extracts all tweets fr

OptiPLANT is a cloud-based based system that empowers professional and non-professional data scientists to build high-quality predictive models

OptiPLANT OptiPLANT is a cloud-based based system that empowers professional and non-professional data scientists to build high-quality predictive mod

Sinkformers: Transformers with Doubly Stochastic Attention

Code for the paper : "Sinkformers: Transformers with Doubly Stochastic Attention" Paper You will find our paper here. Compat This package has been dev

This repo provides the source code & data of our paper "GreaseLM: Graph REASoning Enhanced Language Models"

GreaseLM: Graph REASoning Enhanced Language Models This repo provides the source code & data of our paper "GreaseLM: Graph REASoning Enhanced Language

This repository contains pre-trained models and some evaluation code for our paper Towards Unsupervised Dense Information Retrieval with Contrastive Learning

Contriever: Towards Unsupervised Dense Information Retrieval with Contrastive Learning This repository contains pre-trained models and some evaluation

Annotating the Tweebank Corpus on Named Entity Recognition and Building NLP Models for Social Media Analysis

TweebankNLP This repo contains the new Tweebank-NER dataset and off-the-shelf Twitter-Stanza pipeline for state-of-the-art Tweet NLP, as described in

Revisiting Weakly Supervised Pre-Training of Visual Perception Models

SWAG: Supervised Weakly from hashtAGs This repository contains SWAG models from the paper Revisiting Weakly Supervised Pre-Training of Visual Percepti

On Out-of-distribution Detection with Energy-based Models

On Out-of-distribution Detection with Energy-based Models This repository contains the code for the experiments conducted in the paper On Out-of-distr

This repository contains code to train and render Mixture of Volumetric Primitives (MVP) models

Mixture of Volumetric Primitives -- Training and Evaluation This repository contains code to train and render Mixture of Volumetric Primitives (MVP) m

Evidential Softmax for Sparse Multimodal Distributions in Deep Generative Models

Evidential Softmax for Sparse Multimodal Distributions in Deep Generative Models Abstract Many applications of generative models rely on the marginali

RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2

RoNER RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2. It is meant to be an easy to use, hi

Boltzmann visualization - Visualize the Boltzmann distribution for simple quantum models of molecular motion

Boltzmann visualization - Visualize the Boltzmann distribution for simple quantum models of molecular motion