43 Repositories

Python xgboost Libraries

Machine learning beginner to Kaggle competitor in 30 days. Non-coders welcome. The program starts Monday, August 2, and lasts four weeks. It's designed for people who want to learn machine learning.

30-Days-of-ML-Kaggle 🔥 About the Hands On Program 💻 Machine learning beginner → Kaggle competitor in 30 days. Non-coders welcome The program starts

Attention-based CNN-LSTM and XGBoost hybrid model for stock prediction

Attention-based CNN-LSTM and XGBoost hybrid model for stock prediction Requirements The code has been tested running under Python 3.7.4, with the foll

To build a regression model to predict the concrete compressive strength based on the different features in the training data.

Cement-Strength-Prediction Problem Statement To build a regression model to predict the concrete compressive strength based on the different features

This machine learning model was developed for House Prices

This machine learning model was developed for House Prices - Advanced Regression Techniques competition in Kaggle by using several machine learning models such as Random Forest, XGBoost and LightGBM.

Built various Machine Learning algorithms (Logistic Regression, Random Forest, KNN, Gradient Boosting and XGBoost. etc)

Built various Machine Learning algorithms (Logistic Regression, Random Forest, KNN, Gradient Boosting and XGBoost. etc). Structured a custom ensemble model and a neural network. Found a outperformed model for heart failure prediction accuracy of 88 percent.

hgboost - Hyperoptimized Gradient Boosting

hgboost is short for Hyperoptimized Gradient Boosting and is a python package for hyperparameter optimization for xgboost, catboost and lightboost using cross-validation, and evaluating the results on an independent validation set. hgboost can be applied for classification and regression tasks.

Hyperparameters tuning and features selection are two common steps in every machine learning pipeline.

shap-hypetune A python package for simultaneous Hyperparameters Tuning and Features Selection for Gradient Boosting Models. Overview Hyperparameters t

This is an open solution to the Home Credit Default Risk challenge 🏡

Home Credit Default Risk: Open Solution This is an open solution to the Home Credit Default Risk challenge 🏡 . More competitions 🎇 Check collection

Mortality risk prediction for COVID-19 patients using XGBoost models

Mortality risk prediction for COVID-19 patients using XGBoost models Using demographic and lab test data received from the HM Hospitales in Spain, I b

Used Logistic Regression, Random Forest, and XGBoost to predict the outcome of Search & Destroy games from the Call of Duty World League for the 2018 and 2019 seasons.

Call of Duty World League: Search & Destroy Outcome Predictions Growing up as an avid Call of Duty player, I was always curious about what factors led

Developing your First ML Workflow of the AWS Machine Learning Engineer Nanodegree Program

Exercises and project documentation for the 3. Developing your First ML Workflow of the AWS Machine Learning Engineer Nanodegree Program

Video lie detector using xgboost - A video lie detector using OpenFace and xgboost

video_lie_detector_using_xgboost a video lie detector using OpenFace and xgboost

A full pipeline AutoML tool for tabular data

HyperGBM Doc | 中文 We Are Hiring! Dear folks,we are offering challenging opportunities located in Beijing for both professionals and students who are k

Extreme Dynamic Classifier Chains - XGBoost for Multi-label Classification

Extreme Dynamic Classifier Chains Classifier chains is a key technique in multi-label classification, sinceit allows to consider label dependencies ef

Scalable machine learning based time series forecasting

mlforecast Scalable machine learning based time series forecasting. Install PyPI pip install mlforecast Optional dependencies If you want more functio

Mars is a tensor-based unified framework for large-scale data computation which scales numpy, pandas, scikit-learn and Python functions.

Mars is a tensor-based unified framework for large-scale data computation which scales numpy, pandas, scikit-learn and many other libraries. Documenta

An optimization and data collection toolbox for convenient and fast prototyping of computationally expensive models.

An optimization and data collection toolbox for convenient and fast prototyping of computationally expensive models. Hyperactive: is very easy to lear

pandas, scikit-learn, xgboost and seaborn integration

pandas, scikit-learn and xgboost integration.

Provide an input CSV and a target field to predict, generate a model + code to run it.

automl-gs Give an input CSV file and a target field you want to predict to automl-gs, and get a trained high-performing machine learning or deep learn

Transform ML models into a native code with zero dependencies

m2cgen (Model 2 Code Generator) - is a lightweight library which provides an easy way to transpile trained statistical models into a native code

XGBoost + Optuna

AutoXGB XGBoost + Optuna: no brainer auto train xgboost directly from CSV files auto tune xgboost using optuna auto serve best xgboot model using fast

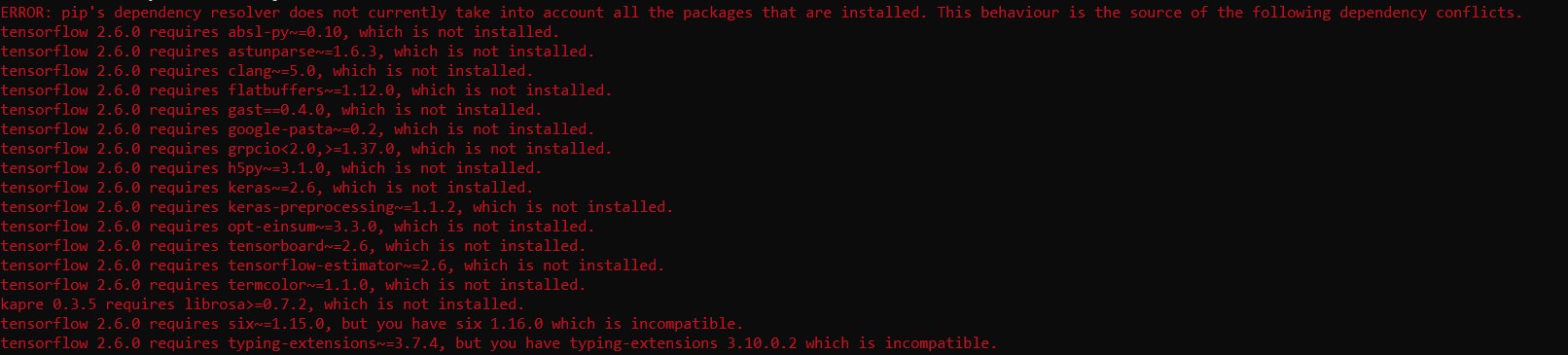

Data Science Environment Setup in single line

datascienv is package that helps your to setup your environment in single line of code with all dependency and it is also include pyforest that provide single line of import all required ml libraries

AutoTabular automates machine learning tasks enabling you to easily achieve strong predictive performance in your applications.

AutoTabular automates machine learning tasks enabling you to easily achieve strong predictive performance in your applications. With just a few lines of code, you can train and deploy high-accuracy machine learning and deep learning models tabular data.

XGBoost-Ray is a distributed backend for XGBoost, built on top of distributed computing framework Ray.

XGBoost-Ray is a distributed backend for XGBoost, built on top of distributed computing framework Ray.

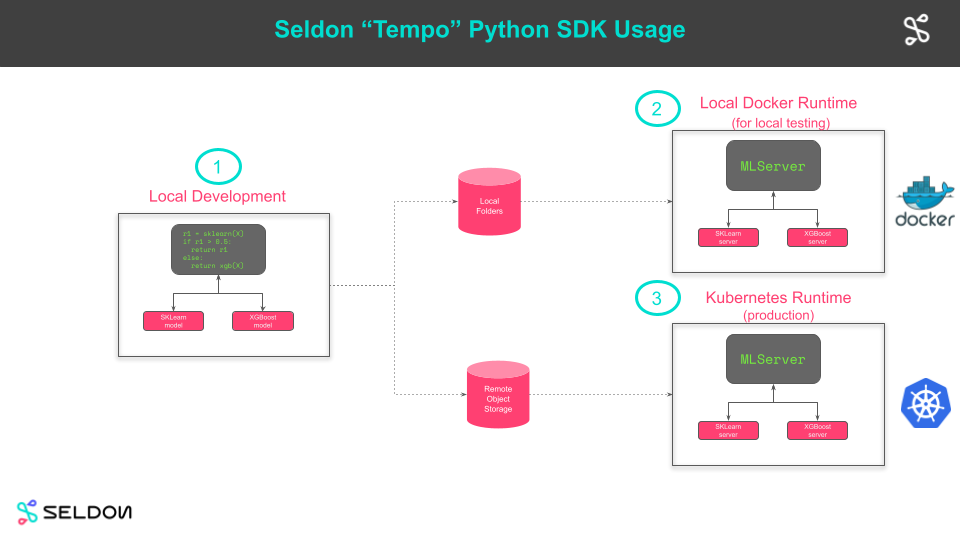

⏳ Tempo: The MLOps Software Development Kit

Tempo provides a unified interface to multiple MLOps projects that enable data scientists to deploy and productionise machine learning systems.

Automated Machine Learning Pipeline with Feature Engineering and Hyper-Parameters Tuning

The mljar-supervised is an Automated Machine Learning Python package that works with tabular data. I

Automatically build ARIMA, SARIMAX, VAR, FB Prophet and XGBoost Models on Time Series data sets with a Single Line of Code. Now updated with Dask to handle millions of rows.

Auto_TS: Auto_TimeSeries Automatically build multiple Time Series models using a Single Line of Code. Now updated with Dask. Auto_timeseries is a comp

A fast xgboost feature selection algorithm

BoostARoota A Fast XGBoost Feature Selection Algorithm (plus other sklearn tree-based classifiers) Why Create Another Algorithm? Automated processes l

A library for debugging/inspecting machine learning classifiers and explaining their predictions

ELI5 ELI5 is a Python package which helps to debug machine learning classifiers and explain their predictions. It provides support for the following m

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

Stacked Generalization (Ensemble Learning)

Stacking (stacked generalization) Overview ikki407/stacking - Simple and useful stacking library, written in Python. User can use models of scikit-lea

MLBox is a powerful Automated Machine Learning python library.

MLBox is a powerful Automated Machine Learning python library. It provides the following features: Fast reading and distributed data preprocessing/cle

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista

Improving XGBoost survival analysis with embeddings and debiased estimators

xgbse: XGBoost Survival Embeddings "There are two cultures in the use of statistical modeling to reach conclusions from data

Automatically Visualize any dataset, any size with a single line of code. Created by Ram Seshadri. Collaborators Welcome. Permission Granted upon Request.

AutoViz Automatically Visualize any dataset, any size with a single line of code. AutoViz performs automatic visualization of any dataset with one lin

Automatically Visualize any dataset, any size with a single line of code. Created by Ram Seshadri. Collaborators Welcome. Permission Granted upon Request.

AutoViz Automatically Visualize any dataset, any size with a single line of code. AutoViz performs automatic visualization of any dataset with one lin

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

Automates Machine Learning Pipeline with Feature Engineering and Hyper-Parameters Tuning :rocket:

MLJAR Automated Machine Learning Documentation: https://supervised.mljar.com/ Source Code: https://github.com/mljar/mljar-supervised Table of Contents

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista

[UNMAINTAINED] Automated machine learning for analytics & production

auto_ml Automated machine learning for production and analytics Installation pip install auto_ml Getting started from auto_ml import Predictor from au

Automatically Build Multiple ML Models with a Single Line of Code. Created by Ram Seshadri. Collaborators Welcome. Permission Granted upon Request.

Auto-ViML Automatically Build Variant Interpretable ML models fast! Auto_ViML is pronounced "auto vimal" (autovimal logo created by Sanket Ghanmare) N

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

H2O is an Open Source, Distributed, Fast & Scalable Machine Learning Platform: Deep Learning, Gradient Boosting (GBM) & XGBoost, Random Forest, Generalized Linear Modeling (GLM with Elastic Net), K-Means, PCA, Generalized Additive Models (GAM), RuleFit, Support Vector Machine (SVM), Stacked Ensembles, Automatic Machine Learning (AutoML), etc.

H2O H2O is an in-memory platform for distributed, scalable machine learning. H2O uses familiar interfaces like R, Python, Scala, Java, JSON and the Fl