ParNet

Custom Implementation of Non-deep Networks

arXiv:2110.07641

Ankit Goyal, Alexey Bochkovskiy, Jia Deng, Vladlen Koltun

Official Repository https://github.com/imankgoyal/NonDeepNetworks

Custom Implementation of Non-deep Networks

arXiv:2110.07641

Ankit Goyal, Alexey Bochkovskiy, Jia Deng, Vladlen Koltun

Official Repository https://github.com/imankgoyal/NonDeepNetworks

Xilinx_Vitis_AI This repo will help you to Deploy your Deep Learning Model on Ultra96v2 Board. Prerequisites Vitis Core Development Kit 2019.2 This co

Non-AR Spatial-Temporal Transformer Introduction Implementation of the paper NAST: Non-Autoregressive Spatial-Temporal Transformer for Time Series For

MaskCycleGAN-VC Unofficial PyTorch implementation of Kaneko et al.'s MaskCycleGAN-VC (2021) for non-parallel voice conversion. MaskCycleGAN-VC is the

language-models-are-knowledge-graphs-pytorch Language models are open knowledge graphs ( work in progress ) A non official reimplementation of Languag

Parallel Tacotron2 Pytorch Implementation of Google's Parallel Tacotron 2: A Non-Autoregressive Neural TTS Model with Differentiable Duration Modeling

Face Identity Disentanglement via Latent Space Mapping - Implement in pytorch with StyleGAN 2 Description Pytorch implementation of the paper Face Ide

Graph-to-Graph Transformers Self-attention models, such as Transformer, have been hugely successful in a wide range of natural language processing (NL

VAENAR-TTS This repo contains code accompanying the paper "VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis". Sa

GLAT Implementation for the ACL2021 paper "Glancing Transformer for Non-Autoregressive Neural Machine Translation" Requirements Python = 3.7 Pytorch

Hello, my friend, appreciate for your great work! I have tested your code and change the ResNet code in my model by using your ParNet , but the actual time is quite slow than the paper said. My block size is [64, 128, 256, 512, 2048], and the time of "forward()" is more than 5s average while the Resnet is 0.02s in my device. I have use the time function for every line in the forward(), find that the encode stuff is the main reason. I continue write time.perf_counter() in the encode stuff, find that the "self.stream2_fusion" and "self.stream3_fusion" is the most time user. Do you know why ?

In Downsample, I think it should be torch.nn.AvgPool2d(kernel_size=(3, 3), stride=(2, 2), padding=(1, 1)), not torch.nn.AvgPool2d(kernel_size=(2,2)). otherwise x & y have wrong size, and x + y will throw exception.

Fast Symbolic Regression Symbolic Regression is a non-linear, non-parametric Machine Learning method capable of modeling complex data sets. fastsr aim

Custom Keras ML block example for Edge Impulse This repository is an example on

NHDRRNet-PyTorch This is the PyTorch implementation of Deep HDR Imaging via A Non-Local Network (TIP 2020). 0. Differences between Original Paper and

Non-autoregressive Deep Learning-Based TTS Template This is a template for the Non-autoregressive TTS model. It contains Data Preprocessing Pipeline D

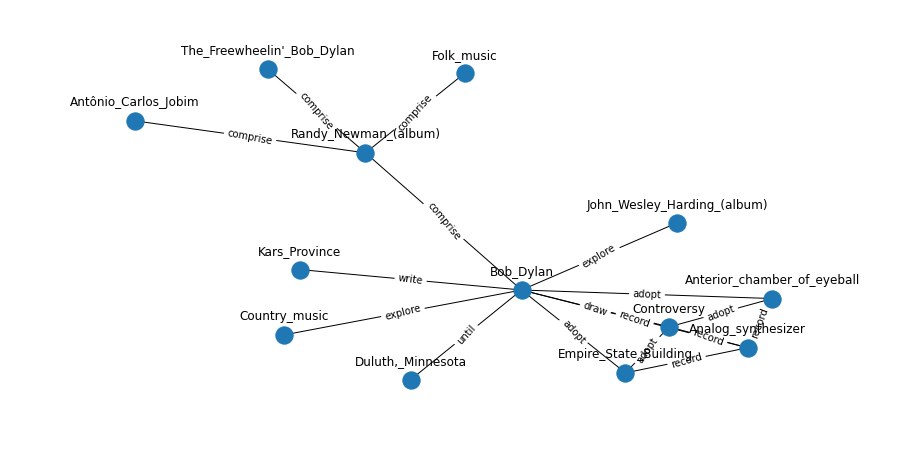

English | 简体中文 Why Non-Euclidean Geometry Considering these simple graph structures shown below. Nodes with same color has 2-hop distance whereas 1-ho

This is the repository for paper NEEDLE: Towards Non-invertible Backdoor Attack to Deep Learning Models.

ESGD-M - A stochastic non-convex second order optimizer, suitable for training deep learning models, for PyTorch

This repository is the official PyTorch implementation of Cascaded Deep Video Deblurring Using Temporal Sharpness Prior and Non-local Spatial-Temporal Similarity

A framework that constructs deep neural networks, autoencoders, logistic regressors, and linear networks without the use of any outside machine learning libraries - all from scratch.

SpiderBot_DeepRL Title: Implementation of Single and Multi-Agent Deep Reinforcement Learning Algorithms for a Walking Spider Robot Authors(s): Arijit