691 Repositories

Python NLP-topic-mdel-LDA Libraries

Recurrent Variational Autoencoder that generates sequential data implemented with pytorch

Pytorch Recurrent Variational Autoencoder Model: This is the implementation of Samuel Bowman's Generating Sentences from a Continuous Space with Kim's

Hierarchical unsupervised and semi-supervised topic models for sparse count data with CorEx

Anchored CorEx: Hierarchical Topic Modeling with Minimal Domain Knowledge Correlation Explanation (CorEx) is a topic model that yields rich topics tha

A Domain Specific Language (DSL) for building language patterns. These can be later compiled into spaCy patterns, pure regex, or any other format

RITA DSL This is a language, loosely based on language Apache UIMA RUTA, focused on writing manual language rules, which compiles into either spaCy co

A model library for exploring state-of-the-art deep learning topologies and techniques for optimizing Natural Language Processing neural networks

A Deep Learning NLP/NLU library by Intel® AI Lab Overview | Models | Installation | Examples | Documentation | Tutorials | Contributing NLP Architect

A Multilingual Latent Dirichlet Allocation (LDA) Pipeline with Stop Words Removal, n-gram features, and Inverse Stemming, in Python.

Multilingual Latent Dirichlet Allocation (LDA) Pipeline This project is for text clustering using the Latent Dirichlet Allocation (LDA) algorithm. It

Accurately generate all possible forms of an English word e.g "election" -- "elect", "electoral", "electorate" etc.

Accurately generate all possible forms of an English word Word forms can accurately generate all possible forms of an English word. It can conjugate v

The tool to make NLP datasets ready to use

chazutsu photo from Kaikado, traditional Japanese chazutsu maker chazutsu is the dataset downloader for NLP. import chazutsu r = chazutsu.data

TextAttack 🐙 is a Python framework for adversarial attacks, data augmentation, and model training in NLP

TextAttack 🐙 Generating adversarial examples for NLP models [TextAttack Documentation on ReadTheDocs] About • Setup • Usage • Design About TextAttack

A2T: Towards Improving Adversarial Training of NLP Models (EMNLP 2021 Findings)

A2T: Towards Improving Adversarial Training of NLP Models This is the source code for the EMNLP 2021 (Findings) paper "Towards Improving Adversarial T

💛 Code and Dataset for our EMNLP 2021 paper: "Perspective-taking and Pragmatics for Generating Empathetic Responses Focused on Emotion Causes"

Perspective-taking and Pragmatics for Generating Empathetic Responses Focused on Emotion Causes Official PyTorch implementation and EmoCause evaluatio

Natural Language Processing with transformers

we want to create a repo to illustrate usage of transformers in chinese

IndoBERTweet is the first large-scale pretrained model for Indonesian Twitter. Published at EMNLP 2021 (main conference)

IndoBERTweet 🐦 🇮🇩 1. Paper Fajri Koto, Jey Han Lau, and Timothy Baldwin. IndoBERTweet: A Pretrained Language Model for Indonesian Twitter with Effe

Code for the paper in Findings of EMNLP 2021: "EfficientBERT: Progressively Searching Multilayer Perceptron via Warm-up Knowledge Distillation".

This repository contains the code for the paper in Findings of EMNLP 2021: "EfficientBERT: Progressively Searching Multilayer Perceptron via Warm-up Knowledge Distillation".

Augmenty is an augmentation library based on spaCy for augmenting texts.

Augmenty: The cherry on top of your NLP pipeline Augmenty is an augmentation library based on spaCy for augmenting texts. Besides a wide array of high

DataCLUE: 国内首个以数据为中心的AI测评(含模型分析报告)

DataCLUE 以数据为中心的AI测评(DataCLUE) DataCLUE: A Chinese Data-centric Language Evaluation Benchmark 内容导引 章节 描述 简介 介绍以数据为中心的AI测评(DataCLUE)的背景 任务描述 任务描述 实验结果

UniLM AI - Large-scale Self-supervised Pre-training across Tasks, Languages, and Modalities

Pre-trained (foundation) models across tasks (understanding, generation and translation), languages (100+ languages), and modalities (language, image, audio, vision + language, audio + language, etc.)

This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced in the paper titled "BanglaBERT: Combating Embedding Barrier in Multilingual Models for Low-Resource Language Understanding".

BanglaBERT This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced i

🍊 PAUSE (Positive and Annealed Unlabeled Sentence Embedding), accepted by EMNLP'2021 🌴

PAUSE: Positive and Annealed Unlabeled Sentence Embedding Sentence embedding refers to a set of effective and versatile techniques for converting raw

The code for our paper "NSP-BERT: A Prompt-based Zero-Shot Learner Through an Original Pre-training Task —— Next Sentence Prediction"

The code for our paper "NSP-BERT: A Prompt-based Zero-Shot Learner Through an Original Pre-training Task —— Next Sentence Prediction"

txtai executes machine-learning workflows to transform data and build AI-powered semantic search applications.

txtai executes machine-learning workflows to transform data and build AI-powered semantic search applications.

PIZZA - a task-oriented semantic parsing dataset

The PIZZA dataset continues the exploration of task-oriented parsing by introducing a new dataset for parsing pizza and drink orders, whose semantics cannot be captured by flat slots and intents.

Code and checkpoints for training the transformer-based Table QA models introduced in the paper TAPAS: Weakly Supervised Table Parsing via Pre-training.

End-to-end neural table-text understanding models.

✨Rubrix is a production-ready Python framework for exploring, annotating, and managing data in NLP projects.

✨A Python framework to explore, label, and monitor data for NLP projects

Data augmentation for NLP, accepted at EMNLP 2021 Findings

AEDA: An Easier Data Augmentation Technique for Text Classification This is the code for the EMNLP 2021 paper AEDA: An Easier Data Augmentation Techni

A Python multilingual toolkit for Sentiment Analysis and Social NLP tasks

pysentimiento: A Python toolkit for Sentiment Analysis and Social NLP tasks A Transformer-based library for SocialNLP classification tasks. Currently

Image Captioning using CNN and Transformers

Image-Captioning Keras/Tensorflow Image Captioning application using CNN and Transformer as encoder/decoder. In particulary, the architecture consists

pysentimiento: A Python toolkit for Sentiment Analysis and Social NLP tasks

A Python multilingual toolkit for Sentiment Analysis and Social NLP tasks

A data annotation pipeline to generate high-quality, large-scale speech datasets with machine pre-labeling and fully manual auditing.

About This repository provides data and code for the paper: Scalable Data Annotation Pipeline for High-Quality Large Speech Datasets Development (subm

Collection of NLP model explanations and accompanying analysis tools

Thermostat is a large collection of NLP model explanations and accompanying analysis tools. Combines explainability methods from the captum library wi

Ray-based parallel data preprocessing for NLP and ML.

Wrangl Ray-based parallel data preprocessing for NLP and ML. pip install wrangl # for latest pip install git+https://github.com/vzhong/wrangl See exa

超轻量级bert的pytorch版本,大量中文注释,容易修改结构,持续更新

bert4pytorch 2021年8月27更新: 感谢大家的star,最近有小伙伴反映了一些小的bug,我也注意到了,奈何这个月工作上实在太忙,更新不及时,大约会在9月中旬集中更新一个只需要pip一下就完全可用的版本,然后会新添加一些关键注释。 再增加对抗训练的内容,更新一个完整的finetune

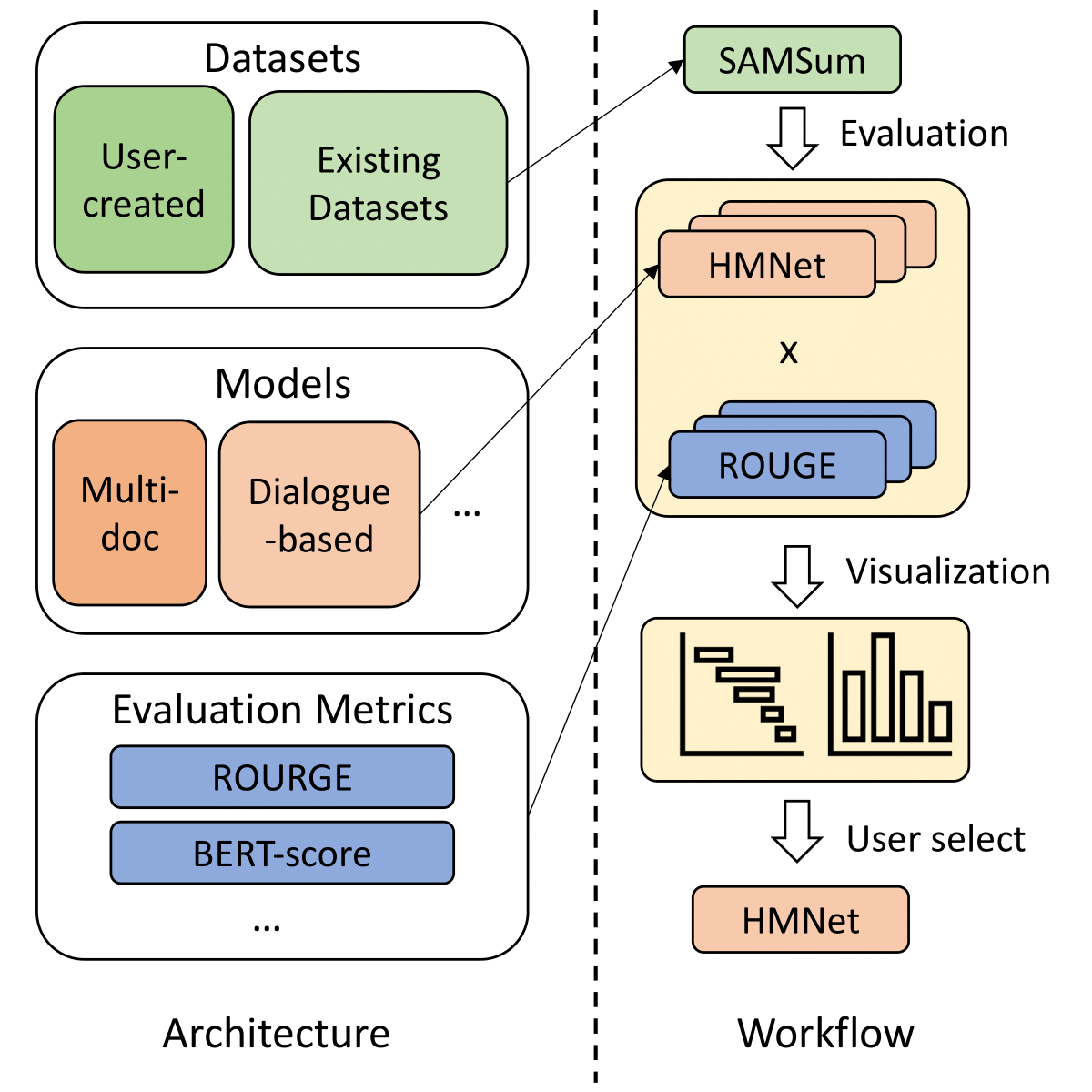

SummerTime - Text Summarization Toolkit for Non-experts

A library to help users choose appropriate summarization tools based on their specific tasks or needs. Includes models, evaluation metrics, and datasets.

Türkçe küfürlü içerikleri bulan bir yapay zeka kütüphanesi / An ML library for profanity detection in Turkish sentences

"Kötü söz sahibine aittir." -Anonim Nedir? sinkaf uygunsuz yorumların bulunmasını sağlayan bir python kütüphanesidir. Farkı nedir? Diğer algoritmalard

Ask for weather information like a human

weather-nlp About Ask for weather information like a human. Goals Understand typical questions like: Hourly temperatures in Potsdam on 2020-09-15. Rai

Unofficial implementation of Perceiver IO: A General Architecture for Structured Inputs & Outputs

Perceiver IO Unofficial implementation of Perceiver IO: A General Architecture for Structured Inputs & Outputs Usage import torch from src.perceiver.

This repo is to provide a list of literature regarding Deep Learning on Graphs for NLP

This repo is to provide a list of literature regarding Deep Learning on Graphs for NLP

TextDescriptives - A Python library for calculating a large variety of statistics from text

A Python library for calculating a large variety of statistics from text(s) using spaCy v.3 pipeline components and extensions. TextDescriptives can be used to calculate several descriptive statistics, readability metrics, and metrics related to dependency distance.

⚖️ A Statutory Article Retrieval Dataset in French.

A Statutory Article Retrieval Dataset in French This repository contains the Belgian Statutory Article Retrieval Dataset (BSARD), as well as the code

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics.

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics. Jury offers a smooth and easy-to-use interface. It uses datasets for underlying metric computation, and hence adding custom metric is easy as adopting datasets.Metric.

Pipeline for fast building text classification TF-IDF + LogReg baselines.

Text Classification Baseline Pipeline for fast building text classification TF-IDF + LogReg baselines. Usage Instead of writing custom code for specif

Deploy an inference API on AWS (EC2) using FastAPI Docker and Github Actions

Deploy an inference API on AWS (EC2) using FastAPI Docker and Github Actions To learn more about this project: medium blog post The goal of this proje

ETM - R package for Topic Modelling in Embedding Spaces

ETM - R package for Topic Modelling in Embedding Spaces This repository contains an R package called topicmodels.etm which is an implementation of ETM

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

Learn meanings behind words is a key element in NLP. This project concentrates on the disambiguation of preposition senses. Therefore, we train a bert-transformer model and surpass the state-of-the-art.

New State-of-the-Art in Preposition Sense Disambiguation Supervisor: Prof. Dr. Alexander Mehler Alexander Henlein Institutions: Goethe University TTLa

A complete NLP guideline for enthusiasts

NLP-NINJA A complete guide for Natural Language Processing in Python Table of Contents S.No. Topic Level Meaning 1 Tokenization 🤍 Beginner 2 Stemming

Natural Language Processing library built with AllenNLP 🌲🌱

Custom Natural Language Processing with big and small models 🌲🌱

FairyTailor: Multimodal Generative Framework for Storytelling

FairyTailor: Multimodal Generative Framework for Storytelling

Code for ACL 21: Generating Query Focused Summaries from Query-Free Resources

marge This repository releases the code for Generating Query Focused Summaries from Query-Free Resources. Please cite the following paper [bib] if you

This repository contains the code for "Self-Diagnosis and Self-Debiasing: A Proposal for Reducing Corpus-Based Bias in NLP".

Self-Diagnosis and Self-Debiasing This repository contains the source code for Self-Diagnosis and Self-Debiasing: A Proposal for Reducing Corpus-Based

open-information-extraction-system, build open-knowledge-graph(SPO, subject-predicate-object) by pyltp(version==3.4.0)

中文开放信息抽取系统, open-information-extraction-system, build open-knowledge-graph(SPO, subject-predicate-object) by pyltp(version==3.4.0)

Code for "Finetuning Pretrained Transformers into Variational Autoencoders"

transformers-into-vaes Code for Finetuning Pretrained Transformers into Variational Autoencoders (our submission to NLP Insights Workshop 2021). Gathe

Machine learning models from Singapore's NLP research community

SG-NLP Machine learning models from Singapore's natural language processing (NLP) research community. sgnlp is a Python package that allows you to eas

Scraping and analysis of leetcode-compensations page.

Leetcode compensations report Scraping and analysis of leetcode-compensations page.

自然言語で書かれた時間情報表現を抽出/規格化するルールベースの解析器

ja-timex 自然言語で書かれた時間情報表現を抽出/規格化するルールベースの解析器 概要 ja-timex は、現代日本語で書かれた自然文に含まれる時間情報表現を抽出しTIMEX3と呼ばれるアノテーション仕様に変換することで、プログラムが利用できるような形に規格化するルールベースの解析器です。

Code Repo for the ACL21 paper "Common Sense Beyond English: Evaluating and Improving Multilingual LMs for Commonsense Reasoning"

Common Sense Beyond English: Evaluating and Improving Multilingual LMs for Commonsense Reasoning This is the Github repository of our paper, "Common S

NLPShala , the best IDE for all Natural language processing tasks.

The revolutionary IDE for all NLP (Natural language processing) stuffs on the internet.

Few-shot NLP benchmark for unified, rigorous eval

FLEX FLEX is a benchmark and framework for unified, rigorous few-shot NLP evaluation. FLEX enables: First-class NLP support Support for meta-training

REST API for sentence tokenization and embedding using Multilingual Universal Sentence Encoder.

MUSE stands for Multilingual Universal Sentence Encoder - multilingual extension (supports 16 languages) of Universal Sentence Encoder (USE).

A text augmentation tool for named entity recognition.

neraug This python library helps you with augmenting text data for named entity recognition. Augmentation Example Reference from An Analysis of Simple

Pervasive Attention: 2D Convolutional Networks for Sequence-to-Sequence Prediction

This is a fork of Fairseq(-py) with implementations of the following models: Pervasive Attention - 2D Convolutional Neural Networks for Sequence-to-Se

PyTorch Language Model for 1-Billion Word (LM1B / GBW) Dataset

PyTorch Large-Scale Language Model A Large-Scale PyTorch Language Model trained on the 1-Billion Word (LM1B) / (GBW) dataset Latest Results 39.98 Perp

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

Implementation of character based convolutional neural network

Character Based CNN This repo contains a PyTorch implementation of a character-level convolutional neural network for text classification. The model a

Simple Text-Generator with OpenAI gpt-2 Pytorch Implementation

GPT2-Pytorch with Text-Generator Better Language Models and Their Implications Our model, called GPT-2 (a successor to GPT), was trained simply to pre

Official source for spanish Language Models and resources made @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish Language Models 💃🏻 Corpora 📃 Corpora Number of documents Size (GB) BNE 201,080,084 570GB Models 🤖 RoBERTa-base BNE: https://huggingface.co

DRIFT is a tool for Diachronic Analysis of Scientific Literature.

About DRIFT is a tool for Diachronic Analysis of Scientific Literature. The application offers user-friendly and customizable utilities for two modes:

Universal Adversarial Triggers for Attacking and Analyzing NLP (EMNLP 2019)

Universal Adversarial Triggers for Attacking and Analyzing NLP This is the official code for the EMNLP 2019 paper, Universal Adversarial Triggers for

A pytorch implementation of the ACL2019 paper "Simple and Effective Text Matching with Richer Alignment Features".

RE2 This is a pytorch implementation of the ACL 2019 paper "Simple and Effective Text Matching with Richer Alignment Features". The original Tensorflo

🛠 All-in-one web-based IDE specialized for machine learning and data science.

All-in-one web-based development environment for machine learning Getting Started • Features & Screenshots • Support • Report a Bug • FAQ • Known Issu

A repo for open resources & information for people to succeed in PhD in CS & career in AI / NLP

A repo for open resources & information for people to succeed in PhD in CS & career in AI / NLP

🤗 Push your spaCy pipelines to the Hugging Face Hub

spacy-huggingface-hub: Push your spaCy pipelines to the Hugging Face Hub This package provides a CLI command for uploading any trained spaCy pipeline

Use different orders of N-gram model to play Hangman game.

Hangman game The Hangman game is a game whereby one person thinks of a word, which is kept secret from another person, who tries to guess the word one

This is my reading list for my PhD in AI, NLP, Deep Learning and more.

This is my reading list for my PhD in AI, NLP, Deep Learning and more.

ACL'2021: Learning Dense Representations of Phrases at Scale

DensePhrases DensePhrases is an extractive phrase search tool based on your natural language inputs. From 5 million Wikipedia articles, it can search

Huggingface Transformers + Adapters = ❤️

adapter-transformers A friendly fork of HuggingFace's Transformers, adding Adapters to PyTorch language models adapter-transformers is an extension of

Graph4nlp is the library for the easy use of Graph Neural Networks for NLP

Graph4NLP Graph4NLP is an easy-to-use library for R&D at the intersection of Deep Learning on Graphs and Natural Language Processing (i.e., DLG4NLP).

Text-to-SQL in the Wild: A Naturally-Occurring Dataset Based on Stack Exchange Data

SEDE SEDE (Stack Exchange Data Explorer) is new dataset for Text-to-SQL tasks with more than 12,000 SQL queries and their natural language description

中文无监督SimCSE Pytorch实现

A PyTorch implementation of unsupervised SimCSE SimCSE: Simple Contrastive Learning of Sentence Embeddings 1. 用法 无监督训练 python train_unsup.py ./data/ne

Meta-learning for NLP

Self-Supervised Meta-Learning for Few-Shot Natural Language Classification Tasks Code for training the meta-learning models and fine-tuning on downstr

CausaLM: Causal Model Explanation Through Counterfactual Language Models

CausaLM: Causal Model Explanation Through Counterfactual Language Models Authors: Amir Feder, Nadav Oved, Uri Shalit, Roi Reichart Abstract: Understan

TunBERT is the first release of a pre-trained BERT model for the Tunisian dialect using a Tunisian Common-Crawl-based dataset.

TunBERT is the first release of a pre-trained BERT model for the Tunisian dialect using a Tunisian Common-Crawl-based dataset. TunBERT was applied to three NLP downstream tasks: Sentiment Analysis (SA), Tunisian Dialect Identification (TDI) and Reading Comprehension Question-Answering (RCQA)

Sequence model architectures from scratch in PyTorch

This repository implements a variety of sequence model architectures from scratch in PyTorch. Effort has been put to make the code well structured so that it can serve as learning material. The training loop implements the learner design pattern from fast.ai in pure PyTorch, with access to the loop provided through callbacks. Detailed logging and graphs are also provided with python logging and wandb. Additional implementations will be added.

Deep Learning for Natural Language Processing - Lectures 2021

This repository contains slides for the course "20-00-0947: Deep Learning for Natural Language Processing" (Technical University of Darmstadt, Summer term 2021).

Jina allows you to build deep learning-powered search-as-a-service in just minutes

Cloud-native neural search framework for any kind of data

In this repository, I have developed an end to end Automatic speech recognition project. I have developed the neural network model for automatic speech recognition with PyTorch and used MLflow to manage the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry.

End to End Automatic Speech Recognition In this repository, I have developed an end to end Automatic speech recognition project. I have developed the

Markup is an online annotation tool that can be used to transform unstructured documents into structured formats for NLP and ML tasks, such as named-entity recognition. Markup learns as you annotate in order to predict and suggest complex annotations. Markup also provides integrated access to existing and custom ontologies, enabling the prediction and suggestion of ontology mappings based on the text you're annotating.

Markup is an online annotation tool that can be used to transform unstructured documents into structured formats for NLP and ML tasks, such as named-entity recognition. Markup learns as you annotate in order to predict and suggest complex annotations. Markup also provides integrated access to existing and custom ontologies, enabling the prediction and suggestion of ontology mappings based on the text you're annotating.

This repository contains all the source code that is needed for the project : An Efficient Pipeline For Bloom’s Taxonomy Using Natural Language Processing and Deep Learning

Pipeline For NLP with Bloom's Taxonomy Using Improved Question Classification and Question Generation using Deep Learning This repository contains all

CausalNLP is a practical toolkit for causal inference with text as treatment, outcome, or "controlled-for" variable.

CausalNLP CausalNLP is a practical toolkit for causal inference with text as treatment, outcome, or "controlled-for" variable. Install pip install -U

Unifying Cross-Lingual Semantic Role Labeling with Heterogeneous Linguistic Resources (NAACL-2021).

Unifying Cross-Lingual Semantic Role Labeling with Heterogeneous Linguistic Resources Description This is the repository for the paper Unifying Cross-

A Neural Language Style Transfer framework to transfer natural language text smoothly between fine-grained language styles like formal/casual, active/passive, and many more. Created by Prithiviraj Damodaran. Open to pull requests and other forms of collaboration.

Styleformer A Neural Language Style Transfer framework to transfer natural language text smoothly between fine-grained language styles like formal/cas

Load What You Need: Smaller Multilingual Transformers for Pytorch and TensorFlow 2.0.

Smaller Multilingual Transformers This repository shares smaller versions of multilingual transformers that keep the same representations offered by t

Code for our ACL 2021 (Findings) Paper - Fingerprinting Fine-tuned Language Models in the wild .

🌳 Fingerprinting Fine-tuned Language Models in the wild This is the code and dataset for our ACL 2021 (Findings) Paper - Fingerprinting Fine-tuned La

Repository for the COLING 2020 paper "Explainable Automated Fact-Checking: A Survey."

Explainable Fact Checking: A Survey This repository and the accompanying webpage contain resources for the paper "Explainable Fact Checking: A Survey"

SparseML is a libraries for applying sparsification recipes to neural networks with a few lines of code, enabling faster and smaller models

SparseML is a toolkit that includes APIs, CLIs, scripts and libraries that apply state-of-the-art sparsification algorithms such as pruning and quantization to any neural network. General, recipe-driven approaches built around these algorithms enable the simplification of creating faster and smaller models for the ML performance community at large.

A curated list of awesome resources related to Semantic Search🔎 and Semantic Similarity tasks.

A curated list of awesome resources related to Semantic Search🔎 and Semantic Similarity tasks.

An IVR Chatbot which can exponentially reduce the burden of companies as well as can improve the consumer/end user experience.

IVR-Chatbot Achievements 🏆 Team Uhtred won the Maverick 2.0 Bot-a-thon 2021 organized by AbInbev India. ❓ Problem Statement As we all know that, lot

![[NAACL & ACL 2021] SapBERT: Self-alignment pretraining for BERT.](https://github.com/cambridgeltl/sapbert/raw/main/sapbert_front_graphs_v6.png?raw=true)

[NAACL & ACL 2021] SapBERT: Self-alignment pretraining for BERT.

SapBERT: Self-alignment pretraining for BERT This repo holds code for the SapBERT model presented in our NAACL 2021 paper: Self-Alignment Pretraining

MILES is a multilingual text simplifier inspired by LSBert - A BERT-based lexical simplification approach proposed in 2018. Unlike LSBert, MILES uses the bert-base-multilingual-uncased model, as well as simple language-agnostic approaches to complex word identification (CWI) and candidate ranking.

MILES Multilingual Lexical Simplifier Explore the docs » Read LSBert Paper · Report Bug · Request Feature About The Project MILES is a multilingual te

:hot_pepper: R²SQL: "Dynamic Hybrid Relation Network for Cross-Domain Context-Dependent Semantic Parsing." (AAAI 2021)

R²SQL The PyTorch implementation of paper Dynamic Hybrid Relation Network for Cross-Domain Context-Dependent Semantic Parsing. (AAAI 2021) Requirement