41 Repositories

Python Pixiv-spider Libraries

Mailer is python3 script use for sending spear-phishing to target email...It was created by Spider Anongreyhat

Mailer Mailer is a python3 script. It's used for sending spear-phishing to target email...It was created by Spider Anongreyhat Screenshots Installatio

Blazing fast GraphQL endpoints finder using subdomain enumeration, scripts analysis and bruteforce.

Graphinder Graphinder is a tool that extracts all GraphQL endpoints from a given domain. Run with docker docker run -it -v $(pwd):/usr/bin/graphinder

SpiderArcadeGame - A game where the player controls a little spider who is trying to protect herself from other invasive bugs

SpiderArcadeGame - A game where the player controls a little spider who is trying to protect herself from other invasive bugs

Amazon web scraping using Scrapy Framework

Amazon-web-scraping-using-Scrapy-Framework Scrapy Scrapy is an application framework for crawling web sites and extracting structured data which can b

Pypixiv - A fully-typed, asynchronous api wrapper for pixiv

pypixiv this library is a fully-typed, asynchronous api wrapper for pixiv. featu

Desktop utility to download images/videos/music/text from various websites, and more

Desktop utility to download images/videos/music/text from various websites, and more

A web crawler for recording posts in "sina weibo"

Web Crawler for "sina weibo" A web crawler for recording posts in "sina weibo" Introduction This script helps collect attributes of posts in "sina wei

High available distributed ip proxy pool, powerd by Scrapy and Redis

高可用IP代理池 README | 中文文档 本项目所采集的IP资源都来自互联网,愿景是为大型爬虫项目提供一个高可用低延迟的高匿IP代理池。 项目亮点 代理来源丰富 代理抓取提取精准 代理校验严格合理 监控完备,鲁棒性强 架构灵活,便于扩展 各个组件分布式部署 快速开始 注意,代码请在release

This Spider/Bot is developed using Python and based on Scrapy Framework to Fetch some items information from Amazon

- Hello, This Project Contains Amazon Web-bot. - I've developed this bot for fething some items information on Amazon. - Scrapy Framework in Python is

✂️🕷️ Spider-Cut is a Network Mapper Framework (NMAP Framework)

Spider-Cut is a Network Mapper Framework (NMAP Framework) Installation | Usage | Creators | Donate Installation # Kali Linux | WSL

Chatbot construido com o framework Rasa para responder dúvidas referentes ao COVID-19.

Racom Chatbot Chatbot construido com o framework Rasa. Como executar Necessário instalar Docker e Docker Compose. Para inicializar a aplicação, basta

Implementation of the Spider-Man Game

Projeto FPRO FPRO/LEIC, 2021/22 Francisco Campos (up202108735) 1LEIC08 Objetivo Criar um clone do clássico Spider-Man em Pygame... Repositório de códi

Creating Scrapy scrapers via the Django admin interface

django-dynamic-scraper Django Dynamic Scraper (DDS) is an app for Django which builds on top of the scraping framework Scrapy and lets you create and

A spider for Universal Online Judge(UOJ) system, converting problem pages to PDFs.

Universal Online Judge Spider Introduction This is a spider for Universal Online Judge (UOJ) system (https://uoj.ac/). It also works for all other Onl

Python3 script to dump employee information from XING API

XingDumper Python 3 script to dump company employees from XING API. Perfect OSINT tool ;-) The results contain firstname, lastname, position, gender,

A Pixiv web crawler module

Pixiv-spider A Pixiv spider module WARNING It's an unfinished work, browsing the code carefully before using it. Features 0004 - Readme.md updated, co

Crawler do site Fundamentus.com com o uso do framework scrapy, tanto da aba detalhada como a de resumo.

Crawler do site Fundamentus.com com o uso do framework scrapy, tanto da aba detalhada como a de resumo. (Todas as infomações)

An arxiv spider

An Arxiv Spider 做为一个cser,杰出男孩深知内核对连接到计算机上的硬件设备进行管理的高效方式是中断而不是轮询。每当小伙伴发来一篇刚挂在arxiv上的”热乎“好文章时,杰出男孩都会感叹道:”师兄这是每天都挂在arxiv上呀,跑的好快~“。于是杰出男孩找了找 github,借鉴了一下其

a Scrapy spider that utilizes Postgres as a DB, Squid as a proxy server, Redis for de-duplication and Splash to render JavaScript. All in a microservices architecture utilizing Docker and Docker Compose

This is George's Scraping Project To get started cd into the theZoo file and run: chmod +x script.sh then: ./script.sh This will spin up a Postgres co

A low-code tool that generates python crawler code based on curl or url

KKBA Intruoduction A low-code tool that generates python crawler code based on curl or url Requirement Python = 3.6 Install pip install kkba Usage Co

Get your Pixiv token (for running upbit/pixivpy)

gppt: get-pixivpy-token Get your Pixiv token (for running upbit/pixivpy) Refine pixiv_auth.py + its fork Install ❭ pip install gppt Run Note: In advan

A Telegram bot that searches for the original source of anime, manga, and art

A Telegram bot that searches for the original source of anime, manga, and art How to use the bot Just send a screenshot of the anime, manga or art or

Pixiv 爬虫,使用 Python 实现。支持批量下载、上传到图床。

用 Python 实现的 Pixiv 爬虫,支持批量下载和上传。 随机图片 API: https://loliapi.ml/ Deploy Github Action 集成部署 建议使用本方法部署,相较于本地部署,无需搭建环境,全程在线上完成。并且使用国外服务器下载、上传,网络更加通畅。 Fork

一个基于Python3的Bot。目前支持以Docker的方式部署在vps上。支持Aria2、本子下载、网易云音乐下载、Pixiv榜单下载、Youtue-dl支持、搜图。

介绍 一个基于Python3的Bot。目前支持以Docker的方式部署在vps上。 主要功能: 文件管理 修改主界面为 filebrowser,账号为admin,密码为admin,主界面路径:http://ip:port,请自行修改密码 FolderMagic自带的webdav:路径:http://

A Spider for BiliBili comments with a simple API server.

BiliComment A spider for BiliBili comment. Spider Usage Put config.json into config directory, and then python . ./config/config.json. A example confi

Text-to-SQL in the Wild: A Naturally-Occurring Dataset Based on Stack Exchange Data

SEDE SEDE (Stack Exchange Data Explorer) is new dataset for Text-to-SQL tasks with more than 12,000 SQL queries and their natural language description

A custom-designed Spider Robot trained to walk using Deep RL in a PyBullet Simulation

SpiderBot_DeepRL Title: Implementation of Single and Multi-Agent Deep Reinforcement Learning Algorithms for a Walking Spider Robot Authors(s): Arijit

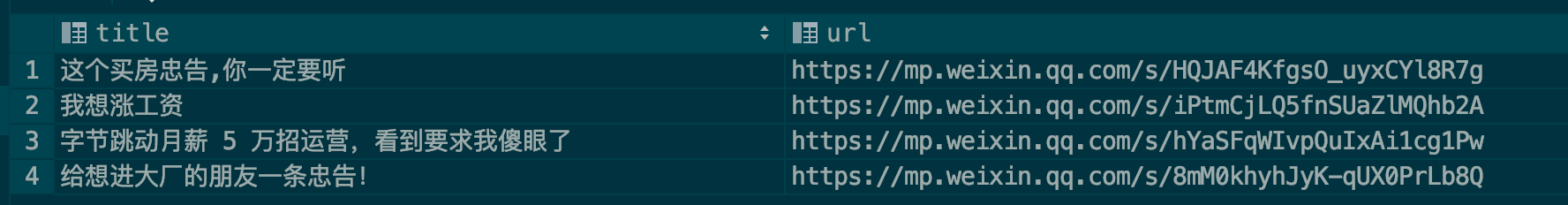

构建一个多源(公众号、RSS)、干净、个性化的阅读环境

2C 构建一个多源(公众号、RSS)、干净、个性化的阅读环境 作为一名微信公众号的重度用户,公众号一直被我设为汲取知识的地方。随着使用程度的增加,相信大家或多或少会有一个比较头疼的问题——广告问题。 假设你关注的公众号有十来个,若一个公众号两周接一次广告,理论上你会面临二十多次广告,实际上会更多,运

feapder 是一款简单、快速、轻量级的爬虫框架。以开发快速、抓取快速、使用简单、功能强大为宗旨。支持分布式爬虫、批次爬虫、多模板爬虫,以及完善的爬虫报警机制。

feapder 是一款简单、快速、轻量级的爬虫框架。起名源于 fast、easy、air、pro、spider的缩写,以开发快速、抓取快速、使用简单、功能强大为宗旨,历时4年倾心打造。支持轻量爬虫、分布式爬虫、批次爬虫、爬虫集成,以及完善的爬虫报警机制。 之

spider-admin-pro

Spider Admin Pro Github: https://github.com/mouday/spider-admin-pro Gitee: https://gitee.com/mouday/spider-admin-pro Pypi: https://pypi.org/

Web crawling framework based on asyncio.

Web crawling framework for everyone. Written with asyncio, uvloop and aiohttp. Requirements Python3.5+ Installation pip install gain pip install uvloo

Incredibly fast crawler designed for OSINT.

Photon Incredibly fast crawler designed for OSINT. Photon Wiki • How To Use • Compatibility • Photon Library • Contribution • Roadmap Key Features Dat

Async Python 3.6+ web scraping micro-framework based on asyncio

Ruia 🕸️ Async Python 3.6+ web scraping micro-framework based on asyncio. ⚡ Write less, run faster. Overview Ruia is an async web scraping micro-frame

Distributed Crawler Management Framework Based on Scrapy, Scrapyd, Django and Vue.js

Gerapy Distributed Crawler Management Framework Based on Scrapy, Scrapyd, Scrapyd-Client, Scrapyd-API, Django and Vue.js. Documentation Documentation

Command-line program to download image galleries and collections from several image hosting sites

gallery-dl gallery-dl is a command-line program to download image galleries and collections from several image hosting sites (see Supported Sites). It

LSpider 一个为被动扫描器定制的前端爬虫

LSpider LSpider - 一个为被动扫描器定制的前端爬虫 什么是LSpider? 一款为被动扫描器而生的前端爬虫~ 由Chrome Headless、LSpider主控、Mysql数据库、RabbitMQ、被动扫描器5部分组合而成。

An alternative implement of Imjad API | Imjad API 的开源替代

HibiAPI An alternative implement of Imjad API. Imjad API 的开源替代. 前言 由于Imjad API这是什么?使用人数过多, 致使调用超出限制, 所以本人希望提供一个开源替代来供社区进行自由的部署和使用, 从而减轻一部分该API的使用压力 优势

Every web site provides APIs.

Toapi Overview Toapi give you the ability to make every web site provides APIs. Version v2.0.0, Completely rewrote. More elegant. More pythonic v1.0.0

A Powerful Spider(Web Crawler) System in Python.

pyspider A Powerful Spider(Web Crawler) System in Python. Write script in Python Powerful WebUI with script editor, task monitor, project manager and

Web Scraping Framework

Grab Framework Documentation Installation $ pip install -U grab See details about installing Grab on different platforms here http://docs.grablib.