46 Repositories

Python airflow-operators Libraries

A data engineering project with Kafka, Spark Streaming, dbt, Docker, Airflow, Terraform, GCP and much more!

Streamify A data pipeline with Kafka, Spark Streaming, dbt, Docker, Airflow, Terraform, GCP and much more! Description Objective The project will stre

Free Data Engineering course!

Data Engineering Zoomcamp Register in DataTalks.Club's Slack Join the #course-data-engineering channel The videos are published to DataTalks.Club's Yo

Learn the basics of Python. These tutorials are for Python beginners. so even if you have no prior knowledge of Python, you won’t face any difficulty understanding these tutorials.

01_Python_Introduction Introduction 👋 Python is a modern, robust, high level programming language. It is very easy to pick up even if you are complet

Code for the SIGGRAPH 2022 paper "DeltaConv: Anisotropic Operators for Geometric Deep Learning on Point Clouds."

DeltaConv [Paper] [Project page] Code for the SIGGRAPH 2022 paper "DeltaConv: Anisotropic Operators for Geometric Deep Learning on Point Clouds" by Ru

Yet another Airflow plugin using CLI command as RESTful api, supports Airflow v2.X.

中文版文档 Airflow Extended API Plugin Airflow Extended API, which export airflow CLI command as REST-ful API to extend the ability of airflow official API

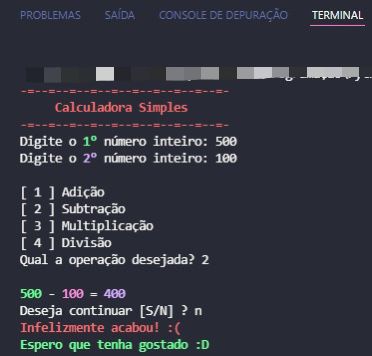

Calculadora-basica - Calculator with basic operators

Calculadora básica Calculadora com operadores básicos; O programa solicitará a d

GLODISMO: Gradient-Based Learning of Discrete Structured Measurement Operators for Signal Recovery

GLODISMO: Gradient-Based Learning of Discrete Structured Measurement Operators for Signal Recovery This is the code to the paper: Gradient-Based Learn

A reproduction repo for a Scheduling bug in AirFlow 2.2.3

A reproduction repo for a Scheduling bug in AirFlow 2.2.3

Airflow ETL With EKS EFS Sagemaker

Airflow ETL With EKS EFS & Sagemaker (en desarrollo) Diagrama de la solución Imp

Pizza Orders Data Pipeline Usecase Solved by SQL, Sqoop, HDFS, Hive, Airflow.

PizzaOrders_DataPipeline There is a Tony who is owning a New Pizza shop. He knew that pizza alone was not going to help him get seed funding to expand

Dag-bakery - Dag Bakery enables the capability to define Airflow DAGs via YAML.

DAG Bakery - WIP 🔧 dag-bakery aims to simplify our DAG development by removing all the boilerplate and duplicated code when defining multiple DAG cro

Sparse-dense operators implementation for Paddle

Sparse-dense operators implementation for Paddle This module implements coo, csc and csr matrix formats and their inter-ops with dense matrices. Feel

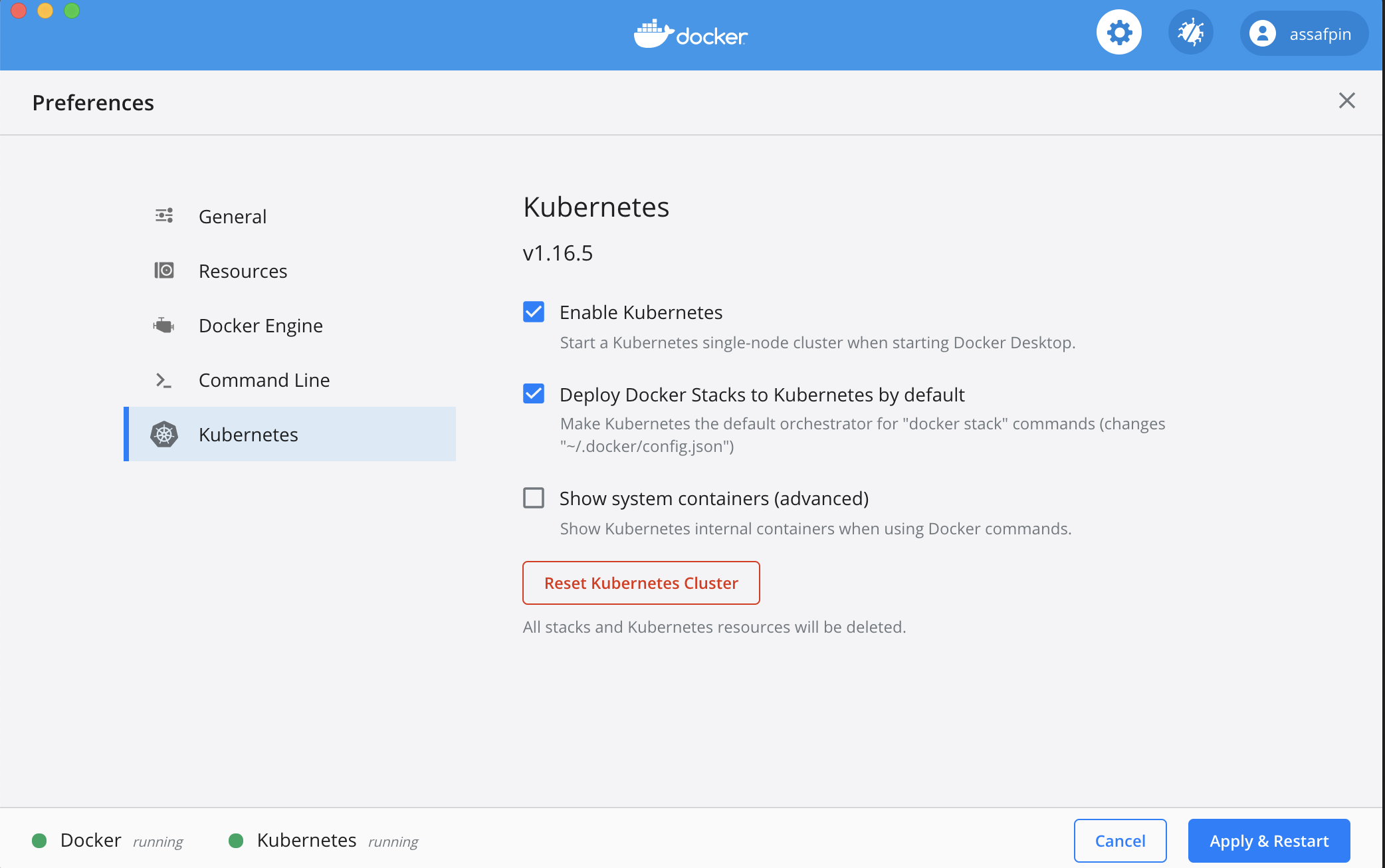

A lightweight tool to get an AI Infrastructure Stack up in minutes not days.

K3ai will take care of setup K8s for You, deploy the AI tool of your choice and even run your code on it.

Testbed of AI Systems Quality Management

qunomon Description A testbed for testing and managing AI system qualities. Demo Sorry. Not deployment public server at alpha version. Requirement Ins

Multiwavelets-based operator model

Multiwavelet model for Operator maps Gaurav Gupta, Xiongye Xiao, and Paul Bogdan Multiwavelet-based Operator Learning for Differential Equations In Ne

Apache Airflow - A platform to programmatically author, schedule, and monitor workflows

Apache Airflow Apache Airflow (or simply Airflow) is a platform to programmatically author, schedule, and monitor workflows. When workflows are define

Keeper for Ricochet Protocol, implemented with Apache Airflow

Ricochet Keeper This repository contains Apache Airflow DAGs for executing keeper operations for Ricochet Exchange. Usage You will need to run this us

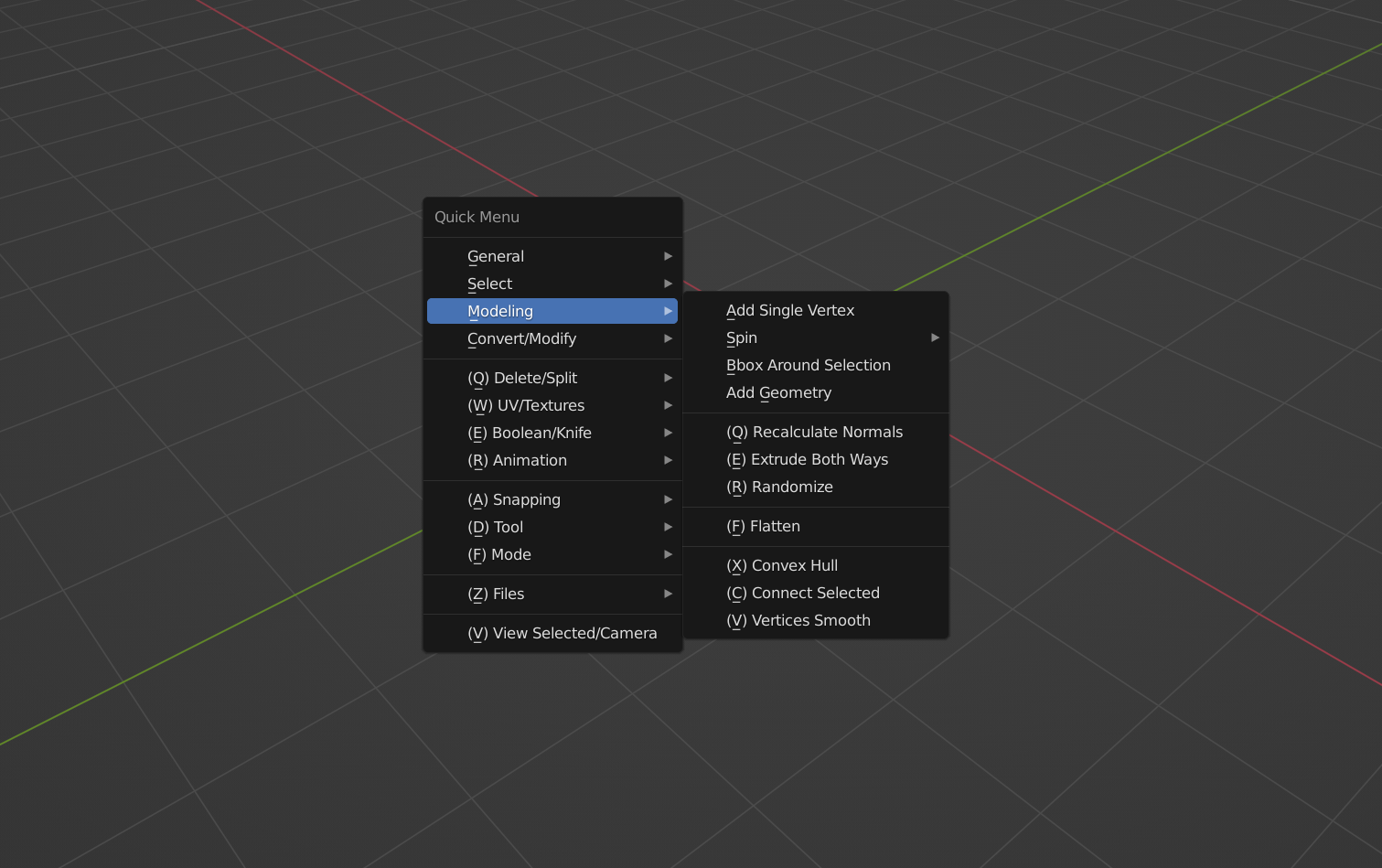

Blender addon that simplifies access to useful operators and adds missing functionality

Quick Menu is a Blender addon that simplifies common tasks Compatible with Blender 3.x.x Install through Edit - Preferences - Addons - Install... -

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators This is our Pytorch implementation for t

Jittor is a high-performance deep learning framework based on JIT compiling and meta-operators.

Jittor: a Just-in-time(JIT) deep learning framework Quickstart | Install | Tutorial | Chinese Jittor is a high-performance deep learning framework bas

Repositório para estudo do airflow

airflow-101 Repositório para estudo do airflow Docker criado baseado no tutorial Exemplo de API da pokeapi Para executar clone o repo execute as confi

Crypto Stats and Tweets Data Pipeline using Airflow

Crypto Stats and Tweets Data Pipeline using Airflow Introduction Project Overview This project was brought upon through Udacity's nanodegree program.

Sample code for Harry's Airflow online trainng course

Sample code for Harry's Airflow online trainng course You can find the videos on youtube or bilibili. I am working on adding below things: the slide p

An Airflow operator to call the main function from the dbt-core Python package

airflow-dbt-python An Airflow operator to call the main function from the dbt-core Python package Motivation Airflow running in a managed environment

Scheduled Block Checker for Cardano Stakepool Operators

ScheduledBlocks Scheduled Block Checker for Cardano Stakepool Operators Lightweight and Portable Scheduled Blocks Checker for Current Epoch. No cardan

Scheduled Block Checker for Cardano Stakepool Operators

ScheduledBlocks Scheduled Block Checker for Cardano Stakepool Operators Lightweight and Portable Scheduled Blocks Checker for Current Epoch. No cardan

This is a practice on Airflow, which is building virtual env, installing Airflow and constructing data pipeline (DAGs)

airflow-test This is a practice on Airflow, which is Builing virtualbox env and setting Airflow on that env Installing Airflow using python virtual en

A tutorial presents several practical examples of how to build DAGs in Apache Airflow

Apache Airflow - Python Brasil 2021 Este tutorial apresenta vários exemplos práticos de como construir DAGs no Apache Airflow. Background Apache Airfl

Show you how to integrate Zeppelin with Airflow

Introduction This repository is to show you how to integrate Zeppelin with Airflow. The philosophy behind the ingtegration is to make the transition f

Automatizando a criação de DAGs usando Jinja e YAML

Automatizando a criação de DAGs no Airflow usando Jinja e YAML Arquitetura do Repo: Pastas por contexto de negócio (ex: Marketing, Analytics, HR, etc)

Write maintainable, production-ready pipelines using Jupyter or your favorite text editor. Develop locally, deploy to the cloud. ☁️

Write maintainable, production-ready pipelines using Jupyter or your favorite text editor. Develop locally, deploy to the cloud. ☁️

Project repository of Apache Airflow, deployed on Docker in Amazon EC2 via GitLab.

Airflow on Docker in EC2 + GitLab's CI/CD Personal project for simple data pipeline using Airflow. Airflow will be installed inside Docker container,

Airflow Operator for running Soda SQL scans

Airflow Operator for running Soda SQL scans

Example repository for custom C++/CUDA operators for TorchScript

Custom TorchScript Operators Example This repository contains examples for writing, compiling and using custom TorchScript operators. See here for the

Reference python implementation of Chia pool operations for pool operators

This repository provides a sample server written in python, which is meant to server as a basis for a Chia Pool. While this is a fully functional implementation, it requires some work in scalability and security to run in production.

The elegance of Airflow + the power of AWS

Orkestra The elegance of Airflow + the power of AWS

Apache Liminal is an end-to-end platform for data engineers & scientists, allowing them to build, train and deploy machine learning models in a robust and agile way

Apache Liminals goal is to operationalise the machine learning process, allowing data scientists to quickly transition from a successful experiment to an automated pipeline of model training, validation, deployment and inference in production. Liminal provides a Domain Specific Language to build ML workflows on top of Apache Airflow.

Cloud-native, data onboarding architecture for the Google Cloud Public Datasets program

Public Datasets Pipelines Cloud-native, data pipeline architecture for onboarding datasets to the Google Cloud Public Datasets Program. Overview Requi

Educational project on how to build an ETL (Extract, Transform, Load) data pipeline, orchestrated with Airflow.

ETL Pipeline with Airflow, Spark, s3, MongoDB and Amazon Redshift

Learning nonlinear operators via DeepONet

DeepONet: Learning nonlinear operators The source code for the paper Learning nonlinear operators via DeepONet based on the universal approximation th

Viewflow is an Airflow-based framework that allows data scientists to create data models without writing Airflow code.

Viewflow Viewflow is a framework built on the top of Airflow that enables data scientists to create materialized views. It allows data scientists to f

Several simple examples for popular neural network toolkits calling custom CUDA operators.

Neural Network CUDA Example Several simple examples for neural network toolkits (PyTorch, TensorFlow, etc.) calling custom CUDA operators. We provide

Apache Airflow - A platform to programmatically author, schedule, and monitor workflows

Apache Airflow Apache Airflow (or simply Airflow) is a platform to programmatically author, schedule, and monitor workflows. When workflows are define

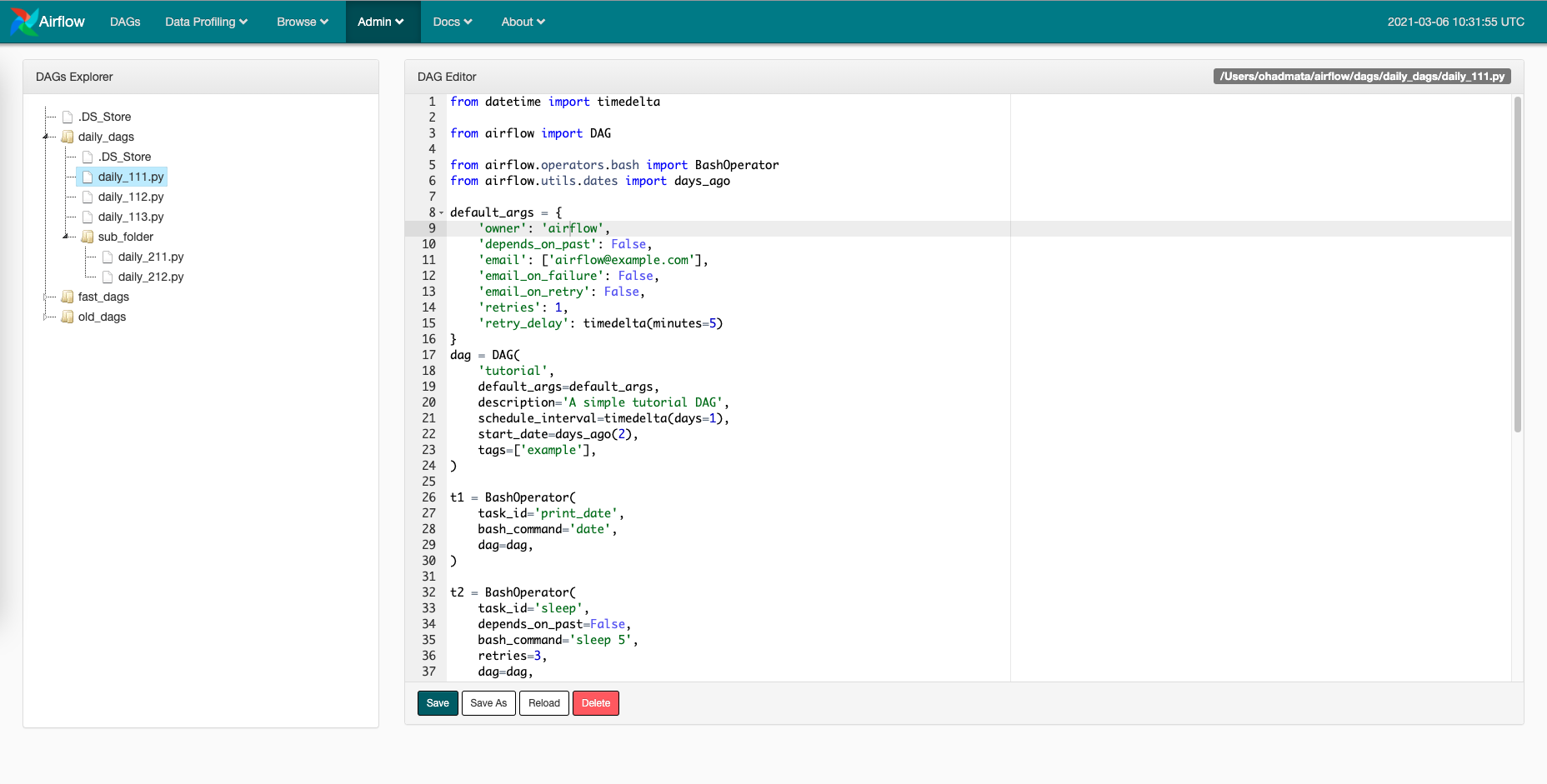

Zero configuration Airflow plugin that let you manage your DAG files.

simple-dag-editor SimpleDagEditor is a zero configuration plugin for Apache Airflow. It provides a file managing interface that points to your dag_fol

Soda SQL Data testing, monitoring and profiling for SQL accessible data.

Soda SQL Data testing, monitoring and profiling for SQL accessible data. What does Soda SQL do? Soda SQL allows you to Stop your pipeline when bad dat

Unified Interface for Constructing and Managing Workflows on different workflow engines, such as Argo Workflows, Tekton Pipelines, and Apache Airflow.

Couler What is Couler? Couler aims to provide a unified interface for constructing and managing workflows on different workflow engines, such as Argo