849 Repositories

Python efficient-transformers Libraries

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed+Megatron trained the world's most powerful language model: MT-530B DeepSpeed is hiring, come join us! DeepSpeed is a deep learning optimizat

Optimizing Deeper Transformers on Small Datasets

DT-Fixup Optimizing Deeper Transformers on Small Datasets Paper published in ACL 2021: arXiv Detailed instructions to replicate our results in the pap

[ACL-IJCNLP 2021] "EarlyBERT: Efficient BERT Training via Early-bird Lottery Tickets"

EarlyBERT This is the official implementation for the paper in ACL-IJCNLP 2021 "EarlyBERT: Efficient BERT Training via Early-bird Lottery Tickets" by

Official Pytorch Implementation of Length-Adaptive Transformer (ACL 2021)

Length-Adaptive Transformer This is the official Pytorch implementation of Length-Adaptive Transformer. For detailed information about the method, ple

Research code for "What to Pre-Train on? Efficient Intermediate Task Selection", EMNLP 2021

efficient-task-transfer This repository contains code for the experiments in our paper "What to Pre-Train on? Efficient Intermediate Task Selection".

PyTorch implementation of the ACL, 2021 paper Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks.

Parameter-efficient Multi-task Fine-tuning for Transformers via Shared Hypernetworks This repo contains the PyTorch implementation of the ACL, 2021 pa

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

Efficient training of deep recommenders on cloud.

HybridBackend Introduction HybridBackend is a training framework for deep recommenders which bridges the gap between evolving cloud infrastructure and

Official Repository for "Robust On-Policy Data Collection for Data Efficient Policy Evaluation" (NeurIPS 2021 Workshop on OfflineRL).

Robust On-Policy Data Collection for Data-Efficient Policy Evaluation Source code of Robust On-Policy Data Collection for Data-Efficient Policy Evalua

Official PyTorch Implementation of HELP: Hardware-adaptive Efficient Latency Prediction for NAS via Meta-Learning (NeurIPS 2021 Spotlight)

[NeurIPS 2021 Spotlight] HELP: Hardware-adaptive Efficient Latency Prediction for NAS via Meta-Learning [Paper] This is Official PyTorch implementatio

Sample Code for "Pessimism Meets Invariance: Provably Efficient Offline Mean-Field Multi-Agent RL"

Sample Code for "Pessimism Meets Invariance: Provably Efficient Offline Mean-Field Multi-Agent RL" This is the official codebase for Pessimism Meets I

The official implementation of EIGNN: Efficient Infinite-Depth Graph Neural Networks (NeurIPS 2021)

EIGNN: Efficient Infinite-Depth Graph Neural Networks The official implementation of EIGNN: Efficient Infinite-Depth Graph Neural Networks (NeurIPS 20

This repository contains the implementation of the paper: Federated Distillation of Natural Language Understanding with Confident Sinkhorns

Federated Distillation of Natural Language Understanding with Confident Sinkhorns This repository provides an alternative method for ensembled distill

Code for "ATISS: Autoregressive Transformers for Indoor Scene Synthesis", NeurIPS 2021

ATISS: Autoregressive Transformers for Indoor Scene Synthesis This repository contains the code that accompanies our paper ATISS: Autoregressive Trans

End-to-End Referring Video Object Segmentation with Multimodal Transformers

End-to-End Referring Video Object Segmentation with Multimodal Transformers This repo contains the official implementation of the paper: End-to-End Re

Pre-Training 3D Point Cloud Transformers with Masked Point Modeling

Point-BERT: Pre-Training 3D Point Cloud Transformers with Masked Point Modeling Created by Xumin Yu*, Lulu Tang*, Yongming Rao*, Tiejun Huang, Jie Zho

![[IEEE Transactions on Computational Imaging] Self-Gated Memory Recurrent Network for Efficient Scalable HDR Deghosting](https://github.com/Susmit-A/HDRRNN/raw/master/github_images/overview.png)

[IEEE Transactions on Computational Imaging] Self-Gated Memory Recurrent Network for Efficient Scalable HDR Deghosting

Few-shot Deep HDR Deghosting This repository contains code and pretrained models for our paper: Self-Gated Memory Recurrent Network for Efficient Scal

This repository contains the source code of our work on designing efficient CNNs for computer vision

Efficient networks for Computer Vision This repo contains source code of our work on designing efficient networks for different computer vision tasks:

Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust.

Subspace Adversarial Training Single-step adversarial training (AT) has received wide attention as it proved to be both efficient and robust. However,

Our implementation used for the MICCAI 2021 FLARE Challenge titled 'Efficient Multi-Organ Segmentation Using SpatialConfiguartion-Net with Low GPU Memory Requirements'.

Efficient Multi-Organ Segmentation Using SpatialConfiguartion-Net with Low GPU Memory Requirements Our implementation used for the MICCAI 2021 FLARE C

Code for our ICCV 2021 Paper "OadTR: Online Action Detection with Transformers".

Code for our ICCV 2021 Paper "OadTR: Online Action Detection with Transformers".

Official Pytorch Code for the paper TransWeather

TransWeather Official Code for the paper TransWeather, Arxiv Tech Report 2021 Paper | Website About this repo: This repo hosts the implentation code,

An optimized prompt tuning strategy comparable to fine-tuning across model scales and tasks.

P-tuning v2 P-Tuning v2: Prompt Tuning Can Be Comparable to Finetuning Universally Across Scales and Tasks An optimized prompt tuning strategy achievi

BinTuner is a cost-efficient auto-tuning framework, which can deliver a near-optimal binary code that reveals much more differences than -Ox settings.

BinTuner is a cost-efficient auto-tuning framework, which can deliver a near-optimal binary code that reveals much more differences than -Ox settings. it also can assist the binary code analysis research in generating more diversified datasets for training and testing. The BinTuner framework is based on OpenTuner, thanks to all contributors for their contributions.

SuMa++: Efficient LiDAR-based Semantic SLAM (Chen et al IROS 2019)

SuMa++: Efficient LiDAR-based Semantic SLAM This repository contains the implementation of SuMa++, which generates semantic maps only using three-dime

A unofficial pytorch implementation of PAN(PSENet2): Efficient and Accurate Arbitrary-Shaped Text Detection with Pixel Aggregation Network

Efficient and Accurate Arbitrary-Shaped Text Detection with Pixel Aggregation Network Requirements pytorch 1.1+ torchvision 0.3+ pyclipper opencv3 gcc

Haystack is an open source NLP framework that leverages Transformer models.

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

Conversational text Analysis using various NLP techniques

PyConverse Let me try first Installation pip install pyconverse Usage Please try this notebook that demos the core functionalities: basic usage noteb

Paper: Cross-View Kernel Similarity Metric Learning Using Pairwise Constraints for Person Re-identification

Cross-View Kernel Similarity Metric Learning Using Pairwise Constraints for Person Re-identification T M Feroz Ali, Subhasis Chaudhuri, ICVGIP-20-21

Package to compute Mauve, a similarity score between neural text and human text. Install with `pip install mauve-text`.

MAUVE MAUVE is a library built on PyTorch and HuggingFace Transformers to measure the gap between neural text and human text with the eponymous MAUVE

Make differentially private training of transformers easy for everyone

private-transformers This codebase facilitates fast experimentation of differentially private training of Hugging Face transformers. What is this? Why

PeCo: Perceptual Codebook for BERT Pre-training of Vision Transformers

PeCo: Perceptual Codebook for BERT Pre-training of Vision Transformers

GNNAdvisor: An Efficient Runtime System for GNN Acceleration on GPUs

GNNAdvisor: An Efficient Runtime System for GNN Acceleration on GPUs [Paper, Slides, Video Talk] at USENIX OSDI'21 @inproceedings{GNNAdvisor, title=

A simple and efficient tool to parallelize Pandas operations on all available CPUs

Pandaral·lel Without parallelization With parallelization Installation $ pip install pandarallel [--upgrade] [--user] Requirements On Windows, Pandara

Code for paper " AdderNet: Do We Really Need Multiplications in Deep Learning?"

AdderNet: Do We Really Need Multiplications in Deep Learning? This code is a demo of CVPR 2020 paper AdderNet: Do We Really Need Multiplications in De

Spectralformer: Rethinking hyperspectral image classification with transformers

Spectralformer: Rethinking hyperspectral image classification with transformers Danfeng Hong, Zhu Han, Jing Yao, Lianru Gao, Bing Zhang, Antonio Plaza

Automated question generation and question answering from Turkish texts using text-to-text transformers

Turkish Question Generation Offical source code for "Automated question generation & question answering from Turkish texts using text-to-text transfor

Tandem Mass Spectrum Prediction with Graph Transformers

MassFormer This is the original implementation of MassFormer, a graph transformer for small molecule MS/MS prediction. Check out the preprint on arxiv

Unleashing Transformers: Parallel Token Prediction with Discrete Absorbing Diffusion for Fast High-Resolution Image Generation from Vector-Quantized Codes

Unleashing Transformers: Parallel Token Prediction with Discrete Absorbing Diffusion for Fast High-Resolution Image Generation from Vector-Quantized C

Efficient semidefinite bounds for multi-label discrete graphical models.

Low rank solvers #################################### benchmark/ : folder with the random instances used in the paper. ############################

CLIPImageClassifier wraps clip image model from transformers

CLIPImageClassifier CLIPImageClassifier wraps clip image model from transformers. CLIPImageClassifier is initialized with the argument classes, these

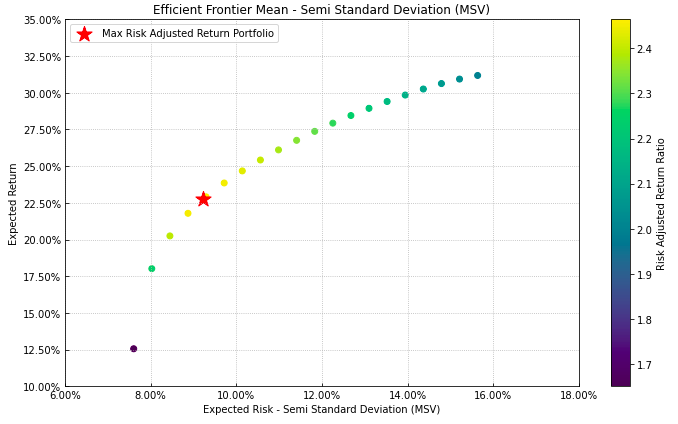

Portfolio Optimization and Quantitative Strategic Asset Allocation in Python

Riskfolio-Lib Quantitative Strategic Asset Allocation, Easy for Everyone. Description Riskfolio-Lib is a library for making quantitative strategic ass

Code to reprudece NeurIPS paper: Accelerated Sparse Neural Training: A Provable and Efficient Method to Find N:M Transposable Masks

Accelerated Sparse Neural Training: A Provable and Efficient Method to FindN:M Transposable Masks Recently, researchers proposed pruning deep neural n

An easy to use, user-friendly and efficient code for extracting OpenAI CLIP (Global/Grid) features from image and text respectively.

Extracting OpenAI CLIP (Global/Grid) Features from Image and Text This repo aims at providing an easy to use and efficient code for extracting image &

An easy to use Natural Language Processing library and framework for predicting, training, fine-tuning, and serving up state-of-the-art NLP models.

Welcome to AdaptNLP A high level framework and library for running, training, and deploying state-of-the-art Natural Language Processing (NLP) models

Use fastai-v2 with HuggingFace's pretrained transformers

FastHugs Use fastai v2 with HuggingFace's pretrained transformers, see the notebooks below depending on your task: Text classification: fasthugs_seq_c

A library that integrates huggingface transformers with the world of fastai, giving fastai devs everything they need to train, evaluate, and deploy transformer specific models.

blurr A library that integrates huggingface transformers with version 2 of the fastai framework Install You can now pip install blurr via pip install

This is the official PyTorch implementation for "Mesa: A Memory-saving Training Framework for Transformers".

A Memory-saving Training Framework for Transformers This is the official PyTorch implementation for Mesa: A Memory-saving Training Framework for Trans

The Most Efficient Temporal Difference Learning Framework for 2048

moporgic/TDL2048+ TDL2048+ is a highly optimized temporal difference (TD) learning framework for 2048. Features Many common methods related to 2048 ar

Feature-engine is a Python library with multiple transformers to engineer and select features for use in machine learning models.

Feature-engine is a Python library with multiple transformers to engineer and select features for use in machine learning models. Feature-engine's transformers follow scikit-learn's functionality with fit() and transform() methods to first learn the transforming parameters from data and then transform the data.

Efficient Speech Processing Tookit for Automatic Speaker Recognition

Sugar Efficient Speech Processing Tookit for Automatic Speaker Recognition | HuggingFace | What's New EfficientTDNN: Efficient Architecture Search for

Iterative Normalization: Beyond Standardization towards Efficient Whitening

IterNorm Code for reproducing the results in the following paper: Iterative Normalization: Beyond Standardization towards Efficient Whitening Lei Huan

A simple, fast, and efficient object detector without FPN

You Only Look One-level Feature (YOLOF), CVPR2021 A simple, fast, and efficient object detector without FPN. This repo provides an implementation for

Complex heatmaps are efficient to visualize associations between different sources of data sets and reveal potential patterns.

Make Complex Heatmaps Complex heatmaps are efficient to visualize associations between different sources of data sets and reveal potential patterns. H

A fast, efficient universal vector embedding utility package.

Magnitude: a fast, simple vector embedding utility library A feature-packed Python package and vector storage file format for utilizing vector embeddi

A library for efficient similarity search and clustering of dense vectors.

Faiss Faiss is a library for efficient similarity search and clustering of dense vectors. It contains algorithms that search in sets of vectors of any

Multi-modal Vision Transformers Excel at Class-agnostic Object Detection

Multi-modal Vision Transformers Excel at Class-agnostic Object Detection

This is the official PyTorch implementation for "Mesa: A Memory-saving Training Framework for Transformers".

Mesa: A Memory-saving Training Framework for Transformers This is the official PyTorch implementation for Mesa: A Memory-saving Training Framework for

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models.

Backprop makes it simple to use, finetune, and deploy state-of-the-art ML models. Solve a variety of tasks with pre-trained models or finetune them in

Quantization library for PyTorch. Support low-precision and mixed-precision quantization, with hardware implementation through TVM.

HAWQ: Hessian AWare Quantization HAWQ is an advanced quantization library written for PyTorch. HAWQ enables low-precision and mixed-precision uniform

![[BMVC'21] Official PyTorch Implementation of Grounded Situation Recognition with Transformers](https://user-images.githubusercontent.com/55849968/135632419-e5a10fb9-ec2c-465c-8b9b-4d0c6f69b5b4.png)

[BMVC'21] Official PyTorch Implementation of Grounded Situation Recognition with Transformers

Grounded Situation Recognition with Transformers Paper | Model Checkpoint This is the official PyTorch implementation of Grounded Situation Recognitio

Official repository for "Restormer: Efficient Transformer for High-Resolution Image Restoration". SOTA for motion deblurring, image deraining, denoising (Gaussian/real data), and defocus deblurring.

Restormer: Efficient Transformer for High-Resolution Image Restoration Syed Waqas Zamir, Aditya Arora, Salman Khan, Munawar Hayat, Fahad Shahbaz Khan,

Implementation EfficientDet: Scalable and Efficient Object Detection in PyTorch

Implementation EfficientDet: Scalable and Efficient Object Detection in PyTorch

This repository includes the official project for the paper: TransMix: Attend to Mix for Vision Transformers.

TransMix: Attend to Mix for Vision Transformers This repository includes the official project for the paper: TransMix: Attend to Mix for Vision Transf

Official repository for "Restormer: Efficient Transformer for High-Resolution Image Restoration". SOTA results for single-image motion deblurring, image deraining, image denoising (synthetic and real data), and dual-pixel defocus deblurring.

Restormer: Efficient Transformer for High-Resolution Image Restoration Syed Waqas Zamir, Aditya Arora, Salman Khan, Munawar Hayat, Fahad Shahbaz Khan,

This repository includes the official project for the paper: TransMix: Attend to Mix for Vision Transformers.

TransMix: Attend to Mix for Vision Transformers This repository includes the official project for the paper: TransMix: Attend to Mix for Vision Transf

![[BMVC'21] Official PyTorch Implementation of Grounded Situation Recognition with Transformers](https://user-images.githubusercontent.com/55849968/135632419-e5a10fb9-ec2c-465c-8b9b-4d0c6f69b5b4.png)

[BMVC'21] Official PyTorch Implementation of Grounded Situation Recognition with Transformers

Grounded Situation Recognition with Transformers Paper | Model Checkpoint This is the official PyTorch implementation of Grounded Situation Recognitio

Official source for spanish Language Models and resources made @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish Language Models 💃🏻 A repository part of the MarIA project. Corpora 📃 Corpora Number of documents Number of tokens Size (GB) BNE 201,080,084

InvTorch: memory-efficient models with invertible functions

InvTorch: Memory-Efficient Invertible Functions This module extends the functionality of torch.utils.checkpoint.checkpoint to work with invertible fun

EfficientDet (Scalable and Efficient Object Detection) implementation in Keras and Tensorflow

EfficientDet This is an implementation of EfficientDet for object detection on Keras and Tensorflow. The project is based on the official implementati

Powerful and efficient Computer Vision Annotation Tool (CVAT)

Computer Vision Annotation Tool (CVAT) CVAT is free, online, interactive video and image annotation tool for computer vision. It is being used by our

Non-Metric Space Library (NMSLIB): An efficient similarity search library and a toolkit for evaluation of k-NN methods for generic non-metric spaces.

Non-Metric Space Library (NMSLIB) Important Notes NMSLIB is generic but fast, see the results of ANN benchmarks. A standalone implementation of our fa

🛠️ Tools for Transformers compression using Lightning ⚡

Bert-squeeze is a repository aiming to provide code to reduce the size of Transformer-based models or decrease their latency at inference time.

PESTO: Switching Point based Dynamic and Relative Positional Encoding for Code-Mixed Languages

PESTO: Switching Point based Dynamic and Relative Positional Encoding for Code-Mixed Languages Abstract NLP applications for code-mixed (CM) or mix-li

Code for "Sparse Steerable Convolutions: An Efficient Learning of SE(3)-Equivariant Features for Estimation and Tracking of Object Poses in 3D Space"

Sparse Steerable Convolution (SS-Conv) Code for "Sparse Steerable Convolutions: An Efficient Learning of SE(3)-Equivariant Features for Estimation and

Code base for reproducing results of I.Schubert, D.Driess, O.Oguz, and M.Toussaint: Learning to Execute: Efficient Learning of Universal Plan-Conditioned Policies in Robotics. NeurIPS (2021)

Learning to Execute (L2E) Official code base for completely reproducing all results reported in I.Schubert, D.Driess, O.Oguz, and M.Toussaint: Learnin

KAPAO is an efficient multi-person human pose estimation model that detects keypoints and poses as objects and fuses the detections to predict human poses.

KAPAO (Keypoints and Poses as Objects) KAPAO is an efficient single-stage multi-person human pose estimation model that models keypoints and poses as

AOT (Associating Objects with Transformers) in PyTorch

An efficient modular implementation of Associating Objects with Transformers for Video Object Segmentation in PyTorch

Spectralformer: Rethinking hyperspectral image classification with transformers

The code in this toolbox implements the "Spectralformer: Rethinking hyperspectral image classification with transformers". More specifically, it is detailed as follow.

Implementation of Uniformer, a simple attention and 3d convolutional net that achieved SOTA in a number of video classification tasks

Uniformer - Pytorch Implementation of Uniformer, a simple attention and 3d convolutional net that achieved SOTA in a number of video classification ta

ESPNet: Efficient Spatial Pyramid of Dilated Convolutions for Semantic Segmentation

ESPNet: Efficient Spatial Pyramid of Dilated Convolutions for Semantic Segmentation This repository contains the source code of our paper, ESPNet (acc

Transformers and related deep network architectures are summarized and implemented here.

Transformers: from NLP to CV This is a practical introduction to Transformers from Natural Language Processing (NLP) to Computer Vision (CV) Introduct

Boundary-aware Transformers for Skin Lesion Segmentation

Boundary-aware Transformers for Skin Lesion Segmentation Introduction This is an official release of the paper Boundary-aware Transformers for Skin Le

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

ML for NLP and Computer Vision.

Sparrow is our open-source ML product. It runs on Skipper MLOps infrastructure.

Partially offline multi-language translator built upon Huggingface transformers.

Translate Command-line interface to translation pipelines, powered by Huggingface transformers. This tool can download translation models, and then us

Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning using 🤗 transformers

hierarchical-transformer-1d Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning using 🤗 transformers In Progress!! 2021.

Efficient and intelligent interactive segmentation annotation software

Efficient and intelligent interactive segmentation annotation software

An efficient implementation of GPNN

Efficient-GPNN An efficient implementation of GPNN as depicted in "Drop the GAN: In Defense of Patches Nearest Neighbors as Single Image Generative Mo

Powerful, efficient particle trajectory analysis in scientific Python.

freud Overview The freud Python library provides a simple, flexible, powerful set of tools for analyzing trajectories obtained from molecular dynamics

Computationally efficient algorithm that identifies boundary points of a point cloud.

BoundaryTest Included are MATLAB and Python packages, each of which implement efficient algorithms for boundary detection and normal vector estimation

Tandem Mass Spectrum Prediction with Graph Transformers

MassFormer This is the original implementation of MassFormer, a graph transformer for small molecule MS/MS prediction. Check out the preprint on arxiv

AI-UPV at IberLEF-2021 DETOXIS task: Toxicity Detection in Immigration-Related Web News Comments Using Transformers and Statistical Models

AI-UPV at IberLEF-2021 DETOXIS task: Toxicity Detection in Immigration-Related Web News Comments Using Transformers and Statistical Models Description

Certified Patch Robustness via Smoothed Vision Transformers

Certified Patch Robustness via Smoothed Vision Transformers This repository contains the code for replicating the results of our paper: Certified Patc

NLP From Scratch Without Large-Scale Pretraining: A Simple and Efficient Framework

NLP From Scratch Without Large-Scale Pretraining This repository contains the code, pre-trained model checkpoints and curated datasets for our paper:

Efficient electromagnetic solver based on rigorous coupled-wave analysis for 3D and 2D multi-layered structures with in-plane periodicity

Efficient electromagnetic solver based on rigorous coupled-wave analysis for 3D and 2D multi-layered structures with in-plane periodicity, such as gratings, photonic-crystal slabs, metasurfaces, surface-emitting lasers, nano-antennas, and more.

Implementation of Hourglass Transformer, in Pytorch, from Google and OpenAI

Hourglass Transformer - Pytorch (wip) Implementation of Hourglass Transformer, in Pytorch. It will also contain some of my own ideas about how to make

🦅 Pretrained BigBird Model for Korean (up to 4096 tokens)

Pretrained BigBird Model for Korean What is BigBird • How to Use • Pretraining • Evaluation Result • Docs • Citation 한국어 | English What is BigBird? Bi