47 Repositories

Python explainability-requires-interactivity Libraries

Responsible AI Workshop: a series of tutorials & walkthroughs to illustrate how put responsible AI into practice

Responsible AI Workshop Responsible innovation is top of mind. As such, the tech industry as well as a growing number of organizations of all kinds in

TimeSHAP explains Recurrent Neural Network predictions.

TimeSHAP TimeSHAP is a model-agnostic, recurrent explainer that builds upon KernelSHAP and extends it to the sequential domain. TimeSHAP computes even

Interactivity Lab: Household Pulse Explorable

Interactivity Lab: Household Pulse Explorable Goal: Build an interactive application that incorporates fundamental Streamlit components to offer a cur

PressurePlate is a multi-agent environment that requires agents to cooperate during the traversal of a gridworld.

PressurePlate is a multi-agent environment that requires agents to cooperate during the traversal of a gridworld. The grid is partitioned into several rooms, and each room contains a plate and a closed doorway.

This component provides a wrapper to display SHAP plots in Streamlit.

streamlit-shap This component provides a wrapper to display SHAP plots in Streamlit.

Which Style Makes Me Attractive? Interpretable Control Discovery and Counterfactual Explanation on StyleGAN

Interpretable Control Exploration and Counterfactual Explanation (ICE) on StyleGAN Which Style Makes Me Attractive? Interpretable Control Discovery an

This repository holds those infrastructure-level modules, that every application requires that follows the core 12-factor principles.

py-12f-common About This repository holds those infrastructure-level modules, that every application requires that follows the core 12-factor principl

It's the assignment 1 from the Python 2 course, that requires a ToDoApp with authentication using Django

It's the assignment 1 from the Python 2 course, that requires a ToDoApp with authentication using Django

A curated list of awesome open source libraries to deploy, monitor, version and scale your machine learning

Awesome production machine learning This repository contains a curated list of awesome open source libraries that will help you deploy, monitor, versi

🧮 Matrix Factorization for Collaborative Filtering is just Solving an Adjoint Latent Dirichlet Allocation Model after All

Accompanying source code to the paper "Matrix Factorization for Collaborative Filtering is just Solving an Adjoint Latent Dirichlet Allocation Model A

Experiments for Fake News explainability project

fake-news-explainability Experiments for fake news explainability project This repository only contains the notebooks used to train the models and eva

🔅 Shapash makes Machine Learning models transparent and understandable by everyone

🎉 What's new ? Version New Feature Description Tutorial 1.6.x Explainability Quality Metrics To help increase confidence in explainability methods, y

Good Semi-Supervised Learning That Requires a Bad GAN

Good Semi-Supervised Learning that Requires a Bad GAN This is the code we used in our paper Good Semi-supervised Learning that Requires a Bad GAN Ziha

Coerce authentication from Windows hosts via MS-FSRVP (Requires FS-VSS-AGENT service running on host)

VSSTrigger Coerce authentication from Windows hosts via MS-FSRVP (Requires FS-VS

AAAI 2022 paper - Unifying Model Explainability and Robustness for Joint Text Classification and Rationale Extraction

AT-BMC Unifying Model Explainability and Robustness for Joint Text Classification and Rationale Extraction (AAAI 2022) Paper Prerequisites Install pac

Code for 2021 NeurIPS --- Towards Multi-Grained Explainability for Graph Neural Networks

ReFine: Multi-Grained Explainability for GNNs This is the official code for Towards Multi-Grained Explainability for Graph Neural Networks (NeurIPS 20

Explainability of the Implications of Supervised and Unsupervised Face Image Quality Estimations Through Activation Map Variation Analyses in Face Recognition Models

Explainable_FIQA_WITH_AMVA Note This is the official repository of the paper: Explainability of the Implications of Supervised and Unsupervised Face I

A community based economy bot with python works only with python 3.7.8 as web3 requires cytoolz

A community based economy bot with python works only with python 3.7.8 as web3 requires cytoolz has some issues building with python 3.10

Papers about explainability of GNNs

Papers about explainability of GNNs

Code for 2021 NeurIPS --- Towards Multi-Grained Explainability for Graph Neural Networks

ReFine: Multi-Grained Explainability for GNNs We are trying hard to update the code, but it may take a while to complete due to our tight schedule rec

Rule Extraction Methods for Interactive eXplainability

REMIX: Rule Extraction Methods for Interactive eXplainability This repository contains a variety of tools and methods for extracting interpretable rul

The code of NeurIPS 2021 paper "Scalable Rule-Based Representation Learning for Interpretable Classification".

Rule-based Representation Learner This is a PyTorch implementation of Rule-based Representation Learner (RRL) as described in NeurIPS 2021 paper: Scal

XAI - An eXplainability toolbox for machine learning

XAI - An eXplainability toolbox for machine learning XAI is a Machine Learning library that is designed with AI explainability in its core. XAI contai

Fit interpretable models. Explain blackbox machine learning.

InterpretML - Alpha Release In the beginning machines learned in darkness, and data scientists struggled in the void to explain them. Let there be lig

A suite of useful tools based on 3D interactivity in napari

napari-threedee A suite of useful tools based on 3D interactivity in napari This napari plugin was generated with Cookiecutter using @napari's cookiec

ACV is a python library that provides explanations for any machine learning model or data.

ACV is a python library that provides explanations for any machine learning model or data. It gives local rule-based explanations for any model or data and different Shapley Values for tree-based models.

Adaptive, interpretable wavelets across domains (NeurIPS 2021)

Adaptive wavelets Wavelets which adapt given data (and optionally a pre-trained model). This yields models which are faster, more compressible, and mo

This repository requires you to solve a problem by writing some basic python code.

Can You Solve a Problem? A beginner friendly repository that requires you to solve familiar problems with python. This could be as simple as implement

Updated for TTS(CE) = Also Known as TTN V3. The code requires the first server to be 'ttn' protocol.

Updated Updated for TTS(CE) = Also Known as TTN V3. The code requires the first server to be 'ttn' protocol. Introduction This balenaCloud (previously

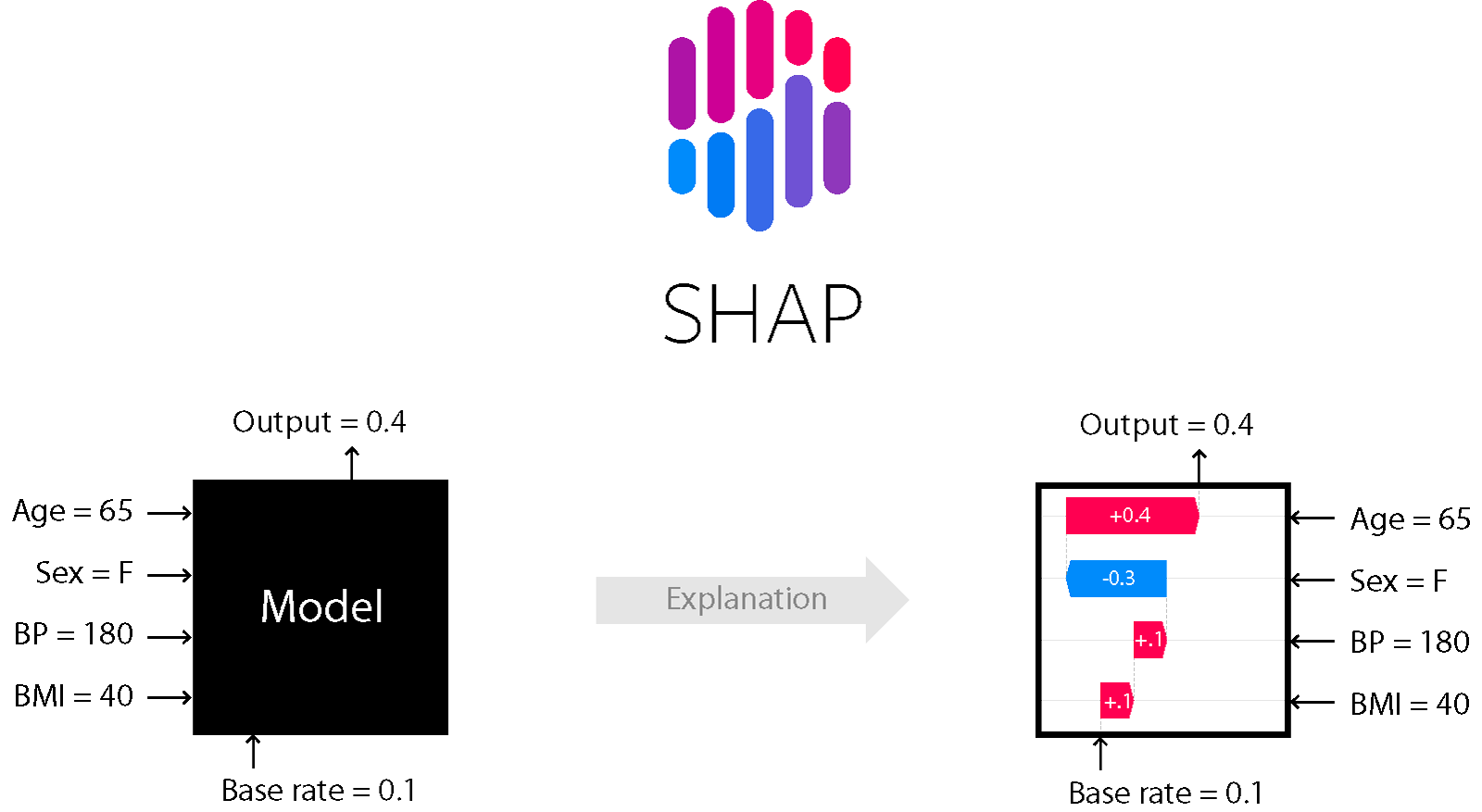

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo

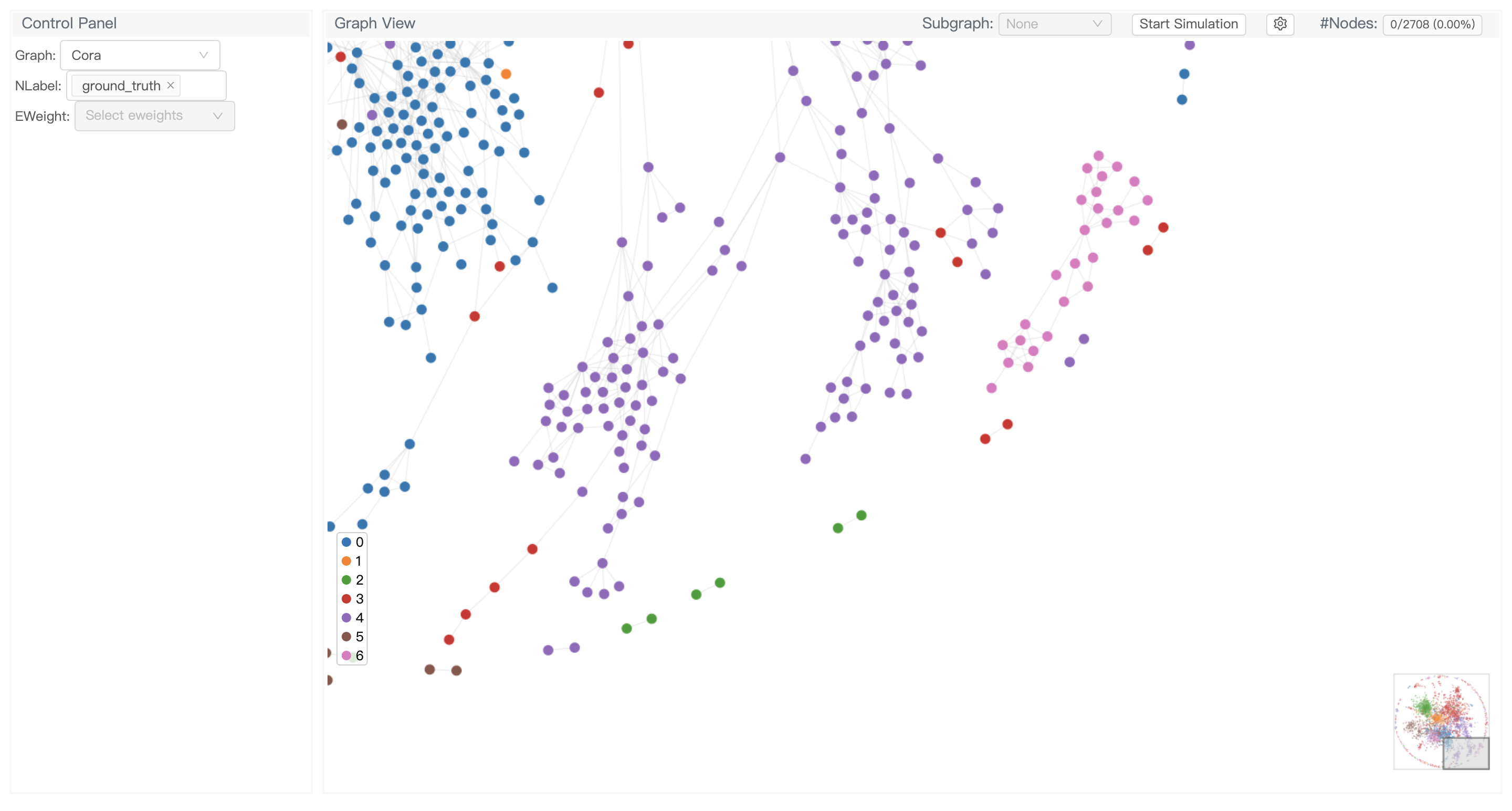

GNNLens2 is an interactive visualization tool for graph neural networks (GNN).

GNNLens2 is an interactive visualization tool for graph neural networks (GNN).

Blue noise image stippling in Processing (requires GPU)

Blue noise image stippling in Processing (requires GPU)

👋🦊 Xplique is a Python toolkit dedicated to explainability, currently based on Tensorflow.

👋🦊 Xplique is a Python toolkit dedicated to explainability, currently based on Tensorflow.

Using / reproducing ACD from the paper "Hierarchical interpretations for neural network predictions" 🧠 (ICLR 2019)

Hierarchical neural-net interpretations (ACD) 🧠 Produces hierarchical interpretations for a single prediction made by a pytorch neural network. Offic

Making decision trees competitive with neural networks on CIFAR10, CIFAR100, TinyImagenet200, Imagenet

Neural-Backed Decision Trees · Site · Paper · Blog · Video Alvin Wan, *Lisa Dunlap, *Daniel Ho, Jihan Yin, Scott Lee, Henry Jin, Suzanne Petryk, Sarah

Visualization toolkit for neural networks in PyTorch! Demo --

FlashTorch A Python visualization toolkit, built with PyTorch, for neural networks in PyTorch. Neural networks are often described as "black box". The

Werkzeug has a debug console that requires a pin. It's possible to bypass this with an LFI vulnerability or use it as a local privilege escalation vector.

Werkzeug Debug Console Pin Bypass Werkzeug has a debug console that requires a pin by default. It's possible to bypass this with an LFI vulnerability

Code to reproduce experiments in the paper "Explainability Requires Interactivity".

Explainability Requires Interactivity This repository contains the code to train all custom models used in the paper Explainability Requires Interacti

easyopt is a super simple yet super powerful optuna-based Hyperparameters Optimization Framework that requires no coding.

easyopt is a super simple yet super powerful optuna-based Hyperparameters Optimization Framework that requires no coding.

Collection of NLP model explanations and accompanying analysis tools

Thermostat is a large collection of NLP model explanations and accompanying analysis tools. Combines explainability methods from the captum library wi

CARLA: A Python Library to Benchmark Algorithmic Recourse and Counterfactual Explanation Algorithms

CARLA - Counterfactual And Recourse Library CARLA is a python library to benchmark counterfactual explanation and recourse models. It comes out-of-the

A collection of research papers and software related to explainability in graph machine learning.

A collection of research papers and software related to explainability in graph machine learning.

Visualization toolkit for neural networks in PyTorch! Demo --

FlashTorch A Python visualization toolkit, built with PyTorch, for neural networks in PyTorch. Neural networks are often described as "black box". The

Official PyTorch implementation for Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers, a novel method to visualize any Transformer-based network. Including examples for DETR, VQA.

PyTorch Implementation of Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers 1 Using Colab Please notic

Interpretability and explainability of data and machine learning models

AI Explainability 360 (v0.2.1) The AI Explainability 360 toolkit is an open-source library that supports interpretability and explainability of datase

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo

Explainability for Vision Transformers (in PyTorch)

Explainability for Vision Transformers (in PyTorch) This repository implements methods for explainability in Vision Transformers