22 Repositories

Python intel-pmu Libraries

🏎️ Accelerate training and inference of 🤗 Transformers with easy to use hardware optimization tools

Hugging Face Optimum 🤗 Optimum is an extension of 🤗 Transformers, providing a set of performance optimization tools enabling maximum efficiency to t

Semi-automated OpenVINO benchmark_app with variable parameters

Semi-automated OpenVINO benchmark_app with variable parameters. User can specify multiple options for any parameters in the benchmark_app and the progam runs the benchmark with all combinations of given options.

Final Project for the Intel AI Readiness Boot Camp NLP (Jan)

NLP Boot Camp (Jan) Synopsis Full Name: Prameya Mohanty Name of your School: Delhi Public School, Rourkela Class: VIII Title of the Project: iTransect

Intel® Neural Compressor is an open-source Python library running on Intel CPUs and GPUs

Intel® Neural Compressor targeting to provide unified APIs for network compression technologies, such as low precision quantization, sparsity, pruning, knowledge distillation, across different deep learning frameworks to pursue optimal inference performance.

Python wrapper class for OpenVINO Model Server. User can submit inference request to OVMS with just a few lines of code

Python wrapper class for OpenVINO Model Server. User can submit inference request to OVMS with just a few lines of code.

Me cleaner - Tool for partial deblobbing of Intel ME/TXE firmware images

me_cleaner me_cleaner is a Python script able to modify an Intel ME firmware image with the final purpose of reducing its ability to interact with the

Threat Intel Platform for T-POTs

T-Pot 20.06 runs on Debian (Stable), is based heavily on docker, docker-compose

Threat Intel Platform for T-POTs

GreedyBear The project goal is to extract data of the attacks detected by a TPOT or a cluster of them and to generate some feeds that can be used to p

High performance Cross-platform Inference-engine, you could run Anakin on x86-cpu,arm, nv-gpu, amd-gpu,bitmain and cambricon devices.

Anakin2.0 Welcome to the Anakin GitHub. Anakin is a cross-platform, high-performance inference engine, which is originally developed by Baidu engineer

Intel(R) Extension for Scikit-learn is a seamless way to speed up your Scikit-learn application

Intel(R) Extension for Scikit-learn* Installation | Documentation | Examples | Support | FAQ With Intel(R) Extension for Scikit-learn you can accelera

Multiple types of NN model optimization environments. It is possible to directly access the host PC GUI and the camera to verify the operation. Intel iHD GPU (iGPU) support. NVIDIA GPU (dGPU) support.

mtomo Multiple types of NN model optimization environments. It is possible to directly access the host PC GUI and the camera to verify the operation.

A Python software implementation of the Intel 4004 processor

Pyntel4004 A Python software implementation of the Intel 4004 processor. General Information Two pass assembler using the original mnemonics, directiv

⚡️ Get notified as soon as your next CPU, GPU, or game console is in stock

Inventory Hunter This bot helped me snag an RTX 3070... hopefully it will help you get your hands on your next CPU, GPU, or game console. Requirements

NCNN implementation of Real-ESRGAN. Real-ESRGAN aims at developing Practical Algorithms for General Image Restoration.

NCNN implementation of Real-ESRGAN. Real-ESRGAN aims at developing Practical Algorithms for General Image Restoration.

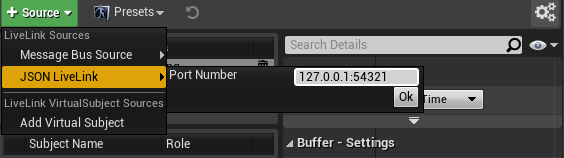

Intel Realsense t265 into Unreal Engine

t265_UE Intel Realsense t265 into Unreal Engine. Windows only, and Livelink plugin is 4.26.2 only at the moment. Might recompile it for different vers

PoC getting concret intel with chardet and charset-normalizer

aiohttp with charset-normalizer Context aiohttp.TCPConnector(limit=16) alpine linux nginx 1.21 python 3.9 aiohttp dev-master chardet 4.0.0 (aiohttp-ch

Code for the USENIX 2017 paper: kAFL: Hardware-Assisted Feedback Fuzzing for OS Kernels

kAFL: Hardware-Assisted Feedback Fuzzing for OS Kernels Blazing fast x86-64 VM kernel fuzzing framework with performant VM reloads for Linux, MacOS an

PerfSpect is a system performance characterization tool based on linux perf targeting Intel microarchitectures

PerfSpect PerfSpect is a system performance characterization tool based on linux perf targeting Intel microarchitectures. The tool has two parts perf

Demonstrates how to divide a DL model into multiple IR model files (division) and introduce a simplest way to implement a custom layer works with OpenVINO IR models.

Demonstration of OpenVINO techniques - Model-division and a simplest-way to support custom layers Description: Model Optimizer in Intel(r) OpenVINO(tm

Intel® Nervana™ reference deep learning framework committed to best performance on all hardware

DISCONTINUATION OF PROJECT. This project will no longer be maintained by Intel. Intel will not provide or guarantee development of or support for this

Reinforcement Learning Coach by Intel AI Lab enables easy experimentation with state of the art Reinforcement Learning algorithms

Coach Coach is a python reinforcement learning framework containing implementation of many state-of-the-art algorithms. It exposes a set of easy-to-us

Intel® Nervana™ reference deep learning framework committed to best performance on all hardware

DISCONTINUATION OF PROJECT. This project will no longer be maintained by Intel. Intel will not provide or guarantee development of or support for this