3844 Repositories

Python pytorch-caffe-models Libraries

scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms.

Sklearn-genetic-opt scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms. This is meant to be an alternativ

Automatically remove the mosaics in images and videos, or add mosaics to them.

Automatically remove the mosaics in images and videos, or add mosaics to them.

Almost State-of-the-art Text Generation library

Ps: we are adding transformer model soon Text Gen 🐐 Almost State-of-the-art Text Generation library Text gen is a python library that allow you build

Official code repository for "Exploring Neural Models for Query-Focused Summarization"

Query-Focused Summarization Official code repository for "Exploring Neural Models for Query-Focused Summarization" This is a work in progress. Expect

Lex Rosetta: Transfer of Predictive Models Across Languages, Jurisdictions, and Legal Domains

Lex Rosetta: Transfer of Predictive Models Across Languages, Jurisdictions, and Legal Domains This is an accompanying repository to the ICAIL 2021 pap

"Learning and Analyzing Generation Order for Undirected Sequence Models" in Findings of EMNLP, 2021

undirected-generation-dev This repo contains the source code of the models described in the following paper "Learning and Analyzing Generation Order f

Pytorch implementation of our paper under review -- 1xN Pattern for Pruning Convolutional Neural Networks

1xN Pattern for Pruning Convolutional Neural Networks (paper) . This is Pytorch re-implementation of "1xN Pattern for Pruning Convolutional Neural Net

Code of TIP2021 Paper《SFace: Sigmoid-Constrained Hypersphere Loss for Robust Face Recognition》. We provide both MxNet and Pytorch versions.

SFace Code of TIP2021 Paper 《SFace: Sigmoid-Constrained Hypersphere Loss for Robust Face Recognition》. We provide both MxNet, PyTorch and Jittor versi

AdvStyle - Official PyTorch Implementation

AdvStyle - Official PyTorch Implementation Paper | Supp Discovering Interpretable Latent Space Directions of GANs Beyond Binary Attributes. Huiting Ya

Ensembling Off-the-shelf Models for GAN Training

Vision-aided GAN video (3m) | website | paper Can the collective knowledge from a large bank of pretrained vision models be leveraged to improve GAN t

[AAAI 2022] Negative Sample Matters: A Renaissance of Metric Learning for Temporal Grounding

[AAAI 2022] Negative Sample Matters: A Renaissance of Metric Learning for Temporal Grounding Official Pytorch implementation of Negative Sample Matter

Reference code for the paper "Cross-Camera Convolutional Color Constancy" (ICCV 2021)

Cross-Camera Convolutional Color Constancy, ICCV 2021 (Oral) Mahmoud Afifi1,2, Jonathan T. Barron2, Chloe LeGendre2, Yun-Ta Tsai2, and Francois Bleibe

This is the official PyTorch implementation of the CVPR 2020 paper "TransMoMo: Invariance-Driven Unsupervised Video Motion Retargeting".

TransMoMo: Invariance-Driven Unsupervised Video Motion Retargeting Project Page | YouTube | Paper This is the official PyTorch implementation of the C

PyTorch implementation of our ICCV 2019 paper: Liquid Warping GAN: A Unified Framework for Human Motion Imitation, Appearance Transfer and Novel View Synthesis

Impersonator PyTorch implementation of our ICCV 2019 paper: Liquid Warping GAN: A Unified Framework for Human Motion Imitation, Appearance Transfer an

PyTorch implementation for our paper Learning Character-Agnostic Motion for Motion Retargeting in 2D, SIGGRAPH 2019

Learning Character-Agnostic Motion for Motion Retargeting in 2D We provide PyTorch implementation for our paper Learning Character-Agnostic Motion for

Code for paper Multitask-Finetuning of Zero-shot Vision-Language Models

Code for paper Multitask-Finetuning of Zero-shot Vision-Language Models

User-friendly Voice Cloning Application

Multi-Language-RTVC stands for Multi-Language Real Time Voice Cloning and is a Voice Cloning Tool capable of transfering speaker-specific audio featur

Ensembling Off-the-shelf Models for GAN Training

Data-Efficient GANs with DiffAugment project | paper | datasets | video | slides Generated using only 100 images of Obama, grumpy cats, pandas, the Br

Chinese Named Entity Recognization (BiLSTM with PyTorch)

BiLSTM-CRF for Name Entity Recognition PyTorch version A PyTorch implemention of Bi-LSTM-CRF model for Chinese Named Entity Recognition. 使用 PyTorch 实现

Pytorch modules for paralel models with same architecture. Ideal for multi agent-based systems

WideLinears Pytorch parallel Neural Networks A package of pytorch modules for fast paralellization of separate deep neural networks. Ideal for agent-b

Fit models to your data in Python with Sherpa.

Table of Contents Sherpa License How To Install Sherpa Using Anaconda Using pip Building from source History Release History Sherpa Sherpa is a modeli

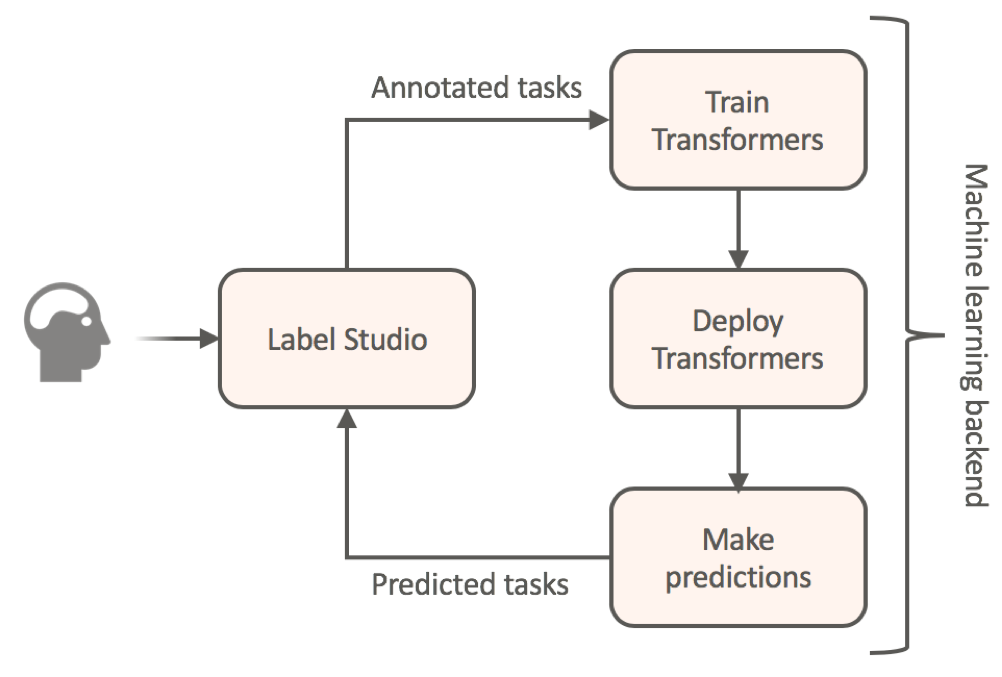

Label data using HuggingFace's transformers and automatically get a prediction service

Label Studio for Hugging Face's Transformers Website • Docs • Twitter • Join Slack Community Transfer learning for NLP models by annotating your textu

Using pytorch to implement unet network for liver image segmentation.

Using pytorch to implement unet network for liver image segmentation.

A Transformer-Based Feature Segmentation and Region Alignment Method For UAV-View Geo-Localization

University1652-Baseline [Paper] [Slide] [Explore Drone-view Data] [Explore Satellite-view Data] [Explore Street-view Data] [Video Sample] [中文介绍] This

UniSpeech - Large Scale Self-Supervised Learning for Speech

UniSpeech The family of UniSpeech: WavLM (arXiv): WavLM: Large-Scale Self-Supervised Pre-training for Full Stack Speech Processing UniSpeech (ICML 202

This repository contains the code, models and datasets discussed in our paper "Few-Shot Question Answering by Pretraining Span Selection"

Splinter This repository contains the code, models and datasets discussed in our paper "Few-Shot Question Answering by Pretraining Span Selection", to

Official PyTorch implementation of SegFormer

SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers Figure 1: Performance of SegFormer-B0 to SegFormer-B5. Project page

ByT5: Towards a token-free future with pre-trained byte-to-byte models

ByT5: Towards a token-free future with pre-trained byte-to-byte models ByT5 is a tokenizer-free extension of the mT5 model. Instead of using a subword

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

FastFormers - highly efficient transformer models for NLU

FastFormers FastFormers provides a set of recipes and methods to achieve highly efficient inference of Transformer models for Natural Language Underst

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models

PEGASUS library Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models, or PEGASUS, uses self-supervised

Fine-tuning scripts for evaluating transformer-based models on KLEJ benchmark.

The KLEJ Benchmark Baselines The KLEJ benchmark (Kompleksowa Lista Ewaluacji Językowych) is a set of nine evaluation tasks for the Polish language und

Dense Passage Retriever - is a set of tools and models for open domain Q&A task.

Dense Passage Retrieval Dense Passage Retrieval (DPR) - is a set of tools and models for state-of-the-art open-domain Q&A research. It is based on the

Large-scale pretraining for dialogue

A State-of-the-Art Large-scale Pretrained Response Generation Model (DialoGPT) This repository contains the source code and trained model for a large-

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

Fully featured implementation of Routing Transformer

Routing Transformer A fully featured implementation of Routing Transformer. The paper proposes using k-means to route similar queries / keys into the

This repository contains the code for running the character-level Sandwich Transformers from our ACL 2020 paper on Improving Transformer Models by Reordering their Sublayers.

Improving Transformer Models by Reordering their Sublayers This repository contains the code for running the character-level Sandwich Transformers fro

Code for the paper "Language Models are Unsupervised Multitask Learners"

Status: Archive (code is provided as-is, no updates expected) gpt-2 Code and models from the paper "Language Models are Unsupervised Multitask Learner

Library of deep learning models and datasets designed to make deep learning more accessible and accelerate ML research.

Tensor2Tensor Tensor2Tensor, or T2T for short, is a library of deep learning models and datasets designed to make deep learning more accessible and ac

An implementation of model parallel GPT-2 and GPT-3-style models using the mesh-tensorflow library.

GPT Neo 🎉 1T or bust my dudes 🎉 An implementation of model & data parallel GPT3-like models using the mesh-tensorflow library. If you're just here t

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

Code for Crowd counting via unsupervised cross-domain feature adaptation.

CDFA-pytorch Code for Unsupervised crowd counting via cross-domain feature adaptation. Pre-trained models Google Drive Baidu Cloud : t4qc Environment

Using VapourSynth with super resolution models and speeding them up with TensorRT.

VSGAN-tensorrt-docker Using image super resolution models with vapoursynth and speeding them up with TensorRT. Using NVIDIA/Torch-TensorRT combined wi

Reproduce partial features of DeePMD-kit using PyTorch.

DeePMD-kit on PyTorch For better understand DeePMD-kit, we implement its partial features using PyTorch and expose interface consuing descriptors. Tec

Pytorch implementation of the paper "Class-Balanced Loss Based on Effective Number of Samples"

Class-balanced-loss-pytorch Pytorch implementation of the paper Class-Balanced Loss Based on Effective Number of Samples presented at CVPR'19. Yin Cui

Pytorch codes for Feature Transfer Learning for Face Recognition with Under-Represented Data

FTLNet_Pytorch Pytorch codes for Feature Transfer Learning for Face Recognition with Under-Represented Data 1. Introduction This repo is an unofficial

PyTorch Implementation of the paper Learning to Reweight Examples for Robust Deep Learning

Learning to Reweight Examples for Robust Deep Learning Unofficial PyTorch implementation of Learning to Reweight Examples for Robust Deep Learning. Th

A Pytorch reproduction of Range Loss, which is proposed in paper 《Range Loss for Deep Face Recognition with Long-Tailed Training Data》

RangeLoss Pytorch This is a Pytorch reproduction of Range Loss, which is proposed in paper 《Range Loss for Deep Face Recognition with Long-Tailed Trai

Face and Body Tracking for VRM 3D models on the web.

Kalidoface 3D - Face and Full-Body tracking for Vtubing on the web! A sequal to Kalidoface which supports Live2D avatars, Kalidoface 3D is a web app t

Learn the Deep Learning for Computer Vision in three steps: theory from base to SotA, code in PyTorch, and space-repetition with Anki

DeepCourse: Deep Learning for Computer Vision arthurdouillard.com/deepcourse/ This is a course I'm giving to the French engineering school EPITA each

💃 VALSE: A Task-Independent Benchmark for Vision and Language Models Centered on Linguistic Phenomena

💃 VALSE: A Task-Independent Benchmark for Vision and Language Models Centered on Linguistic Phenomena.

A foreign language learning aid using a neural network to predict probability of translating foreign words

Langy Langy is a reading-focused foreign language learning aid orientated towards young children. Reading is an activity that every child knows. It is

Formulae is a Python library that implements Wilkinson's formulas for mixed-effects models.

formulae formulae is a Python library that implements Wilkinson's formulas for mixed-effects models. The main difference with other implementations li

A basic implementation of Layer-wise Relevance Propagation (LRP) in PyTorch.

Layer-wise Relevance Propagation (LRP) in PyTorch Basic unsupervised implementation of Layer-wise Relevance Propagation (Bach et al., Montavon et al.)

Convert onnx models to pytorch.

onnx2torch onnx2torch is an ONNX to PyTorch converter. Our converter: Is easy to use – Convert the ONNX model with the function call convert; Is easy

TRACER: Extreme Attention Guided Salient Object Tracing Network implementation in PyTorch

TRACER: Extreme Attention Guided Salient Object Tracing Network This paper was accepted at AAAI 2022 SA poster session. Datasets All datasets are avai

A PaddlePaddle version of Neural Renderer, refer to its PyTorch version

Neural 3D Mesh Renderer in PadddlePaddle A PaddlePaddle version of Neural Renderer, refer to its PyTorch version Install Run: pip install neural-rende

Robbing the FED: Directly Obtaining Private Data in Federated Learning with Modified Models

Robbing the FED: Directly Obtaining Private Data in Federated Learning with Modified Models This repo contains a barebones implementation for the atta

Official Pytorch implementation for Deep Contextual Video Compression, NeurIPS 2021

Introduction Official Pytorch implementation for Deep Contextual Video Compression, NeurIPS 2021 Prerequisites Python 3.8 and conda, get Conda CUDA 11

The python SDK for Eto, the AI focused data platform for teams bringing AI models to production

Eto Labs Python SDK This is the python SDK for Eto, the AI focused data platform for teams bringing AI models to production. The python SDK makes it e

Pytorch implementation NORESQA A Framework for Speech Quality Assessment using Non-Matching References.

NORESQA: Speech Quality Assessment using Non-Matching References This is a Pytorch implementation for using NORESQA. It contains minimal code to predi

Code for Temporally Abstract Partial Models

Code for Temporally Abstract Partial Models Accompanies the code for the experimental section of the paper: Temporally Abstract Partial Models, Khetar

Code for WECHSEL: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models.

WECHSEL Code for WECHSEL: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models. arXiv: https://arx

Library to enable Bayesian active learning in your research or labeling work.

Bayesian Active Learning (BaaL) BaaL is an active learning library developed at ElementAI. This repository contains techniques and reusable components

Experiments on continual learning from a stream of pretrained models.

Ex-model CL Ex-model continual learning is a setting where a stream of experts (i.e. model's parameters) is available and a CL model learns from them

Discovering Explanatory Sentences in Legal Case Decisions Using Pre-trained Language Models.

Statutory Interpretation Data Set This repository contains the data set created for the following research papers: Savelka, Jaromir, and Kevin D. Ashl

A configurable, tunable, and reproducible library for CTR prediction

FuxiCTR This repo is the community dev version of the official release at huawei-noah/benchmark/FuxiCTR. Click-through rate (CTR) prediction is an cri

AI4Good project for detecting waste in the environment

Detect waste AI4Good project for detecting waste in environment. www.detectwaste.ml. Our latest results were published in Waste Management journal in

AdamW optimizer for bfloat16 models in pytorch.

Image source AdamW optimizer for bfloat16 models in pytorch. Bfloat16 is currently an optimal tradeoff between range and relative error for deep netwo

Code for WECHSEL: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models.

WECHSEL Code for WECHSEL: Effective initialization of subword embeddings for cross-lingual transfer of monolingual language models. arXiv: https://arx

This is a graphql api build using ariadne python that serves a graphql-endpoint at port 3002 to perform language translation and identification using deep learning in python pytorch.

Language Translation and Identification this machine/deep learning api that will be served as a graphql-api using ariadne, to perform the following ta

MLReef is an open source ML-Ops platform that helps you collaborate, reproduce and share your Machine Learning work with thousands of other users.

The collaboration platform for Machine Learning MLReef is an open source ML-Ops platform that helps you collaborate, reproduce and share your Machine

PyGAD, a Python 3 library for building the genetic algorithm and training machine learning algorithms (Keras & PyTorch).

PyGAD: Genetic Algorithm in Python PyGAD is an open-source easy-to-use Python 3 library for building the genetic algorithm and optimizing machine lear

A python package simulating the quasi-2D pseudospin-1/2 Gross-Pitaevskii equation with NVIDIA GPU acceleration.

A python package simulating the quasi-2D pseudospin-1/2 Gross-Pitaevskii equation with NVIDIA GPU acceleration. Introduction spinor-gpe is high-level,

Hypernetwork-Ensemble Learning of Segmentation Probability for Medical Image Segmentation with Ambiguous Labels

Hypernet-Ensemble Learning of Segmentation Probability for Medical Image Segmentation with Ambiguous Labels The implementation of Hypernet-Ensemble Le

CR-FIQA: Face Image Quality Assessment by Learning Sample Relative Classifiability

This is the official repository of the paper: CR-FIQA: Face Image Quality Assessment by Learning Sample Relative Classifiability A private copy of the

Integrate GraphQL with your Pydantic models

graphene-pydantic A Pydantic integration for Graphene. Installation pip install "graphene-pydantic" Examples Here is a simple Pydantic model: import u

PyG (PyTorch Geometric) - A library built upon PyTorch to easily write and train Graph Neural Networks (GNNs)

PyG (PyTorch Geometric) is a library built upon PyTorch to easily write and train Graph Neural Networks (GNNs) for a wide range of applications related to structured data.

Using image super resolution models with vapoursynth and speeding them up with TensorRT

vs-RealEsrganAnime-tensorrt-docker Using image super resolution models with vapoursynth and speeding them up with TensorRT. Also a docker image since

Unofficial PyTorch Implementation of AHDRNet (CVPR 2019)

AHDRNet-PyTorch This is the PyTorch implementation of Attention-guided Network for Ghost-free High Dynamic Range Imaging (CVPR 2019). The official cod

A PyTorch implementation of deep-learning-based registration

DiffuseMorph Implementation A PyTorch implementation of deep-learning-based registration. Requirements OS : Ubuntu / Windows Python 3.6 PyTorch 1.4.0

Code for paper: "Spinning Language Models for Propaganda-As-A-Service"

Spinning Language Models for Propaganda-As-A-Service This is the source code for the Arxiv version of the paper. You can use this Google Colab to expl

Official PyTorch implementation of "Preemptive Image Robustification for Protecting Users against Man-in-the-Middle Adversarial Attacks" (AAAI 2022)

Preemptive Image Robustification for Protecting Users against Man-in-the-Middle Adversarial Attacks This is the code for reproducing the results of th

Official PyTorch implementation of the paper "Graph-based Generative Face Anonymisation with Pose Preservation" in ICIAP 2021

Contents AnonyGAN Installation Dataset Preparation Generating Images Using Pretrained Model Train and Test New Models Evaluation Acknowledgments Citat

A FAIR dataset of TCV experimental results for validating edge/divertor turbulence models.

TCV-X21 validation for divertor turbulence simulations Quick links Intro Welcome to TCV-X21. We're glad you've found us! This repository is designed t

Pydantic models for pywttr and aiopywttr.

Pydantic models for pywttr and aiopywttr.

A minimal, standalone viewer for 3D animations stored as stop-motion sequences of individual .obj mesh files.

ObjSequenceViewer V0.5 A minimal, standalone viewer for 3D animations stored as stop-motion sequences of individual .obj mesh files. Installation: pip

PyTorch implementation of normalizing flow models

PyTorch implementation of normalizing flow models

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts

t5-japanese Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts. The following is a list of models that

A PyTorch library and evaluation platform for end-to-end compression research

CompressAI CompressAI (compress-ay) is a PyTorch library and evaluation platform for end-to-end compression research. CompressAI currently provides: c

PyAbsorp is a python module that has the main focus to help estimate the Sound Absorption Coefficient.

This is a package developed to be use to find the Sound Absorption Coefficient through some implemented models, like Biot-Allard, Johnson-Champoux and

SeqAttack: a framework for adversarial attacks on token classification models

A framework for adversarial attacks against token classification models

Mmdetection3d Noted - MMDetection3D is an open source object detection toolbox based on PyTorch

MMDetection3D is an open source object detection toolbox based on PyTorch

Users can free try their models on SIDD dataset based on this code

SIDD benchmark 1 Train python train.py If you want to train your network, just modify the yaml in the options folder. 2 Validation python validation.p

PyTorch implementation of a collections of scalable Video Transformer Benchmarks.

PyTorch implementation of Video Transformer Benchmarks This repository is mainly built upon Pytorch and Pytorch-Lightning. We wish to maintain a colle

Continuous Augmented Positional Embeddings (CAPE) implementation for PyTorch

PyTorch implementation of Continuous Augmented Positional Embeddings (CAPE), by Likhomanenko et al. Enhance your Transformer positional embeddings with easy-to-use augmentations!

A python code to convert Keras pre-trained weights to Pytorch version

Weights_Keras_2_Pytorch 最近想在Pytorch项目里使用一下谷歌的NIMA,但是发现没有预训练好的pytorch权重,于是整理了一下将Keras预训练权重转为Pytorch的代码,目前是支持Keras的Conv2D, Dense, DepthwiseConv2D, Batch

easyNeuron is a simple way to create powerful machine learning models, analyze data and research cutting-edge AI.

easyNeuron is a simple way to create powerful machine learning models, analyze data and research cutting-edge AI.