582 Repositories

Python sentence-transformers Libraries

A Structured Self-attentive Sentence Embedding

Structured Self-attentive sentence embeddings Implementation for the paper A Structured Self-Attentive Sentence Embedding, which was published in ICLR

Implementation of Multistream Transformers in Pytorch

Multistream Transformers Implementation of Multistream Transformers in Pytorch. This repository deviates slightly from the paper, where instead of usi

![[ICCV'21] PlaneTR: Structure-Guided Transformers for 3D Plane Recovery](https://github.com/IceTTTb/PlaneTR3D/raw/master/misc/network.jpg)

[ICCV'21] PlaneTR: Structure-Guided Transformers for 3D Plane Recovery

PlaneTR: Structure-Guided Transformers for 3D Plane Recovery This is the official implementation of our ICCV 2021 paper News There maybe some bugs in

REST API for sentence tokenization and embedding using Multilingual Universal Sentence Encoder.

MUSE stands for Multilingual Universal Sentence Encoder - multilingual extension (supports 16 languages) of Universal Sentence Encoder (USE).

Learned Token Pruning for Transformers

LTP: Learned Token Pruning for Transformers Check our paper for more details. Installation We follow the same installation procedure as the original H

Relative Positional Encoding for Transformers with Linear Complexity

Stochastic Positional Encoding (SPE) This is the source code repository for the ICML 2021 paper Relative Positional Encoding for Transformers with Lin

A PyTorch implementation of ViTGAN based on paper ViTGAN: Training GANs with Vision Transformers.

ViTGAN: Training GANs with Vision Transformers A PyTorch implementation of ViTGAN based on paper ViTGAN: Training GANs with Vision Transformers. Refer

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

RoBERTa Marathi Language model trained from scratch during huggingface 🤗 x flax community week

RoBERTa base model for Marathi Language (मराठी भाषा) Pretrained model on Marathi language using a masked language modeling (MLM) objective. RoBERTa wa

Official source for spanish Language Models and resources made @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish Language Models 💃🏻 Corpora 📃 Corpora Number of documents Size (GB) BNE 201,080,084 570GB Models 🤖 RoBERTa-base BNE: https://huggingface.co

EsViT: Efficient self-supervised Vision Transformers

Efficient Self-Supervised Vision Transformers (EsViT) PyTorch implementation for EsViT, built with two techniques: A multi-stage Transformer architect

Pytorch code for ICRA'21 paper: "Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation"

Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation This repository is the pytorch implementation of our paper: Hierarchical Cr

Empirical Study of Transformers for Source Code & A Simple Approach for Handling Out-of-Vocabulary Identifiers in Deep Learning for Source Code

Transformers for variable misuse, function naming and code completion tasks The official PyTorch implementation of: Empirical Study of Transformers fo

General Multi-label Image Classification with Transformers

General Multi-label Image Classification with Transformers Jack Lanchantin, Tianlu Wang, Vicente Ordóñez Román, Yanjun Qi Conference on Computer Visio

![[Preprint]](https://github.com/VITA-Group/SViTE/raw/main/Figs/res.png)

[Preprint] "Chasing Sparsity in Vision Transformers: An End-to-End Exploration" by Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Chasing Sparsity in Vision Transformers: An End-to-End Exploration Codes for [Preprint] Chasing Sparsity in Vision Transformers: An End-to-End Explora

[ACL 20] Probing Linguistic Features of Sentence-level Representations in Neural Relation Extraction

REval Table of Contents Introduction Overview Requirements Installation Probing Usage Citation License 🎓 Introduction REval is a simple framework for

Shared code for training sentence embeddings with Flax / JAX

flax-sentence-embeddings This repository will be used to share code for the Flax / JAX community event to train sentence embeddings on 1B+ training pa

Official repository for the paper "Going Beyond Linear Transformers with Recurrent Fast Weight Programmers"

Recurrent Fast Weight Programmers This is the official repository containing the code we used to produce the experimental results reported in the pape

Huggingface Transformers + Adapters = ❤️

adapter-transformers A friendly fork of HuggingFace's Transformers, adding Adapters to PyTorch language models adapter-transformers is an extension of

Flexible interface for high-performance research using SOTA Transformers leveraging Pytorch Lightning, Transformers, and Hydra.

Flexible interface for high performance research using SOTA Transformers leveraging Pytorch Lightning, Transformers, and Hydra. What is Lightning Tran

PyTorch impelementations of BERT-based Spelling Error Correction Models

PyTorch impelementations of BERT-based Spelling Error Correction Models

PyTorch impelementations of BERT-based Spelling Error Correction Models.

PyTorch impelementations of BERT-based Spelling Error Correction Models. 基于BERT的文本纠错模型,使用PyTorch实现。

AllenNLP integration for Shiba: Japanese CANINE model

Allennlp Integration for Shiba allennlp-shiab-model is a Python library that provides AllenNLP integration for shiba-model. SHIBA is an approximate re

中文无监督SimCSE Pytorch实现

A PyTorch implementation of unsupervised SimCSE SimCSE: Simple Contrastive Learning of Sentence Embeddings 1. 用法 无监督训练 python train_unsup.py ./data/ne

LoFTR:Detector-Free Local Feature Matching with Transformers CVPR 2021

LoFTR-with-train-script LoFTR:Detector-Free Local Feature Matching with Transformers CVPR 2021 (with train script --- unofficial ---). About Megadepth

This is an official implementation for "Video Swin Transformers".

Video Swin Transformer By Ze Liu*, Jia Ning*, Yue Cao, Yixuan Wei, Zheng Zhang, Stephen Lin and Han Hu. This repo is the official implementation of "V

LETR: Line Segment Detection Using Transformers without Edges

LETR: Line Segment Detection Using Transformers without Edges Introduction This repository contains the official code and pretrained models for Line S

The project is an official implementation of our paper "3D Human Pose Estimation with Spatial and Temporal Transformers".

3D Human Pose Estimation with Spatial and Temporal Transformers This repo is the official implementation for 3D Human Pose Estimation with Spatial and

PyTorch evaluation code for Delving Deep into the Generalization of Vision Transformers under Distribution Shifts.

Out-of-distribution Generalization Investigation on Vision Transformers This repository contains PyTorch evaluation code for Delving Deep into the Gen

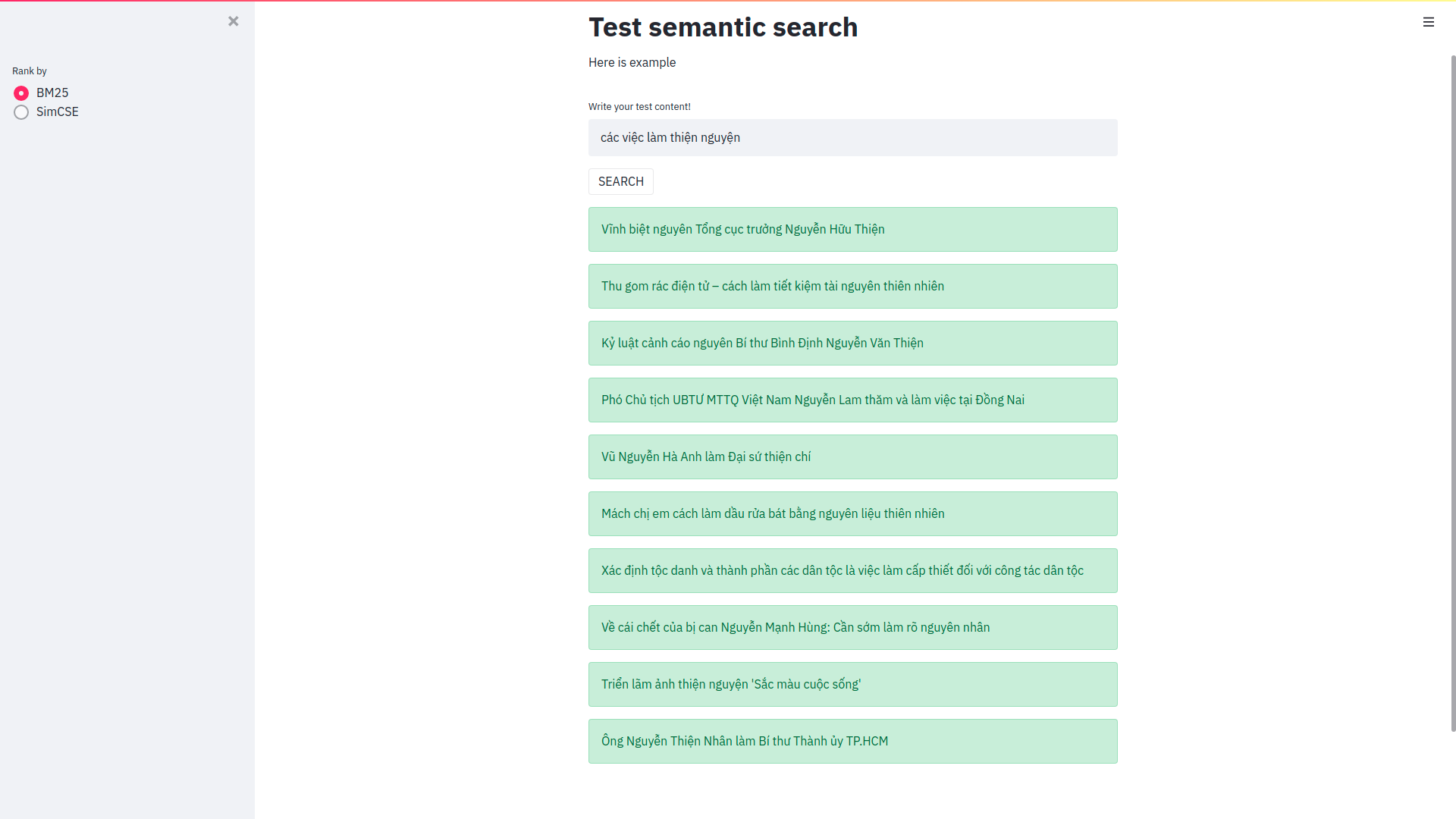

Cải thiện Elasticsearch trong bài toán semantic search sử dụng phương pháp Sentence Embeddings

Cải thiện Elasticsearch trong bài toán semantic search sử dụng phương pháp Sentence Embeddings Trong bài viết này mình sẽ sử dụng pretrain model SimCS

easySpeech is an open-source Python wrapper for google speech to text API that doesn't require PyAudio(So you especially windows user don't have to deal with the errors while installing PyAudio) and also works with hugging face transformers

easySpeech easySpeech is an open source python wrapper for google speech to text api that doesn't require PyAaudio(So you specially windows user don't

Scalable Attentive Sentence-Pair Modeling via Distilled Sentence Embedding (AAAI 2020) - PyTorch Implementation

Scalable Attentive Sentence-Pair Modeling via Distilled Sentence Embedding PyTorch implementation for the Scalable Attentive Sentence-Pair Modeling vi

An interpreter for RASP as described in the ICML 2021 paper "Thinking Like Transformers"

RASP Setup Mac or Linux Run ./setup.sh . It will create a python3 virtual environment and install the dependencies for RASP. It will also try to insta

2021海华AI挑战赛·中文阅读理解·技术组·第三名

文字是人类用以记录和表达的最基本工具,也是信息传播的重要媒介。透过文字与符号,我们可以追寻人类文明的起源,可以传播知识与经验,读懂文字是认识与了解的第一步。对于人工智能而言,它的核心问题之一就是认知,而认知的核心则是语义理解。

Official implementation of "SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers"

SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers Figure 1: Performance of SegFormer-B0 to SegFormer-B5. Project page

REST API for sentence tokenization and embedding using Multilingual Universal Sentence Encoder.

What is MUSE? MUSE stands for Multilingual Universal Sentence Encoder - multilingual extension (16 languages) of Universal Sentence Encoder (USE). MUS

This is the official implementation for "Do Transformers Really Perform Bad for Graph Representation?".

Graphormer By Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng*, Guolin Ke, Di He*, Yanming Shen and Tie-Yan Liu. This repo is the official impl

Code + pre-trained models for the paper Keeping Your Eye on the Ball Trajectory Attention in Video Transformers

Motionformer This is an official pytorch implementation of paper Keeping Your Eye on the Ball: Trajectory Attention in Video Transformers. In this rep

Load What You Need: Smaller Multilingual Transformers for Pytorch and TensorFlow 2.0.

Smaller Multilingual Transformers This repository shares smaller versions of multilingual transformers that keep the same representations offered by t

Official repository for "On Improving Adversarial Transferability of Vision Transformers" (2021)

Improving-Adversarial-Transferability-of-Vision-Transformers Muzammal Naseer, Kanchana Ranasinghe, Salman Khan, Fahad Khan, Fatih Porikli arxiv link A

Official PyTorch implementation of Less is More: Pay Less Attention in Vision Transformers.

Less is More: Pay Less Attention in Vision Transformers Official PyTorch implementation of Less is More: Pay Less Attention in Vision Transformers. By

CATs: Semantic Correspondence with Transformers

CATs: Semantic Correspondence with Transformers For more information, check out the paper on [arXiv]. Training with different backbones and evaluation

Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning

We challenge a common assumption underlying most supervised deep learning: that a model makes a prediction depending only on its parameters and the features of a single input. To this end, we introduce a general-purpose deep learning architecture that takes as input the entire dataset instead of processing one datapoint at a time.

DynamicViT: Efficient Vision Transformers with Dynamic Token Sparsification

DynamicViT: Efficient Vision Transformers with Dynamic Token Sparsification Created by Yongming Rao, Wenliang Zhao, Benlin Liu, Jiwen Lu, Jie Zhou, Ch

Official repository for "Intriguing Properties of Vision Transformers" (2021)

Intriguing Properties of Vision Transformers Muzammal Naseer, Kanchana Ranasinghe, Salman Khan, Munawar Hayat, Fahad Shahbaz Khan, & Ming-Hsuan Yang P

Using context-free grammar formalism to parse English sentences to determine their structure to help computer to better understand the meaning of the sentence.

Sentance Parser Executing the Program Make sure Python 3.6+ is installed. Install requirements $ pip install requirements.txt Run the program:

Official repository for HOTR: End-to-End Human-Object Interaction Detection with Transformers (CVPR'21, Oral Presentation)

Official PyTorch Implementation for HOTR: End-to-End Human-Object Interaction Detection with Transformers (CVPR'2021, Oral Presentation) HOTR: End-to-

This is an official implementation of CvT: Introducing Convolutions to Vision Transformers.

Introduction This is an official implementation of CvT: Introducing Convolutions to Vision Transformers. We present a new architecture, named Convolut

Code for our ACL 2021 paper - ConSERT: A Contrastive Framework for Self-Supervised Sentence Representation Transfer

ConSERT Code for our ACL 2021 paper - ConSERT: A Contrastive Framework for Self-Supervised Sentence Representation Transfer Requirements torch==1.6.0

simpleT5 is built on top of PyTorch-lightning⚡️ and Transformers🤗 that lets you quickly train your T5 models.

Quickly train T5 models in just 3 lines of code + ONNX support simpleT5 is built on top of PyTorch-lightning ⚡️ and Transformers 🤗 that lets you quic

Code for our ACL 2021 paper - ConSERT: A Contrastive Framework for Self-Supervised Sentence Representation Transfer

ConSERT Code for our ACL 2021 paper - ConSERT: A Contrastive Framework for Self-Supervised Sentence Representation Transfer Requirements torch==1.6.0

Implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification

CrossViT : Cross-Attention Multi-Scale Vision Transformer for Image Classification This is an unofficial PyTorch implementation of CrossViT: Cross-Att

A curated list of awesome resources related to Semantic Search🔎 and Semantic Similarity tasks.

A curated list of awesome resources related to Semantic Search🔎 and Semantic Similarity tasks.

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch

This is an official implementation of CvT: Introducing Convolutions to Vision Transformers.

Introduction This is an official implementation of CvT: Introducing Convolutions to Vision Transformers. We present a new architecture, named Convolut

Research code for CVPR 2021 paper "End-to-End Human Pose and Mesh Reconstruction with Transformers"

MeshTransformer ✨ This is our research code of End-to-End Human Pose and Mesh Reconstruction with Transformers. MEsh TRansfOrmer is a simple yet effec

Medical Image Segmentation using Squeeze-and-Expansion Transformers

Medical Image Segmentation using Squeeze-and-Expansion Transformers Introduction This repository contains the code of the IJCAI'2021 paper 'Medical Im

Pytorch code for ICRA'21 paper: "Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation"

Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation This repository is the pytorch implementation of our paper: Hierarchical Cr

Contains code for the paper "Vision Transformers are Robust Learners".

Vision Transformers are Robust Learners This repository contains the code for the paper Vision Transformers are Robust Learners by Sayak Paul* and Pin

source code for paper: WhiteningBERT: An Easy Unsupervised Sentence Embedding Approach.

WhiteningBERT Source code and data for paper WhiteningBERT: An Easy Unsupervised Sentence Embedding Approach. Preparation git clone https://github.com

Train 🤗transformers with DeepSpeed: ZeRO-2, ZeRO-3

Fork from https://github.com/huggingface/transformers/tree/86d5fb0b360e68de46d40265e7c707fe68c8015b/examples/pytorch/language-modeling at 2021.05.17.

XtremeDistil framework for distilling/compressing massive multilingual neural network models to tiny and efficient models for AI at scale

XtremeDistilTransformers for Distilling Massive Multilingual Neural Networks ACL 2020 Microsoft Research [Paper] [Video] Releasing [XtremeDistilTransf

Implementation of gMLP, an all-MLP replacement for Transformers, in Pytorch

Implementation of gMLP, an all-MLP replacement for Transformers, in Pytorch

This repository contains PyTorch code for Robust Vision Transformers.

This repository contains PyTorch code for Robust Vision Transformers.

Code and models used in "MUSS Multilingual Unsupervised Sentence Simplification by Mining Paraphrases".

Multilingual Unsupervised Sentence Simplification Code and pretrained models to reproduce experiments in "MUSS: Multilingual Unsupervised Sentence Sim

TrackFormer: Multi-Object Tracking with Transformers

TrackFormer: Multi-Object Tracking with Transformers This repository provides the official implementation of the TrackFormer: Multi-Object Tracking wi

Exploring whether attention is necessary for vision transformers

Do You Even Need Attention? A Stack of Feed-Forward Layers Does Surprisingly Well on ImageNet Paper/Report TL;DR We replace the attention layer in a v

Reformer, the efficient Transformer, in Pytorch

Reformer, the Efficient Transformer, in Pytorch This is a Pytorch implementation of Reformer https://openreview.net/pdf?id=rkgNKkHtvB It includes LSH

InferSent sentence embeddings

InferSent InferSent is a sentence embeddings method that provides semantic representations for English sentences. It is trained on natural language in

jiant is an NLP toolkit

jiant is an NLP toolkit The multitask and transfer learning toolkit for natural language processing research Why should I use jiant? jiant supports mu

Learning General Purpose Distributed Sentence Representations via Large Scale Multi-task Learning

GenSen Learning General Purpose Distributed Sentence Representations via Large Scale Multi-task Learning Sandeep Subramanian, Adam Trischler, Yoshua B

Language-Agnostic SEntence Representations

LASER Language-Agnostic SEntence Representations LASER is a library to calculate and use multilingual sentence embeddings. NEWS 2019/11/08 CCMatrix is

keras implement of transformers for humans

keras implement of transformers for humans

An easier way to build neural search on the cloud

Jina is geared towards building search systems for any kind of data, including text, images, audio, video and many more. With the modular design & multi-layer abstraction, you can leverage the efficient patterns to build the system by parts, or chaining them into a Flow for an end-to-end experience.

ISTR: End-to-End Instance Segmentation with Transformers (https://arxiv.org/abs/2105.00637)

This is the project page for the paper: ISTR: End-to-End Instance Segmentation via Transformers, Jie Hu, Liujuan Cao, Yao Lu, ShengChuan Zhang, Yan Wa

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

PyTorch code for Vision Transformers training with the Self-Supervised learning method DINO

Self-Supervised Vision Transformers with DINO PyTorch implementation and pretrained models for DINO. For details, see Emerging Properties in Self-Supe

Geometry-Free View Synthesis: Transformers and no 3D Priors

Geometry-Free View Synthesis: Transformers and no 3D Priors Geometry-Free View Synthesis: Transformers and no 3D Priors Robin Rombach*, Patrick Esser*

Twins: Revisiting the Design of Spatial Attention in Vision Transformers

Twins: Revisiting the Design of Spatial Attention in Vision Transformers Very recently, a variety of vision transformer architectures for dense predic

Generate indoor scenes with Transformers

SceneFormer: Indoor Scene Generation with Transformers Initial code release for the Sceneformer paper, contains models, train and test scripts for the

SimCSE: Simple Contrastive Learning of Sentence Embeddings

SimCSE: Simple Contrastive Learning of Sentence Embeddings This repository contains the code and pre-trained models for our paper SimCSE: Simple Contr

Sentence boundary disambiguation tool for Japanese texts (日本語文境界判定器)

Bunkai Bunkai is a sentence boundary (SB) disambiguation tool for Japanese texts. Quick Start $ pip install bunkai $ echo -e '宿を予約しました♪!まだ2ヶ月も先だけど。早すぎ

CoaT: Co-Scale Conv-Attentional Image Transformers

CoaT: Co-Scale Conv-Attentional Image Transformers Introduction This repository contains the official code and pretrained models for CoaT: Co-Scale Co

SpikeX - SpaCy Pipes for Knowledge Extraction

SpikeX is a collection of pipes ready to be plugged in a spaCy pipeline. It aims to help in building knowledge extraction tools with almost-zero effort.

State of the art Semantic Sentence Embeddings

Contrastive Tension State of the art Semantic Sentence Embeddings Published Paper · Huggingface Models · Report Bug Overview This is the official code

VideoGPT: Video Generation using VQ-VAE and Transformers

VideoGPT: Video Generation using VQ-VAE and Transformers [Paper][Website][Colab][Gradio Demo] We present VideoGPT: a conceptually simple architecture

![[Preprint] Escaping the Big Data Paradigm with Compact Transformers, 2021](https://github.com/SHI-Labs/Compact-Transformers/raw/main/images/model_sym.png)

[Preprint] Escaping the Big Data Paradigm with Compact Transformers, 2021

Compact Transformers Preprint Link: Escaping the Big Data Paradigm with Compact Transformers By Ali Hassani[1]*, Steven Walton[1]*, Nikhil Shah[1], Ab

![[CVPR'21] Multi-Modal Fusion Transformer for End-to-End Autonomous Driving](https://github.com/autonomousvision/transfuser/raw/main/transfuser/assets/teaser.png)

[CVPR'21] Multi-Modal Fusion Transformer for End-to-End Autonomous Driving

TransFuser This repository contains the code for the CVPR 2021 paper Multi-Modal Fusion Transformer for End-to-End Autonomous Driving. If you find our

Implementation of Perceiver, General Perception with Iterative Attention in TensorFlow

Perceiver This Python package implements Perceiver: General Perception with Iterative Attention by Andrew Jaegle in TensorFlow. This model builds on t

PRTR: Pose Recognition with Cascade Transformers

PRTR: Pose Recognition with Cascade Transformers Introduction This repository is the official implementation for Pose Recognition with Cascade Transfo

Changing the Mind of Transformers for Topically-Controllable Language Generation

We will first introduce the how to run the IPython notebook demo by downloading our pretrained models. Then, we will introduce how to run our training and evaluation code.

Implementation of Cross Transformer for spatially-aware few-shot transfer, in Pytorch

Cross Transformers - Pytorch (wip) Implementation of Cross Transformer for spatially-aware few-shot transfer, in Pytorch Install $ pip install cross-t

Implementation of TransGanFormer, an all-attention GAN that combines the finding from the recent GanFormer and TransGan paper

TransGanFormer (wip) Implementation of TransGanFormer, an all-attention GAN that combines the finding from the recent GansFormer and TransGan paper. I

The source code for the Cutoff data augmentation approach proposed in this paper: "A Simple but Tough-to-Beat Data Augmentation Approach for Natural Language Understanding and Generation".

Cutoff: A Simple Data Augmentation Approach for Natural Language This repository contains source code necessary to reproduce the results presented in

Official repository for "PAIR: Planning and Iterative Refinement in Pre-trained Transformers for Long Text Generation"

pair-emnlp2020 Official repository for the paper: Xinyu Hua and Lu Wang: PAIR: Planning and Iterative Refinement in Pre-trained Transformers for Long

Implementation of various Vision Transformers I found interesting

Implementation of various Vision Transformers I found interesting

Code for "LoFTR: Detector-Free Local Feature Matching with Transformers", CVPR 2021

LoFTR: Detector-Free Local Feature Matching with Transformers Project Page | Paper LoFTR: Detector-Free Local Feature Matching with Transformers Jiami

![[CVPR 2021] Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers](https://github.com/fudan-zvg/SETR/raw/main/fig/image.png)

[CVPR 2021] Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

[CVPR 2021] Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

Top2Vec is an algorithm for topic modeling and semantic search.

Top2Vec is an algorithm for topic modeling and semantic search. It automatically detects topics present in text and generates jointly embedded topic, document and word vectors.