231 Repositories

Python train-CLIP Libraries

Train an imgs.ai model on your own dataset

imgs.ai is a fast, dataset-agnostic, deep visual search engine for digital art history based on neural network embeddings.

Simple command line tool to train and deploy your machine learning models with AWS SageMaker

metamaker Simple command line tool to train and deploy your machine learning models with AWS SageMaker Features metamaker enables you to: Build a dock

This repository provides an unified frameworks to train and test the state-of-the-art few-shot font generation (FFG) models.

FFG-benchmarks This repository provides an unified frameworks to train and test the state-of-the-art few-shot font generation (FFG) models. What is Fe

Making a music video with Wav2CLIP and VQGAN-CLIP

music2video Overview A repo for making a music video with Wav2CLIP and VQGAN-CLIP. The base code was derived from VQGAN-CLIP The CLIP embedding for au

Generate text captions for images from their CLIP embeddings. Includes PyTorch model code and example training script.

clip-text-decoder Generate text captions for images from their CLIP embeddings. Includes PyTorch model code and example training script. Example Predi

HuggingTweets - Train a model to generate tweets

HuggingTweets - Train a model to generate tweets Create in 5 minutes a tweet generator based on your favorite Tweeter Make my own model with the demo

Train SN-GAN with AdaBelief

SNGAN-AdaBelief Train a state-of-the-art spectral normalization GAN with AdaBelief https://github.com/juntang-zhuang/Adabelief-Optimizer Acknowledgeme

NVTabular is a feature engineering and preprocessing library for tabular data designed to quickly and easily manipulate terabyte scale datasets used to train deep learning based recommender systems.

NVTabular is a feature engineering and preprocessing library for tabular data designed to quickly and easily manipulate terabyte scale datasets used to train deep learning based recommender systems.

A webapp that timestamps key moments in a football clip

A look into what we're building Demo.mp4 Prerequisites Python 3 Node v16+ Steps to run Create a virtual environment. Activate the virtual environment.

Python script for diving image data to train test and val

dataset-division-to-train-val-test-python python script for dividing image data to train test and val If you have an image dataset in the following st

Код файнтюнинга оригинального CLIP на русский язык

О чем репозиторий В этом репозитории представлен способ файтюнить оригинальный CLIP на новый язык Почему модель не видит женщину и откуда на картинке

CLIPImageClassifier wraps clip image model from transformers

CLIPImageClassifier CLIPImageClassifier wraps clip image model from transformers. CLIPImageClassifier is initialized with the argument classes, these

An easy to use, user-friendly and efficient code for extracting OpenAI CLIP (Global/Grid) features from image and text respectively.

Extracting OpenAI CLIP (Global/Grid) Features from Image and Text This repo aims at providing an easy to use and efficient code for extracting image &

A library that integrates huggingface transformers with the world of fastai, giving fastai devs everything they need to train, evaluate, and deploy transformer specific models.

blurr A library that integrates huggingface transformers with version 2 of the fastai framework Install You can now pip install blurr via pip install

apricot implements submodular optimization for the purpose of selecting subsets of massive data sets to train machine learning models quickly.

Please consider citing the manuscript if you use apricot in your academic work! You can find more thorough documentation here. apricot implements subm

Apply AnimeGAN-v2 across frames of a video clip

title emoji colorFrom colorTo sdk app_file pinned AnimeGAN-v2 For Videos 🔥 blue red gradio app.py false AnimeGAN-v2 For Videos Apply AnimeGAN-v2 acro

This toolkit provides codes to download and pre-process the SLUE datasets, train the baseline models, and evaluate SLUE tasks.

slue-toolkit We introduce Spoken Language Understanding Evaluation (SLUE) benchmark. This toolkit provides codes to download and pre-process the SLUE

Record railway train route profile with GNSS tools

Train route profile recording with GNSS technology based on ARDUINO platform Project target Develop GNSS recording tools based on the ARDUINO platform

Lightweight Face Image Quality Assessment

LightQNet This is a demo code of training and testing [LightQNet] using Tensorflow. Uncertainty Losses: IDQ loss PCNet loss Uncertainty Networks: Mobi

Clip Bing Maps backgound as RGB geotif image using center-point from vector data of a shapefile and Bing Maps zoom

Clip Bing Maps backgound as RGB geotif image using center-point from vector data of a shapefile and Bing Maps zoom. Also, rasterize shapefile vectors as corresponding label image.

How to Train a GAN? Tips and tricks to make GANs work

(this list is no longer maintained, and I am not sure how relevant it is in 2020) How to Train a GAN? Tips and tricks to make GANs work While research

Train CPPNs as a Generative Model, using Generative Adversarial Networks and Variational Autoencoder techniques to produce high resolution images.

cppn-gan-vae tensorflow Train Compositional Pattern Producing Network as a Generative Model, using Generative Adversarial Networks and Variational Aut

STBP is a way to train SNN with datasets by Backward propagation.

Spiking neural network (SNN), compared with depth neural network (DNN), has faster processing speed, lower energy consumption and more biological interpretability, which is expected to approach Strong AI.

🚪✊Knock Knock: Get notified when your training ends with only two additional lines of code

Knock Knock A small library to get a notification when your training is complete or when it crashes during the process with two additional lines of co

EZ graph is an easy to use AI solution that allows you to make and train your neural networks without a single line of code.

EZ-Graph EZ Graph is a GUI that allows users to make and train neural networks without writing a single line of code. Requirements python 3 pandas num

Train DeepLab for Semantic Image Segmentation

Train DeepLab for Semantic Image Segmentation Martin Kersner, [email protected] This repository contains scripts for training DeepLab for Semantic I

A Pytorch implementation of MoveNet from Google. Include training code and pre-train model.

Movenet.Pytorch Intro MoveNet is an ultra fast and accurate model that detects 17 keypoints of a body. This is A Pytorch implementation of MoveNet fro

Telegram bot to clip youtube videos

youtube-clipper-bot Telegram bot to clip youtube videos How to deploy? Create a file called config.env BOT_TOKEN: Provide your bot token generated by

Official Code Release for "TIP-Adapter: Training-free clIP-Adapter for Better Vision-Language Modeling"

Official Code Release for "TIP-Adapter: Training-free clIP-Adapter for Better Vision-Language Modeling" Pipeline of Tip-Adapter Tip-Adapter can provid

Use MATLAB to simulate the signal and extract features. Use PyTorch to build and train deep network to do spectrum sensing.

Deep-Learning-based-Spectrum-Sensing Use MATLAB to simulate the signal and extract features. Use PyTorch to build and train deep network to do spectru

An implementation of the Contrast Predictive Coding (CPC) method to train audio features in an unsupervised fashion.

CPC_audio This code implements the Contrast Predictive Coding algorithm on audio data, as described in the paper Unsupervised Pretraining Transfers we

A simple app that helps to train quick calculations.

qtcounter A simple app that helps to train quick calculations. Usage Manual Clone the repo in a folder using git clone https://github.com/Froloket64/q

Unofficial reimplementation of ECAPA-TDNN for speaker recognition (EER=0.86 for Vox1_O when train only in Vox2)

Introduction This repository contains my unofficial reimplementation of the standard ECAPA-TDNN, which is the speaker recognition in VoxCeleb2 dataset

Official PyTorch implementation of the paper Image-Based CLIP-Guided Essence Transfer.

TargetCLIP- official pytorch implementation of the paper Image-Based CLIP-Guided Essence Transfer This repository finds a global direction in StyleGAN

An example showing how to use jax to train resnet50 on multi-node multi-GPU

jax-multi-gpu-resnet50-example This repo shows how to use jax for multi-node multi-GPU training. The example is adapted from the resnet50 example in d

Official PyTorch implementation of the paper Image-Based CLIP-Guided Essence Transfer.

TargetCLIP- official pytorch implementation of the paper Image-Based CLIP-Guided Essence Transfer This repository finds a global direction in StyleGAN

Official implementation of the paper WAV2CLIP: LEARNING ROBUST AUDIO REPRESENTATIONS FROM CLIP

Wav2CLIP 🚧 WIP 🚧 Official implementation of the paper WAV2CLIP: LEARNING ROBUST AUDIO REPRESENTATIONS FROM CLIP 📄 🔗 Ho-Hsiang Wu, Prem Seetharaman

Trains an OpenNMT PyTorch model and SentencePiece tokenizer.

Trains an OpenNMT PyTorch model and SentencePiece tokenizer. Designed for use with Argos Translate and LibreTranslate.

CLOOB: Modern Hopfield Networks with InfoLOOB Outperform CLIP

CLOOB: Modern Hopfield Networks with InfoLOOB Outperform CLIP Andreas Fürst* 1, Elisabeth Rumetshofer* 1, Viet Tran1, Hubert Ramsauer1, Fei Tang3, Joh

Train a state-of-the-art yolov3 object detector from scratch!

TrainYourOwnYOLO: Building a Custom Object Detector from Scratch This repo let's you train a custom image detector using the state-of-the-art YOLOv3 c

The Self-Supervised Learner can be used to train a classifier with fewer labeled examples needed using self-supervised learning.

Published by SpaceML • About SpaceML • Quick Colab Example Self-Supervised Learner The Self-Supervised Learner can be used to train a classifier with

A framework to train language models to learn invariant representations.

Invariant Language Modeling Implementation of the training for invariant language models. Motivation Modern pretrained language models are critical co

Gradient-free global optimization algorithm for multidimensional functions based on the low rank tensor train format

ttopt Description Gradient-free global optimization algorithm for multidimensional functions based on the low rank tensor train (TT) format and maximu

A Jupyter notebook to play with NVIDIA's StyleGAN3 and OpenAI's CLIP for a text-based guided image generation.

A Jupyter notebook to play with NVIDIA's StyleGAN3 and OpenAI's CLIP for a text-based guided image generation.

Tangram makes it easy for programmers to train, deploy, and monitor machine learning models.

Tangram Website | Discord Tangram makes it easy for programmers to train, deploy, and monitor machine learning models. Run tangram train to train a mo

Using contrastive learning and OpenAI's CLIP to find good embeddings for images with lossy transformations

Creating Robust Representations from Pre-Trained Image Encoders using Contrastive Learning Sriram Ravula, Georgios Smyrnis This is the code for our pr

A simple tool for searching images inside a local folder with text/image input using CLIP

clip-search (WIP) A simple tool for searching images inside a local folder with text/image input using CLIP 10 results for "a blonde woman" in a folde

Making self-supervised learning work on molecules by using their 3D geometry to pre-train GNNs. Implemented in DGL and Pytorch Geometric.

3D Infomax improves GNNs for Molecular Property Prediction Video | Paper We pre-train GNNs to understand the geometry of molecules given only their 2D

This repository contains the files for running the Patchify GUI.

Repository Name Train-Test-Validation-Dataset-Generation App Name Patchify Description This app is designed for crop images and creating smal

Simple image captioning model - CLIP prefix captioning.

Simple image captioning model - CLIP prefix captioning.

Framework to build and train RL algorithms

RayLink RayLink is a RL framework used to build and train RL algorithms. RayLink was used to build a RL framework, and tested in a large-scale multi-a

TLDR; Train custom adaptive filter optimizers without hand tuning or extra labels.

AutoDSP TLDR; Train custom adaptive filter optimizers without hand tuning or extra labels. About Adaptive filtering algorithms are commonplace in sign

An architecture that makes any doodle realistic, in any specified style, using VQGAN, CLIP and some basic embedding arithmetics.

Sketch Simulator An architecture that makes any doodle realistic, in any specified style, using VQGAN, CLIP and some basic embedding arithmetics. See

HeatNet is a python package that provides tools to build, train and evaluate neural networks designed to predict extreme heat wave events globally on daily to subseasonal timescales.

HeatNet HeatNet is a python package that provides tools to build, train and evaluate neural networks designed to predict extreme heat wave events glob

Train 🤗-transformers model with Poutyne.

poutyne-transformers Train 🤗 -transformers models with Poutyne. Installation pip install poutyne-transformers Example import torch from transformers

Text2Art is an AI art generator powered with VQGAN + CLIP and CLIPDrawer models

Text2Art is an AI art generator powered with VQGAN + CLIP and CLIPDrawer models. You can easily generate all kind of art from drawing, painting, sketch, or even a specific artist style just using a text input. You can also specify the dimensions of the image. The process can take 3-20 mins and the results will be emailed to you.

Wagtail CLIP allows you to search your Wagtail images using natural language queries.

Wagtail CLIP allows you to search your Wagtail images using natural language queries.

CLIPort: What and Where Pathways for Robotic Manipulation

CLIPort CLIPort: What and Where Pathways for Robotic Manipulation Mohit Shridhar, Lucas Manuelli, Dieter Fox CoRL 2021 CLIPort is an end-to-end imitat

A simple FastAPI web service + Vue.js based UI over a rclip-style clip embedding database.

Explore CLIP Embeddings in a rclip database A simple FastAPI web service + Vue.js based UI over a rclip-style clip embedding database. A live demo of

Reimplement of SimSwap training code

SimSwap-train Reimplement of SimSwap training code Instructions 1.Environment Preparation (1)Refer to the README document of SIMSWAP to configure the

Quickly and easily create / train a custom DeepDream model

Dream-Creator This project aims to simplify the process of creating a custom DeepDream model by using pretrained GoogleNet models and custom image dat

A Tools that help Data Scientists and ML engineers train and deploy ML models.

Domino Research This repo contains projects under active development by the Domino R&D team. We build tools that help Data Scientists and ML engineers

Just playing with getting CLIP Guided Diffusion running locally, rather than having to use colab.

CLIP-Guided-Diffusion Just playing with getting CLIP Guided Diffusion running locally, rather than having to use colab. Original colab notebooks by Ka

Train a deep learning net with OpenStreetMap features and satellite imagery.

DeepOSM Classify roads and features in satellite imagery, by training neural networks with OpenStreetMap (OSM) data. DeepOSM can: Download a chunk of

NLPIR tutorial: pretrain for IR. pre-train on raw textual corpus, fine-tune on MS MARCO Document Ranking

pretrain4ir_tutorial NLPIR tutorial: pretrain for IR. pre-train on raw textual corpus, fine-tune on MS MARCO Document Ranking 用作NLPIR实验室, Pre-training

This repository contains a set of codes to run (i.e., train, perform inference with, evaluate) a diarization method called EEND-vector-clustering.

EEND-vector clustering The EEND-vector clustering (End-to-End-Neural-Diarization-vector clustering) is a speaker diarization framework that integrates

Feed forward VQGAN-CLIP model, where the goal is to eliminate the need for optimizing the latent space of VQGAN for each input prompt

Feed forward VQGAN-CLIP model, where the goal is to eliminate the need for optimizing the latent space of VQGAN for each input prompt. This is done by

load .txt to train YOLOX, same as Yolo others

YOLOX train your data you need generate data.txt like follow format (per line- one image). prepare one data.txt like this: img_path1 x1,y1,x2,y2,clas

Train GPT-3 model on V100(16GB Mem) Using improved Transformer.

GPT-X using transformer pytorch

Streamlit Tutorial (ex: stock price dashboard, cartoon-stylegan, vqgan-clip, stylemixing, styleclip, sefa)

Streamlit Tutorials Install pip install streamlit Run cd [directory] streamlit run app.py --server.address 0.0.0.0 --server.port [your port] # http:/

Codes to pre-train Japanese T5 models

t5-japanese Codes to pre-train a T5 (Text-to-Text Transfer Transformer) model pre-trained on Japanese web texts. The model is available at https://hug

Code used to generate the results appearing in "Train longer, generalize better: closing the generalization gap in large batch training of neural networks"

Train longer, generalize better - Big batch training This is a code repository used to generate the results appearing in "Train longer, generalize bet

Generate vibrant and detailed images using only text.

CLIP Guided Diffusion From RiversHaveWings. Generate vibrant and detailed images using only text. See captions and more generations in the Gallery See

CLIP + VQGAN / PixelDraw

clipit Yet Another VQGAN-CLIP Codebase This started as a fork of @nerdyrodent's VQGAN-CLIP code which was based on the notebooks of @RiversWithWings a

Music Source Separation; Train & Eval & Inference piplines and pretrained models we used for 2021 ISMIR MDX Challenge.

Music Source Separation with Channel-wise Subband Phase Aware ResUnet (CWS-PResUNet) Introduction This repo contains the pretrained Music Source Separ

IMS-Toucan is a toolkit to train state-of-the-art Speech Synthesis models

IMS-Toucan is a toolkit to train state-of-the-art Speech Synthesis models. Everything is pure Python and PyTorch based to keep it as simple and beginner-friendly, yet powerful as possible.

Learn meanings behind words is a key element in NLP. This project concentrates on the disambiguation of preposition senses. Therefore, we train a bert-transformer model and surpass the state-of-the-art.

New State-of-the-Art in Preposition Sense Disambiguation Supervisor: Prof. Dr. Alexander Mehler Alexander Henlein Institutions: Goethe University TTLa

A tutorial showing how to train, convert, and run TensorFlow Lite object detection models on Android devices, the Raspberry Pi, and more!

A tutorial showing how to train, convert, and run TensorFlow Lite object detection models on Android devices, the Raspberry Pi, and more!

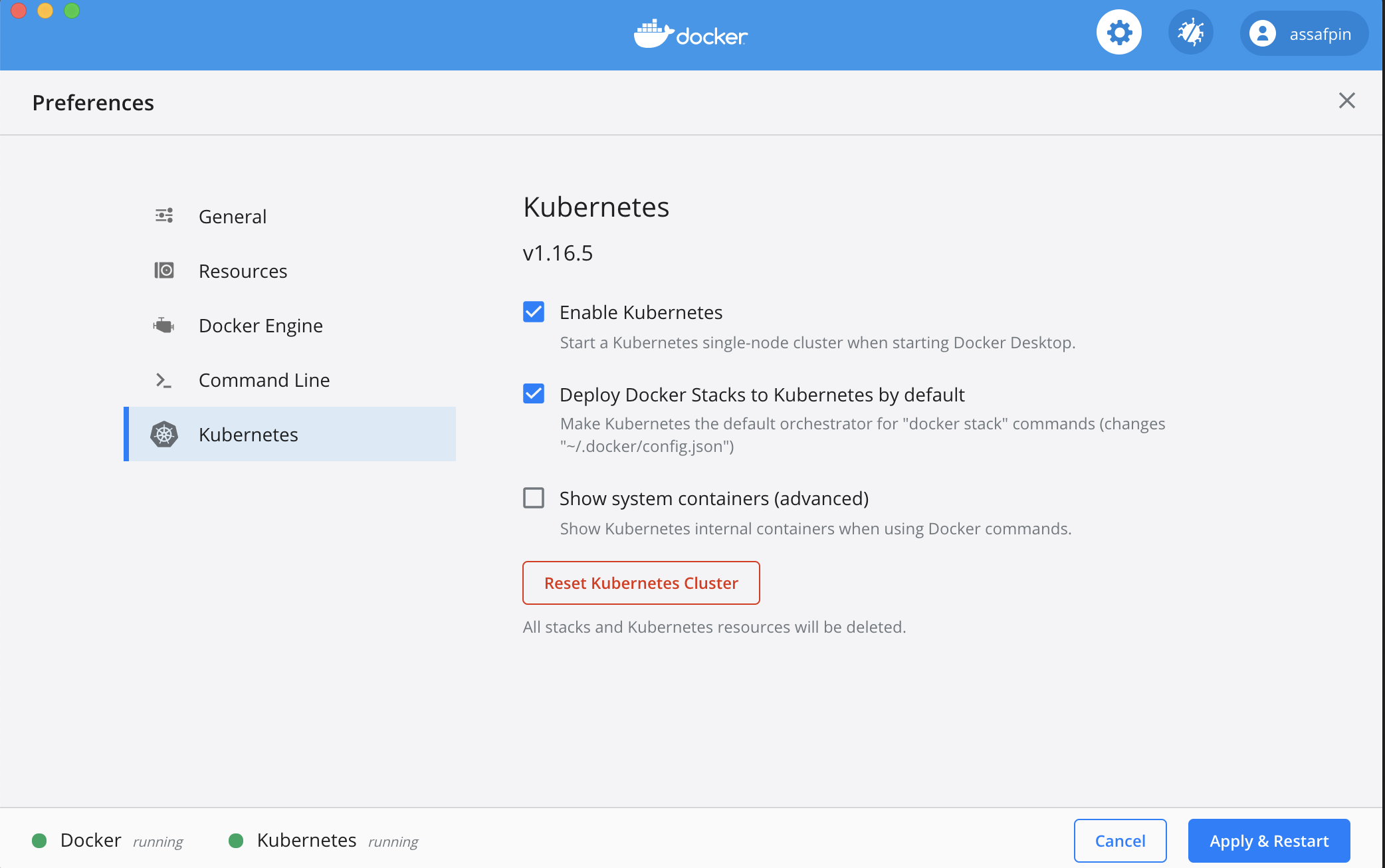

Zero-Shot Text-to-Image Generation VQGAN+CLIP Dockerized

VQGAN-CLIP-Docker About Zero-Shot Text-to-Image Generation VQGAN+CLIP Dockerized This is a stripped and minimal dependency repository for running loca

Segmentation in Style: Unsupervised Semantic Image Segmentation with Stylegan and CLIP

Segmentation in Style: Unsupervised Semantic Image Segmentation with Stylegan and CLIP Abstract: We introduce a method that allows to automatically se

Train an RL agent to execute natural language instructions in a 3D Environment (PyTorch)

Gated-Attention Architectures for Task-Oriented Language Grounding This is a PyTorch implementation of the AAAI-18 paper: Gated-Attention Architecture

An open source implementation of CLIP.

OpenCLIP Welcome to an open source implementation of OpenAI's CLIP (Contrastive Language-Image Pre-training). The goal of this repository is to enable

improvement of CLIP features over the traditional resnet features on the visual question answering, image captioning, navigation and visual entailment tasks.

CLIP-ViL In our paper "How Much Can CLIP Benefit Vision-and-Language Tasks?", we show the improvement of CLIP features over the traditional resnet fea

Just playing with getting VQGAN+CLIP running locally, rather than having to use colab.

Just playing with getting VQGAN+CLIP running locally, rather than having to use colab.

Spectral Tensor Train Parameterization of Deep Learning Layers

Spectral Tensor Train Parameterization of Deep Learning Layers This repository is the official implementation of our AISTATS 2021 paper titled "Spectr

Source code for models described in the paper "AudioCLIP: Extending CLIP to Image, Text and Audio" (https://arxiv.org/abs/2106.13043)

AudioCLIP Extending CLIP to Image, Text and Audio This repository contains implementation of the models described in the paper arXiv:2106.13043. This

Falken provides developers with a service that allows them to train AI that can play their games

Falken provides developers with a service that allows them to train AI that can play their games. Unlike traditional RL frameworks that learn through rewards or batches of offline training, Falken is based on training AI via realtime, human interactions.

CLIP: Connecting Text and Image (Learning Transferable Visual Models From Natural Language Supervision)

CLIP (Contrastive Language–Image Pre-training) Experiments (Evaluation) Model Dataset Acc (%) ViT-B/32 (Paper) CIFAR100 65.1 ViT-B/32 (Our) CIFAR100 6

AudioCLIP Extending CLIP to Image, Text and Audio

AudioCLIP Extending CLIP to Image, Text and Audio This repository contains implementation of the models described in the paper arXiv:2106.13043. This

LoFTR:Detector-Free Local Feature Matching with Transformers CVPR 2021

LoFTR-with-train-script LoFTR:Detector-Free Local Feature Matching with Transformers CVPR 2021 (with train script --- unofficial ---). About Megadepth

Code to train models from "Paraphrastic Representations at Scale".

Paraphrastic Representations at Scale Code to train models from "Paraphrastic Representations at Scale". The code is written in Python 3.7 and require

![A PyTorch implementation of EventProp [https://arxiv.org/abs/2009.08378], a method to train Spiking Neural Networks](https://github.com/lolemacs/pytorch-eventprop/raw/master/images/forward.png)

A PyTorch implementation of EventProp [https://arxiv.org/abs/2009.08378], a method to train Spiking Neural Networks

Spiking Neural Network training with EventProp This is an unofficial PyTorch implemenation of EventProp, a method to compute exact gradients for Spiki

Code for CPM-2 Pre-Train

CPM-2 Pre-Train Pre-train CPM-2 此分支为110亿非 MoE 模型的预训练代码,MoE 模型的预训练代码请切换到 moe 分支 CPM-2技术报告请参考link。 0 模型下载 请在智源资源下载页面进行申请,文件介绍如下: 文件名 描述 参数大小 100000.tar

simpleT5 is built on top of PyTorch-lightning⚡️ and Transformers🤗 that lets you quickly train your T5 models.

Quickly train T5 models in just 3 lines of code + ONNX support simpleT5 is built on top of PyTorch-lightning ⚡️ and Transformers 🤗 that lets you quic

Code for CPM-2 Pre-Train

CPM-2 Pre-Train Pre-train CPM-2 此分支为110亿非 MoE 模型的预训练代码,MoE 模型的预训练代码请切换到 moe 分支 CPM-2技术报告请参考link。 0 模型下载 请在智源资源下载页面进行申请,文件介绍如下: 文件名 描述 参数大小 100000.tar

Apache Liminal is an end-to-end platform for data engineers & scientists, allowing them to build, train and deploy machine learning models in a robust and agile way

Apache Liminals goal is to operationalise the machine learning process, allowing data scientists to quickly transition from a successful experiment to an automated pipeline of model training, validation, deployment and inference in production. Liminal provides a Domain Specific Language to build ML workflows on top of Apache Airflow.

Train 🤗transformers with DeepSpeed: ZeRO-2, ZeRO-3

Fork from https://github.com/huggingface/transformers/tree/86d5fb0b360e68de46d40265e7c707fe68c8015b/examples/pytorch/language-modeling at 2021.05.17.

A PyTorch Lightning solution to training OpenAI's CLIP from scratch.

train-CLIP 📎 A PyTorch Lightning solution to training CLIP from scratch. Goal ⚽ Our aim is to create an easy to use Lightning implementation of OpenA

Ever felt tired after preprocessing the dataset, and not wanting to write any code further to train your model? Ever encountered a situation where you wanted to record the hyperparameters of the trained model and able to retrieve it afterward? Models Playground is here to help you do that. Models playground allows you to train your models right from the browser.

Models Playground 🗂️ Upload a Preprocessed Dataset 🌠 Choose whether to perform Classification or Regression 🦹 Enter the Dependent Variable ?

Simple implementation of OpenAI CLIP model in PyTorch.

It was in January of 2021 that OpenAI announced two new models: DALL-E and CLIP, both multi-modality models connecting texts and images in some way. In this article we are going to implement CLIP model from scratch in PyTorch. OpenAI has open-sourced some of the code relating to CLIP model but I found it intimidating and it was far from something short and simple. I also came across a good tutorial inspired by CLIP model on Keras code examples and I translated some parts of it into PyTorch to build this tutorial totally with our beloved PyTorch!