2045 Repositories

Python transformer-network Libraries

A deep learning network built with TensorFlow and Keras to classify gender and estimate age.

Convolutional Neural Network (CNN). This repository contains a source code of a deep learning network built with TensorFlow and Keras to classify gend

Implementing Vision Transformer (ViT) in PyTorch

Lightning-Hydra-Template A clean and scalable template to kickstart your deep learning project 🚀 ⚡ 🔥 Click on Use this template to initialize new re

DaProfiler allows you to get emails, social medias, adresses, works and more on your target using web scraping and google dorking techniques

DaProfiler allows you to get emails, social medias, adresses, works and more on your target using web scraping and google dorking techniques, based in France Only. The particularity of this program is its ability to find your target's e-mail adresses.

I hacked my own webcam from a Kali Linux VM in my local network, using Ettercap to do the MiTM ARP poisoning attack, sniffing with Wireshark, and using metasploit

plan I - Linux Fundamentals Les utilisateurs et les droits Installer des programmes avec apt-get Surveiller l'activité du système Exécuter des program

PyTorch implementation of Deep HDR Imaging via A Non-Local Network (TIP 2020).

NHDRRNet-PyTorch This is the PyTorch implementation of Deep HDR Imaging via A Non-Local Network (TIP 2020). 0. Differences between Original Paper and

Codes for building and training the neural network model described in Domain-informed neural networks for interaction localization within astroparticle experiments.

Domain-informed Neural Networks Codes for building and training the neural network model described in Domain-informed neural networks for interaction

Mixed Transformer UNet for Medical Image Segmentation

MT-UNet Update 2021/11/19 Thank you for your interest in our work. We have uploaded the code of our MTUNet to help peers conduct further research on i

Ensembling Off-the-shelf Models for GAN Training

Vision-aided GAN video (3m) | website | paper Can the collective knowledge from a large bank of pretrained vision models be leveraged to improve GAN t

This is the official PyTorch implementation of the CVPR 2020 paper "TransMoMo: Invariance-Driven Unsupervised Video Motion Retargeting".

TransMoMo: Invariance-Driven Unsupervised Video Motion Retargeting Project Page | YouTube | Paper This is the official PyTorch implementation of the C

A small game I made back in think 2011

Navi Network A small game I made back in think 2011. An online game inspired by the self-hosted nature of Minecraft, made with pygame, based on the Me

This Home Assistant custom component adds support for controlling Midea dehumidiferes on local network.

This is a custom component for Home assistant that adds support for Midea dehumidifier appliances via the local area network. midea-dehumidifier-lan H

Ensembling Off-the-shelf Models for GAN Training

Data-Efficient GANs with DiffAugment project | paper | datasets | video | slides Generated using only 100 images of Obama, grumpy cats, pandas, the Br

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation

[ICCV2021] TransReID: Transformer-based Object Re-Identification [pdf] The official repository for TransReID: Transformer-based Object Re-Identificati

Automate the case review on legal case documents and find the most critical cases using network analysis

Automation on Legal Court Cases Review This project is to automate the case review on legal case documents and find the most critical cases using netw

A Persistent Embedded Graph Database for Python

Cog - Embedded Graph Database for Python cogdb.io New release: 2.0.5! Installing Cog pip install cogdb Cog is a persistent embedded graph database im

Using pytorch to implement unet network for liver image segmentation.

Using pytorch to implement unet network for liver image segmentation.

This repository builds a basic vision transformer from scratch so that one beginner can understand the theory of vision transformer.

vision-transformer-from-scratch This repository includes several kinds of vision transformers from scratch so that one beginner can understand the the

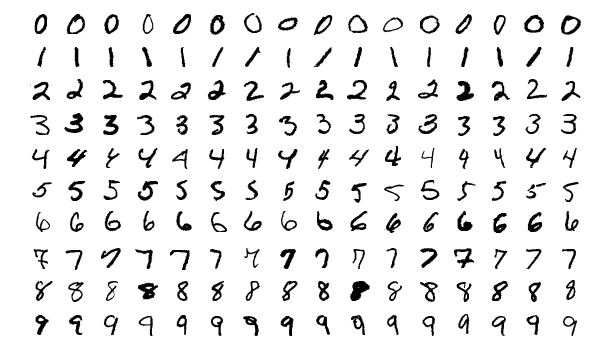

A simple Neural Network that predicts the label for a series of handwritten digits

Neural_Network A simple Neural Network that predicts the label for a series of handwritten numbers This program tries to predict the label (1,2,3 etc.

A Transformer-Based Feature Segmentation and Region Alignment Method For UAV-View Geo-Localization

University1652-Baseline [Paper] [Slide] [Explore Drone-view Data] [Explore Satellite-view Data] [Explore Street-view Data] [Video Sample] [中文介绍] This

Official PyTorch implementation of SegFormer

SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers Figure 1: Performance of SegFormer-B0 to SegFormer-B5. Project page

Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE)

[中文|English] Rotary Transformer Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE). The RoPE is a relative

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

FNet: Mixing Tokens with Fourier Transforms Pytorch implementation of Fnet : Mixing Tokens with Fourier Transforms. Citation: @misc{leethorp2021fnet,

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Edge-Augmented Graph Transformer

Edge-augmented Graph Transformer Introduction This is the official implementation of the Edge-augmented Graph Transformer (EGT) as described in https:

FastFormers - highly efficient transformer models for NLU

FastFormers FastFormers provides a set of recipes and methods to achieve highly efficient inference of Transformer models for Natural Language Underst

Transformer training code for sequential tasks

Sequential Transformer This is a code for training Transformers on sequential tasks such as language modeling. Unlike the original Transformer archite

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Fine-tuning scripts for evaluating transformer-based models on KLEJ benchmark.

The KLEJ Benchmark Baselines The KLEJ benchmark (Kompleksowa Lista Ewaluacji Językowych) is a set of nine evaluation tasks for the Polish language und

Large-scale pretraining for dialogue

A State-of-the-Art Large-scale Pretrained Response Generation Model (DialoGPT) This repository contains the source code and trained model for a large-

The code for two papers: Feedback Transformer and Expire-Span.

transformer-sequential This repo contains the code for two papers: Feedback Transformer Expire-Span The training code is structured for long sequentia

Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing

Introduction Funnel-Transformer is a new self-attention model that gradually compresses the sequence of hidden states to a shorter one and hence reduc

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

An implementation of the Pay Attention when Required transformer

Pay Attention when Required (PAR) Transformer-XL An implementation of the Pay Attention when Required transformer from the paper: https://arxiv.org/pd

Fully featured implementation of Routing Transformer

Routing Transformer A fully featured implementation of Routing Transformer. The paper proposes using k-means to route similar queries / keys into the

Reproducing the Linear Multihead Attention introduced in Linformer paper (Linformer: Self-Attention with Linear Complexity)

Linear Multihead Attention (Linformer) PyTorch Implementation of reproducing the Linear Multihead Attention introduced in Linformer paper (Linformer:

DeBERTa: Decoding-enhanced BERT with Disentangled Attention

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

Longformer: The Long-Document Transformer

Longformer Longformer and LongformerEncoderDecoder (LED) are pretrained transformer models for long documents. ***** New December 1st, 2020: Longforme

This repository contains the code for running the character-level Sandwich Transformers from our ACL 2020 paper on Improving Transformer Models by Reordering their Sublayers.

Improving Transformer Models by Reordering their Sublayers This repository contains the code for running the character-level Sandwich Transformers fro

Source code of paper "BP-Transformer: Modelling Long-Range Context via Binary Partitioning"

BP-Transformer This repo contains the code for our paper BP-Transformer: Modeling Long-Range Context via Binary Partition Zihao Ye, Qipeng Guo, Quan G

Conditional Transformer Language Model for Controllable Generation

CTRL - A Conditional Transformer Language Model for Controllable Generation Authors: Nitish Shirish Keskar, Bryan McCann, Lav Varshney, Caiming Xiong,

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

Physics-Informed Neural Networks (PINN) and Deep BSDE Solvers of Differential Equations for Scientific Machine Learning (SciML) accelerated simulation

NeuralPDE NeuralPDE.jl is a solver package which consists of neural network solvers for partial differential equations using scientific machine learni

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

An implementation of quantum convolutional neural network with MindQuantum. Huawei, classifying MNIST dataset

关于实现的一点说明 山东大学 2020级 苏博南 www.subonan.com 文件说明 tools.py 这里面主要有两个函数: resize(a, lenb) 这其实是我找同学写的一个小算法hhh。给出一个$28\times 28$的方阵a,返回一个$lenb\times lenb$的方阵。因

[ACM MM 2021] Yes, "Attention is All You Need", for Exemplar based Colorization

Transformer for Image Colorization This is an implemention for Yes, "Attention Is All You Need", for Exemplar based Colorization, and the current soft

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation in TensorFlow 2

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation in TensorFlow 2 Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexan

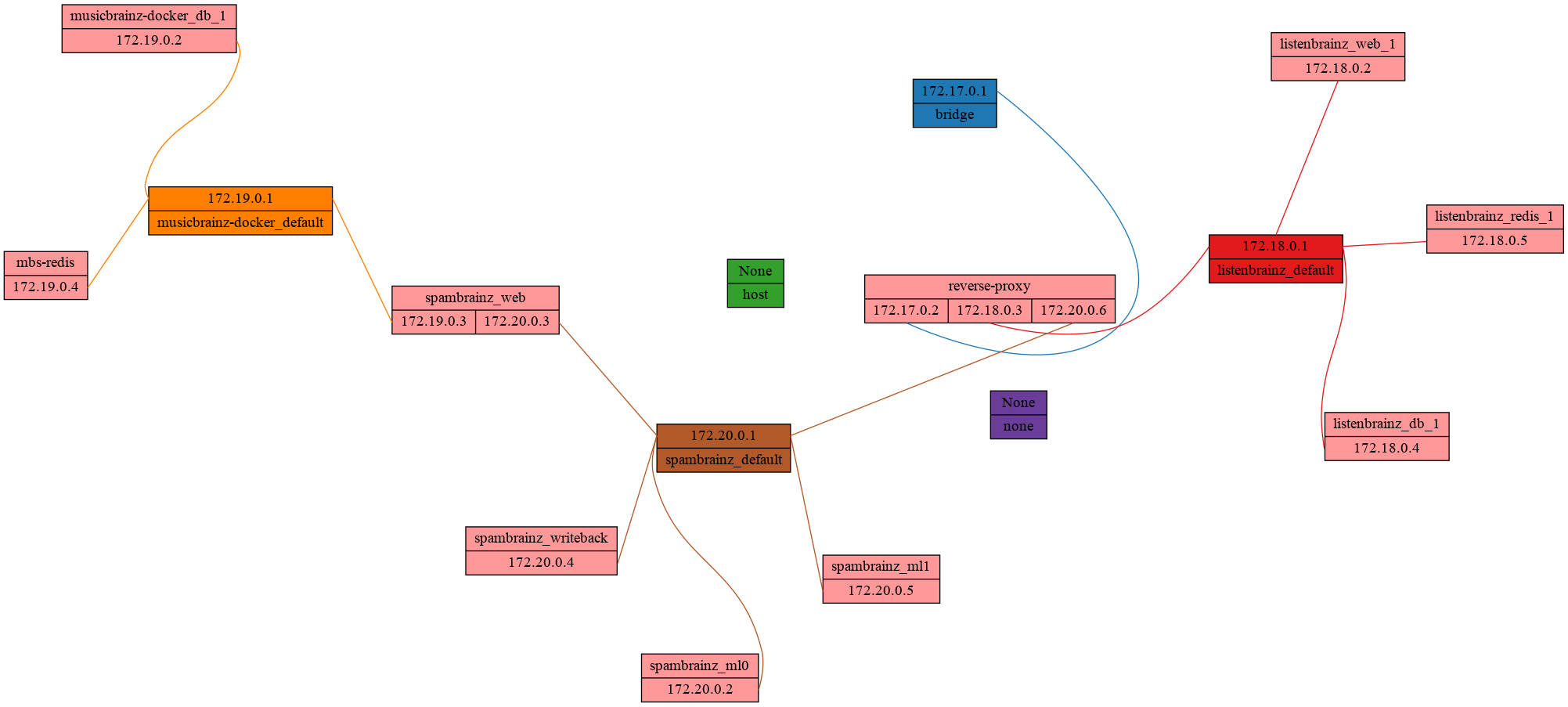

Quickly visualize docker networks with graphviz.

Docker Network Graph Visualize the relationship between Docker networks and containers as a neat graphviz graph. Example Usage usage: docker-net-graph

A foreign language learning aid using a neural network to predict probability of translating foreign words

Langy Langy is a reading-focused foreign language learning aid orientated towards young children. Reading is an activity that every child knows. It is

TRACER: Extreme Attention Guided Salient Object Tracing Network implementation in PyTorch

TRACER: Extreme Attention Guided Salient Object Tracing Network This paper was accepted at AAAI 2022 SA poster session. Datasets All datasets are avai

Internal network honeypot for detecting if an attacker or insider threat scans your network for log4j CVE-2021-44228

log4j-honeypot-flask Internal network honeypot for detecting if an attacker or insider threat scans your network for log4j CVE-2021-44228 This can be

Learning Lightweight Low-Light Enhancement Network using Pseudo Well-Exposed Images

Learning Lightweight Low-Light Enhancement Network using Pseudo Well-Exposed Images This repository contains the implementation of the following paper

The source code of the paper "SHGNN: Structure-Aware Heterogeneous Graph Neural Network"

SHGNN: Structure-Aware Heterogeneous Graph Neural Network The source code and dataset of the paper: SHGNN: Structure-Aware Heterogeneous Graph Neural

Some code of the implements of Geological Modeling Using 3D Pixel-Adaptive and Deformable Convolutional Neural Network

3D-GMPDCNN Geological Modeling Using 3D Pixel-Adaptive and Deformable Convolutional Neural Network PyTorch implementation of "Geological Modeling Usin

Twin-deep neural network for semi-supervised learning of materials properties

Deep Semi-Supervised Teacher-Student Material Synthesizability Prediction Citation: Semi-supervised teacher-student deep neural network for materials

Centroid-UNet is deep neural network model to detect centroids from satellite images.

Centroid UNet - Locating Object Centroids in Aerial/Serial Images Introduction Centroid-UNet is deep neural network model to detect centroids from Aer

Paper and Codes for “Embracing Single Stride 3D Object Detector with Sparse Transformer”

SST: Single-stride Sparse Transformer This is the official implementation of paper: Embracing Single Stride 3D Object Detector with Sparse Transformer

This is the official source code of "BiCAT: Bi-Chronological Augmentation of Transformer for Sequential Recommendation".

BiCAT This is our TensorFlow implementation for the paper: "BiCAT: Sequential Recommendation with Bidirectional Chronological Augmentation of Transfor

The code of "Dependency Learning for Legal Judgment Prediction with a Unified Text-to-Text Transformer".

Code data_preprocess.py: preprocess data for Dependent-T5. parameters.py: define parameters of Dependent-T5. train_tools.py: traning and evaluation co

Event-driven networking engine written in Python.

Twisted For information on changes in this release, see the NEWS file. What is this? Twisted is an event-based framework for internet applications, su

Impacket is a collection of Python classes for working with network protocols.

What is Impacket? Impacket is a collection of Python classes for working with network protocols. Impacket is focused on providing low-level programmat

A network address manipulation library for Python

netaddr A system-independent network address manipulation library for Python 2.7 and 3.5+. (Python 2.7 and 3.5 support is deprecated). Provides suppor

InDuDoNet+: A Model-Driven Interpretable Dual Domain Network for Metal Artifact Reduction in CT Images

InDuDoNet+: A Model-Driven Interpretable Dual Domain Network for Metal Artifact Reduction in CT Images Hong Wang, Yuexiang Li, Haimiao Zhang, Deyu Men

Network Compression via Central Filter

Network Compression via Central Filter Environments The code has been tested in the following environments: Python 3.8 PyTorch 1.8.1 cuda 10.2 torchsu

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts

t5-japanese Codes to pre-train T5 (Text-to-Text Transfer Transformer) models pre-trained on Japanese web texts. The following is a list of models that

A PyTorch library and evaluation platform for end-to-end compression research

CompressAI CompressAI (compress-ay) is a PyTorch library and evaluation platform for end-to-end compression research. CompressAI currently provides: c

Multi-vendor library to simplify CLI connections to network devices

Netmiko Multi-vendor library to simplify CLI connections to network devices Why Netmiko? Network automation to screen-scraping devices is primarily co

A Vision Transformer approach that uses concatenated query and reference images to learn the relationship between query and reference images directly.

A Vision Transformer approach that uses concatenated query and reference images to learn the relationship between query and reference images directly.

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation [arxiv] This is the official repository for CDTrans: Cross-domain Transformer for

PyTorch implementation of a collections of scalable Video Transformer Benchmarks.

PyTorch implementation of Video Transformer Benchmarks This repository is mainly built upon Pytorch and Pytorch-Lightning. We wish to maintain a colle

Continuous Augmented Positional Embeddings (CAPE) implementation for PyTorch

PyTorch implementation of Continuous Augmented Positional Embeddings (CAPE), by Likhomanenko et al. Enhance your Transformer positional embeddings with easy-to-use augmentations!

Synthetic Data Generation for tabular, relational and time series data.

An Open Source Project from the Data to AI Lab, at MIT Website: https://sdv.dev Documentation: https://sdv.dev/SDV User Guides Developer Guides Github

![[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)](https://github.com/ytongbai/ViTs-vs-CNNs/raw/main/resources/teaser.png)

[NeurIPS 2021]: Are Transformers More Robust Than CNNs? (Pytorch implementation & checkpoints)

Are Transformers More Robust Than CNNs? Pytorch implementation for NeurIPS 2021 Paper: Are Transformers More Robust Than CNNs? Our implementation is b

GTK and Python based, system performance and usage monitoring tool

System Monitoring Center GTK3 and Python 3 based, system performance and usage monitoring tool. Features: Detailed system performance and usage usage

Code for ShadeGAN (NeurIPS2021) A Shading-Guided Generative Implicit Model for Shape-Accurate 3D-Aware Image Synthesis

A Shading-Guided Generative Implicit Model for Shape-Accurate 3D-Aware Image Synthesis Project Page | Paper A Shading-Guided Generative Implicit Model

Embracing Single Stride 3D Object Detector with Sparse Transformer

SST: Single-stride Sparse Transformer This is the official implementation of paper: Embracing Single Stride 3D Object Detector with Sparse Transformer

Implementation of Heterogeneous Graph Attention Network

HetGAN Implementation of Heterogeneous Graph Attention Network This is the code repository of paper "Prediction of Metro Ridership During the COVID-19

A Dynamic Residual Self-Attention Network for Lightweight Single Image Super-Resolution

DRSAN A Dynamic Residual Self-Attention Network for Lightweight Single Image Super-Resolution Karam Park, Jae Woong Soh, and Nam Ik Cho Environments U

The source code for Adaptive Kernel Graph Neural Network at AAAI2022

AKGNN The source code for Adaptive Kernel Graph Neural Network at AAAI2022. Please cite our paper if you think our work is helpful to you: @inproceedi

Official implementation of "A Shared Representation for Photorealistic Driving Simulators" in PyTorch.

A Shared Representation for Photorealistic Driving Simulators The official code for the paper: "A Shared Representation for Photorealistic Driving Sim

Implementation of the paper Recurrent Glimpse-based Decoder for Detection with Transformer.

REGO-Deformable DETR By Zhe Chen, Jing Zhang, and Dacheng Tao. This repository is the implementation of the paper Recurrent Glimpse-based Decoder for

A new video text spotting framework with Transformer

TransVTSpotter: End-to-end Video Text Spotter with Transformer Introduction A Multilingual, Open World Video Text Dataset and End-to-end Video Text Sp

K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce (EMNLP Founding 2021)

Introduction K-PLUG: Knowledge-injected Pre-trained Language Model for Natural Language Understanding and Generation in E-Commerce. Installation PyTor

State-of-the-art NLP through transformer models in a modular design and consistent APIs.

Trapper (Transformers wRAPPER) Trapper is an NLP library that aims to make it easier to train transformer based models on downstream tasks. It wraps h

A modular application for performing anomaly detection in networks

Deep-Learning-Models-for-Network-Annomaly-Detection The modular app consists for mainly three annomaly detection algorithms. The system supports model

SimMIM: A Simple Framework for Masked Image Modeling

SimMIM By Zhenda Xie*, Zheng Zhang*, Yue Cao*, Yutong Lin, Jianmin Bao, Zhuliang Yao, Qi Dai and Han Hu*. This repo is the official implementation of

An end to end ASR Transformer model training repo

END TO END ASR TRANSFORMER 本项目基于transformer 6*encoder+6*decoder的基本结构构造的端到端的语音识别系统 Model Instructions 1.数据准备: 自行下载数据,遵循文件结构如下: ├── data │ ├── train │

RaceBERT -- A transformer based model to predict race and ethnicty from names

RaceBERT -- A transformer based model to predict race and ethnicty from names Installation pip install racebert Using a virtual environment is highly

Pytorch code for paper "Image Compressed Sensing Using Non-local Neural Network" TMM 2021.

NL-CSNet-Pytorch Pytorch code for paper "Image Compressed Sensing Using Non-local Neural Network" TMM 2021. Note: this repo only shows the strategy of

Bayesian Deep Learning and Deep Reinforcement Learning for Object Shape Error Response and Correction of Manufacturing Systems

Bayesian Deep Learning for Manufacturing 2.0 (dlmfg) Object Shape Error Response (OSER) Digital Lifecycle Management - In Process Quality Improvement

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design"

Unofficial implementation of "Coordinate Attention for Efficient Mobile Network Design". CoordAttention tensorflow slim

An Implementation of Transformer in Transformer in TensorFlow for image classification, attention inside local patches

Transformer-in-Transformer An Implementation of the Transformer in Transformer paper by Han et al. for image classification, attention inside local pa

Send files to your friends over network! (100mb max)

PyServed v2.0.1 Made by Shaurya Pratap Singh Installation Using pip(for stable releases.) - $ pip install pyserved Using Git (for latest updates) -

Federated learning on graph, especially on graph neural networks (GNNs), knowledge graph, and private GNN.

Federated learning on graph, especially on graph neural networks (GNNs), knowledge graph, and private GNN.

Generative Handwriting using LSTM Mixture Density Network with TensorFlow

Generative Handwriting Demo using TensorFlow An attempt to implement the random handwriting generation portion of Alex Graves' paper. See my blog post

The Simplest DCGAN Implementation

DCGAN in TensorLayer This is the TensorLayer implementation of Deep Convolutional Generative Adversarial Networks. Looking for Text to Image Synthesis

Unsupervised Image to Image Translation with Generative Adversarial Networks

Unsupervised Image to Image Translation with Generative Adversarial Networks Paper: Unsupervised Image to Image Translation with Generative Adversaria

The implementation code for "DAGAN: Deep De-Aliasing Generative Adversarial Networks for Fast Compressed Sensing MRI Reconstruction"

DAGAN This is the official implementation code for DAGAN: Deep De-Aliasing Generative Adversarial Networks for Fast Compressed Sensing MRI Reconstruct

Neural Network to colorize grayscale images

#colornet Neural Network to colorize grayscale images Results Grayscale Prediction Ground Truth Eiji K used colornet for anime colorization Sources Au