815 Repositories

Python transformer-encoder Libraries

VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training

Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training [Arxiv] VideoMAE: Masked Autoencoders are Data-Efficient Learne

Temporally Efficient Vision Transformer for Video Instance Segmentation, CVPR 2022, Oral

Temporally Efficient Vision Transformer for Video Instance Segmentation Temporally Efficient Vision Transformer for Video Instance Segmentation (CVPR

TopFormer: Token Pyramid Transformer for Mobile Semantic Segmentation, CVPR2022

TopFormer: Token Pyramid Transformer for Mobile Semantic Segmentation Paper Links: TopFormer: Token Pyramid Transformer for Mobile Semantic Segmentati

"MST++: Multi-stage Spectral-wise Transformer for Efficient Spectral Reconstruction" (CVPRW 2022) & (Winner of NTIRE 2022 Challenge on Spectral Reconstruction from RGB)

MST++: Multi-stage Spectral-wise Transformer for Efficient Spectral Reconstruction (CVPRW 2022) Yuanhao Cai, Jing Lin, Zudi Lin, Haoqian Wang, Yulun Z

CVPR2022 (Oral) - Rethinking Semantic Segmentation: A Prototype View

Rethinking Semantic Segmentation: A Prototype View Rethinking Semantic Segmentation: A Prototype View, Tianfei Zhou, Wenguan Wang, Ender Konukoglu and

Stratified Transformer for 3D Point Cloud Segmentation (CVPR 2022)

Stratified Transformer for 3D Point Cloud Segmentation Xin Lai*, Jianhui Liu*, Li Jiang, Liwei Wang, Hengshuang Zhao, Shu Liu, Xiaojuan Qi, Jiaya Jia

Official implementation for "Style Transformer for Image Inversion and Editing" (CVPR 2022)

Style Transformer for Image Inversion and Editing (CVPR2022) https://arxiv.org/abs/2203.07932 Existing GAN inversion methods fail to provide latent co

Implementation of Deformable Attention in Pytorch from the paper "Vision Transformer with Deformable Attention"

Deformable Attention Implementation of Deformable Attention from this paper in Pytorch, which appears to be an improvement to what was proposed in DET

Lyrics generation with GPT2-based Transformer

HuggingArtists - Train a model to generate lyrics Create AI-Artist in just 5 minutes! 🚀 Run the demo notebook to train 🚀 Run the GUI demo to test Di

Cross-view Transformers for real-time Map-view Semantic Segmentation (CVPR 2022 Oral)

Cross View Transformers This repository contains the source code and data for our paper: Cross-view Transformers for real-time Map-view Semantic Segme

Voxel Set Transformer: A Set-to-Set Approach to 3D Object Detection from Point Clouds (CVPR 2022)

Voxel Set Transformer: A Set-to-Set Approach to 3D Object Detection from Point Clouds (CVPR2022)[paper] Authors: Chenhang He, Ruihuang Li, Shuai Li, L

Implementation of the Transformer variant proposed in "Transformer Quality in Linear Time"

FLASH - Pytorch Implementation of the Transformer variant proposed in the paper Transformer Quality in Linear Time Install $ pip install FLASH-pytorch

MAT: Mask-Aware Transformer for Large Hole Image Inpainting

MAT: Mask-Aware Transformer for Large Hole Image Inpainting (CVPR2022, Oral) Wenbo Li, Zhe Lin, Kun Zhou, Lu Qi, Yi Wang, Jiaya Jia [Paper] News This

Official source code of Fast Point Transformer, CVPR 2022

Fast Point Transformer Project Page | Paper This repository contains the official source code and data for our paper: Fast Point Transformer Chunghyun

![[ACL 2022] LinkBERT: A Knowledgeable Language Model 😎 Pretrained with Document Links](https://github.com/michiyasunaga/LinkBERT/raw/main/figs/overview.png)

[ACL 2022] LinkBERT: A Knowledgeable Language Model 😎 Pretrained with Document Links

LinkBERT: A Knowledgeable Language Model Pretrained with Document Links This repo provides the model, code & data of our paper: LinkBERT: Pretraining

Generate images from texts. In Russian

ruDALL-E Generate images from texts pip install rudalle==1.1.0rc0 🤗 HF Models: ruDALL-E Malevich (XL) ruDALL-E Emojich (XL) (readme here) ruDALL-E S

The official implementation of Autoregressive Image Generation using Residual Quantization (CVPR '22)

Autoregressive Image Generation using Residual Quantization (CVPR 2022) The official implementation of "Autoregressive Image Generation using Residual

RuDOLPH: One Hyper-Modal Transformer can be creative as DALL-E and smart as CLIP

[Paper] [Хабр] [Model Card] [Colab] [Kaggle] RuDOLPH 🦌 🎄 ☃️ One Hyper-Modal Transformer can be creative as DALL-E and smart as CLIP Russian Diffusio

Official codebase used to develop Vision Transformer, MLP-Mixer, LiT and more.

Big Vision This codebase is designed for training large-scale vision models on Cloud TPU VMs. It is based on Jax/Flax libraries, and uses tf.data and

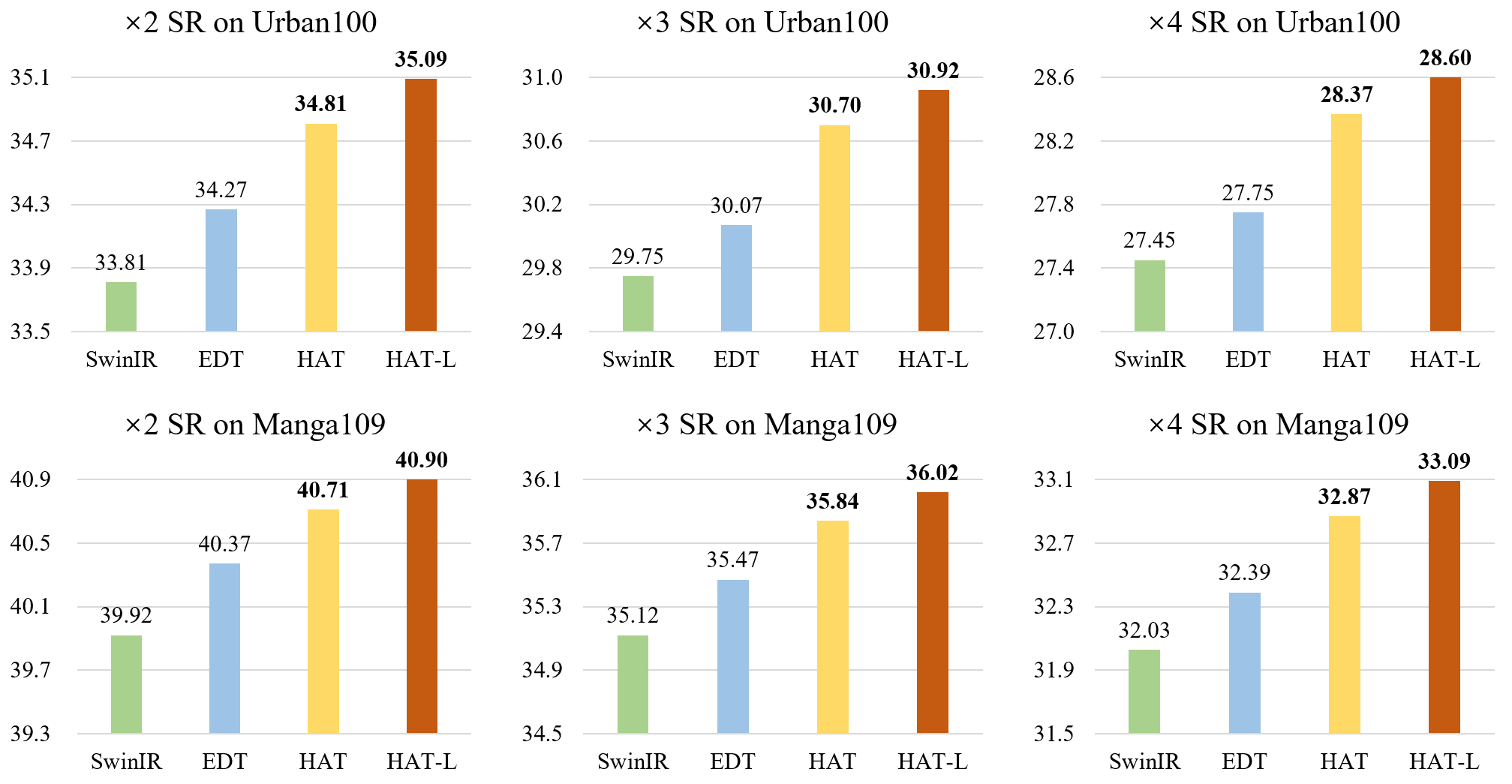

Activating More Pixels in Image Super-Resolution Transformer

HAT [Paper Link] Activating More Pixels in Image Super-Resolution Transformer Xiangyu Chen, Xintao Wang, Jiantao Zhou and Chao Dong BibTeX @article{ch

BankNote-Net: Open dataset and encoder model for assistive currency recognition

BankNote-Net: Open Dataset for Assistive Currency Recognition Millions of people around the world have low or no vision. Assistive software applicatio

Building a real-time environment using webcam frame division in OpenCV and classify cropped images using a fine-tuned vision transformers on hybryd datasets samples for facial emotion recognition.

Visual Transformer for Facial Emotion Recognition (FER) This project has the aim to build an efficient Visual Transformer for the Facial Emotion Recog

Video Frame Interpolation with Transformer (CVPR2022)

VFIformer Official PyTorch implementation of our CVPR2022 paper Video Frame Interpolation with Transformer Dependencies python = 3.8 pytorch = 1.8.0

Adaptive FNO transformer - official Pytorch implementation

Adaptive Fourier Neural Operators: Efficient Token Mixers for Transformers This repository contains PyTorch implementation of the Adaptive Fourier Neu

Implementation of ETSformer, state of the art time-series Transformer, in Pytorch

ETSformer - Pytorch Implementation of ETSformer, state of the art time-series Transformer, in Pytorch Install $ pip install etsformer-pytorch Usage im

Incremental Transformer Structure Enhanced Image Inpainting with Masking Positional Encoding (CVPR2022)

Incremental Transformer Structure Enhanced Image Inpainting with Masking Positional Encoding by Qiaole Dong*, Chenjie Cao*, Yanwei Fu Paper and Supple

The code for our paper submitted to RAL/IROS 2022: OverlapTransformer: An Efficient and Rotation-Invariant Transformer Network for LiDAR-Based Place Recognition.

OverlapTransformer The code for our paper submitted to RAL/IROS 2022: OverlapTransformer: An Efficient and Rotation-Invariant Transformer Network for

A Persian Image Captioning model based on Vision Encoder Decoder Models of the transformers🤗.

Persian-Image-Captioning We fine-tuning the Vision Encoder Decoder Model for the task of image captioning on the coco-flickr-farsi dataset. The implem

![[CVPR 2022] TransEditor: Transformer-Based Dual-Space GAN for Highly Controllable Facial Editing](https://github.com/BillyXYB/TransEditor/raw/main/resources/teaser_change_order.png)

[CVPR 2022] TransEditor: Transformer-Based Dual-Space GAN for Highly Controllable Facial Editing

TransEditor: Transformer-Based Dual-Space GAN for Highly Controllable Facial Editing (CVPR 2022) This repository provides the official PyTorch impleme

As-ViT: Auto-scaling Vision Transformers without Training

As-ViT: Auto-scaling Vision Transformers without Training [PDF] Wuyang Chen, Wei Huang, Xianzhi Du, Xiaodan Song, Zhangyang Wang, Denny Zhou In ICLR 2

(CVPR 2022) Pytorch implementation of "Self-supervised transformers for unsupervised object discovery using normalized cut"

(CVPR 2022) TokenCut Pytorch implementation of Tokencut: Self-supervised Transformers for Unsupervised Object Discovery using Normalized Cut Yangtao W

KoCLIP: Korean port of OpenAI CLIP, in Flax

KoCLIP This repository contains code for KoCLIP, a Korean port of OpenAI's CLIP. This project was conducted as part of Hugging Face's Flax/JAX communi

This is a pytorch implementation for the BST model from Alibaba https://arxiv.org/pdf/1905.06874.pdf

Behavior-Sequence-Transformer-Pytorch This is a pytorch implementation for the BST model from Alibaba https://arxiv.org/pdf/1905.06874.pdf This model

Official Implementation of "Third Time's the Charm? Image and Video Editing with StyleGAN3" https://arxiv.org/abs/2201.13433

Third Time's the Charm? Image and Video Editing with StyleGAN3 Yuval Alaluf*, Or Patashnik*, Zongze Wu, Asif Zamir, Eli Shechtman, Dani Lischinski, Da

PyTorch implementation of Anomaly Transformer: Time Series Anomaly Detection with Association Discrepancy

Anomaly Transformer in PyTorch This is an implementation of Anomaly Transformer: Time Series Anomaly Detection with Association Discrepancy. This pape

Self-Supervised Vision Transformers Learn Visual Concepts in Histopathology (LMRL Workshop, NeurIPS 2021)

Self-Supervised Vision Transformers Learn Visual Concepts in Histopathology Self-Supervised Vision Transformers Learn Visual Concepts in Histopatholog

.png)

Contextual Attention Network: Transformer Meets U-Net

Contextual Attention Network: Transformer Meets U-Net Contexual attention network for medical image segmentation with state of the art results on skin

SciFive: a text-text transformer model for biomedical literature

SciFive SciFive provided a Text-Text framework for biomedical language and natural language in NLP. Under the T5's framework and desrbibed in the pape

Rethinking Portrait Matting with Privacy Preserving

Rethinking Portrait Matting with Privacy Preserving This is the official repository of the paper Rethinking Portrait Matting with Privacy Preserving.

[CVPR 2022] Official Pytorch code for OW-DETR: Open-world Detection Transformer

OW-DETR: Open-world Detection Transformer (CVPR 2022) [Paper] Akshita Gupta*, Sanath Narayan*, K J Joseph, Salman Khan, Fahad Shahbaz Khan, Mubarak Sh

Multilingual Emotion classification using BERT (fine-tuning). Published at the WASSA workshop (ACL2022).

XLM-EMO: Multilingual Emotion Prediction in Social Media Text Abstract Detecting emotion in text allows social and computational scientists to study h

Official Implementation of DE-DETR and DELA-DETR in "Towards Data-Efficient Detection Transformers"

DE-DETRs By Wen Wang, Jing Zhang, Yang Cao, Yongliang Shen, and Dacheng Tao This repository is an official implementation of DE-DETR and DELA-DETR in

![[CVPR22] Official codebase of Semantic Segmentation by Early Region Proxy.](https://github.com/YiF-Zhang/RegionProxy/raw/master/.github/perf-gflops-param.jpg)

[CVPR22] Official codebase of Semantic Segmentation by Early Region Proxy.

RegionProxy Figure 2. Performance vs. GFLOPs on ADE20K val split. Semantic Segmentation by Early Region Proxy Yifan Zhang, Bo Pang, Cewu Lu CVPR 2022

Official Implementation of DE-CondDETR and DELA-CondDETR in "Towards Data-Efficient Detection Transformers"

DE-DETRs By Wen Wang, Jing Zhang, Yang Cao, Yongliang Shen, and Dacheng Tao This repository is an official implementation of DE-CondDETR and DELA-Cond

Anomaly Transformer: Time Series Anomaly Detection with Association Discrepancy" (ICLR 2022 Spotlight)

About Code release for Anomaly Transformer: Time Series Anomaly Detection with Association Discrepancy (ICLR 2022 Spotlight)

![[ICCV2021] 3DVG-Transformer: Relation Modeling for Visual Grounding on Point Clouds](https://github.com/zlccccc/3DVG-Transformer/raw/main/demo/Model.png)

[ICCV2021] 3DVG-Transformer: Relation Modeling for Visual Grounding on Point Clouds

3DVG-Transformer This repository is for the ICCV 2021 paper "3DVG-Transformer: Relation Modeling for Visual Grounding on Point Clouds" Our method "3DV

Code for the SIGIR 2022 paper "Hybrid Transformer with Multi-level Fusion for Multimodal Knowledge Graph Completion"

MKGFormer Code for the SIGIR 2022 paper "Hybrid Transformer with Multi-level Fusion for Multimodal Knowledge Graph Completion" Model Architecture Illu

SIGIR'22 paper: Axiomatically Regularized Pre-training for Ad hoc Search

Introduction This codebase contains source-code of the Python-based implementation (ARES) of our SIGIR 2022 paper. Chen, Jia, et al. "Axiomatically Re

Implementation of the GVP-Transformer, which was used in the paper "Learning inverse folding from millions of predicted structures" for de novo protein design alongside Alphafold2

GVP Transformer (wip) Implementation of the GVP-Transformer, which was used in the paper Learning inverse folding from millions of predicted structure

Official Implementation of HRDA: Context-Aware High-Resolution Domain-Adaptive Semantic Segmentation

HRDA: Context-Aware High-Resolution Domain-Adaptive Semantic Segmentation by Lukas Hoyer, Dengxin Dai, and Luc Van Gool [Arxiv] [Paper] Overview Unsup

PSTR: End-to-End One-Step Person Search With Transformers (CVPR2022)

PSTR (CVPR2022) This code is an official implementation of "PSTR: End-to-End One-Step Person Search With Transformers (CVPR2022)". End-to-end one-step

The official code of LM-Debugger, an interactive tool for inspection and intervention in transformer-based language models.

LM-Debugger is an open-source interactive tool for inspection and intervention in transformer-based language models. This repository includes the code

Unofficial Implement PU-Transformer

PU-Transformer-pytorch Pytorch unofficial implementation of PU-Transformer (PU-Transformer: Point Cloud Upsampling Transformer) https://arxiv.org/abs/

The repo for the paper "I3CL: Intra- and Inter-Instance Collaborative Learning for Arbitrary-shaped Scene Text Detection".

I3CL: Intra- and Inter-Instance Collaborative Learning for Arbitrary-shaped Scene Text Detection Updates | Introduction | Results | Usage | Citation |

![[arXiv22] Disentangled Representation Learning for Text-Video Retrieval](https://github.com/foolwood/DRL/raw/main/demo/pipeline.png)

[arXiv22] Disentangled Representation Learning for Text-Video Retrieval

Disentangled Representation Learning for Text-Video Retrieval This is a PyTorch implementation of the paper Disentangled Representation Learning for T

This repository contains the data and code for the paper "Diverse Text Generation via Variational Encoder-Decoder Models with Gaussian Process Priors" (SPNLP@ACL2022)

GP-VAE This repository provides datasets and code for preprocessing, training and testing models for the paper: Diverse Text Generation via Variationa

![[CVPR 2022]](https://github.com/VITA-Group/Diverse-ViT/raw/main/Figs/Diversity_overview.png)

[CVPR 2022] "The Principle of Diversity: Training Stronger Vision Transformers Calls for Reducing All Levels of Redundancy" by Tianlong Chen, Zhenyu Zhang, Yu Cheng, Ahmed Awadallah, Zhangyang Wang

The Principle of Diversity: Training Stronger Vision Transformers Calls for Reducing All Levels of Redundancy Codes for this paper: [CVPR 2022] The Pr

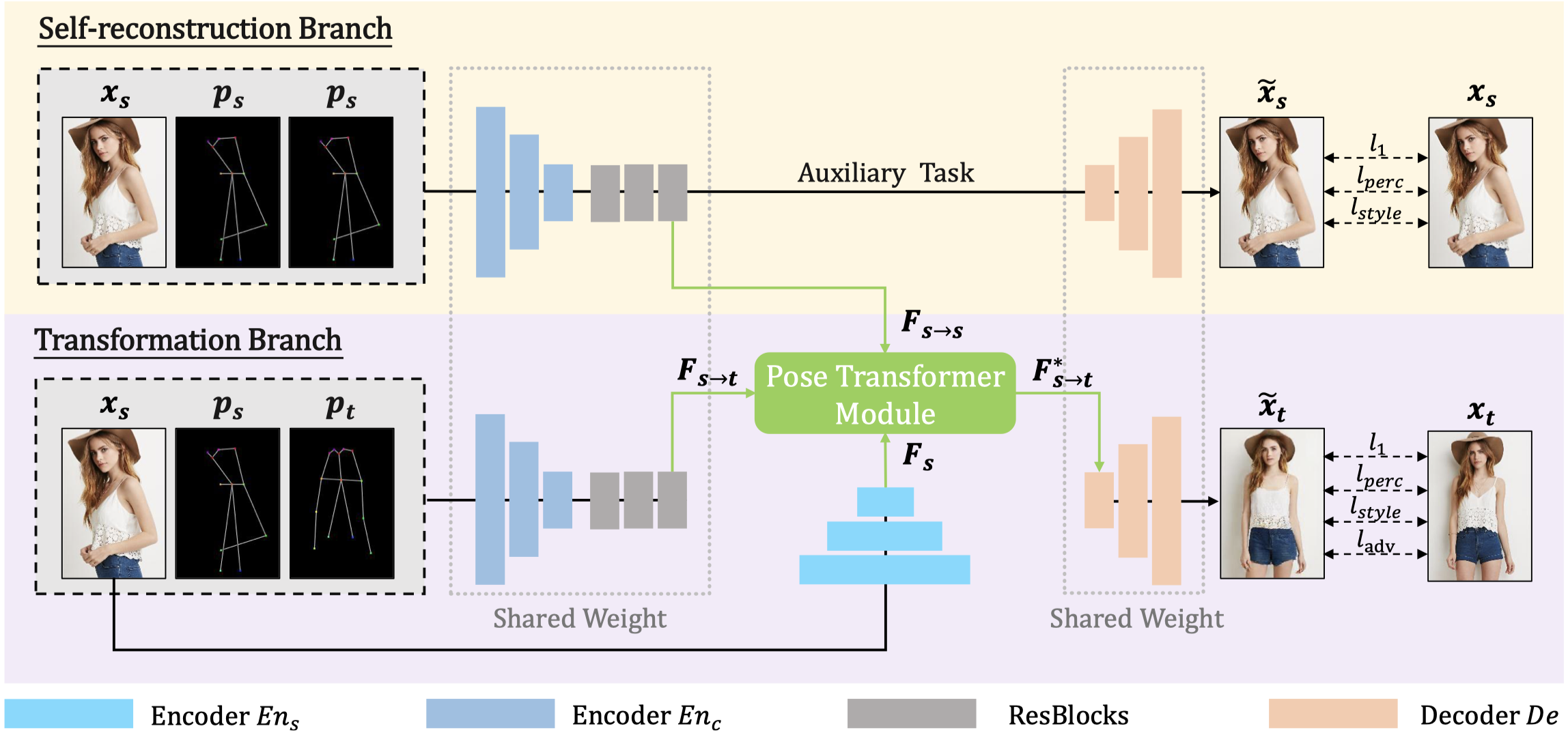

Exploring the Dual-task Correlation for Pose Guided Person Image Generation

Dual-task Pose Transformer Network The source code for our paper "Exploring Dual-task Correlation for Pose Guided Person Image Generation“ (CVPR2022)

Transformer Huffman coding - Complete Huffman coding through transformer

Transformer_Huffman_coding Complete Huffman coding through transformer 2022/2/19

SmallInitEmb - LayerNorm(SmallInit(Embedding)) in a Transformer to improve convergence

SmallInitEmb LayerNorm(SmallInit(Embedding)) in a Transformer I find that when t

GeoTransformer - Geometric Transformer for Fast and Robust Point Cloud Registration

Geometric Transformer for Fast and Robust Point Cloud Registration PyTorch imple

Accuracy Aligned. Concise Implementation of Swin Transformer

Accuracy Aligned. Concise Implementation of Swin Transformer This repository contains the implementation of Swin Transformer, and the training codes o

A very simple python script to encode and decode PowerShell one-liners.

PowerShell Encoder A very simple python script to encode and decode PowerShell one-liners. I used Raikia's PowerShell encoder ALOT, but one day it wen

This repository contains code from the paper "TTS-GAN: A Transformer-based Time-Series Generative Adversarial Network"

TTS-GAN: A Transformer-based Time-Series Generative Adversarial Network This repository contains code from the paper "TTS-GAN: A Transformer-based Tim

A python package to fine-tune transformer-based models for named entity recognition (NER).

nerblackbox A python package to fine-tune transformer-based language models for named entity recognition (NER). Resources Source Code: https://github.

TorchMD-Net provides state-of-the-art graph neural networks and equivariant transformer neural networks potentials for learning molecular potentials

TorchMD-net TorchMD-Net provides state-of-the-art graph neural networks and equivariant transformer neural networks potentials for learning molecular

Official implementation for paper: Feature-Style Encoder for Style-Based GAN Inversion

Feature-Style Encoder for Style-Based GAN Inversion Official implementation for paper: Feature-Style Encoder for Style-Based GAN Inversion. Code will

Clean and readable code for Decision Transformer: Reinforcement Learning via Sequence Modeling

Minimal implementation of Decision Transformer: Reinforcement Learning via Sequence Modeling in PyTorch for mujoco control tasks in OpenAI gym

Non-Autoregressive Translation with Layer-Wise Prediction and Deep Supervision

Deeply Supervised, Layer-wise Prediction-aware (DSLP) Transformer for Non-autoregressive Neural Machine Translation

This project uses ViT to perform image classification tasks on DATA set CIFAR10.

Vision-Transformer-Multiprocess-DistributedDataParallel-Apex Introduction This project uses ViT to perform image classification tasks on DATA set CIFA

A Novel Plug-in Module for Fine-grained Visual Classification

Pytorch implementation for A Novel Plug-in Module for Fine-Grained Visual Classification. fine-grained visual classification task.

Pytorch implementation of MaskGIT: Masked Generative Image Transformer

Pytorch implementation of MaskGIT: Masked Generative Image Transformer

Code Repository for "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound Classification and Detection"

Hierarchical Token Semantic Audio Transformer Introduction The Code Repository for "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound

Codebase to experiment with a hybrid Transformer that combines conditional sequence generation with regression

Regression Transformer Codebase to experiment with a hybrid Transformer that combines conditional sequence generation with regression . Development se

NeuralForecast is a Python library for time series forecasting with deep learning models

NeuralForecast is a Python library for time series forecasting with deep learning models. It includes benchmark datasets, data-loading utilities, evaluation functions, statistical tests, univariate model benchmarks and SOTA models implemented in PyTorch and PyTorchLightning.

A transformer which can randomly augment VOC format dataset (both image and bbox) online.

VocAug It is difficult to find a script which can augment VOC-format dataset, especially the bbox. Or find a script needs complex requirements so it i

Natural language processing summarizer using 3 state of the art Transformer models: BERT, GPT2, and T5

NLP-Summarizer Natural language processing summarizer using 3 state of the art Transformer models: BERT, GPT2, and T5 This project aimed to provide in

A U-Net combined with a variational auto-encoder that is able to learn conditional distributions over semantic segmentations.

Probabilistic U-Net + **Update** + An improved Model (the Hierarchical Probabilistic U-Net) + LIDC crops is now available. See below. Re-implementatio

Semantic Segmentation Suite in TensorFlow

Semantic Segmentation Suite in TensorFlow. Implement, train, and test new Semantic Segmentation models easily!

Mae segmentation - Reproduction of semantic segmentation using masked autoencoder (mae)

ADE20k Semantic segmentation with MAE Getting started Install the mmsegmentation

The official code repo of "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound Classification and Detection"

Hierarchical Token Semantic Audio Transformer Introduction The Code Repository for "HTS-AT: A Hierarchical Token-Semantic Audio Transformer for Sound

This repo contains the code required to train the multivariate time-series Transformer.

Multi-Variate Time-Series Transformer This repo contains the code required to train the multivariate time-series Transformer. Download the data The No

MoRecon - A tool for reconstructing missing frames in motion capture data.

MoRecon - A tool for reconstructing missing frames in motion capture data.

PyTorch code for BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

An implementation of the "Attention is all you need" paper without extra bells and whistles, or difficult syntax

Simple Transformer An implementation of the "Attention is all you need" paper without extra bells and whistles, or difficult syntax. Note: The only ex

DocEnTr: An end-to-end document image enhancement transformer

DocEnTR Description Pytorch implementation of the paper DocEnTr: An End-to-End Document Image Enhancement Transformer. This model is implemented on to

RuCLIP-SB (Russian Contrastive Language–Image Pretraining SWIN-BERT) is a multimodal model for obtaining images and text similarities and rearranging captions and pictures. Unlike other versions of the model we use BERT for text encoder and SWIN transformer for image encoder.

ruCLIP-SB RuCLIP-SB (Russian Contrastive Language–Image Pretraining SWIN-BERT) is a multimodal model for obtaining images and text similarities and re

Computer Vision Paper Reviews with Key Summary of paper, End to End Code Practice and Jupyter Notebook converted papers

Computer-Vision-Paper-Reviews Computer Vision Paper Reviews with Key Summary along Papers & Codes. Jonathan Choi 2021 The repository provides 100+ Pap

PyTorch implementation of "VRT: A Video Restoration Transformer"

VRT: A Video Restoration Transformer Jingyun Liang, Jiezhang Cao, Yuchen Fan, Kai Zhang, Rakesh Ranjan, Yawei Li, Radu Timofte, Luc Van Gool Computer

This repository contains code accompanying the paper "An End-to-End Chinese Text Normalization Model based on Rule-Guided Flat-Lattice Transformer"

FlatTN This repository contains code accompanying the paper "An End-to-End Chinese Text Normalization Model based on Rule-Guided Flat-Lattice Transfor

Decision Transformer: A brand new Offline RL Pattern

DecisionTransformer_StepbyStep Intro Decision Transformer: A brand new Offline RL Pattern. 这是关于NeurIPS 2021 热门论文Decision Transformer的复现。 👍 原文地址: Deci

Geometric Interpretation of Matrix Square Root and Inverse Square Root

Fast Differentiable Matrix Sqrt Root Geometric Interpretation of Matrix Square Root and Inverse Square Root This repository constains the official Pyt

This repository contains the code for TABS, a 3D CNN-Transformer hybrid automated brain tissue segmentation algorithm using T1w structural MRI scans

This repository contains the code for TABS, a 3D CNN-Transformer hybrid automated brain tissue segmentation algorithm using T1w structural MRI scans. TABS relies on a Res-Unet backbone, with a Vision Transformer embedded between the encoder and decoder layers.

SegTransVAE: Hybrid CNN - Transformer with Regularization for medical image segmentation

SegTransVAE: Hybrid CNN - Transformer with Regularization for medical image segmentation This repo is the official implementation for SegTransVAE. Seg

PyTorch implementation for the paper Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime

Visual Representation Learning with Self-Supervised Attention for Low-Label High-Data Regime Created by Prarthana Bhattacharyya. Disclaimer: This is n

Transformer based SAR image despeckling

Transformer based SAR image despeckling Using the code: The code is stable while using Python 3.6.13, CUDA =10.1 Clone this repository: git clone htt

PyTorch implementation of an end-to-end Handwritten Text Recognition (HTR) system based on attention encoder-decoder networks

AttentionHTR PyTorch implementation of an end-to-end Handwritten Text Recognition (HTR) system based on attention encoder-decoder networks. Scene Text

CvT2DistilGPT2 is an encoder-to-decoder model that was developed for chest X-ray report generation.

CvT2DistilGPT2 Improving Chest X-Ray Report Generation by Leveraging Warm-Starting This repository houses the implementation of CvT2DistilGPT2 from [1

Pytorch implementation of the paper DocEnTr: An End-to-End Document Image Enhancement Transformer.

DocEnTR Description Pytorch implementation of the paper DocEnTr: An End-to-End Document Image Enhancement Transformer. This model is implemented on to

Easy to use and customizable SOTA Semantic Segmentation models with abundant datasets in PyTorch

Semantic Segmentation Easy to use and customizable SOTA Semantic Segmentation models with abundant datasets in PyTorch Features Applicable to followin