Experiments and examples converting Transformers to ONNX

This repository contains experiments and examples on converting different Transformers to ONNX.


PyTorch Implementation of Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers 1 Using Colab Please notic

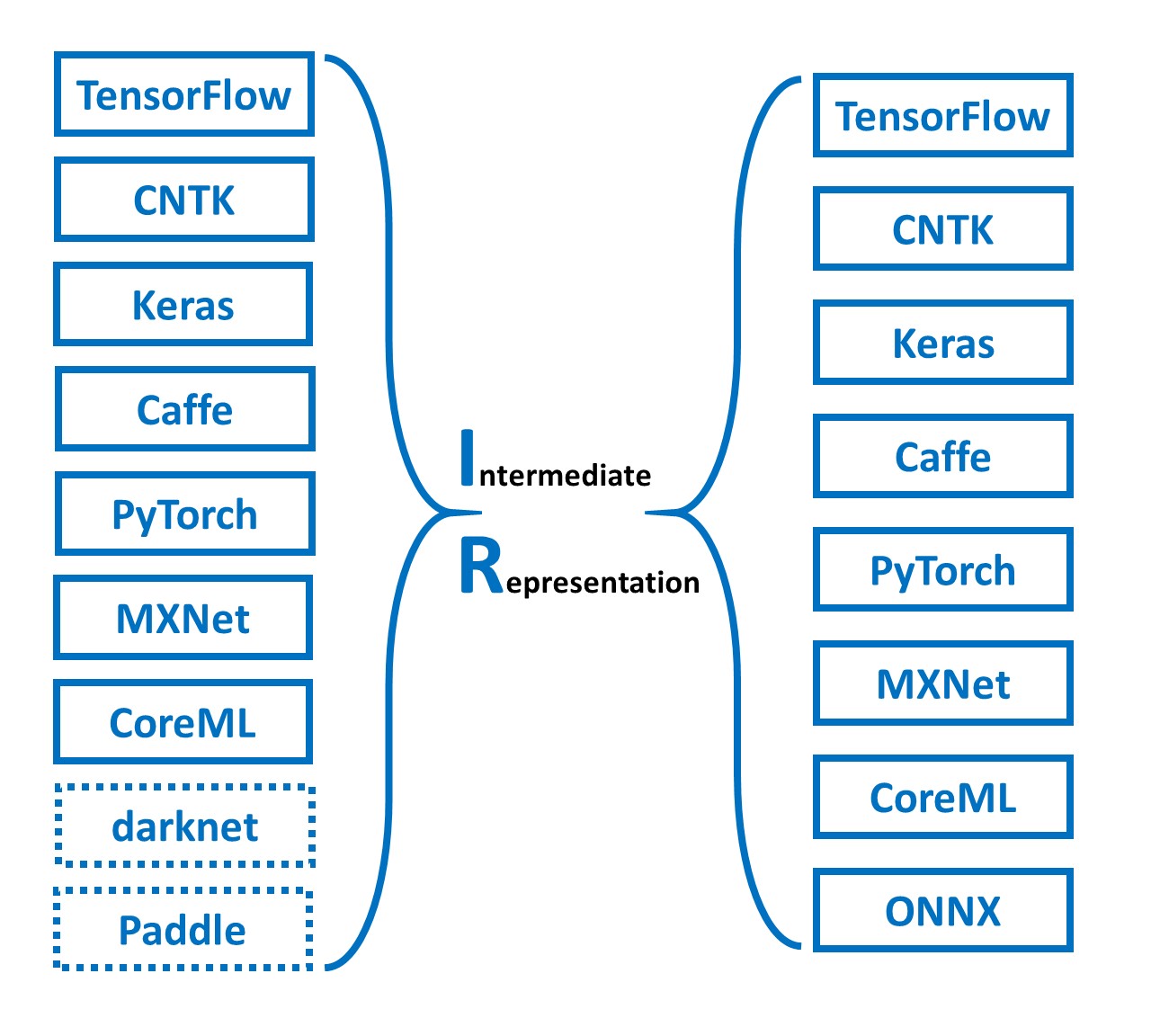

MMdnn MMdnn is a comprehensive and cross-framework tool to convert, visualize and diagnose deep learning (DL) models. The "MM" stands for model manage

PyTorch Infer Utils This package provides simplified exporting of PyTorch models to ONNX and TensorRT, and also gives some base interface for model infer

Library for converting RGB / Grayscale numpy images to base64 and back. Installation pip install -U image_to_base_64 Conversion RGB to base 64 b

PyTorch, ONNX and TensorRT implementation of YOLOv4

tf2onnx converts TensorFlow (tf-1.x or tf-2.x), tf.keras and tflite models to ONNX via command line or python api.

YOLOX is an anchor-free version of YOLO, with a simpler design but better performance! It aims to bridge the gap between research and industrial communities. For more details, please refer to our report on Arxiv.

Introduction YOLOX is an anchor-free version of YOLO, with a simpler design but better performance! It aims to bridge the gap between research and ind

ONNX msg_chn_wacv20 depth completion Python script for performing depth completion from sparse depth and rgb images using the msg_chn_wacv20 model in

```
root@2516503eaa22:/workspace/github/onnx-transformers/seq2seq/bart# python run_onnx_exporter.py --model_name_or_path facebook/bart-base
Downloading: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1.72k/1.72k [00:00<00:00, 2.54MB/s]
Downloading: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 558M/558M [01:00<00:00, 9.16MB/s]
Downloading: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 899k/899k [00:13<00:00, 66.8kB/s]
Downloading: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 456k/456k [00:04<00:00, 109kB/s]
Downloading: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1.36M/1.36M [00:11<00:00, 117kB/s]
2022-12-02 07:20:59 | INFO | __main__ | [run_onnx_exporter.py:207] Exporting model to ONNX
/usr/local/lib/python3.8/dist-packages/transformers/models/bart/modeling_bart.py:213: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  assert attn_weights.size() == (
/usr/local/lib/python3.8/dist-packages/transformers/models/bart/modeling_bart.py:220: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  assert attention_mask.size() == (
/usr/local/lib/python3.8/dist-packages/transformers/models/bart/modeling_bart.py:252: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  assert attn_output.size() == (
/usr/local/lib/python3.8/dist-packages/transformers/models/bart/modeling_bart.py:856: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  if input_shape[-1] > 1:
/usr/local/lib/python3.8/dist-packages/torch/jit/_trace.py:976: TracerWarning: Encountering a list at the output of the tracer might cause the trace to be incorrect, this is only valid if the container structure does not change based on the module's inputs. Consider using a constant container instead (e.g. for `list`, use a `tuple` instead. for `dict`, use a `NamedTuple` instead). If you absolutely need this and know the side effects, pass strict=False to trace() to allow this behavior.
  module._c._create_method_from_trace(
/usr/local/lib/python3.8/dist-packages/torch/jit/_trace.py:154: UserWarning: The .grad attribute of a Tensor that is not a leaf Tensor is being accessed. Its .grad attribute won't be populated during autograd.backward(). If you indeed want the .grad field to be populated for a non-leaf Tensor, use .retain_grad() on the non-leaf Tensor. If you access the non-leaf Tensor by mistake, make sure you access the leaf Tensor instead. See github.com/pytorch/pytorch/pull/30531 for more informations. (Triggered internally at aten/src/ATen/core/TensorBody.h:480.)
  if a.grad is not None:
/usr/local/lib/python3.8/dist-packages/torch/jit/annotations.py:299: UserWarning: TorchScript will treat type annotations of Tensor dtype-specific subtypes as if they are normal Tensors. dtype constraints are not enforced in compilation either.
  warnings.warn("TorchScript will treat type annotations of Tensor "
Traceback (most recent call last):
  File "run_onnx_exporter.py", line 212, in <module>
    main()
  File "run_onnx_exporter.py", line 208, in main
    export_and_validate_model(model, tokenizer, output_name, num_beams, max_length)
  File "run_onnx_exporter.py", line 127, in export_and_validate_model
    torch.onnx.export(
  File "/usr/local/lib/python3.8/dist-packages/torch/onnx/utils.py", line 504, in export
    _export(
  File "/usr/local/lib/python3.8/dist-packages/torch/onnx/utils.py", line 1529, in _export
    graph, params_dict, torch_out = _model_to_graph(
  File "/usr/local/lib/python3.8/dist-packages/torch/onnx/utils.py", line 1131, in _model_to_graph
    example_outputs = _get_example_outputs(model, args)
  File "/usr/local/lib/python3.8/dist-packages/torch/onnx/utils.py", line 1017, in _get_example_outputs
    example_outputs = model(*input_args, **input_kwargs)
  File "/usr/local/lib/python3.8/dist-packages/torch/nn/modules/module.py", line 1190, in _call_impl
    return forward_call(*input, **kwargs)
RuntimeError: forward() Expected a value of type 'Tensor (inferred)' for argument 'num_beams' but instead found type 'int'.
Inferred 'num_beams' to be of type 'Tensor' because it was not annotated with an explicit type.
Position: 3
Value: 4
Declaration: forward(__torch__.bart_onnx.generation_onnx.BARTBeamSearchGenerator self, Tensor input_ids, Tensor attention_mask, Tensor num_beams, Tensor max_length, Tensor decoder_start_token_id) -> Tensor
Cast error details: Unable to cast 4 to Tensor
```

The script https://github.com/philschmid/onnx-transformers/blob/master/seq2seq/bart/run_onnx_exporter.py is not working.

Is it a typing problem?
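The `Declaration:` line in the traceback points to a likely cause. `BARTBeamSearchGenerator` is a TorchScript module whose `forward()` leaves `num_beams`, `max_length` and `decoder_start_token_id` unannotated. TorchScript therefore types those parameters as `Tensor`, while the exporter passes the Python int `4`.

The sketch below reproduces that mismatch with a hypothetical `ToyGenerator`, which is not the repository's class. It then shows two possible workarounds:

- wrap the ints in `torch.tensor(...)` before calling, or
- annotate the parameters as `int`.

It assumes a reasonably recent PyTorch. It does not verify which option suits the ONNX export itself, for example whether the values should become graph inputs or constants.

```python
import torch


class ToyGenerator(torch.nn.Module):
    # Hypothetical stand-in for BARTBeamSearchGenerator: forward() has no type
    # annotations, so TorchScript infers every argument to be a Tensor.
    def forward(self, input_ids, num_beams, max_length):
        return input_ids * num_beams + max_length


class AnnotatedToyGenerator(torch.nn.Module):
    # Same computation, but the scalar arguments are explicitly annotated as int.
    def forward(self, input_ids: torch.Tensor, num_beams: int, max_length: int) -> torch.Tensor:
        return input_ids * num_beams + max_length


def rejects_python_ints() -> bool:
    """Return True if the unannotated scripted module refuses int arguments."""
    scripted = torch.jit.script(ToyGenerator())
    try:
        scripted(torch.tensor([[0, 31414, 2]]), 4, 20)
    except RuntimeError:
        # Same kind of error as the traceback: 'Tensor (inferred)' vs 'int'.
        return True
    return False


input_ids = torch.tensor([[0, 31414, 2]])

# Workaround 1: keep the module unchanged and pass 0-dim tensors instead of ints.
scripted = torch.jit.script(ToyGenerator())
out_tensors = scripted(input_ids, torch.tensor(4), torch.tensor(20))

# Workaround 2: annotate the scalar parameters so TorchScript expects ints.
annotated = torch.jit.script(AnnotatedToyGenerator())
out_ints = annotated(input_ids, 4, 20)

print(rejects_python_ints())  # True
print(out_tensors.tolist())   # [[20, 125676, 28]]
print(out_ints.tolist())      # [[20, 125676, 28]]
```

In the exporter, the first option would presumably mean replacing `num_beams`, `max_length` and the decoder start token id with `torch.tensor(...)` values in the argument tuple handed to `torch.onnx.export`. This is an untested suggestion, not a confirmed fix for that script.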

sam4onnx A very simple tool to rewrite parameters such as attributes and constants for OPs in ONNX models. Simple Attribute and Constant Modifier for

ONNXEncoder An executor that loads ONNX models and embeds documents using the ONNX runtime. Usage via Docker image (recommended) from jina import Flow

ONNX Runtime Web demo is an interactive demo portal showing real use cases running ONNX Runtime Web in VueJS. It currently supports four examples for you to quickly experience the power of ONNX Runtime Web.

PINTO_model_zoo Please read the contents of the LICENSE file located directly under each folder before using the model. My model conversion scripts ar

ONNX-GLPDepth - Python scripts for performing monocular depth estimation using the GLPDepth model in ONNX

Python scripts for performing monocular depth estimation using the PackNet-SfM model in ONNX

sne4onnx A very simple tool for situations where optimization with onnx-simplifier would exceed the Protocol Buffers upper file size limit of 2GB, or

sog4onnx Simple ONNX operation generator. Simple Operation Generator for ONNX. https://github.com/PINTO0309/simple-onnx-processing-tools Key concept V

snc4onnx Simple tool to combine(merge) onnx models. Simple Network Combine Tool for ONNX. https://github.com/PINTO0309/simple-onnx-processing-tools 1.

ColossalAI-Examples This repository contains examples of training models with Co