1361 Repositories

Python 3d-background-model Libraries

Code for KDD'20 "An Efficient Neighborhood-based Interaction Model for Recommendation on Heterogeneous Graph"

Heterogeneous INteract and aggreGatE (GraphHINGE) This is a pytorch implementation of GraphHINGE model. This is the experiment code in the following w

![[Preprint]](https://github.com/VITA-Group/SViTE/raw/main/Figs/res.png)

[Preprint] "Chasing Sparsity in Vision Transformers: An End-to-End Exploration" by Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Chasing Sparsity in Vision Transformers: An End-to-End Exploration Codes for [Preprint] Chasing Sparsity in Vision Transformers: An End-to-End Explora

The official implementation of VAENAR-TTS, a VAE based non-autoregressive TTS model.

VAENAR-TTS This repo contains code accompanying the paper "VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis". Sa

Implemented fully documented Particle Swarm Optimization algorithm (basic model with few advanced features) using Python programming language

Implemented fully documented Particle Swarm Optimization (PSO) algorithm in Python which includes a basic model along with few advanced features such as updating inertia weight, cognitive, social learning coefficients and maximum velocity of the particle.

ML model to classify between cats and dogs

Cats-and-dogs-classifier This is my first ML model which can classify between cats and dogs. Here the accuracy is around 75%, however , the accuracy c

WAGMA-SGD is a decentralized asynchronous SGD for distributed deep learning training based on model averaging.

WAGMA-SGD is a decentralized asynchronous SGD based on wait-avoiding group model averaging. The synchronization is relaxed by making the collectives externally-triggerable, namely, a collective can be initiated without requiring that all the processes enter it. It partially reduces the data within non-overlapping groups of process, improving the parallel scalability.

Simple transformer model for CIFAR10

CIFAR-Transformer Simple transformer model for CIFAR10. Reference: https://www.tensorflow.org/text/tutorials/transformer https://github.com/huggingfac

Use different orders of N-gram model to play Hangman game.

Hangman game The Hangman game is a game whereby one person thinks of a word, which is kept secret from another person, who tries to guess the word one

A simple implementation of N-gram language model.

About A simple implementation of N-gram language model. Requirements numpy Data preparation Corpus Training data for the N-gram model, a text file lik

ElegantRL is featured with lightweight, efficient and stable, for researchers and practitioners.

Lightweight, efficient and stable implementations of deep reinforcement learning algorithms using PyTorch. 🔥

A highly sophisticated sequence-to-sequence model for code generation

CoderX A proof-of-concept AI system by Graham Neubig (June 30, 2021). About CoderX CoderX is a retrieval-based code generation AI system reminiscent o

gym-anm is a framework for designing reinforcement learning (RL) environments that model Active Network Management (ANM) tasks in electricity distribution networks.

gym-anm is a framework for designing reinforcement learning (RL) environments that model Active Network Management (ANM) tasks in electricity distribution networks. It is built on top of the OpenAI Gym toolkit.

This is the code for our KILT leaderboard submission to the T-REx and zsRE tasks. It includes code for training a DPR model then continuing training with RAG.

KGI (Knowledge Graph Induction) for slot filling This is the code for our KILT leaderboard submission to the T-REx and zsRE tasks. It includes code fo

This repository contains the code, data, and models of the paper titled "XL-Sum: Large-Scale Multilingual Abstractive Summarization for 44 Languages" published in Findings of the Association for Computational Linguistics: ACL 2021.

XL-Sum This repository contains the code, data, and models of the paper titled "XL-Sum: Large-Scale Multilingual Abstractive Summarization for 44 Lang

A Multi-modal Model Chinese Spell Checker Released on ACL2021.

ReaLiSe ReaLiSe is a multi-modal Chinese spell checking model. This the office code for the paper Read, Listen, and See: Leveraging Multimodal Informa

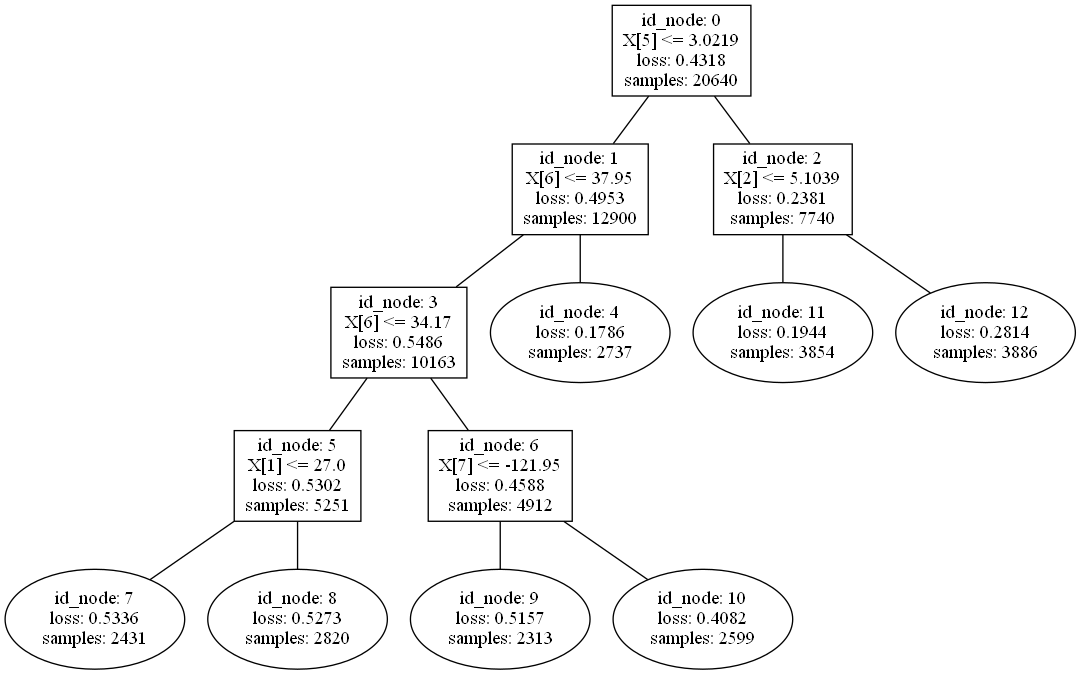

A python library to build Model Trees with Linear Models at the leaves.

A python library to build Model Trees with Linear Models at the leaves.

This is a template for the Non-autoregressive Deep Learning-Based TTS model (in PyTorch).

Non-autoregressive Deep Learning-Based TTS Template This is a template for the Non-autoregressive TTS model. It contains Data Preprocessing Pipeline D

This repository contains the code, data, and models of the paper titled "XL-Sum: Large-Scale Multilingual Abstractive Summarization for 44 Languages" published in Findings of the Association for Computational Linguistics: ACL 2021.

XL-Sum This repository contains the code, data, and models of the paper titled "XL-Sum: Large-Scale Multilingual Abstractive Summarization for 44 Lang

CLIP: Connecting Text and Image (Learning Transferable Visual Models From Natural Language Supervision)

CLIP (Contrastive Language–Image Pre-training) Experiments (Evaluation) Model Dataset Acc (%) ViT-B/32 (Paper) CIFAR100 65.1 ViT-B/32 (Our) CIFAR100 6

Implementation of FitVid video prediction model in JAX/Flax.

FitVid Video Prediction Model Implementation of FitVid video prediction model in JAX/Flax. If you find this code useful, please cite it in your paper:

AllenNLP integration for Shiba: Japanese CANINE model

Allennlp Integration for Shiba allennlp-shiab-model is a Python library that provides AllenNLP integration for shiba-model. SHIBA is an approximate re

Supporting code for the paper "Dangers of Bayesian Model Averaging under Covariate Shift"

Dangers of Bayesian Model Averaging under Covariate Shift This repository contains the code to reproduce the experiments in the paper Dangers of Bayes

CausaLM: Causal Model Explanation Through Counterfactual Language Models

CausaLM: Causal Model Explanation Through Counterfactual Language Models Authors: Amir Feder, Nadav Oved, Uri Shalit, Roi Reichart Abstract: Understan

Unified tracking framework with a single appearance model

Paper: Do different tracking tasks require different appearance model? [ArXiv] (comming soon) [Project Page] (comming soon) UniTrack is a simple and U

Model-based 3D Hand Reconstruction via Self-Supervised Learning, CVPR2021

S2HAND: Model-based 3D Hand Reconstruction via Self-Supervised Learning S2HAND presents a self-supervised 3D hand reconstruction network that can join

This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard evaluation metric to measure the accuracy and robustness of 3D face reconstruction methods from a single image under variations in viewing angle, lighting, and common occlusions.

NoW Evaluation This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard e

Baseline model for "GraspNet-1Billion: A Large-Scale Benchmark for General Object Grasping" (CVPR 2020)

GraspNet Baseline Baseline model for "GraspNet-1Billion: A Large-Scale Benchmark for General Object Grasping" (CVPR 2020). [paper] [dataset] [API] [do

JittorVis - Visual understanding of deep learning model.

JittorVis is a deep neural network computational graph visualization library based on Jittor.

A new GCN model for Point Cloud Analyse

Pytorch Implementation of PointNet and PointNet++ This repo is implementation for VA-GCN in pytorch. Classification (ModelNet10/40) Data Preparation D

TunBERT is the first release of a pre-trained BERT model for the Tunisian dialect using a Tunisian Common-Crawl-based dataset.

TunBERT is the first release of a pre-trained BERT model for the Tunisian dialect using a Tunisian Common-Crawl-based dataset. TunBERT was applied to three NLP downstream tasks: Sentiment Analysis (SA), Tunisian Dialect Identification (TDI) and Reading Comprehension Question-Answering (RCQA)

Sequence model architectures from scratch in PyTorch

This repository implements a variety of sequence model architectures from scratch in PyTorch. Effort has been put to make the code well structured so that it can serve as learning material. The training loop implements the learner design pattern from fast.ai in pure PyTorch, with access to the loop provided through callbacks. Detailed logging and graphs are also provided with python logging and wandb. Additional implementations will be added.

A Fast Monotone Rotating Shallow Water model

pyRSW A Fast Monotone Rotating Shallow Water model How fast? As fast as a sustained 2 Gflop/s per core on a 2.5 GHz cpu (or 2048 Gflop/s with 1024 cor

Machine Learning Model to predict the payment date of an invoice when it gets created in the system.

Payment-Date-Prediction Machine Learning Model to predict the payment date of an invoice when it gets created in the system.

This repository holds the code for the paper "Deep Conditional Gaussian Mixture Model forConstrained Clustering".

Deep Conditional Gaussian Mixture Model for Constrained Clustering. This repository holds the code for the paper Deep Conditional Gaussian Mixture Mod

In this repository, I have developed an end to end Automatic speech recognition project. I have developed the neural network model for automatic speech recognition with PyTorch and used MLflow to manage the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry.

End to End Automatic Speech Recognition In this repository, I have developed an end to end Automatic speech recognition project. I have developed the

Visualize Camera's Pose Using Extrinsic Parameter by Plotting Pyramid Model on 3D Space

extrinsic2pyramid Visualize Camera's Pose Using Extrinsic Parameter by Plotting Pyramid Model on 3D Space Intro A very simple and straightforward modu

Official Pytorch implementation of paper "Reverse Engineering of Generative Models: Inferring Model Hyperparameters from Generated Images"

Reverse_Engineering_GMs Official Pytorch implementation of paper "Reverse Engineering of Generative Models: Inferring Model Hyperparameters from Gener

Code for "LoRA: Low-Rank Adaptation of Large Language Models"

LoRA: Low-Rank Adaptation of Large Language Models This repo contains the implementation of LoRA in GPT-2 and steps to replicate the results in our re

Tensorflow implementation of Swin Transformer model.

Swin Transformer (Tensorflow) Tensorflow reimplementation of Swin Transformer model. Based on Official Pytorch implementation. Requirements tensorflow

A denoising diffusion probabilistic model (DDPM) tailored for conditional generation of protein distograms

Denoising Diffusion Probabilistic Model for Proteins Implementation of Denoising Diffusion Probabilistic Model in Pytorch. It is a new approach to gen

modelvshuman is a Python library to benchmark the gap between human and machine vision

modelvshuman is a Python library to benchmark the gap between human and machine vision. Using this library, both PyTorch and TensorFlow models can be evaluated on 17 out-of-distribution datasets with high-quality human comparison data.

This repository implements and evaluates convolutional networks on the Möbius strip as toy model instantiations of Coordinate Independent Convolutional Networks.

Orientation independent Möbius CNNs This repository implements and evaluates convolutional networks on the Möbius strip as toy model instantiations of

Build tensorflow keras model pipelines in a single line of code. Created by Ram Seshadri. Collaborators welcome. Permission granted upon request.

deep_autoviml Build keras pipelines and models in a single line of code! Table of Contents Motivation How it works Technology Install Usage API Image

On the model-based stochastic value gradient for continuous reinforcement learning

On the model-based stochastic value gradient for continuous reinforcement learning This repository is by Brandon Amos, Samuel Stanton, Denis Yarats, a

JAX code for the paper "Control-Oriented Model-Based Reinforcement Learning with Implicit Differentiation"

Optimal Model Design for Reinforcement Learning This repository contains JAX code for the paper Control-Oriented Model-Based Reinforcement Learning wi

deployment of a hybrid model for automatic weapon detection/ anomaly detection for surveillance applications

Automatic Weapon Detection Deployment of a hybrid model for automatic weapon detection/ anomaly detection for surveillance applications. Loved the pro

Intruder detection systems are common place now, and readily available in industry, but how do they work? They must detect people and large animals, but not generate false alarms in the presence of small animals, changes in lighting, environmental motion such as trees, or melting snow. To work correctly, the system must learn the background, in order to differentiate foreground objects.

Intruder-Detection Intruder detection systems are common place now, and readily available in industry, but how do they work? They must detect people a

Handwritten Text Recognition (HTR) system implemented with TensorFlow (TF) and trained on the IAM off-line HTR dataset. This Neural Network (NN) model recognizes the text contained in the images of segmented words.

Handwritten-Text-Recognition Handwritten Text Recognition (HTR) system implemented with TensorFlow (TF) and trained on the IAM off-line HTR dataset. T

HNECV: Heterogeneous Network Embedding via Cloud model and Variational inference

HNECV This repository provides a reference implementation of HNECV as described in the paper: HNECV: Heterogeneous Network Embedding via Cloud model a

The BCNet related data and inference model.

BCNet This repository includes the some source code and related dataset of paper BCNet: Learning Body and Cloth Shape from A Single Image, ECCV 2020,

Codebase for the Summary Loop paper at ACL2020

Summary Loop This repository contains the code for ACL2020 paper: The Summary Loop: Learning to Write Abstractive Summaries Without Examples. Training

Efficient Lottery Ticket Finding: Less Data is More

The lottery ticket hypothesis (LTH) reveals the existence of winning tickets (sparse but critical subnetworks) for dense networks, that can be trained in isolation from random initialization to match the latter’s accuracies.

Pytorch Implementation of DiffSinger: Diffusion Acoustic Model for Singing Voice Synthesis (TTS Extension)

DiffSinger - PyTorch Implementation PyTorch implementation of DiffSinger: Diffusion Acoustic Model for Singing Voice Synthesis (TTS Extension). Status

Model-free Vehicle Tracking and State Estimation in Point Cloud Sequences

Model-free Vehicle Tracking and State Estimation in Point Cloud Sequences 1. Introduction This project is for paper Model-free Vehicle Tracking and St

Chinese clinical named entity recognition using pre-trained BERT model

Chinese clinical named entity recognition (CNER) using pre-trained BERT model Introduction Code for paper Chinese clinical named entity recognition wi

Protein Language Model

ProteinLM We pretrain protein language model based on Megatron-LM framework, and then evaluate the pretrained model results on TAPE (Tasks Assessing P

simpleT5 is built on top of PyTorch-lightning⚡️ and Transformers🤗 that lets you quickly train your T5 models.

Quickly train T5 models in just 3 lines of code + ONNX support simpleT5 is built on top of PyTorch-lightning ⚡️ and Transformers 🤗 that lets you quic

SparseML is a libraries for applying sparsification recipes to neural networks with a few lines of code, enabling faster and smaller models

SparseML is a toolkit that includes APIs, CLIs, scripts and libraries that apply state-of-the-art sparsification algorithms such as pruning and quantization to any neural network. General, recipe-driven approaches built around these algorithms enable the simplification of creating faster and smaller models for the ML performance community at large.

chen2020iros: Learning an Overlap-based Observation Model for 3D LiDAR Localization.

Overlap-based 3D LiDAR Monte Carlo Localization This repo contains the code for our IROS2020 paper: Learning an Overlap-based Observation Model for 3D

Unofficial TensorFlow implementation of the Keyword Spotting Transformer model

Keyword Spotting Transformer This is the unofficial TensorFlow implementation of the Keyword Spotting Transformer model. This model is used to train o

Run object detection model on the Raspberry Pi

Using TensorFlow Lite with Python is great for embedded devices based on Linux, such as Raspberry Pi.

ProteinBERT is a universal protein language model pretrained on ~106M proteins from the UniRef90 dataset.

ProteinBERT is a universal protein language model pretrained on ~106M proteins from the UniRef90 dataset. Through its Python API, the pretrained model can be fine-tuned on any protein-related task in a matter of minutes. Based on our experiments with a wide range of benchmarks, ProteinBERT usually achieves state-of-the-art performance. ProteinBERT is built on TenforFlow/Keras.

NU-Wave: A Diffusion Probabilistic Model for Neural Audio Upsampling

NU-Wave: A Diffusion Probabilistic Model for Neural Audio Upsampling For Official repo of NU-Wave: A Diffusion Probabilistic Model for Neural Audio Up

A GPT, made only of MLPs, in Jax

MLP GPT - Jax (wip) A GPT, made only of MLPs, in Jax. The specific MLP to be used are gMLPs with the Spatial Gating Units. Working Pytorch implementat

Codes for our IJCAI21 paper: Dialogue Discourse-Aware Graph Model and Data Augmentation for Meeting Summarization

DDAMS This is the pytorch code for our IJCAI 2021 paper Dialogue Discourse-Aware Graph Model and Data Augmentation for Meeting Summarization [Arxiv Pr

Localization of thoracic abnormalities model based on VinBigData (top 1%)

Repository contains the code for 2nd place solution of VinBigData Chest X-ray Abnormalities Detection competition. The goal of competition was to auto

Pytorch Implementation of Google's Parallel Tacotron 2: A Non-Autoregressive Neural TTS Model with Differentiable Duration Modeling

Parallel Tacotron2 Pytorch Implementation of Google's Parallel Tacotron 2: A Non-Autoregressive Neural TTS Model with Differentiable Duration Modeling

Inference code for "StylePeople: A Generative Model of Fullbody Human Avatars" paper. This code is for the part of the paper describing video-based avatars.

NeuralTextures This is repository with inference code for paper "StylePeople: A Generative Model of Fullbody Human Avatars" (CVPR21). This code is for

Train 🤗transformers with DeepSpeed: ZeRO-2, ZeRO-3

Fork from https://github.com/huggingface/transformers/tree/86d5fb0b360e68de46d40265e7c707fe68c8015b/examples/pytorch/language-modeling at 2021.05.17.

시각 장애인을 위한 스마트 지팡이에 활용될 딥러닝 모델 (DL Model Repo)

SmartCane-DL-Model Smart Cane using semantic segmentation 참고한 Github repositoy 🔗 https://github.com/JunHyeok96/Road-Segmentation.git 데이터셋 🔗 https://

A PaddlePaddle version image model zoo.

Paddle-Image-Models English | 简体中文 A PaddlePaddle version image model zoo. Install Package Install by pip: $ pip install ppim Install by wheel package

An ultra fast tiny model for lane detection, using onnx_parser, TensorRTAPI, torch2trt to accelerate. our model support for int8, dynamic input and profiling. (Nvidia-Alibaba-TensoRT-hackathon2021)

Ultra_Fast_Lane_Detection_TensorRT An ultra fast tiny model for lane detection, using onnx_parser, TensorRTAPI to accelerate. our model support for in

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

FNet: Mixing Tokens with Fourier Transforms Pytorch implementation of Fnet : Mixing Tokens with Fourier Transforms. Citation: @misc{leethorp2021fnet,

![[NAACL & ACL 2021] SapBERT: Self-alignment pretraining for BERT.](https://github.com/cambridgeltl/sapbert/raw/main/sapbert_front_graphs_v6.png?raw=true)

[NAACL & ACL 2021] SapBERT: Self-alignment pretraining for BERT.

SapBERT: Self-alignment pretraining for BERT This repo holds code for the SapBERT model presented in our NAACL 2021 paper: Self-Alignment Pretraining

A DeepStack custom model for detecting common objects in dark/night images and videos.

DeepStack_ExDark This repository provides a custom DeepStack model that has been trained and can be used for creating a new object detection API for d

Ever felt tired after preprocessing the dataset, and not wanting to write any code further to train your model? Ever encountered a situation where you wanted to record the hyperparameters of the trained model and able to retrieve it afterward? Models Playground is here to help you do that. Models playground allows you to train your models right from the browser.

Models Playground 🗂️ Upload a Preprocessed Dataset 🌠 Choose whether to perform Classification or Regression 🦹 Enter the Dependent Variable ?

Simple implementation of OpenAI CLIP model in PyTorch.

It was in January of 2021 that OpenAI announced two new models: DALL-E and CLIP, both multi-modality models connecting texts and images in some way. In this article we are going to implement CLIP model from scratch in PyTorch. OpenAI has open-sourced some of the code relating to CLIP model but I found it intimidating and it was far from something short and simple. I also came across a good tutorial inspired by CLIP model on Keras code examples and I translated some parts of it into PyTorch to build this tutorial totally with our beloved PyTorch!

Implementation of Kaneko et al.'s MaskCycleGAN-VC model for non-parallel voice conversion.

MaskCycleGAN-VC Unofficial PyTorch implementation of Kaneko et al.'s MaskCycleGAN-VC (2021) for non-parallel voice conversion. MaskCycleGAN-VC is the

A fast Text-to-Speech (TTS) model. Work well for English, Mandarin/Chinese, Japanese, Korean, Russian and Tibetan (so far). 快速语音合成模型,适用于英语、普通话/中文、日语、韩语、俄语和藏语(当前已测试)。

简体中文 | English 并行语音合成 [TOC] 新进展 2021/04/20 合并 wavegan 分支到 main 主分支,删除 wavegan 分支! 2021/04/13 创建 encoder 分支用于开发语音风格迁移模块! 2021/04/13 softdtw 分支 支持使用 Sof

MILES is a multilingual text simplifier inspired by LSBert - A BERT-based lexical simplification approach proposed in 2018. Unlike LSBert, MILES uses the bert-base-multilingual-uncased model, as well as simple language-agnostic approaches to complex word identification (CWI) and candidate ranking.

MILES Multilingual Lexical Simplifier Explore the docs » Read LSBert Paper · Report Bug · Request Feature About The Project MILES is a multilingual te

This Repostory contains the pretrained DTLN-aec model for real-time acoustic echo cancellation.

This Repostory contains the pretrained DTLN-aec model for real-time acoustic echo cancellation.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

pytorch-kaldi is a project for developing state-of-the-art DNN/RNN hybrid speech recognition systems. The DNN part is managed by pytorch, while feature extraction, label computation, and decoding are performed with the kaldi toolkit.

The PyTorch-Kaldi Speech Recognition Toolkit PyTorch-Kaldi is an open-source repository for developing state-of-the-art DNN/HMM speech recognition sys

Google AI 2018 BERT pytorch implementation

BERT-pytorch Pytorch implementation of Google AI's 2018 BERT, with simple annotation BERT 2018 BERT: Pre-training of Deep Bidirectional Transformers f

Store model history and view/revert changes from admin site.

django-simple-history django-simple-history stores Django model state on every create/update/delete. This app supports the following combinations of D

Ray provides a simple, universal API for building distributed applications.

An open source framework that provides a simple, universal API for building distributed applications. Ray is packaged with RLlib, a scalable reinforcement learning library, and Tune, a scalable hyperparameter tuning library.

Pydantic model support for Django ORM

Pydantic model support for Django ORM

erdantic is a simple tool for drawing entity relationship diagrams (ERDs) for Python data model classes

erdantic is a simple tool for drawing entity relationship diagrams (ERDs) for Python data model classes. Diagrams are rendered using the venerable Graphviz library.

A simple app that provides django integration for RQ (Redis Queue)

Django-RQ Django integration with RQ, a Redis based Python queuing library. Django-RQ is a simple app that allows you to configure your queues in djan

RL and distillation in CARLA using a factorized world model

World on Rails Learning to drive from a world on rails Dian Chen, Vladlen Koltun, Philipp Krähenbühl, arXiv techical report (arXiv 2105.00636) This re

TorchFlare is a simple, beginner-friendly, and easy-to-use PyTorch Framework train your models effortlessly.

TorchFlare TorchFlare is a simple, beginner-friendly and an easy-to-use PyTorch Framework train your models without much effort. It provides an almost

DiffQ performs differentiable quantization using pseudo quantization noise. It can automatically tune the number of bits used per weight or group of weights, in order to achieve a given trade-off between model size and accuracy.

Differentiable Model Compression via Pseudo Quantization Noise DiffQ performs differentiable quantization using pseudo quantization noise. It can auto

ReConsider is a re-ranking model that re-ranks the top-K (passage, answer-span) predictions of an Open-Domain QA Model like DPR (Karpukhin et al., 2020).

ReConsider ReConsider is a re-ranking model that re-ranks the top-K (passage, answer-span) predictions of an Open-Domain QA Model like DPR (Karpukhin

TorchShard is a lightweight engine for slicing a PyTorch tensor into parallel shards

TorchShard is a lightweight engine for slicing a PyTorch tensor into parallel shards. It can reduce GPU memory and scale up the training when the model has massive linear layers (e.g., ViT, BERT and GPT) or huge classes (millions). It has the same API design as PyTorch.

An official implementation for "CLIP4Clip: An Empirical Study of CLIP for End to End Video Clip Retrieval"

The implementation of paper CLIP4Clip: An Empirical Study of CLIP for End to End Video Clip Retrieval. CLIP4Clip is a video-text retrieval model based

PyTorch implementation of the end-to-end coreference resolution model with different higher-order inference methods.

End-to-End Coreference Resolution with Different Higher-Order Inference Methods This repository contains the implementation of the paper: Revealing th

TalkNet 2: Non-Autoregressive Depth-Wise Separable Convolutional Model for Speech Synthesis with Explicit Pitch and Duration Prediction.

TalkNet 2 [WIP] TalkNet 2: Non-Autoregressive Depth-Wise Separable Convolutional Model for Speech Synthesis with Explicit Pitch and Duration Predictio

mbrl-lib is a toolbox for facilitating development of Model-Based Reinforcement Learning algorithms.

mbrl-lib is a toolbox for facilitating development of Model-Based Reinforcement Learning algorithms. It provides easily interchangeable modeling and planning components, and a set of utility functions that allow writing model-based RL algorithms with only a few lines of code.

Implementation for the paper SMPLicit: Topology-aware Generative Model for Clothed People (CVPR 2021)

SMPLicit: Topology-aware Generative Model for Clothed People [Project] [arXiv] License Software Copyright License for non-commercial scientific resear

Source code for AAAI20 "Generating Persona Consistent Dialogues by Exploiting Natural Language Inference".

Generating Persona Consistent Dialogues by Exploiting Natural Language Inference Source code for RCDG model in AAAI20 Generating Persona Consistent Di