7190 Repositories

Python Chrome-dino-deep-Q-learning-pytorch Libraries

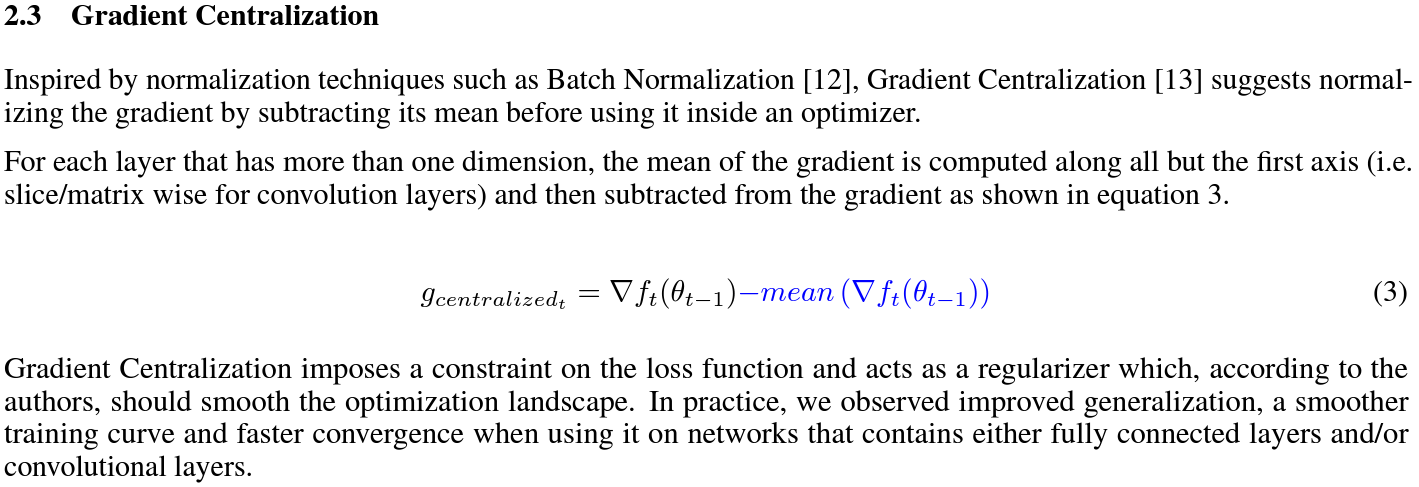

A Non-Autoregressive Transformer based TTS, supporting a family of SOTA transformers with supervised and unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate TTS.

A Non-Autoregressive Transformer based TTS, supporting a family of SOTA transformers with supervised and unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate TTS.

ilpyt: imitation learning library with modular, baseline implementations in Pytorch

ilpyt The imitation learning toolbox (ilpyt) contains modular implementations of common deep imitation learning algorithms in PyTorch, with unified in

Scripts for training an AI to play the endless runner Subway Surfers using a supervised machine learning approach by imitation and a convolutional neural network (CNN) for image classification

About subwAI subwAI - a project for training an AI to play the endless runner Subway Surfers using a supervised machine learning approach by imitation

A Closer Look at Structured Pruning for Neural Network Compression

A Closer Look at Structured Pruning for Neural Network Compression Code used to reproduce experiments in https://arxiv.org/abs/1810.04622. To prune, w

PyTorch Implementation of [1611.06440] Pruning Convolutional Neural Networks for Resource Efficient Inference

PyTorch implementation of [1611.06440 Pruning Convolutional Neural Networks for Resource Efficient Inference] This demonstrates pruning a VGG16 based

Official implementations of EigenDamage: Structured Pruning in the Kronecker-Factored Eigenbasis.

EigenDamage: Structured Pruning in the Kronecker-Factored Eigenbasis This repo contains the official implementations of EigenDamage: Structured Prunin

Code for paper "Energy-Constrained Compression for Deep Neural Networks via Weighted Sparse Projection and Layer Input Masking"

model_based_energy_constrained_compression Code for paper "Energy-Constrained Compression for Deep Neural Networks via Weighted Sparse Projection and

Learning Sparse Neural Networks through L0 regularization

Example implementation of the L0 regularization method described at Learning Sparse Neural Networks through L0 regularization, Christos Louizos, Max W

Distiller is an open-source Python package for neural network compression research.

Wiki and tutorials | Documentation | Getting Started | Algorithms | Design | FAQ Distiller is an open-source Python package for neural network compres

A tutorial on "Bayesian Compression for Deep Learning" published at NIPS (2017).

Code release for "Bayesian Compression for Deep Learning" In "Bayesian Compression for Deep Learning" we adopt a Bayesian view for the compression of

Tutorial for surrogate gradient learning in spiking neural networks

SpyTorch A tutorial on surrogate gradient learning in spiking neural networks Version: 0.4 This repository contains tutorial files to get you started

A PyTorch implementation of Learning to learn by gradient descent by gradient descent

Intro PyTorch implementation of Learning to learn by gradient descent by gradient descent. Run python main.py TODO Initial implementation Toy data LST

OptNet: Differentiable Optimization as a Layer in Neural Networks

OptNet: Differentiable Optimization as a Layer in Neural Networks This repository is by Brandon Amos and J. Zico Kolter and contains the PyTorch sourc

A PyTorch implementation of L-BFGS.

PyTorch-LBFGS: A PyTorch Implementation of L-BFGS Authors: Hao-Jun Michael Shi (Northwestern University) and Dheevatsa Mudigere (Facebook) What is it?

Riemannian Adaptive Optimization Methods with pytorch optim

geoopt Manifold aware pytorch.optim. Unofficial implementation for “Riemannian Adaptive Optimization Methods” ICLR2019 and more. Installation Make sur

On the Variance of the Adaptive Learning Rate and Beyond

RAdam On the Variance of the Adaptive Learning Rate and Beyond We are in an early-release beta. Expect some adventures and rough edges. Table of Conte

lookahead optimizer (Lookahead Optimizer: k steps forward, 1 step back) for pytorch

lookahead optimizer for pytorch PyTorch implement of Lookahead Optimizer: k steps forward, 1 step back Usage: base_opt = torch.optim.Adam(model.parame

functorch is a prototype of JAX-like composable function transforms for PyTorch.

functorch is a prototype of JAX-like composable function transforms for PyTorch.

docTR by Mindee (Document Text Recognition) - a seamless, high-performing & accessible library for OCR-related tasks powered by Deep Learning.

docTR by Mindee (Document Text Recognition) - a seamless, high-performing & accessible library for OCR-related tasks powered by Deep Learning.

GluonMM is a library of transformer models for computer vision and multi-modality research

GluonMM is a library of transformer models for computer vision and multi-modality research. It contains reference implementations of widely adopted baseline models and also research work from Amazon Research.

Pytorch Geometric Tutorials

Pytorch Geometric Tutorials

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

Code for ACL'2021 paper WARP 🌀 Word-level Adversarial ReProgramming

Code for ACL'2021 paper WARP 🌀 Word-level Adversarial ReProgramming. Outperforming `GPT-3` on SuperGLUE Few-Shot text classification.

TensorFlow implementation of "A Simple Baseline for Bayesian Uncertainty in Deep Learning"

TensorFlow implementation of "A Simple Baseline for Bayesian Uncertainty in Deep Learning"

Bunch of optimizer implementations in PyTorch

Bunch of optimizer implementations in PyTorch

![Dense Deep Unfolding Network with 3D-CNN Prior for Snapshot Compressive Imaging, ICCV2021 [PyTorch Code]](https://github.com/jianzhangcs/SCI3D/raw/main/fig/SCI_cropped_page-0001.jpg)

Dense Deep Unfolding Network with 3D-CNN Prior for Snapshot Compressive Imaging, ICCV2021 [PyTorch Code]

Dense Deep Unfolding Network with 3D-CNN Prior for Snapshot Compressive Imaging, ICCV2021 [PyTorch Code]

Code and data form the paper BERT Got a Date: Introducing Transformers to Temporal Tagging

BERT Got a Date: Introducing Transformers to Temporal Tagging Satya Almasian*, Dennis Aumiller*, and Michael Gertz Heidelberg University Contact us vi

A pytorch implementation of the ACL2019 paper "Simple and Effective Text Matching with Richer Alignment Features".

RE2 This is a pytorch implementation of the ACL 2019 paper "Simple and Effective Text Matching with Richer Alignment Features". The original Tensorflo

PyTorch implementation of Glow

glow-pytorch PyTorch implementation of Glow, Generative Flow with Invertible 1x1 Convolutions (https://arxiv.org/abs/1807.03039) Usage: python train.p

PyTorch implementation of SIFT descriptor

This is an differentiable pytorch implementation of SIFT patch descriptor. It is very slow for describing one patch, but quite fast for batch. It can

Unofficial PyTorch implementation of Google AI's VoiceFilter system

VoiceFilter Note from Seung-won (2020.10.25) Hi everyone! It's Seung-won from MINDs Lab, Inc. It's been a long time since I've released this open-sour

GPU-accelerated PyTorch implementation of Zero-shot User Intent Detection via Capsule Neural Networks

GPU-accelerated PyTorch implementation of Zero-shot User Intent Detection via Capsule Neural Networks This repository implements a capsule model Inten

ppo_pytorch_cpp - an implementation of the proximal policy optimization algorithm for the C++ API of Pytorch

PPO Pytorch C++ This is an implementation of the proximal policy optimization algorithm for the C++ API of Pytorch. It uses a simple TestEnvironment t

A Pytorch implementation of "Splitter: Learning Node Representations that Capture Multiple Social Contexts" (WWW 2019).

Splitter ⠀⠀ A PyTorch implementation of Splitter: Learning Node Representations that Capture Multiple Social Contexts (WWW 2019). Abstract Recent inte

Simple Text-Generator with OpenAI gpt-2 Pytorch Implementation

GPT2-Pytorch with Text-Generator Better Language Models and Their Implications Our model, called GPT-2 (a successor to GPT), was trained simply to pre

PyTorch original implementation of Cross-lingual Language Model Pretraining.

XLM NEW: Added XLM-R model. PyTorch original implementation of Cross-lingual Language Model Pretraining. Includes: Monolingual language model pretrain

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context Code in both PyTorch and TensorFlow

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

Adversarial Framework for (non-) Parametric Image Stylisation Mosaics

Fully Adversarial Mosaics (FAMOS) Pytorch implementation of the paper "Copy the Old or Paint Anew? An Adversarial Framework for (non-) Parametric Imag

A PyTorch implementation of the WaveGlow: A Flow-based Generative Network for Speech Synthesis

WaveGlow A PyTorch implementation of the WaveGlow: A Flow-based Generative Network for Speech Synthesis Quick Start: Install requirements: pip install

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

PyTorch Language Model for 1-Billion Word (LM1B / GBW) Dataset

PyTorch Large-Scale Language Model A Large-Scale PyTorch Language Model trained on the 1-Billion Word (LM1B) / (GBW) dataset Latest Results 39.98 Perp

Pervasive Attention: 2D Convolutional Networks for Sequence-to-Sequence Prediction

This is a fork of Fairseq(-py) with implementations of the following models: Pervasive Attention - 2D Convolutional Neural Networks for Sequence-to-Se

A Fast Sequence Transducer Implementation with PyTorch Bindings

transducer A Fast Sequence Transducer Implementation with PyTorch Bindings. The corresponding publication is Sequence Transduction with Recurrent Neur

A Structured Self-attentive Sentence Embedding

Structured Self-attentive sentence embeddings Implementation for the paper A Structured Self-Attentive Sentence Embedding, which was published in ICLR

Implements pytorch code for the Accelerated SGD algorithm.

AccSGD This is the code associated with Accelerated SGD algorithm used in the paper On the insufficiency of existing momentum schemes for Stochastic O

Tacotron 2 - PyTorch implementation with faster-than-realtime inference

Tacotron 2 (without wavenet) PyTorch implementation of Natural TTS Synthesis By Conditioning Wavenet On Mel Spectrogram Predictions. This implementati

PyTorch implementation of convolutional neural networks-based text-to-speech synthesis models

Deepvoice3_pytorch PyTorch implementation of convolutional networks-based text-to-speech synthesis models: arXiv:1710.07654: Deep Voice 3: Scaling Tex

PyTorch Implementation of "Non-Autoregressive Neural Machine Translation"

Non-Autoregressive Transformer Code release for Non-Autoregressive Neural Machine Translation by Jiatao Gu, James Bradbury, Caiming Xiong, Victor O.K.

PyTorch implementation of the NIPS-17 paper "Poincaré Embeddings for Learning Hierarchical Representations"

Poincaré Embeddings for Learning Hierarchical Representations PyTorch implementation of Poincaré Embeddings for Learning Hierarchical Representations

Wind Speed Prediction using LSTMs in PyTorch

Implementation of Deep-Forecast using PyTorch Deep Forecast: Deep Learning-based Spatio-Temporal Forecasting Adapted from original implementation Setu

pytorch implementation of Attention is all you need

A Pytorch Implementation of the Transformer: Attention Is All You Need Our implementation is largely based on Tensorflow implementation Requirements N

StarGAN - Official PyTorch Implementation (CVPR 2018)

StarGAN - Official PyTorch Implementation ***** New: StarGAN v2 is available at https://github.com/clovaai/stargan-v2 ***** This repository provides t

A Pytorch Implementation for Compact Bilinear Pooling.

CompactBilinearPooling-Pytorch A Pytorch Implementation for Compact Bilinear Pooling. Adapted from tensorflow_compact_bilinear_pooling Prerequisites I

Compact Bilinear Pooling for PyTorch

Compact Bilinear Pooling for PyTorch. This repository has a pure Python implementation of Compact Bilinear Pooling and Count Sketch for PyTorch. This

Skipgram Negative Sampling in PyTorch

PyTorch SGNS Word2Vec's SkipGramNegativeSampling in Python. Yet another but quite general negative sampling loss implemented in PyTorch. It can be use

PyTorch implementation of Tacotron speech synthesis model.

tacotron_pytorch PyTorch implementation of Tacotron speech synthesis model. Inspired from keithito/tacotron. Currently not as much good speech quality

Pytorch implementation of winner from VQA Chllange Workshop in CVPR'17

2017 VQA Challenge Winner (CVPR'17 Workshop) pytorch implementation of Tips and Tricks for Visual Question Answering: Learnings from the 2017 Challeng

Pytorch implementation of Distributed Proximal Policy Optimization: https://arxiv.org/abs/1707.02286

Pytorch-DPPO Pytorch implementation of Distributed Proximal Policy Optimization: https://arxiv.org/abs/1707.02286 Using PPO with clip loss (from https

WIT (Wikipedia-based Image Text) Dataset is a large multimodal multilingual dataset comprising 37M+ image-text sets with 11M+ unique images across 100+ languages.

WIT (Wikipedia-based Image Text) Dataset is a large multimodal multilingual dataset comprising 37M+ image-text sets with 11M+ unique images across 100+ languages.

The PASS dataset: pretrained models and how to get the data - PASS: Pictures without humAns for Self-Supervised Pretraining

The PASS dataset: pretrained models and how to get the data - PASS: Pictures without humAns for Self-Supervised Pretraining

Merlion: A Machine Learning Framework for Time Series Intelligence

Merlion is a Python library for time series intelligence. It provides an end-to-end machine learning framework that includes loading and transforming data, building and training models, post-processing model outputs, and evaluating model performance. I

PyTorch implementations of normalizing flow and its variants.

PyTorch implementations of normalizing flow and its variants.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Silero Models: pre-trained speech-to-text, text-to-speech models and benchmarks made embarrassingly simple

Silero Models: pre-trained speech-to-text, text-to-speech models and benchmarks made embarrassingly simple

这是一个deeplabv3-plus-pytorch的源码,可以用于训练自己的模型。

DeepLabv3+:Encoder-Decoder with Atrous Separable Convolution语义分割模型在Pytorch当中的实现 目录 性能情况 Performance 所需环境 Environment 注意事项 Attention 文件下载 Download 训练步骤

MetaDrive: Composing Diverse Scenarios for Generalizable Reinforcement Learning

MetaDrive: Composing Diverse Driving Scenarios for Generalizable RL [ Documentation | Demo Video ] MetaDrive is a driving simulator with the following

easyopt is a super simple yet super powerful optuna-based Hyperparameters Optimization Framework that requires no coding.

easyopt is a super simple yet super powerful optuna-based Hyperparameters Optimization Framework that requires no coding.

Labelling platform for text using distant supervision

With DataQA, you can label unstructured text documents using rule-based distant supervision.

Apply Graph Self-Supervised Learning methods to graph-level task(TUDataset, MolculeNet Datset)

Graphlevel-SSL Overview Apply Graph Self-Supervised Learning methods to graph-level task(TUDataset, MolculeNet Dataset). It is unified framework to co

![[ICCV-2021] An Empirical Study of the Collapsing Problem in Semi-Supervised 2D Human Pose Estimation](https://github.com/xierc/Semi_Human_Pose/raw/master/assets/posedual.png)

[ICCV-2021] An Empirical Study of the Collapsing Problem in Semi-Supervised 2D Human Pose Estimation

An Empirical Study of the Collapsing Problem in Semi-Supervised 2D Human Pose Estimation (ICCV 2021) Introduction This is an official pytorch implemen

Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models.

Tevatron Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models. The toolkit has a modularized

Dynamic Attentive Graph Learning for Image Restoration, ICCV2021 [PyTorch Code]

Dynamic Attentive Graph Learning for Image Restoration This repository is for GATIR introduced in the following paper: Chong Mou, Jian Zhang, Zhuoyuan

High level network definitions with pre-trained weights in TensorFlow

TensorNets High level network definitions with pre-trained weights in TensorFlow (tested with 2.1.0 = TF = 1.4.0). Guiding principles Applicability.

TensorFlowOnSpark brings TensorFlow programs to Apache Spark clusters.

TensorFlowOnSpark TensorFlowOnSpark brings scalable deep learning to Apache Hadoop and Apache Spark clusters. By combining salient features from the T

Tensorforce: a TensorFlow library for applied reinforcement learning

Tensorforce: a TensorFlow library for applied reinforcement learning Introduction Tensorforce is an open-source deep reinforcement learning framework,

Deep Learning and Reinforcement Learning Library for Scientists and Engineers 🔥

TensorLayer is a novel TensorFlow-based deep learning and reinforcement learning library designed for researchers and engineers. It provides an extens

Tensorflow implementation of Human-Level Control through Deep Reinforcement Learning

Human-Level Control through Deep Reinforcement Learning Tensorflow implementation of Human-Level Control through Deep Reinforcement Learning. This imp

Train a deep learning net with OpenStreetMap features and satellite imagery.

DeepOSM Classify roads and features in satellite imagery, by training neural networks with OpenStreetMap (OSM) data. DeepOSM can: Download a chunk of

A best practice for tensorflow project template architecture.

A best practice for tensorflow project template architecture.

SenseNet is a sensorimotor and touch simulator for deep reinforcement learning research

SenseNet is a sensorimotor and touch simulator for deep reinforcement learning research

Sequence-to-Sequence learning using PyTorch

Seq2Seq in PyTorch This is a complete suite for training sequence-to-sequence models in PyTorch. It consists of several models and code to both train

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

Deal or No Deal? End-to-End Learning for Negotiation Dialogues

Introduction This is a PyTorch implementation of the following research papers: (1) Hierarchical Text Generation and Planning for Strategic Dialogue (

Intent parsing and slot filling in PyTorch with seq2seq + attention

PyTorch Seq2Seq Intent Parsing Reframing intent parsing as a human - machine translation task. Work in progress successor to torch-seq2seq-intent-pars

pytorch implementation for PointNet

PointNet.pytorch This repo is implementation for PointNet in pytorch. The model is in pointnet/model.py. It is teste

DeepLab resnet v2 model in pytorch

pytorch-deeplab-resnet DeepLab resnet v2 model implementation in pytorch. The architecture of deepLab-ResNet has been replicated exactly as it is from

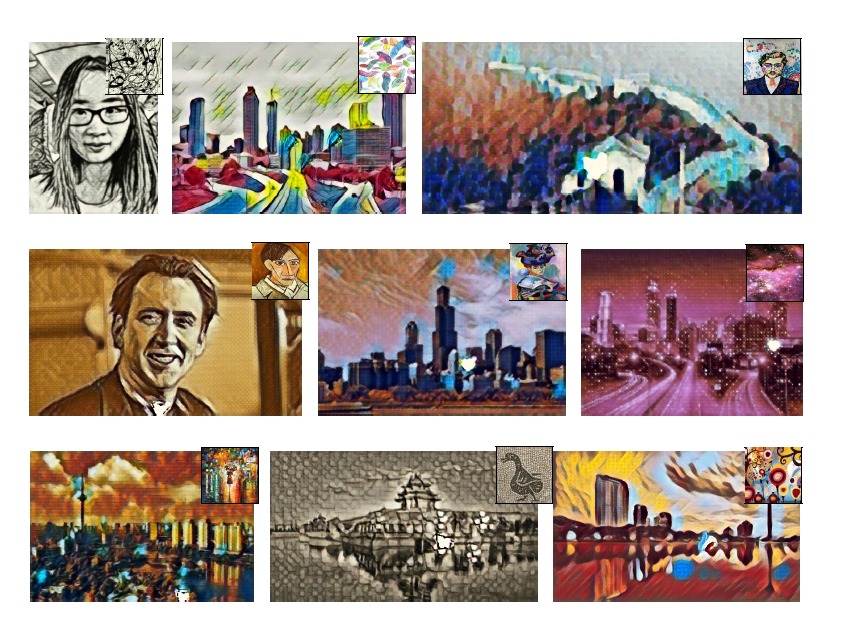

Neural Style and MSG-Net

PyTorch-Style-Transfer This repo provides PyTorch Implementation of MSG-Net (ours) and Neural Style (Gatys et al. CVPR 2016), which has been included

Pytorch implementation of Value Iteration Networks (NIPS 2016 best paper)

VIN: Value Iteration Networks A quick thank you A few others have released amazing related work which helped inspire and improve my own implementation

PyTorch implementation of Advantage async actor-critic Algorithms (A3C) in PyTorch

Advantage async actor-critic Algorithms (A3C) in PyTorch @inproceedings{mnih2016asynchronous, title={Asynchronous methods for deep reinforcement lea

Image-to-Image Translation in PyTorch

CycleGAN and pix2pix in PyTorch New: Please check out contrastive-unpaired-translation (CUT), our new unpaired image-to-image translation model that e

LeafSnap replicated using deep neural networks to test accuracy compared to traditional computer vision methods.

Deep-Leafsnap Convolutional Neural Networks have become largely popular in image tasks such as image classification recently largely due to to Krizhev

Deep Reinforcement Learning with pytorch & visdom

Deep Reinforcement Learning with pytorch & visdom Sample testings of trained agents (DQN on Breakout, A3C on Pong, DoubleDQN on CartPole, continuous A

Reproduces ResNet-V3 with pytorch

ResNeXt.pytorch Reproduces ResNet-V3 (Aggregated Residual Transformations for Deep Neural Networks) with pytorch. Tried on pytorch 1.6 Trains on Cifar

Tree LSTM implementation in PyTorch

Tree-Structured Long Short-Term Memory Networks This is a PyTorch implementation of Tree-LSTM as described in the paper Improved Semantic Representati

BEGAN in PyTorch

BEGAN in PyTorch This project is still in progress. If you are looking for the working code, use BEGAN-tensorflow. Requirements Python 2.7 Pillow tqdm

PyTorch implementation of Deformable Convolution

PyTorch implementation of Deformable Convolution !!!Warning: There is some issues in this implementation and this repo is not maintained any more, ple

A PyTorch implementation of Multi-digit Number Recognition from Street View Imagery using Deep Convolutional Neural Networks

SVHNClassifier-PyTorch A PyTorch implementation of Multi-digit Number Recognition from Street View Imagery using Deep Convolutional Neural Networks If

Improving Convolutional Networks via Attention Transfer (ICLR 2017)

Attention Transfer PyTorch code for "Paying More Attention to Attention: Improving the Performance of Convolutional Neural Networks via Attention Tran

YOLOv2 in PyTorch

YOLOv2 in PyTorch NOTE: This project is no longer maintained and may not compatible with the newest pytorch (after 0.4.0). This is a PyTorch implement

PyTorch implementation of Value Iteration Networks (VIN): Clean, Simple and Modular. Visualization in Visdom.

VIN: Value Iteration Networks This is an implementation of Value Iteration Networks (VIN) in PyTorch to reproduce the results.(TensorFlow version) Key