7396 Repositories

Python Deep-Reinforcement-Learning-Algorithms-with-PyTorch Libraries

Using approximate bayesian posteriors in deep nets for active learning

Bayesian Active Learning (BaaL) BaaL is an active learning library developed at ElementAI. This repository contains techniques and reusable components

Functional tensors for probabilistic programming

Funsor Funsor is a tensor-like library for functions and distributions. See Functional tensors for probabilistic programming for a system description.

Python Library for learning (Structure and Parameter) and inference (Statistical and Causal) in Bayesian Networks.

pgmpy pgmpy is a python library for working with Probabilistic Graphical Models. Documentation and list of algorithms supported is at our official sit

Gaussian processes in TensorFlow

Website | Documentation (release) | Documentation (develop) | Glossary Table of Contents What does GPflow do? Installation Getting Started with GPflow

Fast, flexible and easy to use probabilistic modelling in Python.

Please consider citing the JMLR-MLOSS Manuscript if you've used pomegranate in your academic work! pomegranate is a package for building probabilistic

A highly efficient and modular implementation of Gaussian Processes in PyTorch

GPyTorch GPyTorch is a Gaussian process library implemented using PyTorch. GPyTorch is designed for creating scalable, flexible, and modular Gaussian

Deep universal probabilistic programming with Python and PyTorch

Getting Started | Documentation | Community | Contributing Pyro is a flexible, scalable deep probabilistic programming library built on PyTorch. Notab

Probabilistic reasoning and statistical analysis in TensorFlow

TensorFlow Probability TensorFlow Probability is a library for probabilistic reasoning and statistical analysis in TensorFlow. As part of the TensorFl

machine learning with logical rules in Python

skope-rules Skope-rules is a Python machine learning module built on top of scikit-learn and distributed under the 3-Clause BSD license. Skope-rules a

A scikit-learn based module for multi-label et. al. classification

scikit-multilearn scikit-multilearn is a Python module capable of performing multi-label learning tasks. It is built on-top of various scientific Pyth

Scikit-learn compatible estimation of general graphical models

skggm : Gaussian graphical models using the scikit-learn API In the last decade, learning networks that encode conditional independence relationships

A Python library for dynamic classifier and ensemble selection

DESlib DESlib is an easy-to-use ensemble learning library focused on the implementation of the state-of-the-art techniques for dynamic classifier and

Extra blocks for scikit-learn pipelines.

scikit-lego We love scikit learn but very often we find ourselves writing custom transformers, metrics and models. The goal of this project is to atte

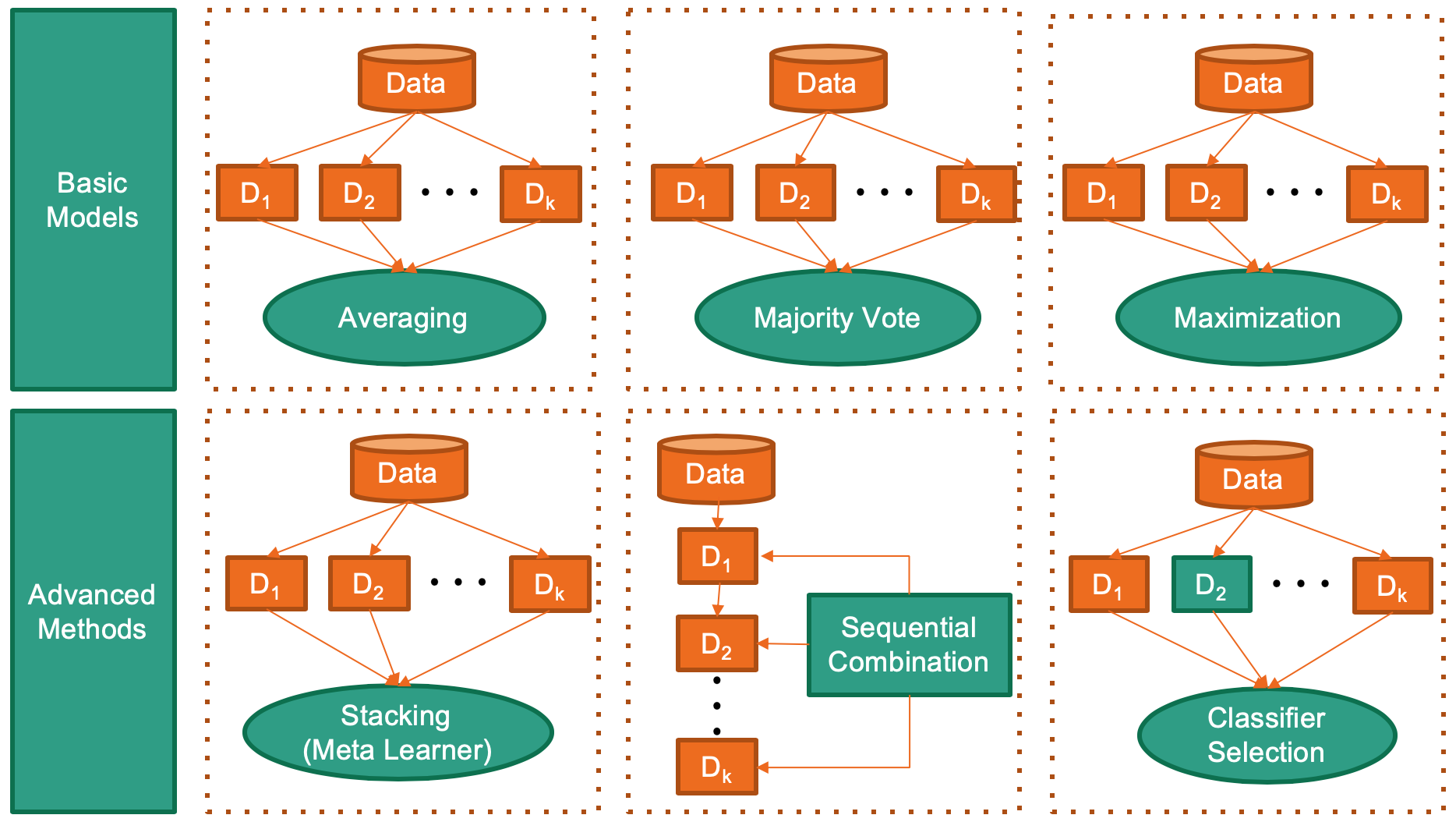

(AAAI' 20) A Python Toolbox for Machine Learning Model Combination

combo: A Python Toolbox for Machine Learning Model Combination Deployment & Documentation & Stats Build Status & Coverage & Maintainability & License

Multivariate imputation and matrix completion algorithms implemented in Python

A variety of matrix completion and imputation algorithms implemented in Python 3.6. To install: pip install fancyimpute Do not use conda. We don't sup

Genetic Algorithm, Particle Swarm Optimization, Simulated Annealing, Ant Colony Optimization Algorithm,Immune Algorithm, Artificial Fish Swarm Algorithm, Differential Evolution and TSP(Traveling salesman)

scikit-opt Swarm Intelligence in Python (Genetic Algorithm, Particle Swarm Optimization, Simulated Annealing, Ant Colony Algorithm, Immune Algorithm,A

Large-scale linear classification, regression and ranking in Python

lightning lightning is a library for large-scale linear classification, regression and ranking in Python. Highlights: follows the scikit-learn API con

A library of extension and helper modules for Python's data analysis and machine learning libraries.

Mlxtend (machine learning extensions) is a Python library of useful tools for the day-to-day data science tasks. Sebastian Raschka 2014-2021 Links Doc

A Python Package to Tackle the Curse of Imbalanced Datasets in Machine Learning

imbalanced-learn imbalanced-learn is a python package offering a number of re-sampling techniques commonly used in datasets showing strong between-cla

A simplified framework and utilities for PyTorch

Here is Poutyne. Poutyne is a simplified framework for PyTorch and handles much of the boilerplating code needed to train neural networks. Use Poutyne

A tiny scalar-valued autograd engine and a neural net library on top of it with PyTorch-like API

micrograd A tiny Autograd engine (with a bite! :)). Implements backpropagation (reverse-mode autodiff) over a dynamically built DAG and a small neural

Tez is a super-simple and lightweight Trainer for PyTorch. It also comes with many utils that you can use to tackle over 90% of deep learning projects in PyTorch.

Tez: a simple pytorch trainer NOTE: Currently, we are not accepting any pull requests! All PRs will be closed. If you want a feature or something does

High-level batteries-included neural network training library for Pytorch

Pywick High-Level Training framework for Pytorch Pywick is a high-level Pytorch training framework that aims to get you up and running quickly with st

Differentiable SDE solvers with GPU support and efficient sensitivity analysis.

PyTorch Implementation of Differentiable SDE Solvers This library provides stochastic differential equation (SDE) solvers with GPU support and efficie

Fast, general, and tested differentiable structured prediction in PyTorch

Torch-Struct: Structured Prediction Library A library of tested, GPU implementations of core structured prediction algorithms for deep learning applic

Implementation of LambdaNetworks, a new approach to image recognition that reaches SOTA with less compute

Lambda Networks - Pytorch Implementation of λ Networks, a new approach to image recognition that reaches SOTA on ImageNet. The new method utilizes λ l

You like pytorch? You like micrograd? You love tinygrad! ❤️

For something in between a pytorch and a karpathy/micrograd This may not be the best deep learning framework, but it is a deep learning framework. Due

The goal of this library is to generate more helpful exception messages for numpy/pytorch matrix algebra expressions.

Tensor Sensor See article Clarifying exceptions and visualizing tensor operations in deep learning code. One of the biggest challenges when writing co

An implementation of Performer, a linear attention-based transformer, in Pytorch

Performer - Pytorch An implementation of Performer, a linear attention-based transformer variant with a Fast Attention Via positive Orthogonal Random

PyTorch extensions for fast R&D prototyping and Kaggle farming

Pytorch-toolbelt A pytorch-toolbelt is a Python library with a set of bells and whistles for PyTorch for fast R&D prototyping and Kaggle farming: What

PyTorch implementation of TabNet paper : https://arxiv.org/pdf/1908.07442.pdf

README TabNet : Attentive Interpretable Tabular Learning This is a pyTorch implementation of Tabnet (Arik, S. O., & Pfister, T. (2019). TabNet: Attent

higher is a pytorch library allowing users to obtain higher order gradients over losses spanning training loops rather than individual training steps.

higher is a library providing support for higher-order optimization, e.g. through unrolled first-order optimization loops, of "meta" aspects of these

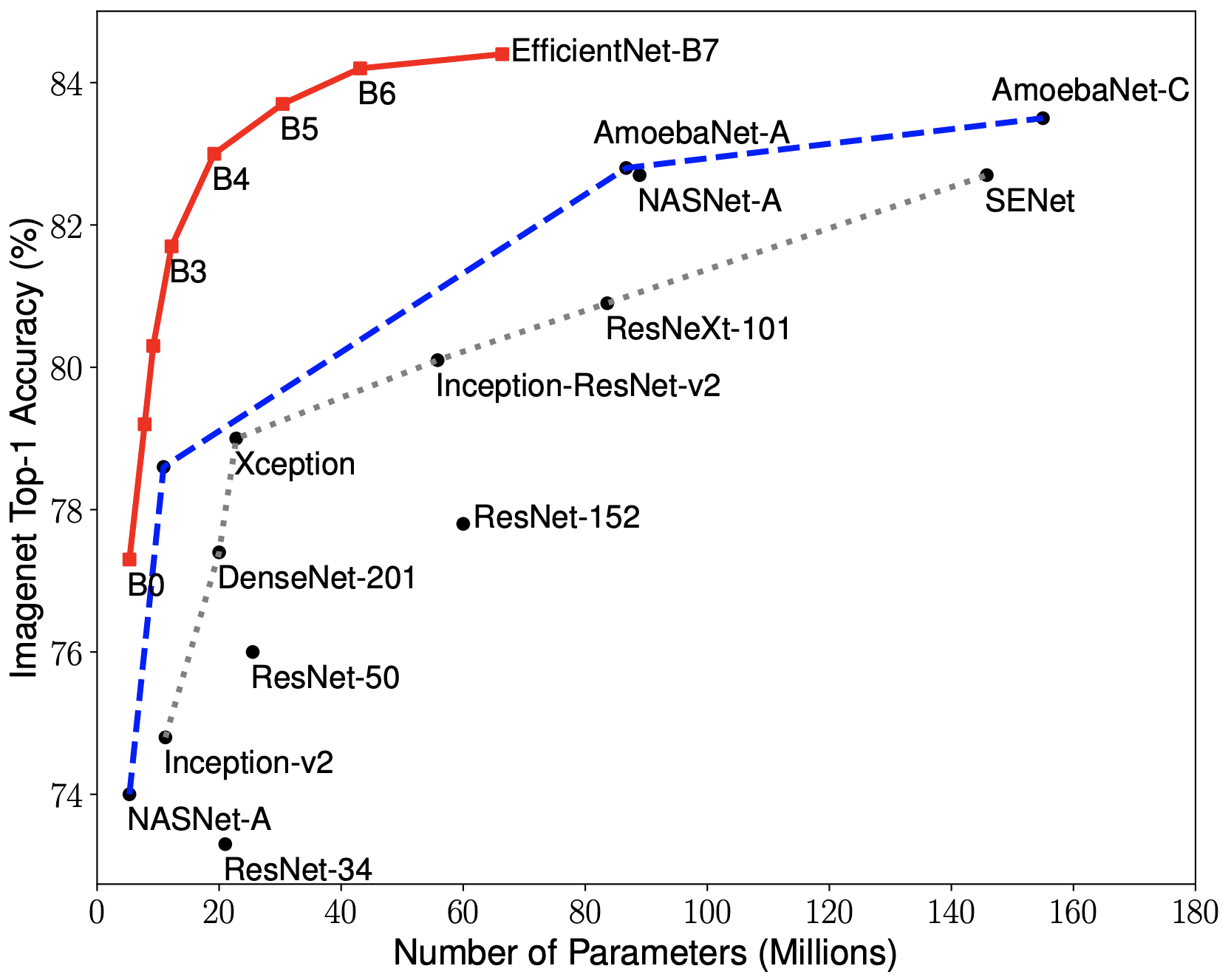

Pretrained EfficientNet, EfficientNet-Lite, MixNet, MobileNetV3 / V2, MNASNet A1 and B1, FBNet, Single-Path NAS

(Generic) EfficientNets for PyTorch A 'generic' implementation of EfficientNet, MixNet, MobileNetV3, etc. that covers most of the compute/parameter ef

Reformer, the efficient Transformer, in Pytorch

Reformer, the Efficient Transformer, in Pytorch This is a Pytorch implementation of Reformer https://openreview.net/pdf?id=rkgNKkHtvB It includes LSH

PyTorch Extension Library of Optimized Autograd Sparse Matrix Operations

PyTorch Sparse This package consists of a small extension library of optimized sparse matrix operations with autograd support. This package currently

PyTorch Extension Library of Optimized Scatter Operations

PyTorch Scatter Documentation This package consists of a small extension library of highly optimized sparse update (scatter and segment) operations fo

A collection of extensions and data-loaders for few-shot learning & meta-learning in PyTorch

Torchmeta A collection of extensions and data-loaders for few-shot learning & meta-learning in PyTorch. Torchmeta contains popular meta-learning bench

Training RNNs as Fast as CNNs (https://arxiv.org/abs/1709.02755)

News SRU++, a new SRU variant, is released. [tech report] [blog] The experimental code and SRU++ implementation are available on the dev branch which

The easiest way to use deep metric learning in your application. Modular, flexible, and extensible. Written in PyTorch.

News March 3: v0.9.97 has various bug fixes and improvements: Bug fixes for NTXentLoss Efficiency improvement for AccuracyCalculator, by using torch i

A PyTorch implementation of EfficientNet

EfficientNet PyTorch Quickstart Install with pip install efficientnet_pytorch and load a pretrained EfficientNet with: from efficientnet_pytorch impor

torch-optimizer -- collection of optimizers for Pytorch

torch-optimizer torch-optimizer -- collection of optimizers for PyTorch compatible with optim module. Simple example import torch_optimizer as optim

Model summary in PyTorch similar to `model.summary()` in Keras

Keras style model.summary() in PyTorch Keras has a neat API to view the visualization of the model which is very helpful while debugging your network.

Pretrained ConvNets for pytorch: NASNet, ResNeXt, ResNet, InceptionV4, InceptionResnetV2, Xception, DPN, etc.

Pretrained models for Pytorch (Work in progress) The goal of this repo is: to help to reproduce research papers results (transfer learning setups for

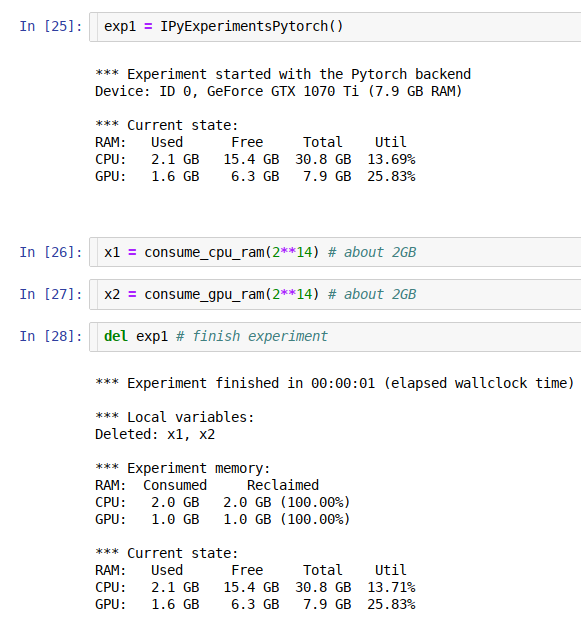

jupyter/ipython experiment containers for GPU and general RAM re-use

ipyexperiments jupyter/ipython experiment containers and utils for profiling and reclaiming GPU and general RAM, and detecting memory leaks. About Thi

Library for faster pinned CPU - GPU transfer in Pytorch

SpeedTorch Faster pinned CPU tensor - GPU Pytorch variabe transfer and GPU tensor - GPU Pytorch variable transfer, in certain cases. Update 9-29-1

General purpose GPU compute framework for cross vendor graphics cards (AMD, Qualcomm, NVIDIA & friends). Blazing fast, mobile-enabled, asynchronous and optimized for advanced GPU data processing usecases.

Vulkan Kompute The general purpose GPU compute framework for cross vendor graphics cards (AMD, Qualcomm, NVIDIA & friends). Blazing fast, mobile-enabl

BlazingSQL is a lightweight, GPU accelerated, SQL engine for Python. Built on RAPIDS cuDF.

A lightweight, GPU accelerated, SQL engine built on the RAPIDS.ai ecosystem. Get Started on app.blazingsql.com Getting Started | Documentation | Examp

cuML - RAPIDS Machine Learning Library

cuML - GPU Machine Learning Algorithms cuML is a suite of libraries that implement machine learning algorithms and mathematical primitives functions t

A GPU-accelerated library containing highly optimized building blocks and an execution engine for data processing to accelerate deep learning training and inference applications.

NVIDIA DALI The NVIDIA Data Loading Library (DALI) is a library for data loading and pre-processing to accelerate deep learning applications. It provi

cuDF - GPU DataFrame Library

cuDF - GPU DataFrames NOTE: For the latest stable README.md ensure you are on the main branch. Resources cuDF Reference Documentation: Python API refe

A PyTorch Extension: Tools for easy mixed precision and distributed training in Pytorch

Introduction This repository holds NVIDIA-maintained utilities to streamline mixed precision and distributed training in Pytorch. Some of the code her

🎛 Distributed machine learning made simple.

🎛 lazycluster Distributed machine learning made simple. Use your preferred distributed ML framework like a lazy engineer. Getting Started • Highlight

Distributed Evolutionary Algorithms in Python

DEAP DEAP is a novel evolutionary computation framework for rapid prototyping and testing of ideas. It seeks to make algorithms explicit and data stru

Distributed scikit-learn meta-estimators in PySpark

sk-dist: Distributed scikit-learn meta-estimators in PySpark What is it? sk-dist is a Python package for machine learning built on top of scikit-learn

Decentralized deep learning in PyTorch. Built to train models on thousands of volunteers across the world.

Hivemind: decentralized deep learning in PyTorch Hivemind is a PyTorch library to train large neural networks across the Internet. Its intended usage

Distributed Computing for AI Made Simple

Project Home Blog Documents Paper Media Coverage Join Fiber users email list [email protected] Fiber Distributed Computing for AI Made Simp

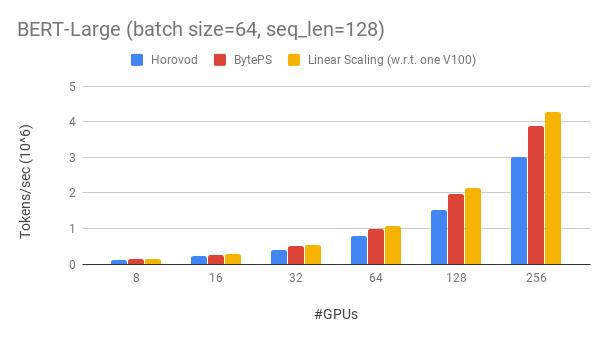

A high performance and generic framework for distributed DNN training

BytePS BytePS is a high performance and general distributed training framework. It supports TensorFlow, Keras, PyTorch, and MXNet, and can run on eith

a distributed deep learning platform

Apache SINGA Distributed deep learning system http://singa.apache.org Quick Start Installation Examples Issues JIRA tickets Code Analysis: Mailing Lis

PyTorch extensions for high performance and large scale training.

Description FairScale is a PyTorch extension library for high performance and large scale training on one or multiple machines/nodes. This library ext

Distributed Tensorflow, Keras and PyTorch on Apache Spark/Flink & Ray

A unified Data Analytics and AI platform for distributed TensorFlow, Keras and PyTorch on Apache Spark/Flink & Ray What is Analytics Zoo? Analytics Zo

Microsoft Machine Learning for Apache Spark

Microsoft Machine Learning for Apache Spark MMLSpark is an ecosystem of tools aimed towards expanding the distributed computing framework Apache Spark

TensorFlowOnSpark brings TensorFlow programs to Apache Spark clusters.

TensorFlowOnSpark TensorFlowOnSpark brings scalable deep learning to Apache Hadoop and Apache Spark clusters. By combining salient features from the T

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective. 10x Larger Models 10x Faster Trainin

Petastorm library enables single machine or distributed training and evaluation of deep learning models from datasets in Apache Parquet format. It supports ML frameworks such as Tensorflow, Pytorch, and PySpark and can be used from pure Python code.

Petastorm Contents Petastorm Installation Generating a dataset Plain Python API Tensorflow API Pytorch API Spark Dataset Converter API Analyzing petas

Distributed Deep learning with Keras & Spark

Elephas: Distributed Deep Learning with Keras & Spark Elephas is an extension of Keras, which allows you to run distributed deep learning models at sc

BigDL: Distributed Deep Learning Framework for Apache Spark

BigDL: Distributed Deep Learning on Apache Spark What is BigDL? BigDL is a distributed deep learning library for Apache Spark; with BigDL, users can w

Distributed training framework for TensorFlow, Keras, PyTorch, and Apache MXNet.

Horovod Horovod is a distributed deep learning training framework for TensorFlow, Keras, PyTorch, and Apache MXNet. The goal of Horovod is to make dis

An open source framework that provides a simple, universal API for building distributed applications. Ray is packaged with RLlib, a scalable reinforcement learning library, and Tune, a scalable hyperparameter tuning library.

Ray provides a simple, universal API for building distributed applications. Ray is packaged with the following libraries for accelerating machine lear

Python module for machine learning time series:

seglearn Seglearn is a python package for machine learning time series or sequences. It provides an integrated pipeline for segmentation, feature extr

Time series forecasting with PyTorch

Our article on Towards Data Science introduces the package and provides background information. Pytorch Forecasting aims to ease state-of-the-art time

A Python package for time series classification

pyts: a Python package for time series classification pyts is a Python package for time series classification. It aims to make time series classificat

A python library for easy manipulation and forecasting of time series.

Time Series Made Easy in Python darts is a python library for easy manipulation and forecasting of time series. It contains a variety of models, from

Probabilistic time series modeling in Python

GluonTS - Probabilistic Time Series Modeling in Python GluonTS is a Python toolkit for probabilistic time series modeling, built around Apache MXNet (

A machine learning toolkit dedicated to time-series data

tslearn The machine learning toolkit for time series analysis in Python Section Description Installation Installing the dependencies and tslearn Getti

A statistical library designed to fill the void in Python's time series analysis capabilities, including the equivalent of R's auto.arima function.

pmdarima Pmdarima (originally pyramid-arima, for the anagram of 'py' + 'arima') is a statistical library designed to fill the void in Python's time se

A unified framework for machine learning with time series

Welcome to sktime A unified framework for machine learning with time series We provide specialized time series algorithms and scikit-learn compatible

Find big moving stocks before they move using machine learning and anomaly detection

Surpriver - Find High Moving Stocks before they Move Find high moving stocks before they move using anomaly detection and machine learning. Surpriver

:mag_right: :chart_with_upwards_trend: :snake: :moneybag: Backtest trading strategies in Python.

Backtesting.py Backtest trading strategies with Python. Project website Documentation the project if you use it. Installation $ pip install backtestin

Common financial technical indicators implemented in Pandas.

FinTA (Financial Technical Analysis) Common financial technical indicators implemented in Pandas. This is work in progress, bugs are expected and resu

Qlib is an AI-oriented quantitative investment platform, which aims to realize the potential, empower the research, and create the value of AI technologies in quantitative investment. With Qlib, you can easily try your ideas to create better Quant investment strategies.

Qlib is an AI-oriented quantitative investment platform, which aims to realize the potential, empower the research, and create the value of AI technol

An open source reinforcement learning framework for training, evaluating, and deploying robust trading agents.

TensorTrade: Trade Efficiently with Reinforcement Learning TensorTrade is still in Beta, meaning it should be used very cautiously if used in producti

Objax Apache-2Objax (🥉19 · ⭐ 580) - Objax is a machine learning framework that provides an Object.. Apache-2 jax

Objax Tutorials | Install | Documentation | Philosophy This is not an officially supported Google product. Objax is an open source machine learning fr

An easier way to build neural search on the cloud

An easier way to build neural search on the cloud Jina is a deep learning-powered search framework for building cross-/multi-modal search systems (e.g

PyTorch reimplementation of minimal-hand (CVPR2020)

Minimal Hand Pytorch Unofficial PyTorch reimplementation of minimal-hand (CVPR2020). you can also find in youtube or bilibili bare hand youtube or bil

Code for CoMatch: Semi-supervised Learning with Contrastive Graph Regularization

CoMatch: Semi-supervised Learning with Contrastive Graph Regularization (Salesforce Research) This is a PyTorch implementation of the CoMatch paper [B

PyTorch implementation of "Contrast to Divide: self-supervised pre-training for learning with noisy labels"

Contrast to Divide: self-supervised pre-training for learning with noisy labels This is an official implementation of "Contrast to Divide: self-superv

Source code, datasets and trained models for the paper Learning Advanced Mathematical Computations from Examples (ICLR 2021), by François Charton, Amaury Hayat (ENPC-Rutgers) and Guillaume Lample

Maths from examples - Learning advanced mathematical computations from examples This is the source code and data sets relevant to the paper Learning a

![[ICML 2020] Prediction-Guided Multi-Objective Reinforcement Learning for Continuous Robot Control](https://github.com/mit-gfx/PGMORL/raw/master/images/teaser.gif)

[ICML 2020] Prediction-Guided Multi-Objective Reinforcement Learning for Continuous Robot Control

PG-MORL This repository contains the implementation for the paper Prediction-Guided Multi-Objective Reinforcement Learning for Continuous Robot Contro

Open-L2O: A Comprehensive and Reproducible Benchmark for Learning to Optimize Algorithms

Open-L2O This repository establishes the first comprehensive benchmark efforts of existing learning to optimize (L2O) approaches on a number of proble

Deep Implicit Moving Least-Squares Functions for 3D Reconstruction

DeepMLS: Deep Implicit Moving Least-Squares Functions for 3D Reconstruction This repository contains the implementation of the paper: Deep Implicit Mo

A pytorch reprelication of the model-based reinforcement learning algorithm MBPO

Overview This is a re-implementation of the model-based RL algorithm MBPO in pytorch as described in the following paper: When to Trust Your Model: Mo

An efficient and effective learning to rank algorithm by mining information across ranking candidates. This repository contains the tensorflow implementation of SERank model. The code is developed based on TF-Ranking.

SERank An efficient and effective learning to rank algorithm by mining information across ranking candidates. This repository contains the tensorflow

Optimising chemical reactions using machine learning

Summit Summit is a set of tools for optimising chemical processes. We’ve started by targeting reactions. What is Summit? Currently, reaction optimisat

An implementation of Geoffrey Hinton's paper "How to represent part-whole hierarchies in a neural network" in Pytorch.

GLOM An implementation of Geoffrey Hinton's paper "How to represent part-whole hierarchies in a neural network" for MNIST Dataset. To understand this

Code for our paper at ECCV 2020: Post-Training Piecewise Linear Quantization for Deep Neural Networks

PWLQ Updates 2020/07/16 - We are working on getting permission from our institution to release our source code. We will release it once we are granted

Source code of "Hold me tight! Influence of discriminative features on deep network boundaries"

Hold me tight! Influence of discriminative features on deep network boundaries This is the source code to reproduce the experiments of the NeurIPS 202

Implementation of the 😇 Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones

HaloNet - Pytorch Implementation of the Attention layer from the paper, Scaling Local Self-Attention For Parameter Efficient Visual Backbones. This re

Spatial Intention Maps for Multi-Agent Mobile Manipulation (ICRA 2021)

spatial-intention-maps This code release accompanies the following paper: Spatial Intention Maps for Multi-Agent Mobile Manipulation Jimmy Wu, Xingyua

Understanding and Improving Encoder Layer Fusion in Sequence-to-Sequence Learning (ICLR 2021)

Understanding and Improving Encoder Layer Fusion in Sequence-to-Sequence Learning (ICLR 2021) Citation Please cite as: @inproceedings{liu2020understan

This is an implementation of PIFuhd based on Pytorch

Open-PIFuhd This is a unofficial implementation of PIFuhd PIFuHD: Multi-Level Pixel-Aligned Implicit Function forHigh-Resolution 3D Human Digitization