819 Repositories

Python FG-transformer-TTS Libraries

Reproduction of Vision Transformer in Tensorflow2. Train from scratch and Finetune.

Vision Transformer(ViT) in Tensorflow2 Tensorflow2 implementation of the Vision Transformer(ViT). This repository is for An image is worth 16x16 words

Official pytorch code for SSAT: A Symmetric Semantic-Aware Transformer Network for Makeup Transfer and Removal

SSAT: A Symmetric Semantic-Aware Transformer Network for Makeup Transfer and Removal This is the official pytorch code for SSAT: A Symmetric Semantic-

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

Official PyTorch implementation of our AAAI22 paper: TransMEF: A Transformer-Based Multi-Exposure Image Fusion Framework via Self-Supervised Multi-Task Learning. Code will be available soon.

Official-PyTorch-Implementation-of-TransMEF Official PyTorch implementation of our AAAI22 paper: TransMEF: A Transformer-Based Multi-Exposure Image Fu

Official PyTorch implementation for paper "Efficient Two-Stage Detection of Human–Object Interactions with a Novel Unary–Pairwise Transformer"

UPT: Unary–Pairwise Transformers This repository contains the official PyTorch implementation for the paper Frederic Z. Zhang, Dylan Campbell and Step

Implementation of Google Brain's WaveGrad high-fidelity vocoder

WaveGrad Implementation (PyTorch) of Google Brain's high-fidelity WaveGrad vocoder (paper). First implementation on GitHub with high-quality generatio

Neural HMMs are all you need (for high-quality attention-free TTS)

Neural HMMs are all you need (for high-quality attention-free TTS) Shivam Mehta, Éva Székely, Jonas Beskow, and Gustav Eje Henter This is the official

Code for the ACL 2021 paper "Structural Guidance for Transformer Language Models"

Structural Guidance for Transformer Language Models This repository accompanies the paper, Structural Guidance for Transformer Language Models, publis

SwinTrack: A Simple and Strong Baseline for Transformer Tracking

SwinTrack This is the official repo for SwinTrack. A Simple and Strong Baseline Prerequisites Environment conda (recommended) conda create -y -n SwinT

Code release for "Masked-attention Mask Transformer for Universal Image Segmentation"

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexander Kirillov, Ro

The official repository for "Score Transformer: Generating Musical Scores from Note-level Representation" (MMAsia '21)

Score Transformer This is the official repository for "Score Transformer": Score Transformer: Generating Musical Scores from Note-level Representation

Code for A Volumetric Transformer for Accurate 3D Tumor Segmentation

VT-UNet This repo contains the supported pytorch code and configuration files to reproduce 3D medical image segmentaion results of VT-UNet. Environmen

Implementation of the paper 'Sentence Bottleneck Autoencoders from Transformer Language Models'

Introduction This repository contains the code for the paper Sentence Bottleneck Autoencoders from Transformer Language Models by Ivan Montero, Nikola

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network)

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network) BERTAC is a framework that combines a

Code for the paper "Are Sixteen Heads Really Better than One?"

Are Sixteen Heads Really Better than One? This repository contains code to reproduce the experiments in our paper Are Sixteen Heads Really Better than

This is a repository with the code for the ACL 2019 paper

The Story of Heads This is the official repo for the following papers: (ACL 2019) Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy

Norm-based Analysis of Transformer

Norm-based Analysis of Transformer Implementations for 2 papers introducing to analyze Transformers using vector norms: Kobayashi+'20 Attention is Not

Understanding the Difficulty of Training Transformers

Admin Understanding the Difficulty of Training Transformers Guided by our analyses, we propose Adaptive Model Initialization (Admin), which successful

Official Pytorch Implementation of Length-Adaptive Transformer (ACL 2021)

Length-Adaptive Transformer This is the official Pytorch implementation of Length-Adaptive Transformer. For detailed information about the method, ple

Codes for our paper The Stem Cell Hypothesis: Dilemma behind Multi-Task Learning with Transformer Encoders published to EMNLP 2021.

The Stem Cell Hypothesis Codes for our paper The Stem Cell Hypothesis: Dilemma behind Multi-Task Learning with Transformer Encoders published to EMNLP

🥇Samsung AI Challenge 2021 1등 솔루션입니다🥇

MoT - Molecular Transformer Large-scale Pretraining for Molecular Property Prediction Samsung AI Challenge for Scientific Discovery This repository is

A unified 3D Transformer Pipeline for visual synthesis

Overview This is the official repo for the paper: NÜWA: Visual Synthesis Pre-training for Neural visUal World creAtion. NÜWA is a unified multimodal p

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification [pdf] The official repository for Self-Supervised Pre-Training for Transfo

Implementation of Vaswani, Ashish, et al. "Attention is all you need."

Attention Is All You Need Paper Implementation This is my from-scratch implementation of the original transformer architecture from the following pape

CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer

CycleTransGAN-EVC CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer Demo emotion CycleTransGAN CycleTransGAN Cycle

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

Representing Long-Range Context for Graph Neural Networks with Global Attention

Graph Augmentation Graph augmentation/self-supervision/etc. Algorithms gcn gcn+virtual node gin gin+virtual node PNA GraphTrans Augmentation methods N

Pytorch implementation of Integrating Tree Path in Transformer for Code Representation

This is an official Pytorch implementation of the approaches proposed in: Han Peng, Ge Li, Wenhan Wang, Yunfei Zhao, Zhi Jin “Integrating Tree Path in

This is a collection of our NAS and Vision Transformer work.

This is a collection of our NAS and Vision Transformer work.

Swin-Transformer is basically a hierarchical Transformer whose representation is computed with shifted windows.

Swin-Transformer Swin-Transformer is basically a hierarchical Transformer whose representation is computed with shifted windows. For more details, ple

Code for A Volumetric Transformer for Accurate 3D Tumor Segmentation

VT-UNet This repo contains the supported pytorch code and configuration files to reproduce 3D medical image segmentaion results of VT-UNet. Environmen

The official implementation for "FQ-ViT: Fully Quantized Vision Transformer without Retraining".

FQ-ViT [arXiv] This repo contains the official implementation of "FQ-ViT: Fully Quantized Vision Transformer without Retraining". Table of Contents In

This is a collection of our NAS and Vision Transformer work.

AutoML - Neural Architecture Search This is a collection of our AutoML-NAS work iRPE (NEW): Rethinking and Improving Relative Position Encoding for Vi

Haystack is an open source NLP framework that leverages Transformer models.

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

SwinIR: Image Restoration Using Swin Transformer

SwinIR: Image Restoration Using Swin Transformer This repository is the official PyTorch implementation of SwinIR: Image Restoration Using Shifted Win

Text-to-Speech for Belarusian language

title emoji colorFrom colorTo sdk app_file pinned Belarusian TTS 🐸 green green gradio app.py false Belarusian TTS 📢 🤖 Belarusian TTS (text-to-speec

Pytorch library for end-to-end transformer models training and serving

Pytorch library for end-to-end transformer models training and serving

Official Code for Cross-Modality Fusion Transformer for Multispectral Object Detection.

Multispectral-Object-Detection Intro Official Code for Cross-Modality Fusion Transformer for Multispectral Object Detection. Multispectral Object Dete

Code of TVT: Transferable Vision Transformer for Unsupervised Domain Adaptation

TVT Code of TVT: Transferable Vision Transformer for Unsupervised Domain Adaptation Datasets: Digit: MNIST, SVHN, USPS Object: Office, Office-Home, Vi

A python gui program to generate reddit text to speech videos from the id of any post.

Reddit text to speech generator A python gui program to generate reddit text to speech videos from the id of any post. Current functionality Generate

This repository contains the official implementation code of the paper Transformer-based Feature Reconstruction Network for Robust Multimodal Sentiment Analysis

This repository contains the official implementation code of the paper Transformer-based Feature Reconstruction Network for Robust Multimodal Sentiment Analysis, accepted at ACMMM 2021.

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification [pdf] The official repository for Self-Supervised Pre-Training for Transfo

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing Paper Introduction Multi-task indoor scene understanding is widely considered a

A unified 3D Transformer Pipeline for visual synthesis

Overview This is the official repo for the paper: "NÜWA: Visual Synthesis Pre-training for Neural visUal World creAtion". NÜWA is a unified multimodal

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing Paper Introduction Multi-task indoor scene understanding is widely considered a

OpenMMLab Text Detection, Recognition and Understanding Toolbox

Introduction English | 简体中文 MMOCR is an open-source toolbox based on PyTorch and mmdetection for text detection, text recognition, and the correspondi

OpenMMLab Image Classification Toolbox and Benchmark

Introduction English | 简体中文 MMClassification is an open source image classification toolbox based on PyTorch. It is a part of the OpenMMLab project. D

Question and answer retrieval in Turkish with BERT

trfaq Google supported this work by providing Google Cloud credit. Thank you Google for supporting the open source! 🎉 What is this? At this repo, I'm

MHFormer: Multi-Hypothesis Transformer for 3D Human Pose Estimation

MHFormer: Multi-Hypothesis Transformer for 3D Human Pose Estimation This repo is the official implementation of "MHFormer: Multi-Hypothesis Transforme

A library that integrates huggingface transformers with the world of fastai, giving fastai devs everything they need to train, evaluate, and deploy transformer specific models.

blurr A library that integrates huggingface transformers with version 2 of the fastai framework Install You can now pip install blurr via pip install

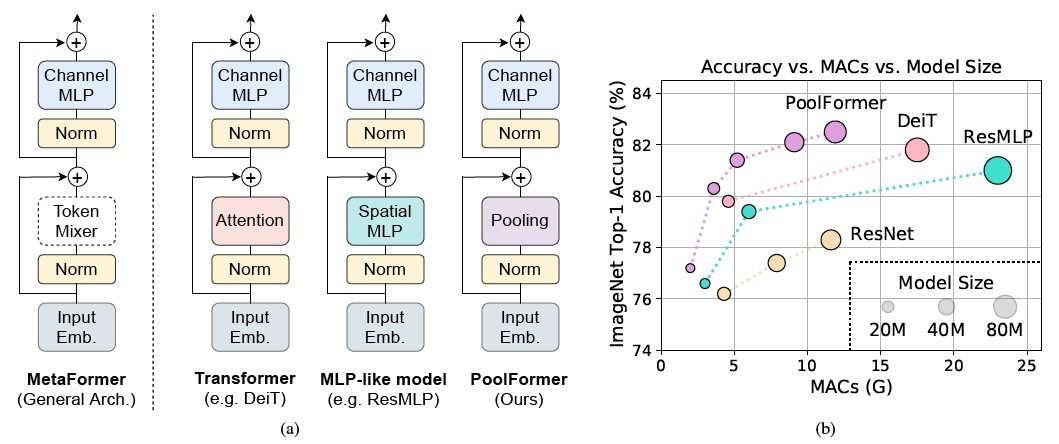

PoolFormer: MetaFormer is Actually What You Need for Vision

PoolFormer: MetaFormer is Actually What You Need for Vision (arXiv) This is a PyTorch implementation of PoolFormer proposed by our paper "MetaFormer i

Skoot is a lightweight python library of machine learning transformer classes that interact with scikit-learn and pandas.

Skoot is a lightweight python library of machine learning transformer classes that interact with scikit-learn and pandas. Its objective is to ex

Tensorflow 2.x implementation of Vision-Transformer model

Vision Transformer Unofficial Tensorflow 2.x implementation of the Transformer based Image Classification model proposed by the paper AN IMAGE IS WORT

State of the art faster Natural Language Processing in Tensorflow 2.0 .

tf-transformers: faster and easier state-of-the-art NLP in TensorFlow 2.0 ****************************************************************************

This is an official implementation for "Exploiting Temporal Contexts with Strided Transformer for 3D Human Pose Estimation".

Exploiting Temporal Contexts with Strided Transformer for 3D Human Pose Estimation This repo is the official implementation of Exploiting Temporal Con

Generalized Decision Transformer for Offline Hindsight Information Matching

Generalized Decision Transformer for Offline Hindsight Information Matching [arxiv] If you use this codebase for your research, please cite the paper:

The pure and clear PyTorch Distributed Training Framework.

The pure and clear PyTorch Distributed Training Framework. Introduction Requirements and Usage Dependency Dataset Basic Usage Slurm Cluster Usage Base

TransMorph: Transformer for Medical Image Registration

TransMorph: Transformer for Medical Image Registration keywords: Vision Transformer, Swin Transformer, convolutional neural networks, image registrati

Official repository for "Restormer: Efficient Transformer for High-Resolution Image Restoration". SOTA for motion deblurring, image deraining, denoising (Gaussian/real data), and defocus deblurring.

Restormer: Efficient Transformer for High-Resolution Image Restoration Syed Waqas Zamir, Aditya Arora, Salman Khan, Munawar Hayat, Fahad Shahbaz Khan,

Code for the paper "Offline Reinforcement Learning as One Big Sequence Modeling Problem"

Trajectory Transformer Code release for Offline Reinforcement Learning as One Big Sequence Modeling Problem. Installation All python dependencies are

Conditional Transformer Language Model for Controllable Generation

CTRL - A Conditional Transformer Language Model for Controllable Generation Authors: Nitish Shirish Keskar, Bryan McCann, Lav Varshney, Caiming Xiong,

nlp-tutorial is a tutorial for who is studying NLP(Natural Language Processing) using Pytorch

nlp-tutorial is a tutorial for who is studying NLP(Natural Language Processing) using Pytorch. Most of the models in NLP were implemented with less than 100 lines of code.(except comments or blank lines)

Deploy optimized transformer based models on Nvidia Triton server

🤗 Hugging Face Transformer submillisecond inference 🤯 and deployment on Nvidia Triton server Yes, you can perfom inference with transformer based mo

Official repository for the paper "GN-Transformer: Fusing AST and Source Code information in Graph Networks".

GN-Transformer AST This is the official repository for the paper "GN-Transformer: Fusing AST and Source Code information in Graph Networks". Data Prep

The implementation of DeBERTa

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

Official repository for "Restormer: Efficient Transformer for High-Resolution Image Restoration". SOTA results for single-image motion deblurring, image deraining, image denoising (synthetic and real data), and dual-pixel defocus deblurring.

Restormer: Efficient Transformer for High-Resolution Image Restoration Syed Waqas Zamir, Aditya Arora, Salman Khan, Munawar Hayat, Fahad Shahbaz Khan,

A PyTorch implementation of "CoAtNet: Marrying Convolution and Attention for All Data Sizes".

CoAtNet Overview This is a PyTorch implementation of CoAtNet specified in "CoAtNet: Marrying Convolution and Attention for All Data Sizes", arXiv 2021

Implementation of DocFormer: End-to-End Transformer for Document Understanding, a multi-modal transformer based architecture for the task of Visual Document Understanding (VDU)

DocFormer - PyTorch Implementation of DocFormer: End-to-End Transformer for Document Understanding, a multi-modal transformer based architecture for t

Official implementation of UTNet: A Hybrid Transformer Architecture for Medical Image Segmentation

UTNet (Accepted at MICCAI 2021) Official implementation of UTNet: A Hybrid Transformer Architecture for Medical Image Segmentation Introduction Transf

PyTorch implementation for our NeurIPS 2021 Spotlight paper "Long Short-Term Transformer for Online Action Detection".

Long Short-Term Transformer for Online Action Detection Introduction This is a PyTorch implementation for our NeurIPS 2021 Spotlight paper "Long Short

A transformer-based method for Healthcare Image Captioning in Vietnamese

vieCap4H Challenge 2021: A transformer-based method for Healthcare Image Captioning in Vietnamese This repo GitHub contains our solution for vieCap4H

TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-Captured Scenarios

TPH-YOLOv5 This repo is the implementation of "TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-Captured

Flaxformer: transformer architectures in JAX/Flax

Flaxformer is a transformer library for primarily NLP and multimodal research at Google.

A Pytorch implement of paper "Anomaly detection in dynamic graphs via transformer" (TADDY).

TADDY: Anomaly detection in dynamic graphs via transformer This repo covers an reference implementation for the paper "Anomaly detection in dynamic gr

Session-aware Item-combination Recommendation with Transformer Network

Session-aware Item-combination Recommendation with Transformer Network 2nd place (0.39224) code and report for IEEE BigData Cup 2021 Track1 Report EDA

Official implementation of Meta-StyleSpeech and StyleSpeech

Meta-StyleSpeech : Multi-Speaker Adaptive Text-to-Speech Generation Dongchan Min, Dong Bok Lee, Eunho Yang, and Sung Ju Hwang This is an official code

A relatively simple python program to generate one of those reddit text to speech videos dominating youtube.

Reddit text to speech generator A basic reddit tts video generator Current functionality Generate videos for subs based on comments,(askreddit) so rea

High-resolution networks and Segmentation Transformer for Semantic Segmentation

High-resolution networks and Segmentation Transformer for Semantic Segmentation Branches This is the implementation for HRNet + OCR. The PyTroch 1.1 v

![[CVPR'20] TTSR: Learning Texture Transformer Network for Image Super-Resolution](https://github.com/FuzhiYang/TTSR/raw/master/IMG/TT.png)

[CVPR'20] TTSR: Learning Texture Transformer Network for Image Super-Resolution

TTSR Official PyTorch implementation of the paper Learning Texture Transformer Network for Image Super-Resolution accepted in CVPR 2020. Contents Intr

Boundary-aware Transformers for Skin Lesion Segmentation

Boundary-aware Transformers for Skin Lesion Segmentation Introduction This is an official release of the paper Boundary-aware Transformers for Skin Le

History Aware Multimodal Transformer for Vision-and-Language Navigation

History Aware Multimodal Transformer for Vision-and-Language Navigation This repository is the official implementation of History Aware Multimodal Tra

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

aMLP Transformer Model for Japanese

aMLP-japanese Japanese aMLP Pretrained Model aMLPとは、Liu, Daiらが提案する、Transformerモデルです。 ざっくりというと、BERTの代わりに使えて、より性能の良いモデルです。 詳しい解説は、こちらの記事などを参考にしてください。 この

History Aware Multimodal Transformer for Vision-and-Language Navigation

History Aware Multimodal Transformer for Vision-and-Language Navigation This repository is the official implementation of History Aware Multimodal Tra

Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning using 🤗 transformers

hierarchical-transformer-1d Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning using 🤗 transformers In Progress!! 2021.

Oriented Object Detection: Oriented RepPoints + Swin Transformer/ReResNet

Oriented RepPoints for Aerial Object Detection The code for the implementation of “Oriented RepPoints + Swin Transformer/ReResNet”. Introduction Based

Transformer part of 12th place solution in Riiid! Answer Correctness Prediction

kaggle_riiid Transformer part of 12th place solution in Riiid! Answer Correctness Prediction. Please see here for more information. Execution You need

Implementation of TTS with combination of Tacotron2 and HiFi-GAN

Tacotron2-HiFiGAN-master Implementation of TTS with combination of Tacotron2 and HiFi-GAN for Mandarin TTS. Inference In order to inference, we need t

Meta-TTS: Meta-Learning for Few-shot SpeakerAdaptive Text-to-Speech

Meta-TTS: Meta-Learning for Few-shot SpeakerAdaptive Text-to-Speech This repository is the official implementation of "Meta-TTS: Meta-Learning for Few

The official implementation of Theme Transformer

Theme Transformer This is the official implementation of Theme Transformer. Checkout our demo and paper : Demo | arXiv Environment: using python versi

Azure Neural Speech Service TTS

Written in Python using the Azure Speech SDK. App.py provides an easy way to create an Text-To-Speech request to Azure Speech and download the wav file. Azure Neural Voices Text-To-Speech enables fluid, natural-sounding text to speech that matches the patterns and intonation of human voices.

Implementation of Hourglass Transformer, in Pytorch, from Google and OpenAI

Hourglass Transformer - Pytorch (wip) Implementation of Hourglass Transformer, in Pytorch. It will also contain some of my own ideas about how to make

ViDT: An Efficient and Effective Fully Transformer-based Object Detector

ViDT: An Efficient and Effective Fully Transformer-based Object Detector by Hwanjun Song1, Deqing Sun2, Sanghyuk Chun1, Varun Jampani2, Dongyoon Han1,

Official code and pretrained models for CTRL-C (Camera calibration TRansformer with Line-Classification).

CTRL-C: Camera calibration TRansformer with Line-Classification This repository contains the official code and pretrained models for CTRL-C (Camera ca

ICCV2021, Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet

Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet, ICCV 2021 Update: 2021/03/11: update our new results. Now our T2T-ViT-14 w

This is a collection of our NAS and Vision Transformer work.

AutoML - Neural Architecture Search This is a collection of our AutoML-NAS work iRPE (NEW): Rethinking and Improving Relative Position Encoding for Vi

ICCV2021 Papers with Code

ICCV2021 Papers with Code

Efficient Training of Audio Transformers with Patchout

PaSST: Efficient Training of Audio Transformers with Patchout This is the implementation for Efficient Training of Audio Transformers with Patchout Pa

Flaxformer: transformer architectures in JAX/Flax

Flaxformer: transformer architectures in JAX/Flax Flaxformer is a transformer library for primarily NLP and multimodal research at Google. It is used