248 Repositories

Python distillation-loss Libraries

Classify bird species based on their songs using SIamese Networks and 1D dilated convolutions.

The goal is to classify different birds species based on their songs/calls. Spectrograms have been extracted from the audio samples and used as features for classification.

PyTorch implementation for Partially View-aligned Representation Learning with Noise-robust Contrastive Loss (CVPR 2021)

2021-CVPR-MvCLN This repo contains the code and data of the following paper accepted by CVPR 2021 Partially View-aligned Representation Learning with

Code implementation of Data Efficient Stagewise Knowledge Distillation paper.

Data Efficient Stagewise Knowledge Distillation Table of Contents Data Efficient Stagewise Knowledge Distillation Table of Contents Requirements Image

clDice - a Novel Topology-Preserving Loss Function for Tubular Structure Segmentation

README clDice - a Novel Topology-Preserving Loss Function for Tubular Structure Segmentation CVPR 2021 Authors: Suprosanna Shit and Johannes C. Paetzo

Official PyTorch Implementation of Embedding Transfer with Label Relaxation for Improved Metric Learning, CVPR 2021

Embedding Transfer with Label Relaxation for Improved Metric Learning Official PyTorch implementation of CVPR 2021 paper Embedding Transfer with Label

PyTorch implementation of Soft-DTW: a Differentiable Loss Function for Time-Series in CUDA

Soft DTW Loss Function for PyTorch in CUDA This is a Pytorch Implementation of Soft-DTW: a Differentiable Loss Function for Time-Series which is batch

This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard evaluation metric to measure the accuracy and robustness of 3D face reconstruction methods from a single image under variations in viewing angle, lighting, and common occlusions.

NoW Evaluation This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard e

The official implementation of Equalization Loss v1 & v2 (CVPR 2020, 2021) based on MMDetection.

The Equalization Losses for Long-tailed Object Detection and Instance Segmentation This repo is official implementation CVPR 2021 paper: Equalization

2021海华AI挑战赛·中文阅读理解·技术组·第三名

文字是人类用以记录和表达的最基本工具,也是信息传播的重要媒介。透过文字与符号,我们可以追寻人类文明的起源,可以传播知识与经验,读懂文字是认识与了解的第一步。对于人工智能而言,它的核心问题之一就是认知,而认知的核心则是语义理解。

A Pancakeswap and Uniswap trading client (and bot) with limit orders, marker orders, stop-loss, custom gas strategies, a GUI and much more.

Pancakeswap and Uniswap trading client Adam A A Pancakeswap and Uniswap trading client (and bot) with market orders, limit orders, stop-loss, custom g

Sharpness-Aware Minimization for Efficiently Improving Generalization

Sharpness-Aware-Minimization-TensorFlow This repository provides a minimal implementation of sharpness-aware minimization (SAM) (Sharpness-Aware Minim

Registration Loss Learning for Deep Probabilistic Point Set Registration

RLLReg This repository contains a Pytorch implementation of the point set registration method RLLReg. Details about the method can be found in the 3DV

An implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks in PyTorch.

Neural Attention Distillation This is an implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep

Multi-Scale Aligned Distillation for Low-Resolution Detection (CVPR2021)

MSAD Multi-Scale Aligned Distillation for Low-Resolution Detection Lu Qi*, Jason Kuen*, Jiuxiang Gu, Zhe Lin, Yi Wang, Yukang Chen, Yanwei Li, Jiaya J

Official implementation for (Show, Attend and Distill: Knowledge Distillation via Attention-based Feature Matching, AAAI-2021)

Show, Attend and Distill: Knowledge Distillation via Attention-based Feature Matching Official pytorch implementation of "Show, Attend and Distill: Kn

An end-to-end machine learning library to directly optimize AUC loss

LibAUC An end-to-end machine learning library for AUC optimization. Why LibAUC? Deep AUC Maximization (DAM) is a paradigm for learning a deep neural n

A Pancakeswap v2 trading client (and bot) with limit orders, stop-loss, custom gas strategies, a GUI and much more.

Pancakeswap v2 trading client A Pancakeswap trading client (and bot) with limit orders, stop-loss, custom gas strategies, a GUI and much more. If you

Auto Seg-Loss: Searching Metric Surrogates for Semantic Segmentation

Auto-Seg-Loss By Hao Li, Chenxin Tao, Xizhou Zhu, Xiaogang Wang, Gao Huang, Jifeng Dai This is the official implementation of the ICLR 2021 paper Auto

RL and distillation in CARLA using a factorized world model

World on Rails Learning to drive from a world on rails Dian Chen, Vladlen Koltun, Philipp Krähenbühl, arXiv techical report (arXiv 2105.00636) This re

Location-Sensitive Visual Recognition with Cross-IOU Loss

The trained models are temporarily unavailable, but you can train the code using reasonable computational resource. Location-Sensitive Visual Recognit

CVPR 2021: "The Spatially-Correlative Loss for Various Image Translation Tasks"

Spatially-Correlative Loss arXiv | website We provide the Pytorch implementation of "The Spatially-Correlative Loss for Various Image Translation Task

Multi-scale discriminator feature-wise loss function

Multi-Scale Discriminative Feature Loss This repository provides code for Multi-Scale Discriminative Feature (MDF) loss for image reconstruction algor

Tools for use in DeFi. Impermanent Loss calculations, staking and farming strategies, coingecko and pancakeswap API queries, liquidity pools and more

DeFi open source tools Get Started Instalation General Tools Impermanent Loss, simple calculation Compare Buy & Hold with Staking and Farming Complete

An unofficial implementation of the paper "AutoVC: Zero-Shot Voice Style Transfer with Only Autoencoder Loss".

AutoVC: Zero-Shot Voice Style Transfer with Only Autoencoder Loss This is an unofficial implementation of AutoVC based on the official one. The reposi

The repo contains the code of the ACL2020 paper `Dice Loss for Data-imbalanced NLP Tasks`

Dice Loss for NLP Tasks This repository contains code for Dice Loss for Data-imbalanced NLP Tasks at ACL2020. Setup Install Package Dependencies The c

AMTML-KD: Adaptive Multi-teacher Multi-level Knowledge Distillation

AMTML-KD: Adaptive Multi-teacher Multi-level Knowledge Distillation

Multi-Scale Aligned Distillation for Low-Resolution Detection (CVPR2021)

MSAD Multi-Scale Aligned Distillation for Low-Resolution Detection Lu Qi*, Jason Kuen*, Jiuxiang Gu, Zhe Lin, Yi Wang, Yukang Chen, Yanwei Li, Jiaya J

[ICLR 2021 Spotlight Oral] "Undistillable: Making A Nasty Teacher That CANNOT teach students", Haoyu Ma, Tianlong Chen, Ting-Kuei Hu, Chenyu You, Xiaohui Xie, Zhangyang Wang

Undistillable: Making A Nasty Teacher That CANNOT teach students "Undistillable: Making A Nasty Teacher That CANNOT teach students" Haoyu Ma, Tianlong

Adversarial Adaptation with Distillation for BERT Unsupervised Domain Adaptation

Knowledge Distillation for BERT Unsupervised Domain Adaptation Official PyTorch implementation | Paper Abstract A pre-trained language model, BERT, ha

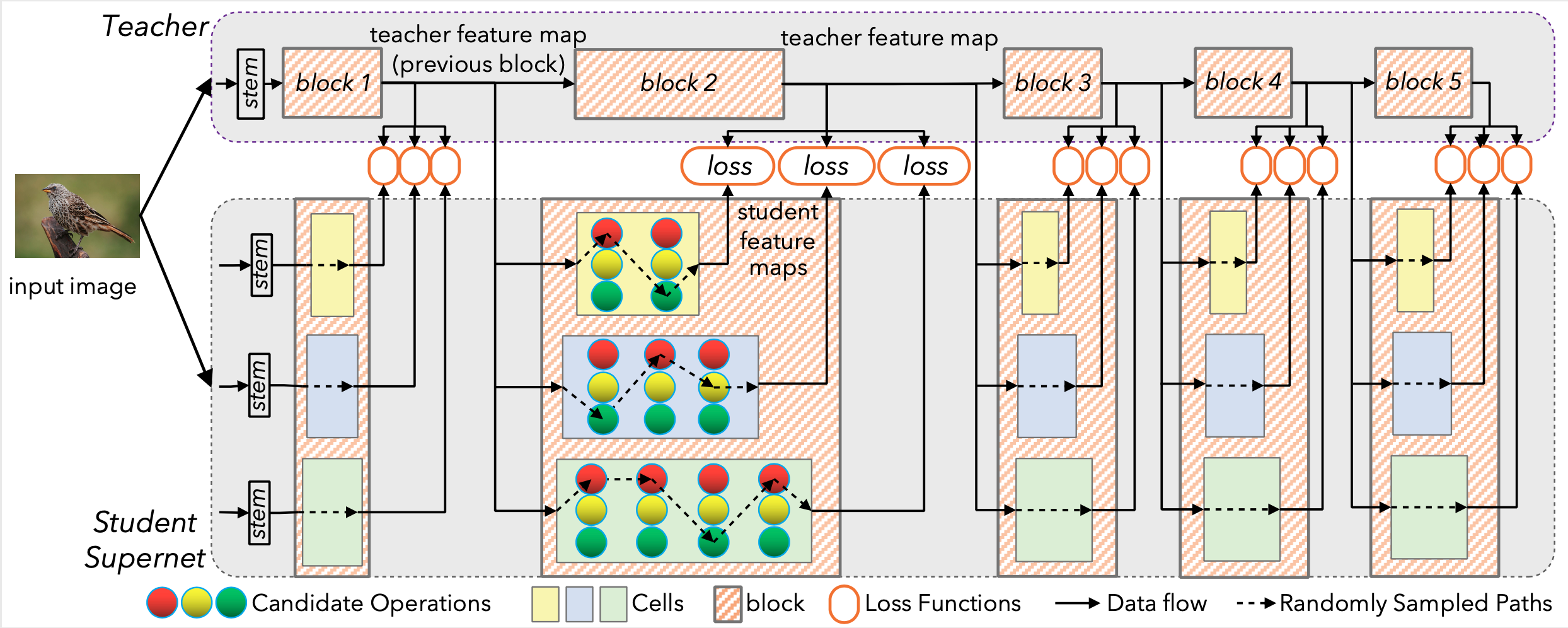

Block-wisely Supervised Neural Architecture Search with Knowledge Distillation (CVPR 2020)

DNA This repository provides the code of our paper: Blockwisely Supervised Neural Architecture Search with Knowledge Distillation. Illustration of DNA

A collection of loss functions for medical image segmentation

A collection of loss functions for medical image segmentation

Simple Pose: Rethinking and Improving a Bottom-up Approach for Multi-Person Pose Estimation

SimplePose Code and pre-trained models for our paper, “Simple Pose: Rethinking and Improving a Bottom-up Approach for Multi-Person Pose Estimation”, a

Official implementation for (Refine Myself by Teaching Myself : Feature Refinement via Self-Knowledge Distillation, CVPR-2021)

FRSKD Official implementation for Refine Myself by Teaching Myself : Feature Refinement via Self-Knowledge Distillation (CVPR-2021) Requirements Pytho

PyTorch extensions for fast R&D prototyping and Kaggle farming

Pytorch-toolbelt A pytorch-toolbelt is a Python library with a set of bells and whistles for PyTorch for fast R&D prototyping and Kaggle farming: What

git《Investigating Loss Functions for Extreme Super-Resolution》(CVPR 2020) GitHub:

Investigating Loss Functions for Extreme Super-Resolution NTIRE 2020 Perceptual Extreme Super-Resolution Submission. Our method ranked first and secon

CDIoU and CDIoU loss is like a convenient plug-in that can be used in multiple models. CDIoU and CDIoU loss have different excellent performances in several models such as Faster R-CNN, YOLOv4, RetinaNet and . There is a maximum AP improvement of 1.9% and an average AP of 0.8% improvement on MS COCO dataset, compared to traditional evaluation-feedback modules. Here we just use as an example to illustrate the code.

CDIoU-CDIoUloss CDIoU and CDIoU loss is like a convenient plug-in that can be used in multiple models. CDIoU and CDIoU loss have different excellent p

Convolutional Recurrent Neural Networks(CRNN) for Scene Text Recognition

CRNN_Tensorflow This is a TensorFlow implementation of a Deep Neural Network for scene text recognition. It is mainly based on the paper "An End-to-En

Tensorflow-based CNN+LSTM trained with CTC-loss for OCR

Overview This collection demonstrates how to construct and train a deep, bidirectional stacked LSTM using CNN features as input with CTC loss to perfo

Use Convolutional Recurrent Neural Network to recognize the Handwritten line text image without pre segmentation into words or characters. Use CTC loss Function to train.

Handwritten Line Text Recognition using Deep Learning with Tensorflow Description Use Convolutional Recurrent Neural Network to recognize the Handwrit

Unofficial implementation of "TTNet: Real-time temporal and spatial video analysis of table tennis" (CVPR 2020)

TTNet-Pytorch The implementation for the paper "TTNet: Real-time temporal and spatial video analysis of table tennis" An introduction of the project c

Dogs classification with Deep Metric Learning using some popular losses

Tsinghua Dogs classification with Deep Metric Learning 1. Introduction Tsinghua Dogs dataset Tsinghua Dogs is a fine-grained classification dataset fo

Official implementation of our paper "LLA: Loss-aware Label Assignment for Dense Pedestrian Detection" in Pytorch.

LLA: Loss-aware Label Assignment for Dense Pedestrian Detection This project provides an implementation for "LLA: Loss-aware Label Assignment for Dens

EDCNN: Edge enhancement-based Densely Connected Network with Compound Loss for Low-Dose CT Denoising

EDCNN: Edge enhancement-based Densely Connected Network with Compound Loss for Low-Dose CT Denoising By Tengfei Liang, Yi Jin, Yidong Li, Tao Wang. Th

Let Python optimize the best stop loss and take profits for your TradingView strategy.

TradingView Machine Learning TradeView is a free and open source Trading View bot written in Python. It is designed to support all major exchanges. It

Official code for paper "Optimization for Oriented Object Detection via Representation Invariance Loss".

Optimization for Oriented Object Detection via Representation Invariance Loss By Qi Ming, Zhiqiang Zhou, Lingjuan Miao, Xue Yang, and Yunpeng Dong. Th

Localization Distillation for Object Detection

Localization Distillation for Object Detection This repo is based on mmDetection. This is the code for our paper: Localization Distillation

Seach Losses of our paper 'Loss Function Discovery for Object Detection via Convergence-Simulation Driven Search', accepted by ICLR 2021.

CSE-Autoloss Designing proper loss functions for vision tasks has been a long-standing research direction to advance the capability of existing models

Create animations for the optimization trajectory of neural nets

Animating the Optimization Trajectory of Neural Nets loss-landscape-anim lets you create animated optimization path in a 2D slice of the loss landscap