248 Repositories

Python distillation-loss Libraries

Official code for our CVPR '22 paper "Dataset Distillation by Matching Training Trajectories"

Dataset Distillation by Matching Training Trajectories Project Page | Paper This repo contains code for training expert trajectories and distilling sy

I will implement Fastai in each projects present in this repository.

DEEP LEARNING FOR CODERS WITH FASTAI AND PYTORCH The repository contains a list of the projects which I have worked on while reading the book Deep Lea

🐤 Nix-TTS: An Incredibly Lightweight End-to-End Text-to-Speech Model via Non End-to-End Distillation

🐤 Nix-TTS An Incredibly Lightweight End-to-End Text-to-Speech Model via Non End-to-End Distillation Rendi Chevi, Radityo Eko Prasojo, Alham Fikri Aji

Anomaly Detection via Reverse Distillation from One-Class Embedding

Anomaly Detection via Reverse Distillation from One-Class Embedding Implementation (Official Code ⭐️ ⭐️ ⭐️ ) Environment pytorch == 1.91 torchvision =

(CVPR 2022) A minimalistic mapless end-to-end stack for joint perception, prediction, planning and control for self driving.

LAV Learning from All Vehicles Dian Chen, Philipp Krähenbühl CVPR 2022 (also arXiV 2203.11934) This repo contains code for paper Learning from all veh

WarpRNNT loss ported in Numba CPU/CUDA for Pytorch

RNNT loss in Pytorch - Numba JIT compiled (warprnnt_numba) Warp RNN Transducer Loss for ASR in Pytorch, ported from HawkAaron/warp-transducer and a re

CLIP (Contrastive Language–Image Pre-training) for Italian

Italian CLIP CLIP (Radford et al., 2021) is a multimodal model that can learn to represent images and text jointly in the same space. In this project,

LiDAR Distillation: Bridging the Beam-Induced Domain Gap for 3D Object Detection

LiDAR Distillation Paper | Model LiDAR Distillation: Bridging the Beam-Induced Domain Gap for 3D Object Detection Yi Wei, Zibu Wei, Yongming Rao, Jiax

Implementation of some unbalanced loss like focal_loss, dice_loss, DSC Loss, GHM Loss et.al

Implementation of some unbalanced loss for NLP task like focal_loss, dice_loss, DSC Loss, GHM Loss et.al Summary Here is a loss implementation reposit

The code is for the paper "A Self-Distillation Embedded Supervised Affinity Attention Model for Few-Shot Segmentation"

SD-AANet The code is for the paper "A Self-Distillation Embedded Supervised Affinity Attention Model for Few-Shot Segmentation" [arxiv] Overview confi

Codes for SIGIR'22 Paper 'On-Device Next-Item Recommendation with Self-Supervised Knowledge Distillation'

OD-Rec Codes for SIGIR'22 Paper 'On-Device Next-Item Recommendation with Self-Supervised Knowledge Distillation' Paper, saved teacher models and Andro

SCALoss: Side and Corner Aligned Loss for Bounding Box Regression (AAAI2022).

SCALoss PyTorch implementation of the paper "SCALoss: Side and Corner Aligned Loss for Bounding Box Regression" (AAAI 2022). Introduction IoU-based lo

![[CVPR 2022] Official code for the paper:](https://github.com/mdca-loss/MDCA-Calibration/raw/main/content/teaser.png)

[CVPR 2022] Official code for the paper: "A Stitch in Time Saves Nine: A Train-Time Regularizing Loss for Improved Neural Network Calibration"

MDCA Calibration This is the official PyTorch implementation for the paper: "A Stitch in Time Saves Nine: A Train-Time Regularizing Loss for Improved

ReLoss - Official implementation for paper "Relational Surrogate Loss Learning" ICLR 2022

Relational Surrogate Loss Learning (ReLoss) Official implementation for paper "R

SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING - The Facebook paper about fine tuning RoBERTa with contrastive loss

"# SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING" i

Locally Differentially Private Distributed Deep Learning via Knowledge Distillation (LDP-DL)

Locally Differentially Private Distributed Deep Learning via Knowledge Distillation (LDP-DL) A preprint version of our paper: Link here This is a samp

Pytorch implementation of "Peer Loss Functions: Learning from Noisy Labels without Knowing Noise Rates"

Peer Loss functions This repository is the (Multi-Class & Deep Learning) Pytorch implementation of "Peer Loss Functions: Learning from Noisy Labels wi

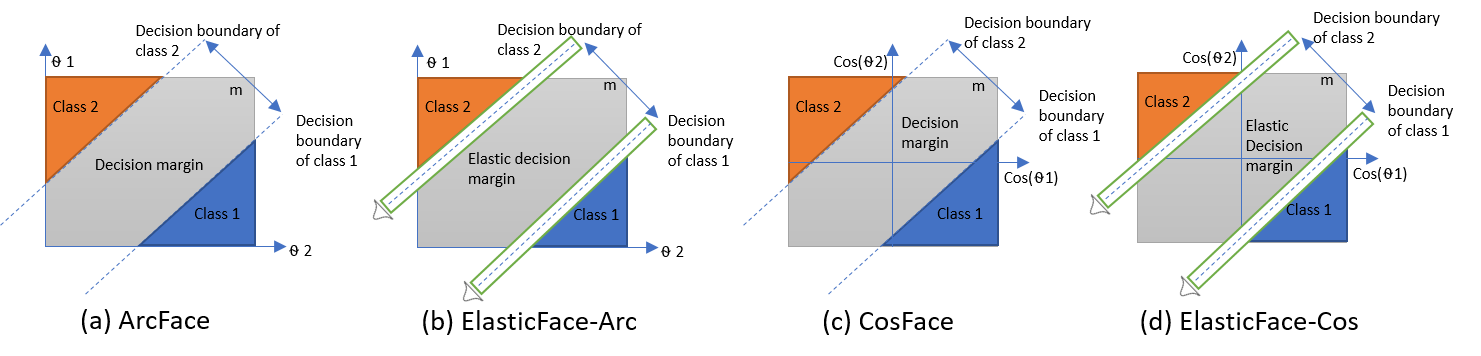

ElasticFace: Elastic Margin Loss for Deep Face Recognition

This is the official repository of the paper: ElasticFace: Elastic Margin Loss for Deep Face Recognition Paper on arxiv: arxiv Model Log file Pretrain

A pure PyTorch implementation of the loss described in "Online Segment to Segment Neural Transduction"

ssnt-loss ℹ️ This is a WIP project. the implementation is still being tested. A pure PyTorch implementation of the loss described in "Online Segment t

A PyTorch-based R-YOLOv4 implementation which combines YOLOv4 model and loss function from R3Det for arbitrary oriented object detection.

R-YOLOv4 This is a PyTorch-based R-YOLOv4 implementation which combines YOLOv4 model and loss function from R3Det for arbitrary oriented object detect

Supervised Sliding Window Smoothing Loss Function Based on MS-TCN for Video Segmentation

SSWS-loss_function_based_on_MS-TCN Supervised Sliding Window Smoothing Loss Function Based on MS-TCN for Video Segmentation Supervised Sliding Window

Contrastive Loss Gradient Attack (CLGA)

Contrastive Loss Gradient Attack (CLGA) Official implementation of Unsupervised Graph Poisoning Attack via Contrastive Loss Back-propagation, WWW22 Bu

Detailed analysis on fraud claims in insurance companies, gives you information as to why huge loss take place in insurance companies

Insurance-Fraud-Claims Detailed analysis on fraud claims in insurance companies, gives you information as to why huge loss take place in insurance com

Improving Object Detection by Label Assignment Distillation

Improving Object Detection by Label Assignment Distillation This is the official implementation of the WACV 2022 paper Improving Object Detection by L

Unsupervised Feature Loss (UFLoss) for High Fidelity Deep learning (DL)-based reconstruction

Unsupervised Feature Loss (UFLoss) for High Fidelity Deep learning (DL)-based reconstruction Official github repository for the paper High Fidelity De

PyTorch source code for Distilling Knowledge by Mimicking Features

LSHFM.detection This is the PyTorch source code for Distilling Knowledge by Mimicking Features. And this project contains code for object detection wi

Pytorch implementation of ICASSP 2022 paper Attention Probe: Vision Transformer Distillation in the Wild

Attention Probe: Vision Transformer Distillation in the Wild Jiahao Wang, Mingdeng Cao, Shuwei Shi, Baoyuan Wu, Yujiu Yang In ICASSP 2022 This code is

Keras Image Embeddings using Contrastive Loss

Image to Embedding projection in vector space. Implementation in keras and tensorflow of batch all triplet loss for one-shot/few-shot learning.

Attention Probe: Vision Transformer Distillation in the Wild

Attention Probe: Vision Transformer Distillation in the Wild Jiahao Wang, Mingdeng Cao, Shuwei Shi, Baoyuan Wu, Yujiu Yang In ICASSP 2022 This code is

HistoSeg : Quick attention with multi-loss function for multi-structure segmentation in digital histology images

HistoSeg : Quick attention with multi-loss function for multi-structure segmentation in digital histology images Histological Image Segmentation This

Keras Image Embeddings using Contrastive Loss

Keras-Image-Embeddings-using-Contrastive-Loss Image to Embedding projection in vector space. Implementation in keras and tensorflow for custom data. B

🚀 PyTorch Implementation of "Progressive Distillation for Fast Sampling of Diffusion Models(v-diffusion)"

PyTorch Implementation of "Progressive Distillation for Fast Sampling of Diffusion Models(v-diffusion)" Unofficial PyTorch Implementation of Progressi

CAMoE + Dual SoftMax Loss (DSL): Improving Video-Text Retrieval by Multi-Stream Corpus Alignment and Dual Softmax Loss

CAMoE + Dual SoftMax Loss (DSL): Improving Video-Text Retrieval by Multi-Stream Corpus Alignment and Dual Softmax Loss This is official implement of "

CKD - Collaborative Knowledge Distillation for Heterogeneous Information Network Embedding

Collaborative Knowledge Distillation for Heterogeneous Information Network Embed

Implementations for the ICLR-2021 paper: SEED: Self-supervised Distillation For Visual Representation.

Implementations for the ICLR-2021 paper: SEED: Self-supervised Distillation For Visual Representation.

Implement of "Training deep neural networks via direct loss minimization" in PyTorch for 0-1 loss

This is the implementation of "Training deep neural networks via direct loss minimization" published at ICML 2016 in PyTorch. The implementation targe

📚 A collection of all the Deep Learning Metrics that I came across which are not accuracy/loss.

📚 A collection of all the Deep Learning Metrics that I came across which are not accuracy/loss.

Denoising images with Fourier Ring Correlation loss

Denoising images with Fourier Ring Correlation loss The python code accompanies the working manuscript Image quality measurements and denoising using

Source code for the BMVC-2021 paper "SimReg: Regression as a Simple Yet Effective Tool for Self-supervised Knowledge Distillation".

SimReg: A Simple Regression Based Framework for Self-supervised Knowledge Distillation Source code for the paper "SimReg: Regression as a Simple Yet E

Repo for the paper Extrapolating from a Single Image to a Thousand Classes using Distillation

Extrapolating from a Single Image to a Thousand Classes using Distillation by Yuki M. Asano* and Aaqib Saeed* (*Equal Contribution) Extrapolating from

Intel® Neural Compressor is an open-source Python library running on Intel CPUs and GPUs

Intel® Neural Compressor targeting to provide unified APIs for network compression technologies, such as low precision quantization, sparsity, pruning, knowledge distillation, across different deep learning frameworks to pursue optimal inference performance.

Demonstration of transfer of knowledge and generalization with distillation

Distilling-the-Knowledge-in-a-Neural-Network This is an implementation of a part of the paper "Distilling the Knowledge in a Neural Network" (https://

A PyTorch implementation of the continual learning experiments with deep neural networks

Brain-Inspired Replay A PyTorch implementation of the continual learning experiments with deep neural networks described in the following paper: Brain

Official repository for "Orthogonal Projection Loss" (ICCV'21)

Orthogonal Projection Loss (ICCV'21) Kanchana Ranasinghe, Muzammal Naseer, Munawar Hayat, Salman Khan, & Fahad Shahbaz Khan Paper Link | Project Page

Paper Title: Heterogeneous Knowledge Distillation for Simultaneous Infrared-Visible Image Fusion and Super-Resolution

HKDnet Paper Title: "Heterogeneous Knowledge Distillation for Simultaneous Infrared-Visible Image Fusion and Super-Resolution" Email: 18186470991@163.

Code for the Lovász-Softmax loss (CVPR 2018)

The Lovász-Softmax loss: A tractable surrogate for the optimization of the intersection-over-union measure in neural networks Maxim Berman, Amal Ranne

PyTorch implementations of the beta divergence loss.

Beta Divergence Loss - PyTorch Implementation This repository contains code for a PyTorch implementation of the beta divergence loss. Dependencies Thi

SAFL: A Self-Attention Scene Text Recognizer with Focal Loss

SAFL: A Self-Attention Scene Text Recognizer with Focal Loss This repository implements the SAFL in pytorch. Installation conda env create -f environm

An Extendible (General) Continual Learning Framework based on Pytorch - official codebase of Dark Experience for General Continual Learning

Mammoth - An Extendible (General) Continual Learning Framework for Pytorch NEWS STAY TUNED: We are working on an update of this repository to include

Sparse Progressive Distillation: Resolving Overfitting under Pretrain-and-Finetune Paradigm

Sparse Progressive Distillation: Resolving Overfitting under Pretrain-and-Finetu

Losslandscapetaxonomy - Taxonomizing local versus global structure in neural network loss landscapes

Taxonomizing local versus global structure in neural network loss landscapes Int

BRNet - code for Automated assessment of BI-RADS categories for ultrasound images using multi-scale neural networks with an order-constrained loss function

BRNet code for "Automated assessment of BI-RADS categories for ultrasound images using multi-scale neural networks with an order-constrained loss func

Memory efficient transducer loss computation

Introduction This project implements the optimization techniques proposed in Improving RNN Transducer Modeling for End-to-End Speech Recognition to re

CCCL: Contrastive Cascade Graph Learning.

CCGL: Contrastive Cascade Graph Learning This repo provides a reference implementation of Contrastive Cascade Graph Learning (CCGL) framework as descr

PyTorch code for training MM-DistillNet for multimodal knowledge distillation

There is More than Meets the Eye: Self-Supervised Multi-Object Detection and Tracking with Sound by Distilling Multimodal Knowledge MM-DistillNet is a

Official repository of the paper Learning to Regress 3D Face Shape and Expression from an Image without 3D Supervision

Official repository of the paper Learning to Regress 3D Face Shape and Expression from an Image without 3D Supervision

Simple and Robust Loss Design for Multi-Label Learning with Missing Labels

Simple and Robust Loss Design for Multi-Label Learning with Missing Labels Official PyTorch Implementation of the paper Simple and Robust Loss Design

chainladder - Property and Casualty Loss Reserving in Python

chainladder (python) chainladder - Property and Casualty Loss Reserving in Python This package gets inspiration from the popular R ChainLadder package

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation

Weak-supervised Visual Geo-localization via Attention-based Knowledge Distillation Introduction WAKD is a PyTorch implementation for our ICPR-2022 pap

simple demo codes for Learning to Teach with Dynamic Loss Functions

Learning to Teach with Dynamic Loss Functions This repo contains the simple demo for the NeurIPS-18 paper: Learning to Teach with Dynamic Loss Functio

This code provides a PyTorch implementation for OTTER (Optimal Transport distillation for Efficient zero-shot Recognition), as described in the paper.

Data Efficient Language-Supervised Zero-Shot Recognition with Optimal Transport Distillation This repository contains PyTorch evaluation code, trainin

![[AAAI 2021] EMLight: Lighting Estimation via Spherical Distribution Approximation and [ICCV 2021] Sparse Needlets for Lighting Estimation with Spherical Transport Loss](https://github.com/fnzhan/Illumination-Estimation/raw/master/teaser1.png)

[AAAI 2021] EMLight: Lighting Estimation via Spherical Distribution Approximation and [ICCV 2021] Sparse Needlets for Lighting Estimation with Spherical Transport Loss

EMLight: Lighting Estimation via Spherical Distribution Approximation (AAAI 2021) Update 12/2021: We release our Virtual Object Relighting (VOR) Datas

Code of TIP2021 Paper《SFace: Sigmoid-Constrained Hypersphere Loss for Robust Face Recognition》. We provide both MxNet and Pytorch versions.

SFace Code of TIP2021 Paper 《SFace: Sigmoid-Constrained Hypersphere Loss for Robust Face Recognition》. We provide both MxNet, PyTorch and Jittor versi

Hierarchical Cross-modal Talking Face Generation with Dynamic Pixel-wise Loss (ATVGnet)

Hierarchical Cross-modal Talking Face Generation with Dynamic Pixel-wise Loss (ATVGnet) By Lele Chen , Ross K Maddox, Zhiyao Duan, Chenliang Xu. Unive

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

The entmax mapping and its loss, a family of sparse softmax alternatives.

entmax This package provides a pytorch implementation of entmax and entmax losses: a sparse family of probability mappings and corresponding loss func

Pytorch implementation of the paper "Class-Balanced Loss Based on Effective Number of Samples"

Class-balanced-loss-pytorch Pytorch implementation of the paper Class-Balanced Loss Based on Effective Number of Samples presented at CVPR'19. Yin Cui

A Pytorch reproduction of Range Loss, which is proposed in paper 《Range Loss for Deep Face Recognition with Long-Tailed Training Data》

RangeLoss Pytorch This is a Pytorch reproduction of Range Loss, which is proposed in paper 《Range Loss for Deep Face Recognition with Long-Tailed Trai

Getting Profit and Loss Make Easy From Binance

Getting Profit and Loss Make Easy From Binance I have been in Binance Automated Trading for some time and have generated a lot of transaction records,

Sleep staging from ECG, assisted with EEG

Sleep_Staging_Knowledge Distillation This codebase implements knowledge distillation approach for ECG based sleep staging assisted by EEG based sleep

Official implementation of the paper "Lightweight Deep CNN for Natural Image Matting via Similarity Preserving Knowledge Distillation"

Lightweight-Deep-CNN-for-Natural-Image-Matting-via-Similarity-Preserving-Knowledge-Distillation Introduction Accepted at IEEE Signal Processing Letter

Mask-invariant Face Recognition through Template-level Knowledge Distillation

Mask-invariant Face Recognition through Template-level Knowledge Distillation This is the official repository of "Mask-invariant Face Recognition thro

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

(Preprint) Official PyTorch implementation of "How Do Vision Transformers Work?"

Searching Parameterized AP Loss for Object Detection.

Parameterized AP Loss By Chenxin Tao, Zizhang Li, Xizhou Zhu, Gao Huang, Yong Liu, Jifeng Dai This is the official implementation of the Neurips 2021

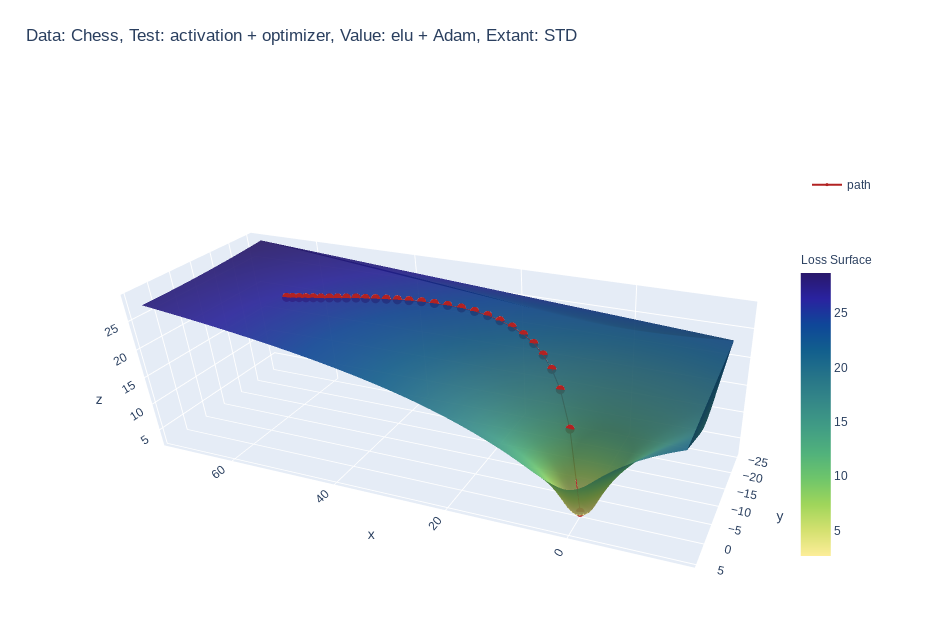

Create 3d loss surface visualizations, with optimizer path. Issues welcome!

MLVTK A loss surface visualization tool Simple feed-forward network trained on chess data, using elu activation and Adam optimizer Simple feed-forward

Official implementation of the Neurips 2021 paper Searching Parameterized AP Loss for Object Detection.

Parameterized AP Loss By Chenxin Tao, Zizhang Li, Xizhou Zhu, Gao Huang, Yong Liu, Jifeng Dai This is the official implementation of the Neurips 2021

Class-Balanced Loss Based on Effective Number of Samples. CVPR 2019

Class-Balanced Loss Based on Effective Number of Samples Tensorflow code for the paper: Class-Balanced Loss Based on Effective Number of Samples Yin C

[NeurIPS 2019] Learning Imbalanced Datasets with Label-Distribution-Aware Margin Loss

Learning Imbalanced Datasets with Label-Distribution-Aware Margin Loss Kaidi Cao, Colin Wei, Adrien Gaidon, Nikos Arechiga, Tengyu Ma This is the offi

Code for "Learning From Multiple Experts: Self-paced Knowledge Distillation for Long-tailed Classification", ECCV 2020 Spotlight

Learning From Multiple Experts: Self-paced Knowledge Distillation for Long-tailed Classification Implementation of "Learning From Multiple Experts: Se

Pytorch Implementations of large number classical backbone CNNs, data enhancement, torch loss, attention, visualization and some common algorithms.

Torch-template-for-deep-learning Pytorch implementations of some **classical backbone CNNs, data enhancement, torch loss, attention, visualization and

Python implementation of ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images, AAAI2022.

ADD: Frequency Attention and Multi-View based Knowledge Distillation to Detect Low-Quality Compressed Deepfake Images Binh M. Le & Simon S. Woo, "ADD:

Perturbed Self-Distillation: Weakly Supervised Large-Scale Point Cloud Semantic Segmentation (ICCV2021)

Perturbed Self-Distillation: Weakly Supervised Large-Scale Point Cloud Semantic Segmentation (ICCV2021) This is the implementation of PSD (ICCV 2021),

The Codebase for Causal Distillation for Language Models.

Causal Distillation for Language Models Zhengxuan Wu*,Atticus Geiger*, Josh Rozner, Elisa Kreiss, Hanson Lu, Thomas Icard, Christopher Potts, Noah D.

A simple library that implements CLIP guided loss in PyTorch.

pytorch_clip_guided_loss: Pytorch implementation of the CLIP guided loss for Text-To-Image, Image-To-Image, or Image-To-Text generation. A simple libr

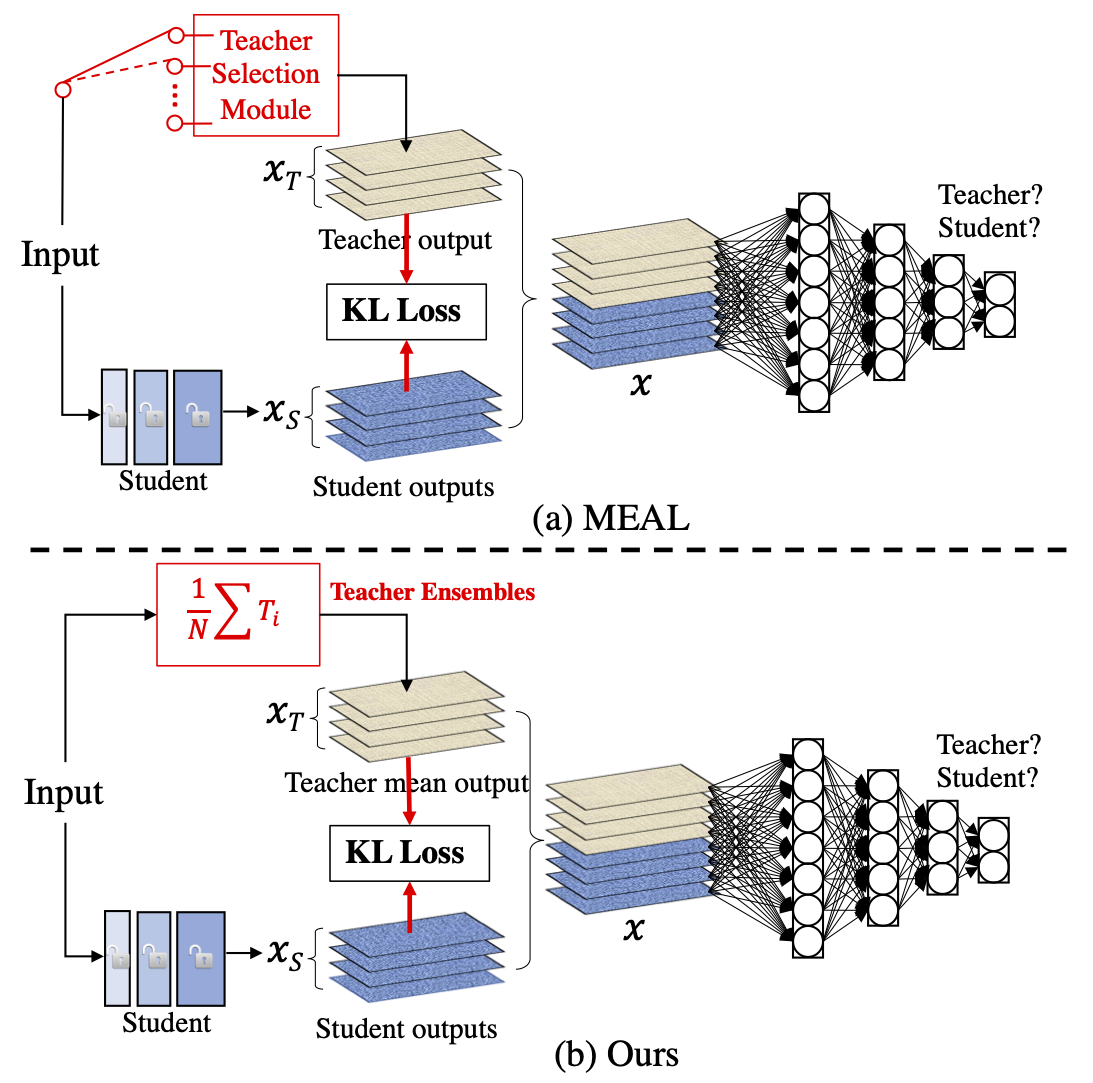

MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks

MEAL-V2 This is the official pytorch implementation of our paper: "MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tric

A Loss Function for Generative Neural Networks Based on Watson’s Perceptual Model

This repository contains the similarity metrics designed and evaluated in the paper, and instructions and code to re-run the experiments. Implementation in the deep-learning framework PyTorch

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

A Fast Knowledge Distillation Framework for Visual Recognition

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

![[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation](https://github.com/canqin001/Efficient_Graph_Similarity_Computation/raw/main/Figs/our-setting.png)

[NeurIPS-2021] Slow Learning and Fast Inference: Efficient Graph Similarity Computation via Knowledge Distillation

Efficient Graph Similarity Computation - (EGSC) This repo contains the source code and dataset for our paper: Slow Learning and Fast Inference: Effici

PyTorch implementation of paper A Fast Knowledge Distillation Framework for Visual Recognition.

FKD: A Fast Knowledge Distillation Framework for Visual Recognition Official PyTorch implementation of paper A Fast Knowledge Distillation Framework f

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

EasyTransfer is designed to make the development of transfer learning in NLP applications easier.

EasyTransfer is designed to make the development of transfer learning in NLP applications easier. The literature has witnessed the success of applying

UnFlow: Unsupervised Learning of Optical Flow with a Bidirectional Census Loss

UnFlow: Unsupervised Learning of Optical Flow with a Bidirectional Census Loss This repository contains the TensorFlow implementation of the paper UnF

The implementation for "Comprehensive Knowledge Distillation with Causal Intervention".

Comprehensive Knowledge Distillation with Causal Intervention This repository is a PyTorch implementation of "Comprehensive Knowledge Distillation wit

![[NeurIPS 2021] Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples | ⛰️⚠️](https://github.com/sungyoon-lee/LossLandscapeMatters/raw/master/media/fig.png)

[NeurIPS 2021] Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples | ⛰️⚠️

Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples This repository is the official implementation of "Tow

Official PyTorch implementation of Data-free Knowledge Distillation for Object Detection, WACV 2021.

Introduction This repository is the official PyTorch implementation of Data-free Knowledge Distillation for Object Detection, WACV 2021. Data-free Kno

Focal Loss for Dense Rotation Object Detection

Convert ResNets weights from GluonCV to Tensorflow Abstract GluonCV released some new resnet pre-training weights and designed some new resnets (such

This repository contains the implementation of the paper: Federated Distillation of Natural Language Understanding with Confident Sinkhorns

Federated Distillation of Natural Language Understanding with Confident Sinkhorns This repository provides an alternative method for ensembled distill

Implement the Pareto Optimizer and pcgrad to make a self-adaptive loss for multi-task

multi-task_losses_optimizer Implement the Pareto Optimizer and pcgrad to make a self-adaptive loss for multi-task 已经实验过了,不会有cuda out of memory情况 ##Par

Pytorch implementation for Patient Knowledge Distillation for BERT Model Compression

Patient Knowledge Distillation for BERT Model Compression Knowledge distillation for BERT model Installation Run command below to install the environm