248 Repositories

Python distillation-loss Libraries

An official implementation of "Background-Aware Pooling and Noise-Aware Loss for Weakly-Supervised Semantic Segmentation" (CVPR 2021) in PyTorch.

BANA This is the implementation of the paper "Background-Aware Pooling and Noise-Aware Loss for Weakly-Supervised Semantic Segmentation". For more inf

Unofficial implementation of Proxy Anchor Loss for Deep Metric Learning

Proxy Anchor Loss for Deep Metric Learning Unofficial pytorch, tensorflow and mxnet implementations of Proxy Anchor Loss for Deep Metric Learning. Not

EvDistill: Asynchronous Events to End-task Learning via Bidirectional Reconstruction-guided Cross-modal Knowledge Distillation (CVPR'21)

EvDistill: Asynchronous Events to End-task Learning via Bidirectional Reconstruction-guided Cross-modal Knowledge Distillation (CVPR'21) Citation If y

Code for DisCo: Remedy Self-supervised Learning on Lightweight Models with Distilled Contrastive Learning

DisCo: Remedy Self-supervised Learning on Lightweight Models with Distilled Contrastive Learning Pytorch Implementation for DisCo: Remedy Self-supervi

Official PyTorch implementation of "Meta-Learning with Task-Adaptive Loss Function for Few-Shot Learning" (ICCV2021 Oral)

MeTAL - Meta-Learning with Task-Adaptive Loss Function for Few-Shot Learning (ICCV2021 Oral) Sungyong Baik, Janghoon Choi, Heewon Kim, Dohee Cho, Jaes

FasterAI: A library to make smaller and faster models with FastAI.

Fasterai fasterai is a library created to make neural network smaller and faster. It essentially relies on common compression techniques for networks

Focal and Global Knowledge Distillation for Detectors

FGD Paper: Focal and Global Knowledge Distillation for Detectors Install MMDetection and MS COCO2017 Our codes are based on MMDetection. Please follow

Implementation of the Chamfer Distance as a module for pyTorch

Chamfer Distance for pyTorch This is an implementation of the Chamfer Distance as a module for pyTorch. It is written as a custom C++/CUDA extension.

Complete-IoU (CIoU) Loss and Cluster-NMS for Object Detection and Instance Segmentation (YOLACT)

Complete-IoU Loss and Cluster-NMS for Improving Object Detection and Instance Segmentation. Our paper is accepted by IEEE Transactions on Cybernetics

Live training loss plot in Jupyter Notebook for Keras, PyTorch and others

livelossplot Don't train deep learning models blindfolded! Be impatient and look at each epoch of your training! (RECENT CHANGES, EXAMPLES IN COLAB, A

Quantization library for PyTorch. Support low-precision and mixed-precision quantization, with hardware implementation through TVM.

HAWQ: Hessian AWare Quantization HAWQ is an advanced quantization library written for PyTorch. HAWQ enables low-precision and mixed-precision uniform

Official implementation for CVPR 2021 paper: Adaptive Class Suppression Loss for Long-Tail Object Detection

Adaptive Class Suppression Loss for Long-Tail Object Detection This repo is the official implementation for CVPR 2021 paper: Adaptive Class Suppressio

DA2Lite is an automated model compression toolkit for PyTorch.

DA2Lite (Deep Architecture to Lite) is a toolkit to compress and accelerate deep network models. ⭐ Star us on GitHub — it helps!! Frameworks & Librari

Knowledge Distillation Toolbox for Semantic Segmentation

SegDistill: Toolbox for Knowledge Distillation on Semantic Segmentation Networks This repo contains the supported code and configuration files for Seg

Confident Semantic Ranking Loss for Part Parsing

Confident Semantic Ranking Loss for Part Parsing

Python package for visualizing the loss landscape of parameterized quantum algorithms.

orqviz A Python package for easily visualizing the loss landscape of Variational Quantum Algorithms by Zapata Computing Inc. orqviz provides a collect

Contrastive Feature Loss for Image Prediction

Contrastive Feature Loss for Image Prediction We provide a PyTorch implementation of our contrastive feature loss presented in: Contrastive Feature Lo

A demo of chinese asr

chinese_asr_demo 一个端到端的中文语音识别模型训练、测试框架 具备数据预处理、模型训练、解码、计算wer等等功能 训练数据 训练数据采用thchs_30,

Official implementation for "Image Quality Assessment using Contrastive Learning"

Image Quality Assessment using Contrastive Learning Pavan C. Madhusudana, Neil Birkbeck, Yilin Wang, Balu Adsumilli and Alan C. Bovik This is the offi

🛠️ Tools for Transformers compression using Lightning ⚡

Bert-squeeze is a repository aiming to provide code to reduce the size of Transformer-based models or decrease their latency at inference time.

Robust and Accurate Object Detection via Self-Knowledge Distillation

Robust and Accurate Object Detection via Self-Knowledge Distillation paper:https://arxiv.org/abs/2111.07239 Environments Python 3.7 Cuda 10.1 Prepare

Deeplab-resnet-101 in Pytorch with Jaccard loss

Deeplab-resnet-101 Pytorch with Lovász hinge loss Train deeplab-resnet-101 with binary Jaccard loss surrogate, the Lovász hinge, as described in http:

CoReD: Generalizing Fake Media Detection with Continual Representation using Distillation (ACMMM'21 Oral Paper)

CoReD: Generalizing Fake Media Detection with Continual Representation using Distillation (ACMMM'21 Oral Paper) (Accepted for oral presentation at ACM

Instance-conditional Knowledge Distillation for Object Detection

Instance-conditional Knowledge Distillation for Object Detection This is a MegEngine implementation of the paper "Instance-conditional Knowledge Disti

PyTorch Implementation of Temporal Output Discrepancy for Active Learning, ICCV 2021

Temporal Output Discrepancy for Active Learning PyTorch implementation of Semi-Supervised Active Learning with Temporal Output Discrepancy, ICCV 2021.

Arch-Net: Model Distillation for Architecture Agnostic Model Deployment

Arch-Net: Model Distillation for Architecture Agnostic Model Deployment The official implementation of Arch-Net: Model Distillation for Architecture A

A simple tool to test internet stability.

pingtest Description A personal project for testing internet stability, intended for use in Linux and Windows.

[NeurIPS 2021] Introspective Distillation for Robust Question Answering

Introspective Distillation (IntroD) This repository is the Pytorch implementation of our paper "Introspective Distillation for Robust Question Answeri

A treasure chest for visual recognition powered by PaddlePaddle

简体中文 | English PaddleClas 简介 飞桨图像识别套件PaddleClas是飞桨为工业界和学术界所准备的一个图像识别任务的工具集,助力使用者训练出更好的视觉模型和应用落地。 近期更新 2021.11.1 发布PP-ShiTu技术报告,新增饮料识别demo 2021.10.23 发

Repository for the semantic WMI loss

Installation: pip install -e . Installing DL2: First clone DL2 in a separate directory and install it using the following commands: git clone https:/

![DiscoNet: Learning Distilled Collaboration Graph for Multi-Agent Perception [NeurIPS 2021]](https://github.com/ai4ce/DiscoNet/raw/main/img.png)

DiscoNet: Learning Distilled Collaboration Graph for Multi-Agent Perception [NeurIPS 2021]

DiscoNet: Learning Distilled Collaboration Graph for Multi-Agent Perception [NeurIPS 2021] Yiming Li, Shunli Ren, Pengxiang Wu, Siheng Chen, Chen Feng

![[ICCV'21] Learning Conditional Knowledge Distillation for Degraded-Reference Image Quality Assessment](https://user-images.githubusercontent.com/35843017/128686858-534ac4c1-8556-4999-a2cb-695119251e78.jpg)

[ICCV'21] Learning Conditional Knowledge Distillation for Degraded-Reference Image Quality Assessment

CKDN The official implementation of the ICCV2021 paper "Learning Conditional Knowledge Distillation for Degraded-Reference Image Quality Assessment" O

🔥 Real-time Super Resolution enhancement (4x) with content loss and relativistic adversarial optimization 🔥

🔥 Real-time Super Resolution enhancement (4x) with content loss and relativistic adversarial optimization 🔥

Chinese NER(Named Entity Recognition) using BERT(Softmax, CRF, Span)

Chinese NER(Named Entity Recognition) using BERT(Softmax, CRF, Span)

![[NeurIPS-2021] Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data](https://github.com/zju-vipa/MosaicKD/raw/main/assets/intro.jpg)

[NeurIPS-2021] Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data

MosaicKD Code for NeurIPS-21 paper "Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data" 1. Motivation Natural images share common l

![[NeurIPS-2021] Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data](https://github.com/zju-vipa/MosaicKD/raw/main/assets/intro.jpg)

[NeurIPS-2021] Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data

MosaicKD Code for NeurIPS-21 paper "Mosaicking to Distill: Knowledge Distillation from Out-of-Domain Data" 1. Motivation Natural images share common l

Covid-19 Test AI (Deep Learning - NNs) Software. Accuracy is the %96.5, loss is the 0.09 :)

Covid-19 Test AI (Deep Learning - NNs) Software I developed a segmentation algorithm to understand whether Covid-19 Test Photos are positive or negati

Generalized Jensen-Shannon Divergence Loss for Learning with Noisy Labels

The official code for the NeurIPS 2021 paper Generalized Jensen-Shannon Divergence Loss for Learning with Noisy Labels

An implementation for the loss function proposed in Decoupled Contrastive Loss paper.

Decoupled-Contrastive-Learning This repository is an implementation for the loss function proposed in Decoupled Contrastive Loss paper. Requirements P

Light-weight network, depth estimation, knowledge distillation, real-time depth estimation, auxiliary data.

light-weight-depth-estimation Boosting Light-Weight Depth Estimation Via Knowledge Distillation, https://arxiv.org/abs/2105.06143 Junjie Hu, Chenyou F

PyTorch implementation of MSBG hearing loss model and MBSTOI intelligibility metric

PyTorch implementation of MSBG hearing loss model and MBSTOI intelligibility metric This repository contains the implementation of MSBG hearing loss m

Adaptive, interpretable wavelets across domains (NeurIPS 2021)

Adaptive wavelets Wavelets which adapt given data (and optionally a pre-trained model). This yields models which are faster, more compressible, and mo

This is the code of NeurIPS'21 paper "Towards Enabling Meta-Learning from Target Models".

ST This is the code of NeurIPS 2021 paper "Towards Enabling Meta-Learning from Target Models". If you use any content of this repo for your work, plea

Official implementation for the paper: "Multi-label Classification with Partial Annotations using Class-aware Selective Loss"

Multi-label Classification with Partial Annotations using Class-aware Selective Loss Paper | Pretrained models Official PyTorch Implementation Emanuel

This repository contains all source code, pre-trained models related to the paper "An Empirical Study on GANs with Margin Cosine Loss and Relativistic Discriminator"

An Empirical Study on GANs with Margin Cosine Loss and Relativistic Discriminator This is a Pytorch implementation for the paper "An Empirical Study o

Official implementation for the paper: Multi-label Classification with Partial Annotations using Class-aware Selective Loss

Multi-label Classification with Partial Annotations using Class-aware Selective Loss Paper | Pretrained models Official PyTorch Implementation Emanuel

Spatial-Location-Constraint-Prototype-Loss-for-Open-Set-Recognition

Spatial Location Constraint Prototype Loss for Open Set Recognition Official PyTorch implementation of "Spatial Location Constraint Prototype Loss for

The official implementation of Equalization Loss for Long-Tailed Object Recognition (CVPR 2020) based on Detectron2

Equalization Loss for Long-Tailed Object Recognition Jingru Tan, Changbao Wang, Buyu Li, Quanquan Li, Wanli Ouyang, Changqing Yin, Junjie Yan ⚠️ We re

Pytorch implementation for "Distribution-Balanced Loss for Multi-Label Classification in Long-Tailed Datasets" (ECCV 2020 Spotlight)

Distribution-Balanced Loss [Paper] The implementation of our paper Distribution-Balanced Loss for Multi-Label Classification in Long-Tailed Datasets (

Finetuner allows one to tune the weights of any deep neural network for better embeddings on search tasks

Finetuner allows one to tune the weights of any deep neural network for better embeddings on search tasks

Connectionist Temporal Classification (CTC) decoding algorithms: best path, beam search, lexicon search, prefix search, and token passing. Implemented in Python.

CTC Decoding Algorithms Update 2021: installable Python package Python implementation of some common Connectionist Temporal Classification (CTC) decod

MutualGuide is a compact object detector specially designed for embedded devices

Introduction MutualGuide is a compact object detector specially designed for embedded devices. Comparing to existing detectors, this repo contains two

This repository contains the code for the paper in EMNLP 2021: "HRKD: Hierarchical Relational Knowledge Distillation for Cross-domain Language Model Compression".

HRKD: Hierarchical Relational Knowledge Distillation for Cross-domain Language Model Compression This repository contains the code for the paper in EM

Multi-Objective Loss Balancing for Physics-Informed Deep Learning

Multi-Objective Loss Balancing for Physics-Informed Deep Learning Code for ReLoBRaLo. Abstract Physics Informed Neural Networks (PINN) are algorithms

Handling Information Loss of Graph Neural Networks for Session-based Recommendation

LESSR A PyTorch implementation of LESSR (Lossless Edge-order preserving aggregation and Shortcut graph attention for Session-based Recommendation) fro

ALL Snow Removed: Single Image Desnowing Algorithm Using Hierarchical Dual-tree Complex Wavelet Representation and Contradict Channel Loss (HDCWNet)

ALL Snow Removed: Single Image Desnowing Algorithm Using Hierarchical Dual-tree Complex Wavelet Representation and Contradict Channel Loss (HDCWNet) (

![[ICCV 2021] Focal Frequency Loss for Image Reconstruction and Synthesis](https://raw.githubusercontent.com/EndlessSora/focal-frequency-loss/master/resources/teaser.jpg)

[ICCV 2021] Focal Frequency Loss for Image Reconstruction and Synthesis

Focal Frequency Loss - Official PyTorch Implementation This repository provides the official PyTorch implementation for the following paper: Focal Fre

A Python library for adversarial machine learning focusing on benchmarking adversarial robustness.

ARES This repository contains the code for ARES (Adversarial Robustness Evaluation for Safety), a Python library for adversarial machine learning rese

Code for the TIP 2021 Paper "Salient Object Detection with Purificatory Mechanism and Structural Similarity Loss"

PurNet Project for the TIP 2021 Paper "Salient Object Detection with Purificatory Mechanism and Structural Similarity Loss" Abstract Image-based salie

The official implementation of CVPR 2021 Paper: Improving Weakly Supervised Visual Grounding by Contrastive Knowledge Distillation.

Improving Weakly Supervised Visual Grounding by Contrastive Knowledge Distillation This repository is the official implementation of CVPR 2021 paper:

Code for the preprint "Well-classified Examples are Underestimated in Classification with Deep Neural Networks"

This is a repository for the paper of "Well-classified Examples are Underestimated in Classification with Deep Neural Networks" The implementation and

CAMoE + Dual SoftMax Loss (DSL): Improving Video-Text Retrieval by Multi-Stream Corpus Alignment and Dual Softmax Loss

CAMoE + Dual SoftMax Loss (DSL): Improving Video-Text Retrieval by Multi-Stream Corpus Alignment and Dual Softmax Loss This is official implement of "

Disturbing Target Values for Neural Network regularization: attacking the loss layer to prevent overfitting

Disturbing Target Values for Neural Network regularization: attacking the loss layer to prevent overfitting 1. Classification Task PyTorch implementat

Official implementation of Influence-balanced Loss for Imbalanced Visual Classification in PyTorch.

Official implementation of Influence-balanced Loss for Imbalanced Visual Classification in PyTorch.

This is the official pytorch implementation of Student Helping Teacher: Teacher Evolution via Self-Knowledge Distillation(TESKD)

Student Helping Teacher: Teacher Evolution via Self-Knowledge Distillation (TESKD) By Zheng Li[1,4], Xiang Li[2], Lingfeng Yang[2,4], Jian Yang[2], Zh

Official implementation of NeurIPS 2021 paper "One Loss for All: Deep Hashing with a Single Cosine Similarity based Learning Objective"

Official implementation of NeurIPS 2021 paper "One Loss for All: Deep Hashing with a Single Cosine Similarity based Learning Objective"

Pytorch implementation of four neural network based domain adaptation techniques: DeepCORAL, DDC, CDAN and CDAN+E. Evaluated on benchmark dataset Office31.

Deep-Unsupervised-Domain-Adaptation Pytorch implementation of four neural network based domain adaptation techniques: DeepCORAL, DDC, CDAN and CDAN+E.

Deep Face Recognition in PyTorch

Face Recognition in PyTorch By Alexey Gruzdev and Vladislav Sovrasov Introduction A repository for different experimental Face Recognition models such

Code for visualizing the loss landscape of neural nets

Visualizing the Loss Landscape of Neural Nets This repository contains the PyTorch code for the paper Hao Li, Zheng Xu, Gavin Taylor, Christoph Studer

Distiller is an open-source Python package for neural network compression research.

Wiki and tutorials | Documentation | Getting Started | Algorithms | Design | FAQ Distiller is an open-source Python package for neural network compres

A CRM department in a local bank works on classify their lost customers with their past datas. So they want predict with these method that average loss balance and passive duration for future.

Rule-Based-Classification-in-a-Banking-Case. A CRM department in a local bank works on classify their lost customers with their past datas. So they wa

Improving Convolutional Networks via Attention Transfer (ICLR 2017)

Attention Transfer PyTorch code for "Paying More Attention to Attention: Improving the Performance of Convolutional Neural Networks via Attention Tran

NEG loss implemented in pytorch

Pytorch Negative Sampling Loss Negative Sampling Loss implemented in PyTorch. Usage neg_loss = NEG_loss(num_classes, embedding_size) optimizer =

Code for the paper in Findings of EMNLP 2021: "EfficientBERT: Progressively Searching Multilayer Perceptron via Warm-up Knowledge Distillation".

This repository contains the code for the paper in Findings of EMNLP 2021: "EfficientBERT: Progressively Searching Multilayer Perceptron via Warm-up Knowledge Distillation".

Official pytorch implementation of "Feature Stylization and Domain-aware Contrastive Loss for Domain Generalization" ACMMM 2021 (Oral)

Feature Stylization and Domain-aware Contrastive Loss for Domain Generalization This is an official implementation of "Feature Stylization and Domain-

yolov5目标检测模型的知识蒸馏(基于响应的蒸馏)

代码地址: https://github.com/Sharpiless/yolov5-knowledge-distillation 教师模型: python train.py --weights weights/yolov5m.pt \ --cfg models/yolov5m.ya

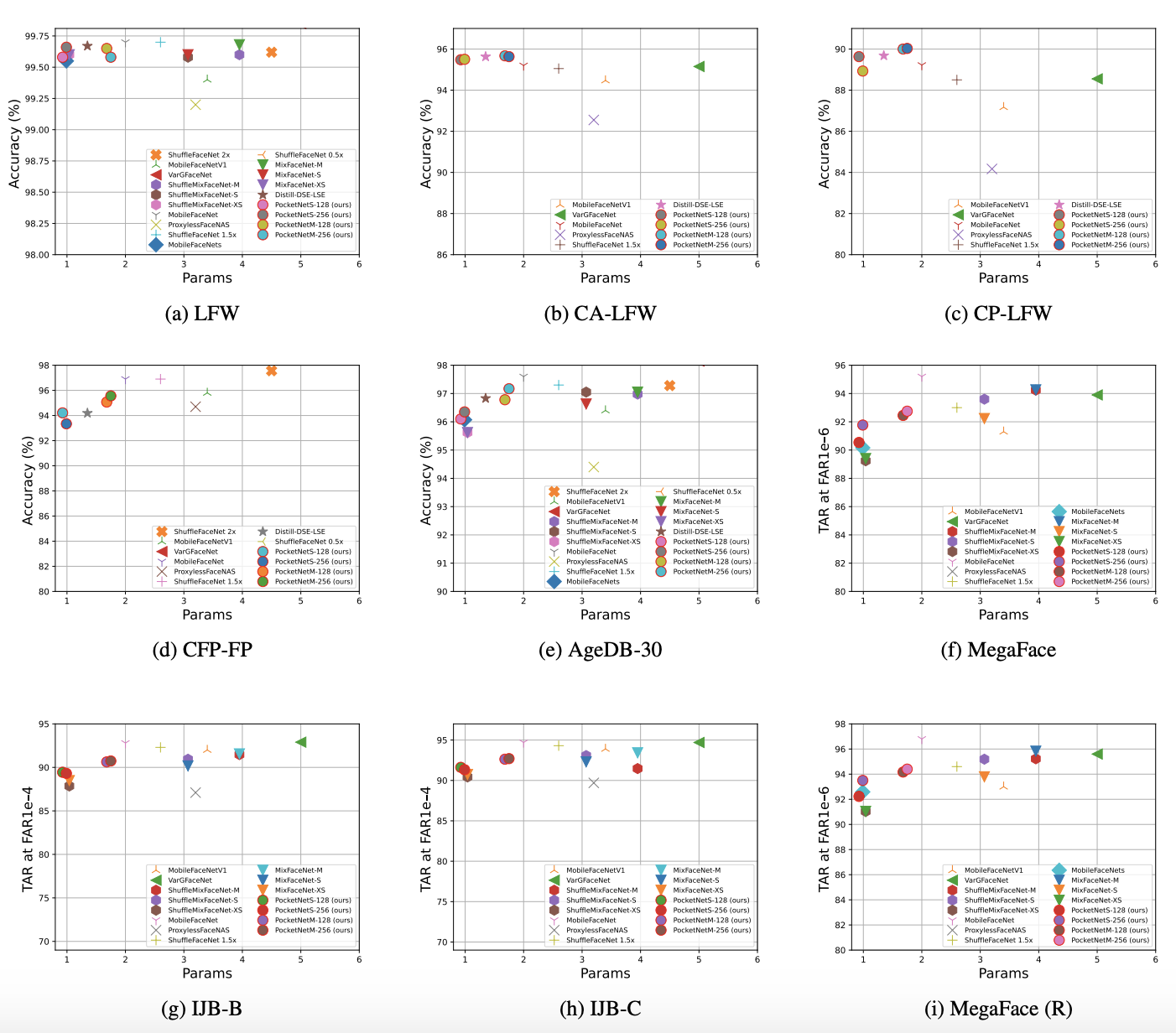

PocketNet: Extreme Lightweight Face Recognition Network using Neural Architecture Search and Multi-Step Knowledge Distillation

PocketNet This is the official repository of the paper: PocketNet: Extreme Lightweight Face Recognition Network using Neural Architecture Search and M

Implementation of "A Deep Learning Loss Function based on Auditory Power Compression for Speech Enhancement" by pytorch

This repository is used to suspend the results of our paper "A Deep Learning Loss Function based on Auditory Power Compression for Speech Enhancement"

PyTorch implementation of SimCLR: A Simple Framework for Contrastive Learning of Visual Representations

PyTorch implementation of SimCLR: A Simple Framework for Contrastive Learning of Visual Representations

GeDML is an easy-to-use generalized deep metric learning library

GeDML is an easy-to-use generalized deep metric learning library

This repo uses a combination of logits and feature distillation method to teach the PSPNet model of ResNet18 backbone with the PSPNet model of ResNet50 backbone. All the models are trained and tested on the PASCAL-VOC2012 dataset.

PSPNet-logits and feature-distillation Introduction This repository is based on PSPNet and modified from semseg and Pixelwise_Knowledge_Distillation_P

🌈 PyTorch Implementation for EMNLP'21 Findings "Reasoning Visual Dialog with Sparse Graph Learning and Knowledge Transfer"

SGLKT-VisDial Pytorch Implementation for the paper: Reasoning Visual Dialog with Sparse Graph Learning and Knowledge Transfer Gi-Cheon Kang, Junseok P

TorchDistiller - a collection of the open source pytorch code for knowledge distillation, especially for the perception tasks, including semantic segmentation, depth estimation, object detection and instance segmentation.

This project is a collection of the open source pytorch code for knowledge distillation, especially for the perception tasks, including semantic segmentation, depth estimation, object detection and instance segmentation.

This Binance trading bot detects new coins as soon as they are listed on the Binance exchange and automatically places sell and buy orders. It comes with trailing stop loss and other features. If you like this project please consider donating via Brave.

binance-trading-bot-new-coins This Binance trading bot detects new coins as soon as they are listed on the Binance exchange and automatically places s

Exploring Classification Equilibrium in Long-Tailed Object Detection, ICCV2021

Exploring Classification Equilibrium in Long-Tailed Object Detection (LOCE, ICCV 2021) Paper Introduction The conventional detectors tend to make imba

Online Multi-Granularity Distillation for GAN Compression (ICCV2021)

Online Multi-Granularity Distillation for GAN Compression (ICCV2021) This repository contains the pytorch codes and trained models described in the IC

Code for ICCV 2021 paper: ARAPReg: An As-Rigid-As Possible Regularization Loss for Learning Deformable Shape Generators..

ARAPReg Code for ICCV 2021 paper: ARAPReg: An As-Rigid-As Possible Regularization Loss for Learning Deformable Shape Generators.. Installation The cod

TOOD: Task-aligned One-stage Object Detection, ICCV2021 Oral

One-stage object detection is commonly implemented by optimizing two sub-tasks: object classification and localization, using heads with two parallel branches, which might lead to a certain level of spatial misalignment in predictions between the two tasks.

We evaluate our method on different datasets (including ShapeNet, CUB-200-2011, and Pascal3D+) and achieve state-of-the-art results, outperforming all the other supervised and unsupervised methods and 3D representations, all in terms of performance, accuracy, and training time.

An Effective Loss Function for Generating 3D Models from Single 2D Image without Rendering Papers with code | Paper Nikola Zubić Pietro Lio University

Code release for The Devil is in the Channels: Mutual-Channel Loss for Fine-Grained Image Classification (TIP 2020)

The Devil is in the Channels: Mutual-Channel Loss for Fine-Grained Image Classification Code release for The Devil is in the Channels: Mutual-Channel

Versatile Generative Language Model

Versatile Generative Language Model This is the implementation of the paper: Exploring Versatile Generative Language Model Via Parameter-Efficient Tra

Recall Loss for Semantic Segmentation (This repo implements the paper: Recall Loss for Semantic Segmentation)

Recall Loss for Semantic Segmentation (This repo implements the paper: Recall Loss for Semantic Segmentation) Download Synthia dataset The model uses

TF2 implementation of knowledge distillation using the "function matching" hypothesis from the paper Knowledge distillation: A good teacher is patient and consistent by Beyer et al.

FunMatch-Distillation TF2 implementation of knowledge distillation using the "function matching" hypothesis from the paper Knowledge distillation: A g

A tiny, friendly, strong baseline code for Person-reID (based on pytorch).

Pytorch ReID Strong, Small, Friendly A tiny, friendly, strong baseline code for Person-reID (based on pytorch). Strong. It is consistent with the new

![Official PyTorch Implementation of Rank & Sort Loss [ICCV2021]](https://github.com/kemaloksuz/RankSortLoss/raw/main/assets/Teaser.png)

Official PyTorch Implementation of Rank & Sort Loss [ICCV2021]

Rank & Sort Loss for Object Detection and Instance Segmentation The official implementation of Rank & Sort Loss. Our implementation is based on mmdete

![[IJCAI-2021] A benchmark of data-free knowledge distillation from paper](https://github.com/zju-vipa/DataFree/raw/main/assets/cmi.png)

[IJCAI-2021] A benchmark of data-free knowledge distillation from paper "Contrastive Model Inversion for Data-Free Knowledge Distillation"

DataFree A benchmark of data-free knowledge distillation from paper "Contrastive Model Inversion for Data-Free Knowledge Distillation" Authors: Gongfa

Code for visualizing the loss landscape of neural nets

Visualizing the Loss Landscape of Neural Nets This repository contains the PyTorch code for the paper Hao Li, Zheng Xu, Gavin Taylor, Christoph Studer

Align before Fuse: Vision and Language Representation Learning with Momentum Distillation

This is the official PyTorch implementation of the ALBEF paper [Blog]. This repository supports pre-training on custom datasets, as well as finetuning on VQA, SNLI-VE, NLVR2, Image-Text Retrieval on MSCOCO and Flickr30k, and visual grounding on RefCOCO+. Pre-trained and finetuned checkpoints are released.

S2-BNN: Bridging the Gap Between Self-Supervised Real and 1-bit Neural Networks via Guided Distribution Calibration (CVPR 2021)

S2-BNN (Self-supervised Binary Neural Networks Using Distillation Loss) This is the official pytorch implementation of our paper: "S2-BNN: Bridging th

noisy labels; missing labels; semi-supervised learning; entropy; uncertainty; robustness and generalisation.

ProSelfLC: CVPR 2021 ProSelfLC: Progressive Self Label Correction for Training Robust Deep Neural Networks For any specific discussion or potential fu