804 Repositories

Python distributed-training Libraries

🎛 Distributed machine learning made simple.

🎛 lazycluster Distributed machine learning made simple. Use your preferred distributed ML framework like a lazy engineer. Getting Started • Highlight

Distributed Evolutionary Algorithms in Python

DEAP DEAP is a novel evolutionary computation framework for rapid prototyping and testing of ideas. It seeks to make algorithms explicit and data stru

Massively parallel self-organizing maps: accelerate training on multicore CPUs, GPUs, and clusters

Somoclu Somoclu is a massively parallel implementation of self-organizing maps. It exploits multicore CPUs, it is able to rely on MPI for distributing

Distributed scikit-learn meta-estimators in PySpark

sk-dist: Distributed scikit-learn meta-estimators in PySpark What is it? sk-dist is a Python package for machine learning built on top of scikit-learn

Decentralized deep learning in PyTorch. Built to train models on thousands of volunteers across the world.

Hivemind: decentralized deep learning in PyTorch Hivemind is a PyTorch library to train large neural networks across the Internet. Its intended usage

Distributed Computing for AI Made Simple

Project Home Blog Documents Paper Media Coverage Join Fiber users email list [email protected] Fiber Distributed Computing for AI Made Simp

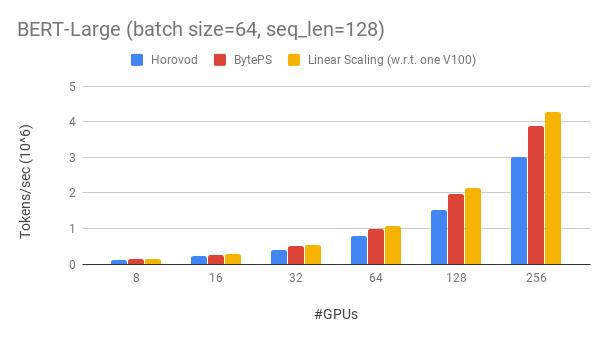

A high performance and generic framework for distributed DNN training

BytePS BytePS is a high performance and general distributed training framework. It supports TensorFlow, Keras, PyTorch, and MXNet, and can run on eith

a distributed deep learning platform

Apache SINGA Distributed deep learning system http://singa.apache.org Quick Start Installation Examples Issues JIRA tickets Code Analysis: Mailing Lis

PyTorch extensions for high performance and large scale training.

Description FairScale is a PyTorch extension library for high performance and large scale training on one or multiple machines/nodes. This library ext

Distributed Tensorflow, Keras and PyTorch on Apache Spark/Flink & Ray

A unified Data Analytics and AI platform for distributed TensorFlow, Keras and PyTorch on Apache Spark/Flink & Ray What is Analytics Zoo? Analytics Zo

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective. 10x Larger Models 10x Faster Trainin

Petastorm library enables single machine or distributed training and evaluation of deep learning models from datasets in Apache Parquet format. It supports ML frameworks such as Tensorflow, Pytorch, and PySpark and can be used from pure Python code.

Petastorm Contents Petastorm Installation Generating a dataset Plain Python API Tensorflow API Pytorch API Spark Dataset Converter API Analyzing petas

Distributed Deep learning with Keras & Spark

Elephas: Distributed Deep Learning with Keras & Spark Elephas is an extension of Keras, which allows you to run distributed deep learning models at sc

BigDL: Distributed Deep Learning Framework for Apache Spark

BigDL: Distributed Deep Learning on Apache Spark What is BigDL? BigDL is a distributed deep learning library for Apache Spark; with BigDL, users can w

Distributed training framework for TensorFlow, Keras, PyTorch, and Apache MXNet.

Horovod Horovod is a distributed deep learning training framework for TensorFlow, Keras, PyTorch, and Apache MXNet. The goal of Horovod is to make dis

An open source framework that provides a simple, universal API for building distributed applications. Ray is packaged with RLlib, a scalable reinforcement learning library, and Tune, a scalable hyperparameter tuning library.

Ray provides a simple, universal API for building distributed applications. Ray is packaged with the following libraries for accelerating machine lear

An open source reinforcement learning framework for training, evaluating, and deploying robust trading agents.

TensorTrade: Trade Efficiently with Reinforcement Learning TensorTrade is still in Beta, meaning it should be used very cautiously if used in producti

PyTorch implementation of "Contrast to Divide: self-supervised pre-training for learning with noisy labels"

Contrast to Divide: self-supervised pre-training for learning with noisy labels This is an official implementation of "Contrast to Divide: self-superv

Code for our paper at ECCV 2020: Post-Training Piecewise Linear Quantization for Deep Neural Networks

PWLQ Updates 2020/07/16 - We are working on getting permission from our institution to release our source code. We will release it once we are granted

Pytorch Lightning Distributed Accelerators using Ray

Distributed PyTorch Lightning Training on Ray This library adds new PyTorch Lightning plugins for distributed training using the Ray distributed compu

Generate text images for training deep learning ocr model

New version release:https://github.com/oh-my-ocr/text_renderer Text Renderer Generate text images for training deep learning OCR model (e.g. CRNN). Su

A synthetic data generator for text recognition

TextRecognitionDataGenerator A synthetic data generator for text recognition What is it for? Generating text image samples to train an OCR software. N

Awesome multilingual OCR toolkits based on PaddlePaddle (practical ultra lightweight OCR system, provide data annotation and synthesis tools, support training and deployment among server, mobile, embedded and IoT devices)

English | 简体中文 Introduction PaddleOCR aims to create multilingual, awesome, leading, and practical OCR tools that help users train better models and a

Dataset Cartography: Mapping and Diagnosing Datasets with Training Dynamics

Dataset Cartography Code for the paper Dataset Cartography: Mapping and Diagnosing Datasets with Training Dynamics at EMNLP 2020. This repository cont

Code for the paper "Training GANs with Stronger Augmentations via Contrastive Discriminator" (ICLR 2021)

Training GANs with Stronger Augmentations via Contrastive Discriminator (ICLR 2021) This repository contains the code for reproducing the paper: Train

Unofficial implementation of "TTNet: Real-time temporal and spatial video analysis of table tennis" (CVPR 2020)

TTNet-Pytorch The implementation for the paper "TTNet: Real-time temporal and spatial video analysis of table tennis" An introduction of the project c

A PyTorch Extension: Tools for easy mixed precision and distributed training in Pytorch

This repository holds NVIDIA-maintained utilities to streamline mixed precision and distributed training in Pytorch. Some of the code here will be included in upstream Pytorch eventually. The intention of Apex is to make up-to-date utilities available to users as quickly as possible.

Official code of our work, Unified Pre-training for Program Understanding and Generation [NAACL 2021].

PLBART Code pre-release of our work, Unified Pre-training for Program Understanding and Generation accepted at NAACL 2021. Note. A detailed documentat

Pytorch Lightning Distributed Accelerators using Ray

Distributed PyTorch Lightning Training on Ray This library adds new PyTorch Lightning accelerators for distributed training using the Ray distributed

![[CVPR2021 Oral] UP-DETR: Unsupervised Pre-training for Object Detection with Transformers](https://github.com/dddzg/up-detr/raw/master/.github/UP-DETR.png)

[CVPR2021 Oral] UP-DETR: Unsupervised Pre-training for Object Detection with Transformers

UP-DETR: Unsupervised Pre-training for Object Detection with Transformers This is the official PyTorch implementation and models for UP-DETR paper: @a

Consistency Regularization for Adversarial Robustness

Consistency Regularization for Adversarial Robustness Official PyTorch implementation of Consistency Regularization for Adversarial Robustness by Jiho

A complete end-to-end demonstration in which we collect training data in Unity and use that data to train a deep neural network to predict the pose of a cube. This model is then deployed in a simulated robotic pick-and-place task.

Object Pose Estimation Demo This tutorial will go through the steps necessary to perform pose estimation with a UR3 robotic arm in Unity. You’ll gain

PArallel Distributed Deep LEarning: Machine Learning Framework from Industrial Practice (『飞桨』核心框架,深度学习&机器学习高性能单机、分布式训练和跨平台部署)

English | 简体中文 Welcome to the PaddlePaddle GitHub. PaddlePaddle, as the only independent R&D deep learning platform in China, has been officially open

Microsoft Distributed Machine Learning Toolkit

DMTK Distributed Machine Learning Toolkit https://www.dmtk.io Please open issues in the project below. For any technical support email to dmtk@microso

Framework and Library for Distributed Online Machine Learning

Jubatus The Jubatus library is an online machine learning framework which runs in distributed environment. See http://jubat.us/ for details. Quick Sta

Distributed machine learning platform

Veles Distributed platform for rapid Deep learning application development Consists of: Platform - https://github.com/Samsung/veles Znicz Plugin - Neu

Distributed training framework for TensorFlow, Keras, PyTorch, and Apache MXNet.

Horovod Horovod is a distributed deep learning training framework for TensorFlow, Keras, PyTorch, and Apache MXNet. The goal of Horovod is to make dis

Distributed Asynchronous Hyperparameter Optimization in Python

Hyperopt: Distributed Hyperparameter Optimization Hyperopt is a Python library for serial and parallel optimization over awkward search spaces, which

Distributed Evolutionary Algorithms in Python

DEAP DEAP is a novel evolutionary computation framework for rapid prototyping and testing of ideas. It seeks to make algorithms explicit and data stru

Modin: Speed up your Pandas workflows by changing a single line of code

Scale your pandas workflows by changing one line of code To use Modin, replace the pandas import: # import pandas as pd import modin.pandas as pd Inst

Lightweight, Portable, Flexible Distributed/Mobile Deep Learning with Dynamic, Mutation-aware Dataflow Dep Scheduler; for Python, R, Julia, Scala, Go, Javascript and more

Apache MXNet (incubating) for Deep Learning Master Docs License Apache MXNet (incubating) is a deep learning framework designed for both efficiency an

Distributed Deep learning with Keras & Spark

Elephas: Distributed Deep Learning with Keras & Spark Elephas is an extension of Keras, which allows you to run distributed deep learning models at sc

A Neural Net Training Interface on TensorFlow, with focus on speed + flexibility

Tensorpack is a neural network training interface based on TensorFlow. Features: It's Yet Another TF high-level API, with speed, and flexibility built

Simple tools for logging and visualizing, loading and training

TNT TNT is a library providing powerful dataloading, logging and visualization utilities for Python. It is closely integrated with PyTorch and is desi

A fast, distributed, high performance gradient boosting (GBT, GBDT, GBRT, GBM or MART) framework based on decision tree algorithms, used for ranking, classification and many other machine learning tasks.

Light Gradient Boosting Machine LightGBM is a gradient boosting framework that uses tree based learning algorithms. It is designed to be distributed a

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

MLBox is a powerful Automated Machine Learning python library.

MLBox is a powerful Automated Machine Learning python library. It provides the following features: Fast reading and distributed data preprocessing/cle

PySpark + Scikit-learn = Sparkit-learn

Sparkit-learn PySpark + Scikit-learn = Sparkit-learn GitHub: https://github.com/lensacom/sparkit-learn About Sparkit-learn aims to provide scikit-lear

Implementation of COCO-LM, Correcting and Contrasting Text Sequences for Language Model Pretraining, in Pytorch

COCO LM Pretraining (wip) Implementation of COCO-LM, Correcting and Contrasting Text Sequences for Language Model Pretraining, in Pytorch. They were a

网络协议2天集训

网络协议2天集训 抓包工具安装 Wireshark wireshark下载地址 Tcpdump CentOS yum install tcpdump -y Ubuntu apt-get install tcpdump -y k8s抓包测试环境 查看虚拟网卡veth pair 查看

Ultra-Data-Efficient GAN Training: Drawing A Lottery Ticket First, Then Training It Toughly

Ultra-Data-Efficient GAN Training: Drawing A Lottery Ticket First, Then Training It Toughly Code for this paper Ultra-Data-Efficient GAN Tra

MazeRL is an application oriented Deep Reinforcement Learning (RL) framework

MazeRL is an application oriented Deep Reinforcement Learning (RL) framework, addressing real-world decision problems. Our vision is to cover the complete development life cycle of RL applications ranging from simulation engineering up to agent development, training and deployment.

Sandwich Batch Normalization

Sandwich Batch Normalization Code for Sandwich Batch Normalization. Introduction We present Sandwich Batch Normalization (SaBN), an extremely easy imp

Simple tutorials on Pytorch DDP training

pytorch-distributed-training Distribute Dataparallel (DDP) Training on Pytorch Features Easy to study DDP training You can directly copy this code for

Py4J enables Python programs to dynamically access arbitrary Java objects

Py4J Py4J enables Python programs running in a Python interpreter to dynamically access Java objects in a Java Virtual Machine. Methods are called as

Learning to Initialize Neural Networks for Stable and Efficient Training

GradInit This repository hosts the code for experiments in the paper, GradInit: Learning to Initialize Neural Networks for Stable and Efficient Traini

Reviving Iterative Training with Mask Guidance for Interactive Segmentation

This repository provides the source code for training and testing state-of-the-art click-based interactive segmentation models with the official PyTorch implementation

🏖 Easy training and deployment of seq2seq models.

Headliner Headliner is a sequence modeling library that eases the training and in particular, the deployment of custom sequence models for both resear

A framework for training and evaluating AI models on a variety of openly available dialogue datasets.

ParlAI (pronounced “par-lay”) is a python framework for sharing, training and testing dialogue models, from open-domain chitchat, to task-oriented dia

GAP-text2SQL: Learning Contextual Representations for Semantic Parsing with Generation-Augmented Pre-Training

GAP-text2SQL: Learning Contextual Representations for Semantic Parsing with Generation-Augmented Pre-Training Code and model from our AAAI 2021 paper

🏖 Easy training and deployment of seq2seq models.

Headliner Headliner is a sequence modeling library that eases the training and in particular, the deployment of custom sequence models for both resear

A framework for training and evaluating AI models on a variety of openly available dialogue datasets.

ParlAI (pronounced “par-lay”) is a python framework for sharing, training and testing dialogue models, from open-domain chitchat, to task-oriented dia

Fast and Easy Infinite Neural Networks in Python

Neural Tangents ICLR 2020 Video | Paper | Quickstart | Install guide | Reference docs | Release notes Overview Neural Tangents is a high-level neural

High-level library to help with training and evaluating neural networks in PyTorch flexibly and transparently.

TL;DR Ignite is a high-level library to help with training and evaluating neural networks in PyTorch flexibly and transparently. Click on the image to

Microsoft Cognitive Toolkit (CNTK), an open source deep-learning toolkit

CNTK Chat Windows build status Linux build status The Microsoft Cognitive Toolkit (https://cntk.ai) is a unified deep learning toolkit that describes

A Neural Net Training Interface on TensorFlow, with focus on speed + flexibility

Tensorpack is a neural network training interface based on TensorFlow. Features: It's Yet Another TF high-level API, with speed, and flexibility built

PArallel Distributed Deep LEarning: Machine Learning Framework from Industrial Practice (『飞桨』核心框架,深度学习&机器学习高性能单机、分布式训练和跨平台部署)

English | 简体中文 Welcome to the PaddlePaddle GitHub. PaddlePaddle, as the only independent R&D deep learning platform in China, has been officially open

Lightweight, Portable, Flexible Distributed/Mobile Deep Learning with Dynamic, Mutation-aware Dataflow Dep Scheduler; for Python, R, Julia, Scala, Go, Javascript and more

Apache MXNet (incubating) for Deep Learning Apache MXNet is a deep learning framework designed for both efficiency and flexibility. It allows you to m

A fast, distributed, high performance gradient boosting (GBT, GBDT, GBRT, GBM or MART) framework based on decision tree algorithms, used for ranking, classification and many other machine learning tasks.

Light Gradient Boosting Machine LightGBM is a gradient boosting framework that uses tree based learning algorithms. It is designed to be distributed a

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

An Open Source Machine Learning Framework for Everyone

Documentation TensorFlow is an end-to-end open source platform for machine learning. It has a comprehensive, flexible ecosystem of tools, libraries, a

Distributed Task Queue (development branch)

Version: 5.0.5 (singularity) Web: http://celeryproject.org/ Download: https://pypi.org/project/celery/ Source: https://github.com/celery/celery/ Keywo

pytest plugin for distributed testing and loop-on-failures testing modes.

xdist: pytest distributed testing plugin The pytest-xdist plugin extends pytest with some unique test execution modes: test run parallelization: if yo

A multiprocessing distributed task queue for Django

A multiprocessing distributed task queue for Django Features Multiprocessing worker pool Asynchronous tasks Scheduled, cron and repeated tasks Signed

A high-level distributed crawling framework.

Cola: high-level distributed crawling framework Overview Cola is a high-level distributed crawling framework, used to crawl pages and extract structur

Distributed Crawler Management Framework Based on Scrapy, Scrapyd, Django and Vue.js

Gerapy Distributed Crawler Management Framework Based on Scrapy, Scrapyd, Scrapyd-Client, Scrapyd-API, Django and Vue.js. Documentation Documentation

A PyTorch Toolbox for Face Recognition

FaceX-Zoo FaceX-Zoo is a PyTorch toolbox for face recognition. It provides a training module with various supervisory heads and backbones towards stat

Open-AI's DALL-E for large scale training in mesh-tensorflow.

DALL-E in Mesh-Tensorflow [WIP] Open-AI's DALL-E in Mesh-Tensorflow. If this is similarly efficient to GPT-Neo, this repo should be able to train mode

Distributed Asynchronous Hyperparameter Optimization better than HyperOpt.

UltraOpt : Distributed Asynchronous Hyperparameter Optimization better than HyperOpt. UltraOpt is a simple and efficient library to minimize expensive

The algorithm performs a simple user registration (Name, CPF, E-mail and Telephone) in an Amazon RDS database and also performs the storage, training and facial recognition of the user's face to identify the users already registered in the system in a next time the user is seen.

Registration form with RDS AWS database and facial recognition via OpenCV The algorithm performs a simple user registration (Name, CPF, E-mail and Tel

A framework for joint super-resolution and image synthesis, without requiring real training data

SynthSR This repository contains code to train a Convolutional Neural Network (CNN) for Super-resolution (SR), or joint SR and data synthesis. The met

Reinforcement Learning Coach by Intel AI Lab enables easy experimentation with state of the art Reinforcement Learning algorithms

Coach Coach is a python reinforcement learning framework containing implementation of many state-of-the-art algorithms. It exposes a set of easy-to-us

A fast, distributed, high performance gradient boosting (GBT, GBDT, GBRT, GBM or MART) framework based on decision tree algorithms, used for ranking, classification and many other machine learning tasks.

Light Gradient Boosting Machine LightGBM is a gradient boosting framework that uses tree based learning algorithms. It is designed to be distributed a

Backtest 1000s of minute-by-minute trading algorithms for training AI with automated pricing data from: IEX, Tradier and FinViz. Datasets and trading performance automatically published to S3 for building AI training datasets for teaching DNNs how to trade. Runs on Kubernetes and docker-compose. 150 million trading history rows generated from +5000 algorithms. Heads up: Yahoo's Finance API was disabled on 2019-01-03 https://developer.yahoo.com/yql/

Stock Analysis Engine Build and tune investment algorithms for use with artificial intelligence (deep neural networks) with a distributed stack for ru

Mr. Queue - A distributed worker task queue in Python using Redis & gevent

MRQ MRQ is a distributed task queue for python built on top of mongo, redis and gevent. Full documentation is available on readthedocs Why? MRQ is an

A fast and reliable background task processing library for Python 3.

dramatiq A fast and reliable distributed task processing library for Python 3. Changelog: https://dramatiq.io/changelog.html Community: https://groups

Massively parallel self-organizing maps: accelerate training on multicore CPUs, GPUs, and clusters

Somoclu Somoclu is a massively parallel implementation of self-organizing maps. It exploits multicore CPUs, it is able to rely on MPI for distributing

Simple, realtime visualization of neural network training performance.

pastalog Simple, realtime visualization server for training neural networks. Use with Lasagne, Keras, Tensorflow, Torch, Theano, and basically everyth

Determined: Deep Learning Training Platform

Determined: Deep Learning Training Platform Determined is an open-source deep learning training platform that makes building models fast and easy. Det

Accelerated deep learning R&D

Accelerated deep learning R&D PyTorch framework for Deep Learning research and development. It focuses on reproducibility, rapid experimentation, and

Lightweight, Portable, Flexible Distributed/Mobile Deep Learning with Dynamic, Mutation-aware Dataflow Dep Scheduler; for Python, R, Julia, Scala, Go, Javascript and more

Apache MXNet (incubating) for Deep Learning Apache MXNet is a deep learning framework designed for both efficiency and flexibility. It allows you to m

Deep Learning GPU Training System

DIGITS DIGITS (the Deep Learning GPU Training System) is a webapp for training deep learning models. The currently supported frameworks are: Caffe, To

Distributed Evolutionary Algorithms in Python

DEAP DEAP is a novel evolutionary computation framework for rapid prototyping and testing of ideas. It seeks to make algorithms explicit and data stru

An Open Source Machine Learning Framework for Everyone

Documentation TensorFlow is an end-to-end open source platform for machine learning. It has a comprehensive, flexible ecosystem of tools, libraries, a

Unified Interface for Constructing and Managing Workflows on different workflow engines, such as Argo Workflows, Tekton Pipelines, and Apache Airflow.

Couler What is Couler? Couler aims to provide a unified interface for constructing and managing workflows on different workflow engines, such as Argo

Microsoft Cognitive Toolkit (CNTK), an open source deep-learning toolkit

CNTK Chat Windows build status Linux build status The Microsoft Cognitive Toolkit (https://cntk.ai) is a unified deep learning toolkit that describes

Scalable, Portable and Distributed Gradient Boosting (GBDT, GBRT or GBM) Library, for Python, R, Java, Scala, C++ and more. Runs on single machine, Hadoop, Spark, Dask, Flink and DataFlow

eXtreme Gradient Boosting Community | Documentation | Resources | Contributors | Release Notes XGBoost is an optimized distributed gradient boosting l

H2O is an Open Source, Distributed, Fast & Scalable Machine Learning Platform: Deep Learning, Gradient Boosting (GBM) & XGBoost, Random Forest, Generalized Linear Modeling (GLM with Elastic Net), K-Means, PCA, Generalized Additive Models (GAM), RuleFit, Support Vector Machine (SVM), Stacked Ensembles, Automatic Machine Learning (AutoML), etc.

H2O H2O is an in-memory platform for distributed, scalable machine learning. H2O uses familiar interfaces like R, Python, Scala, Java, JSON and the Fl

A high-level distributed crawling framework.

Cola: high-level distributed crawling framework Overview Cola is a high-level distributed crawling framework, used to crawl pages and extract structur

Python Stream Processing

Python Stream Processing Version: 1.10.4 Web: http://faust.readthedocs.io/ Download: http://pypi.org/project/faust Source: http://github.com/robinhood