343 Repositories

Python distributed-hyperparameter-tuning Libraries

Code for T-Few from "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learning"

T-Few This repository contains the official code for the paper: "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learni

A library for building and serving multi-node distributed faiss indices.

About Distributed faiss index service. A lightweight library that lets you work with FAISS indexes which don't fit into a single server memory. It fol

A Python library that enables ML teams to share, load, and transform data in a collaborative, flexible, and efficient way :chestnut:

Squirrel Core Share, load, and transform data in a collaborative, flexible, and efficient way What is Squirrel? Squirrel is a Python library that enab

Easy Parallel Library (EPL) is a general and efficient deep learning framework for distributed model training.

English | 简体中文 Easy Parallel Library Overview Easy Parallel Library (EPL) is a general and efficient library for distributed model training. Usability

Code for intrusion detection system (IDS) development using CNN models and transfer learning

Intrusion-Detection-System-Using-CNN-and-Transfer-Learning This is the code for the paper entitled "A Transfer Learning and Optimized CNN Based Intrus

Implementation of CaiT models in TensorFlow and ImageNet-1k checkpoints. Includes code for inference and fine-tuning.

CaiT-TF (Going deeper with Image Transformers) This repository provides TensorFlow / Keras implementations of different CaiT [1] variants from Touvron

Sky Computing: Accelerating Geo-distributed Computing in Federated Learning

Sky Computing Introduction Sky Computing is a load-balanced framework for federated learning model parallelism. It adaptively allocate model layers to

Official code for "Distributed Deep Learning in Open Collaborations" (NeurIPS 2021)

Distributed Deep Learning in Open Collaborations This repository contains the code for the NeurIPS 2021 paper "Distributed Deep Learning in Open Colla

Includes PyTorch - Keras model porting code for ConvNeXt family of models with fine-tuning and inference notebooks.

ConvNeXt-TF This repository provides TensorFlow / Keras implementations of different ConvNeXt [1] variants. It also provides the TensorFlow / Keras mo

Prompt tuning toolkit for GPT-2 and GPT-Neo

mkultra mkultra is a prompt tuning toolkit for GPT-2 and GPT-Neo. Prompt tuning injects a string of 20-100 special tokens into the context in order to

Multilingual Emotion classification using BERT (fine-tuning). Published at the WASSA workshop (ACL2022).

XLM-EMO: Multilingual Emotion Prediction in Social Media Text Abstract Detecting emotion in text allows social and computational scientists to study h

Repository for fine-tuning Transformers 🤗 based seq2seq speech models in JAX/Flax.

Seq2Seq Speech in JAX A JAX/Flax repository for combining a pre-trained speech encoder model (e.g. Wav2Vec2, HuBERT, WavLM) with a pre-trained text de

Code for the Findings of NAACL 2022(Long Paper): AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks

AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks arXiv link: upcoming To be published in Findings of NA

Code for our SIGIR 2022 accepted paper : P3 Ranker: Mitigating the Gaps between Pre-training and Ranking Fine-tuning with Prompt-based Learning and Pre-finetuning

P3 Ranker Implementation for our SIGIR2022 accepted paper: P3 Ranker: Mitigating the Gaps between Pre-training and Ranking Fine-tuning with Prompt-bas

In this project we predict the forest cover type using the cartographic variables in the training/test datasets.

Kaggle Competition: Forest Cover Type Prediction In this project we predict the forest cover type (the predominant kind of tree cover) using the carto

Under the hood working of transformers, fine-tuning GPT-3 models, DeBERTa, vision models, and the start of Metaverse, using a variety of NLP platforms: Hugging Face, OpenAI API, Trax, and AllenNLP

Transformers-for-NLP-2nd-Edition @copyright 2022, Packt Publishing, Denis Rothman Contact me for any question you have on LinkedIn Get the book on Ama

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning This repository is official Tensorflow implementation of paper: Ensemb

code for the ICLR'22 paper: On Robust Prefix-Tuning for Text Classification

On Robust Prefix-Tuning for Text Classification Prefix-tuning has drawed much attention as it is a parameter-efficient and modular alternative to adap

Codes for "Template-free Prompt Tuning for Few-shot NER".

EntLM The source codes for EntLM. Dependencies: Cuda 10.1, python 3.6.5 To install the required packages by following commands: $ pip3 install -r requ

Programmers-quest - Programmer's Quest! An open source MMO built on top of the Panda3D game engine and Astron server

Programmer's Quest! Programmer's Quest! The open source Python 3 2D MMORPG showc

Autolfads-tf2 - A TensorFlow 2.0 implementation of Latent Factor Analysis via Dynamical Systems (LFADS) and AutoLFADS

autolfads-tf2 A TensorFlow 2.0 implementation of LFADS and AutoLFADS. Installati

SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING - The Facebook paper about fine tuning RoBERTa with contrastive loss

"# SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING" i

OpenDelta - An Open-Source Framework for Paramter Efficient Tuning.

OpenDelta is a toolkit for parameter efficient methods (we dub it as delta tuning), by which users could flexibly assign (or add) a small amount parameters to update while keeping the most paramters frozen. By using OpenDelta, users could easily implement prefix-tuning, adapters, Lora, or any other types of delta tuning with preferred PTMs.

OpenFed: A Comprehensive and Versatile Open-Source Federated Learning Framework

OpenFed: A Comprehensive and Versatile Open-Source Federated Learning Framework Introduction OpenFed is a foundational library for federated learning

Locally Differentially Private Distributed Deep Learning via Knowledge Distillation (LDP-DL)

Locally Differentially Private Distributed Deep Learning via Knowledge Distillation (LDP-DL) A preprint version of our paper: Link here This is a samp

LightGBM + Optuna: no brainer

AutoLGBM LightGBM + Optuna: no brainer auto train lightgbm directly from CSV files auto tune lightgbm using optuna auto serve best lightgbm model usin

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers This repo contains our codes for the paper "No Parameters Left Behind: Sensitivity Gu

Large-scale Knowledge Graph Construction with Prompting

Large-scale Knowledge Graph Construction with Prompting across tasks (predictive and generative), and modalities (language, image, vision + language, etc.)

Pytorch Performace Tuning, WandB, AMP, Multi-GPU, TensorRT, Triton

Plant Pathology 2020 FGVC7 Introduction A deep learning model pipeline for training, experimentaiton and deployment for the Kaggle Competition, Plant

A new version of the CIDACS-RL linkage tool suitable to a cluster computing environment.

Fully Distributed CIDACS-RL The CIDACS-RL is a brazillian record linkage tool suitable to integrate large amount of data with high accuracy. However,

Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners

DART Implementation for ICLR2022 paper Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners. Environment [email protected] Use pi

BaseCls BaseCls 是一个基于 MegEngine 的预训练模型库,帮助大家挑选或训练出更适合自己科研或者业务的模型结构

BaseCls BaseCls 是一个基于 MegEngine 的预训练模型库,帮助大家挑选或训练出更适合自己科研或者业务的模型结构。 文档地址:https://basecls.readthedocs.io 安装 安装环境 BaseCls 需要 Python = 3.6。 BaseCls 依赖 M

FedML: A Research Library and Benchmark for Federated Machine Learning

FedML: A Research Library and Benchmark for Federated Machine Learning 📄 https://arxiv.org/abs/2007.13518 News 2021-02-01 (Award): #NeurIPS 2020# Fed

Near-Optimal Sparse Allreduce for Distributed Deep Learning (published in PPoPP'22)

Near-Optimal Sparse Allreduce for Distributed Deep Learning (published in PPoPP'22) Ok-Topk is a scheme for distributed training with sparse gradients

Generate fine-tuning samples & Fine-tuning the model & Generate samples by transferring Note On

UPMT Generate fine-tuning samples & Fine-tuning the model & Generate samples by transferring Note On See main.py as an example: from model import PopM

Hyperparameters tuning and features selection are two common steps in every machine learning pipeline.

shap-hypetune A python package for simultaneous Hyperparameters Tuning and Features Selection for Gradient Boosting Models. Overview Hyperparameters t

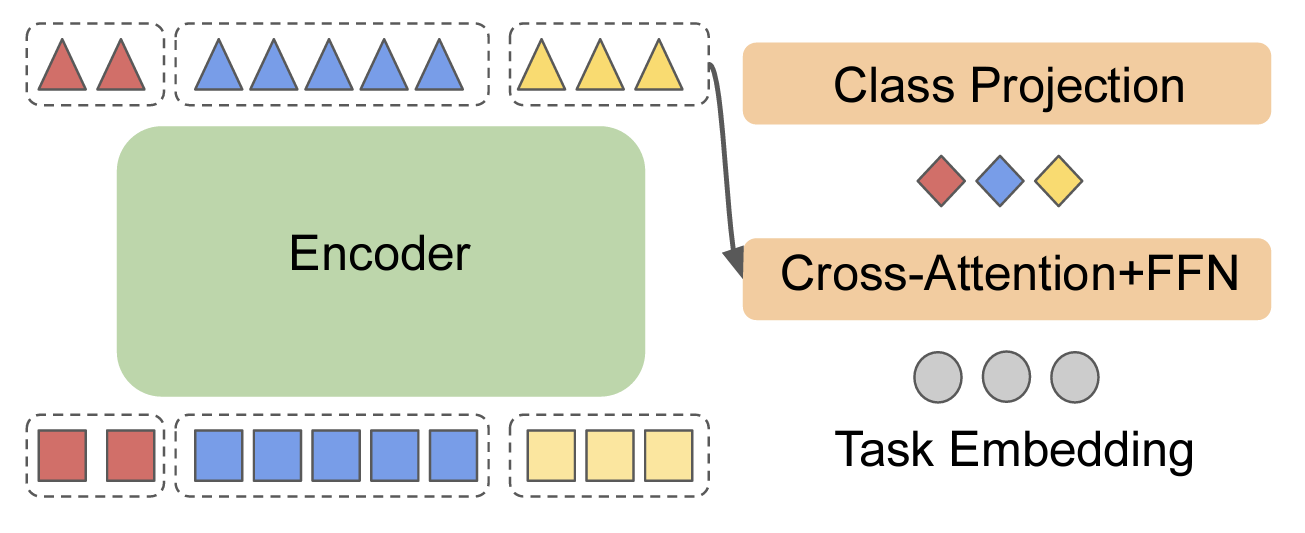

EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks

EncT5 (Unofficial) Pytorch Implementation of EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks About Finetune T5 model for classification & r

Orchestrating Distributed Materials Acceleration Platform Tutorial

Orchestrating Distributed Materials Acceleration Platform Tutorial This tutorial for orchestrating distributed materials acceleration platform was pre

ShadowClone allows you to distribute your long running tasks dynamically across thousands of serverless functions and gives you the results within seconds where it would have taken hours to complete

ShadowClone allows you to distribute your long running tasks dynamically across thousands of serverless functions and gives you the results within seconds where it would have taken hours to complete

This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML)

package tests docs license stats support This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML

Optuna is an automatic hyperparameter optimization software framework, particularly designed for machine learning

Optuna is an automatic hyperparameter optimization software framework, particularly designed for machine learning. It features an imperative, define-by-run style user API.

GNES enables large-scale index and semantic search for text-to-text, image-to-image, video-to-video and any-to-any content form

GNES is Generic Neural Elastic Search, a cloud-native semantic search system based on deep neural network.

Original Implementation of Prompt Tuning from Lester, et al, 2021

Prompt Tuning This is the code to reproduce the experiments from the EMNLP 2021 paper "The Power of Scale for Parameter-Efficient Prompt Tuning" (Lest

Circuit Training: An open-source framework for generating chip floor plans with distributed deep reinforcement learning

Circuit Training: An open-source framework for generating chip floor plans with distributed deep reinforcement learning. Circuit Training is an open-s

A handy tool for common machine learning models' hyper-parameter tuning.

Common machine learning models' hyperparameter tuning This repo is for a collection of hyper-parameter tuning for "common" machine learning models, in

In this project, we compared Spanish BERT and Multilingual BERT in the Sentiment Analysis task.

Applying BERT Fine Tuning to Sentiment Classification on Amazon Reviews Abstract Sentiment analysis has made great progress in recent years, due to th

Source code for paper "Black-Box Tuning for Language-Model-as-a-Service"

Black-Box-Tuning Source code for paper "Black-Box Tuning for Language-Model-as-a-Service". Being busy recently, the code in this repo and this tutoria

Collection of machine learning related notebooks to share.

ML_Notebooks Collection of machine learning related notebooks to share. Notebooks GAN_distributed_training.ipynb In this Notebook, TensorFlow's tutori

Black-Box-Tuning - Black-Box Tuning for Language-Model-as-a-Service

Black-Box-Tuning Source code for paper "Black-Box Tuning for Language-Model-as-a

We present a regularized self-labeling approach to improve the generalization and robustness properties of fine-tuning.

Overview This repository provides the implementation for the paper "Improved Regularization and Robustness for Fine-tuning in Neural Networks", which

Intel® Neural Compressor is an open-source Python library running on Intel CPUs and GPUs

Intel® Neural Compressor targeting to provide unified APIs for network compression technologies, such as low precision quantization, sparsity, pruning, knowledge distillation, across different deep learning frameworks to pursue optimal inference performance.

This code is the implementation of the paper "Coherence-Based Distributed Document Representation Learning for Scientific Documents".

Introduction This code is the implementation of the paper "Coherence-Based Distributed Document Representation Learning for Scientific Documents". If

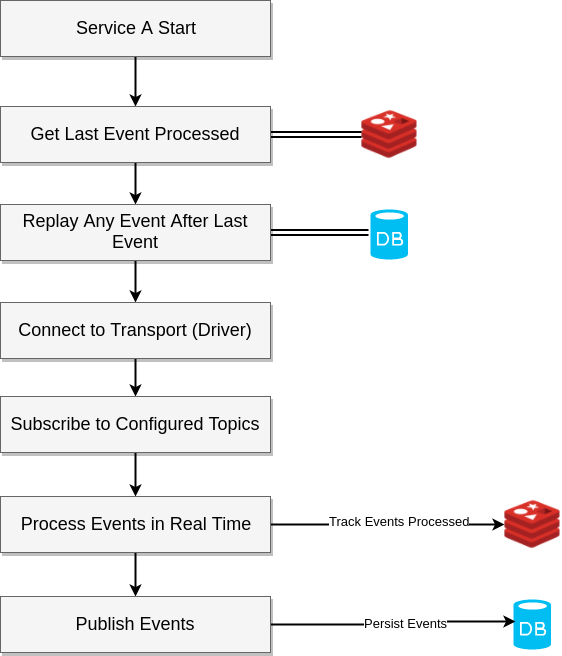

A lightweight python module for building event driven distributed systems

Eventify A lightweight python module for building event driven distributed systems. Installation pip install eventify Problem Developers need a easy a

An API-first distributed deployment system of deep learning models using timeseries data to analyze and predict systems behaviour

Gordo Building thousands of models with timeseries data to monitor systems. Table of content About Examples Install Uninstall Developer manual How to

Medical appointments No-Show classifier

Medical Appointments No-shows Why do 20% of patients miss their scheduled appointments? A person makes a doctor appointment, receives all the instruct

Open-source implementation of Google Vizier for hyper parameters tuning

Advisor Introduction Advisor is the hyper parameters tuning system for black box optimization. It is the open-source implementation of Google Vizier w

Distributed deep learning on Hadoop and Spark clusters.

Note: we're lovingly marking this project as Archived since we're no longer supporting it. You are welcome to read the code and fork your own version

Develop and deploy applications with the Ionburst Cloud Python SDK.

Ionburst SDK for Python The Ionburst SDK for Python enables developers to easily integrate with Ionburst Cloud, building in ultra-secure and private o

BigDL - Evaluate the performance of BigDL (Distributed Deep Learning on Apache Spark) in big data analysis problems

Evaluate the performance of BigDL (Distributed Deep Learning on Apache Spark) in big data analysis problems.

High available distributed ip proxy pool, powerd by Scrapy and Redis

高可用IP代理池 README | 中文文档 本项目所采集的IP资源都来自互联网,愿景是为大型爬虫项目提供一个高可用低延迟的高匿IP代理池。 项目亮点 代理来源丰富 代理抓取提取精准 代理校验严格合理 监控完备,鲁棒性强 架构灵活,便于扩展 各个组件分布式部署 快速开始 注意,代码请在release

A distributed crawler for weibo, building with celery and requests.

A distributed crawler for weibo, building with celery and requests.

Fine tuning keras-ocr python package with custom synthetic dataset from scratch

OCR-Pipeline-with-Keras The keras-ocr package generally consists of two parts: a Detector and a Recognizer: Detector is responsible for creating bound

RLMeta is a light-weight flexible framework for Distributed Reinforcement Learning Research.

RLMeta rlmeta - a flexible lightweight research framework for Distributed Reinforcement Learning based on PyTorch and moolib Installation To build fro

Calibrated Hyperspectral Image Reconstruction via Graph-based Self-Tuning Network.

mask-uncertainty-in-HSI This repository contains the testing code and pre-trained models for the paper Calibrated Hyperspectral Image Reconstruction v

Distributed Grid Descent: an algorithm for hyperparameter tuning guided by Bayesian inference, designed to run on multiple processes and potentially many machines with no central point of control

Distributed Grid Descent: an algorithm for hyperparameter tuning guided by Bayesian inference, designed to run on multiple processes and potentially many machines with no central point of control.

AutoGluon: AutoML for Text, Image, and Tabular Data

AutoML for Text, Image, and Tabular Data AutoGluon automates machine learning tasks enabling you to easily achieve strong predictive performance in yo

Tensorflow solution of NER task Using BiLSTM-CRF model with Google BERT Fine-tuning And private Server services

Tensorflow solution of NER task Using BiLSTM-CRF model with Google BERT Fine-tuning

Notes, programming assignments and quizzes from all courses within the Coursera Deep Learning specialization offered by deeplearning.ai

Coursera-deep-learning-specialization - Notes, programming assignments and quizzes from all courses within the Coursera Deep Learning specialization offered by deeplearning.ai: (i) Neural Networks and Deep Learning; (ii) Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization; (iii) Structuring Machine Learning Projects; (iv) Convolutional Neural Networks; (v) Sequence Models

Implementation of hyperparameter optimization/tuning methods for machine learning & deep learning models

Hyperparameter Optimization of Machine Learning Algorithms This code provides a hyper-parameter optimization implementation for machine learning algor

Client - 🔥 A tool for visualizing and tracking your machine learning experiments

Weights and Biases Use W&B to build better models faster. Track and visualize all the pieces of your machine learning pipeline, from datasets to produ

Distributed-systems-algos - Distributed Systems Algorithms For Python

Distributed Systems Algorithms ISIS algorithm In an asynchronous system that kee

Bigdata - This Scrapy project uses Redis and Kafka to create a distributed on demand scraping cluster

Scrapy Cluster This Scrapy project uses Redis and Kafka to create a distributed

Codeflare - Scale complex AI/ML pipelines anywhere

Scale complex AI/ML pipelines anywhere CodeFlare is a framework to simplify the integration, scaling and acceleration of complex multi-step analytics

This Scrapy project uses Redis and Kafka to create a distributed on demand scraping cluster

This Scrapy project uses Redis and Kafka to create a distributed on demand scraping cluster.

Hyperopt for solving CIFAR-100 with a convolutional neural network (CNN) built with Keras and TensorFlow, GPU backend

Hyperopt for solving CIFAR-100 with a convolutional neural network (CNN) built with Keras and TensorFlow, GPU backend This project acts as both a tuto

CCCL: Contrastive Cascade Graph Learning.

CCGL: Contrastive Cascade Graph Learning This repo provides a reference implementation of Contrastive Cascade Graph Learning (CCGL) framework as descr

Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking."

Expert-Linking Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking." This is

An distributed automation framework.

Automation Kit Repository Welcome to the Automation Kit repository! Note: This package is progressing quickly but is not yet ready for full production

Implementation of the paper "Fine-Tuning Transformers: Vocabulary Transfer"

Transformer-vocabulary-transfer Implementation of the paper "Fine-Tuning Transfo

Multilingual word vectors in 78 languages

Aligning the fastText vectors of 78 languages Facebook recently open-sourced word vectors in 89 languages. However these vectors are monolingual; mean

Code for a real-time distributed cooperative slam(RDC-SLAM) system for ROS compatible platforms.

RDC-SLAM This repository contains code for a real-time distributed cooperative slam(RDC-SLAM) system for ROS compatible platforms. The system takes in

Instrument asyncio Python for distributed tracing with AWS X-Ray.

xraysink (aka xray-asyncio) Extra AWS X-Ray instrumentation to use distributed tracing with asyncio Python libraries that are not (yet) supported by t

An off-line judger supporting distributed problem repositories

Thaw 中文 | English Thaw is an off-line judger supporting distributed problem repositories. Everyone can use Thaw release problems with license on GitHu

Python Actor concurrency library

Thespian Actor Library This library provides the framework of an Actor model for use by applications implementing Actors. Thespian Site with Documenta

Implementation of paper "Towards a Unified View of Parameter-Efficient Transfer Learning"

A Unified Framework for Parameter-Efficient Transfer Learning This is the official implementation of the paper: Towards a Unified View of Parameter-Ef

Free and Open, Distributed, RESTful Search Engine

Elasticsearch Elasticsearch is the distributed, RESTful search and analytics engine at the heart of the Elastic Stack. You can use Elasticsearch to st

Distributed behavioral experiments

Autopilot Docs Paper Forum Hardware Autopilot is a Python framework for performing complex, hardware-intensive behavioral experiments with swarms of n

Implementation of "The Power of Scale for Parameter-Efficient Prompt Tuning"

Prompt-Tuning Implementation of "The Power of Scale for Parameter-Efficient Prompt Tuning" Currently, we support the following huggigface models: Bart

A PyTorch Extension: Tools for easy mixed precision and distributed training in Pytorch

Introduction This is a Python package available on PyPI for NVIDIA-maintained utilities to streamline mixed precision and distributed training in Pyto

A pythonic interface to high-throughput virtual screening software

pyscreener A pythonic interface to high-throughput virtual screening software Overview This repository contains the source of pyscreener, both a libra

A pure-Python KSUID implementation

Svix - Webhooks as a service Svix-KSUID This library is inspired by Segment's KSUID implementation: https://github.com/segmentio/ksuid What is a ksuid

Clearly see and debug your celery cluster in real time!

Clearly see and debug your celery cluster in real time! Do you use celery, and monitor your tasks with flower? You'll probably like Clearly! 👍 Clearl

Deep Distributed Control of Port-Hamiltonian Systems

De(e)pendable Distributed Control of Port-Hamiltonian Systems (DeepDisCoPH) This repository is associated to the paper [1] and it contains: The full p

Robust fine-tuning of zero-shot models

Robust fine-tuning of zero-shot models This repository contains code for the paper Robust fine-tuning of zero-shot models by Mitchell Wortsman*, Gabri

A full pipeline AutoML tool for tabular data

HyperGBM Doc | 中文 We Are Hiring! Dear folks,we are offering challenging opportunities located in Beijing for both professionals and students who are k

A distributed block-based data storage and compute engine

Nebula is an extremely-fast end-to-end interactive big data analytics solution. Nebula is designed as a high-performance columnar data storage and tabular OLAP engine.

scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms.

Sklearn-genetic-opt scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms. This is meant to be an alternativ

A small distributed download manager to help bypass device-specific bandwidth limitations.

Distributed Download Manager A small distributed download manager to help bypass device-specific bandwidth limitations. Architecture The download mana

A high-level yet extensible library for fast language model tuning via automatic prompt search

ruPrompts ruPrompts is a high-level yet extensible library for fast language model tuning via automatic prompt search, featuring integration with Hugg

Fine-tuning scripts for evaluating transformer-based models on KLEJ benchmark.

The KLEJ Benchmark Baselines The KLEJ benchmark (Kompleksowa Lista Ewaluacji Językowych) is a set of nine evaluation tasks for the Polish language und