1034 Repositories

Python fast-transformers Libraries

Global Tracking Transformers, CVPR 2022

Global Tracking Transformers Global Tracking Transformers, Xingyi Zhou, Tianwei Yin, Vladlen Koltun, Philipp Krähenbühl, CVPR 2022 (arXiv 2203.13250)

JF⚡can - Super fast port scanning & service discovery using Masscan and Nmap. Scan large networks with Masscan and use Nmap's scripting abilities to discover information about services. Generate report.

Description Killing features Perform a large-scale scans using Nmap! Allows you to use Masscan to scan targets and execute Nmap on detected ports with

Implementation of Memorizing Transformers (ICLR 2022), attention net augmented with indexing and retrieval of memories using approximate nearest neighbors, in Pytorch

Memorizing Transformers - Pytorch Implementation of Memorizing Transformers (ICLR 2022), attention net augmented with indexing and retrieval of memori

ViewFormer: NeRF-free Neural Rendering from Few Images Using Transformers

ViewFormer: NeRF-free Neural Rendering from Few Images Using Transformers Official implementation of ViewFormer. ViewFormer is a NeRF-free neural rend

BDDM: Bilateral Denoising Diffusion Models for Fast and High-Quality Speech Synthesis

Bilateral Denoising Diffusion Models (BDDMs) This is the official PyTorch implementation of the following paper: BDDM: BILATERAL DENOISING DIFFUSION M

Lyrics generation with GPT2-based Transformer

HuggingArtists - Train a model to generate lyrics Create AI-Artist in just 5 minutes! 🚀 Run the demo notebook to train 🚀 Run the GUI demo to test Di

I will implement Fastai in each projects present in this repository.

DEEP LEARNING FOR CODERS WITH FASTAI AND PYTORCH The repository contains a list of the projects which I have worked on while reading the book Deep Lea

Cross-view Transformers for real-time Map-view Semantic Segmentation (CVPR 2022 Oral)

Cross View Transformers This repository contains the source code and data for our paper: Cross-view Transformers for real-time Map-view Semantic Segme

Accelerated NLP pipelines for fast inference on CPU and GPU. Built with Transformers, Optimum and ONNX Runtime.

Optimum Transformers Accelerated NLP pipelines for fast inference 🚀 on CPU and GPU. Built with 🤗 Transformers, Optimum and ONNX runtime. Installatio

Implementation of the Transformer variant proposed in "Transformer Quality in Linear Time"

FLASH - Pytorch Implementation of the Transformer variant proposed in the paper Transformer Quality in Linear Time Install $ pip install FLASH-pytorch

Official source code of Fast Point Transformer, CVPR 2022

Fast Point Transformer Project Page | Paper This repository contains the official source code and data for our paper: Fast Point Transformer Chunghyun

Code release for "BoxeR: Box-Attention for 2D and 3D Transformers"

BoxeR By Duy-Kien Nguyen, Jihong Ju, Olaf Booij, Martin R. Oswald, Cees Snoek. This repository is an official implementation of the paper BoxeR: Box-A

Python package to generate image embeddings with CLIP without PyTorch/TensorFlow

imgbeddings A Python package to generate embedding vectors from images, using OpenAI's robust CLIP model via Hugging Face transformers. These image em

Lightning ⚡️ fast forecasting with statistical and econometric models.

Nixtla Statistical ⚡️ Forecast Lightning fast forecasting with statistical and econometric models StatsForecast offers a collection of widely used uni

maximal update parametrization (µP)

Maximal Update Parametrization (μP) and Hyperparameter Transfer (μTransfer) Paper link | Blog link In Tensor Programs V: Tuning Large Neural Networks

HugsVision is a easy to use huggingface wrapper for state-of-the-art computer vision

HugsVision is an open-source and easy to use all-in-one huggingface wrapper for computer vision. The goal is to create a fast, flexible and user-frien

🤗🖼️ HuggingPics: Fine-tune Vision Transformers for anything using images found on the web.

🤗 🖼️ HuggingPics Fine-tune Vision Transformers for anything using images found on the web. Check out the video below for a walkthrough of this proje

Curso práctico: NLP de cero a cien 🤗

Curso Práctico: NLP de cero a cien Comprende todos los conceptos y arquitecturas clave del estado del arte del NLP y aplícalos a casos prácticos utili

Face recognition system using MTCNN, FACENET, SVM and FAST API to track participants of Big Brother Brasil in real time.

BBB Face Recognizer Face recognition system using MTCNN, FACENET, SVM and FAST API to track participants of Big Brother Brasil in real time. Instalati

Building a real-time environment using webcam frame division in OpenCV and classify cropped images using a fine-tuned vision transformers on hybryd datasets samples for facial emotion recognition.

Visual Transformer for Facial Emotion Recognition (FER) This project has the aim to build an efficient Visual Transformer for the Facial Emotion Recog

Simple, Fast, Powerful and Easily extensible python package for extracting patterns from text, with over than 60 predefined Regular Expressions.

patterns-finder Simple, Fast, Powerful and Easily extensible python package for extracting patterns from text, with over than 60 predefined Regular Ex

A fast poisson image editing implementation that can utilize multi-core CPU or GPU to handle a high-resolution image input.

Poisson Image Editing - A Parallel Implementation Jiayi Weng (jiayiwen), Zixu Chen (zixuc) Poisson Image Editing is a technique that can fuse two imag

Implementation of CoCa, Contrastive Captioners are Image-Text Foundation Models, in Pytorch

CoCa - Pytorch Implementation of CoCa, Contrastive Captioners are Image-Text Foundation Models, in Pytorch. They were able to elegantly fit in contras

HuggingSound: A toolkit for speech-related tasks based on HuggingFace's tools

HuggingSound HuggingSound: A toolkit for speech-related tasks based on HuggingFace's tools. I have no intention of building a very complex tool here.

Optical character recognition for Japanese text, with the main focus being Japanese manga

Manga OCR Optical character recognition for Japanese text, with the main focus being Japanese manga. It uses a custom end-to-end model built with Tran

Implementation of ETSformer, state of the art time-series Transformer, in Pytorch

ETSformer - Pytorch Implementation of ETSformer, state of the art time-series Transformer, in Pytorch Install $ pip install etsformer-pytorch Usage im

SentimentArcs: a large ensemble of dozens of sentiment analysis models to analyze emotion in text over time

SentimentArcs - Emotion in Text An end-to-end pipeline based on Jupyter notebooks to detect, extract, process and anlayze emotion over time in text. E

A fast hierarchical dimensionality reduction algorithm.

h-NNE: Hierarchical Nearest Neighbor Embedding A fast hierarchical dimensionality reduction algorithm. h-NNE is a general purpose dimensionality reduc

Implementation of CaiT models in TensorFlow and ImageNet-1k checkpoints. Includes code for inference and fine-tuning.

CaiT-TF (Going deeper with Image Transformers) This repository provides TensorFlow / Keras implementations of different CaiT [1] variants from Touvron

A Persian Image Captioning model based on Vision Encoder Decoder Models of the transformers🤗.

Persian-Image-Captioning We fine-tuning the Vision Encoder Decoder Model for the task of image captioning on the coco-flickr-farsi dataset. The implem

Official Code Implementation of the paper : XAI for Transformers: Better Explanations through Conservative Propagation

Official Code Implementation of The Paper : XAI for Transformers: Better Explanations through Conservative Propagation For the SST-2 and IMDB expermin

Azure free vpn for students only! (Self hosted/No sketchy services/Fast and free)

Azpn-Azure-Free-VPN Azure free vpn for students only! (Self hosted/No sketchy services/Fast and free) This is an alternative secure way of accessing f

Contains the code and data for our #ICSE2022 paper titled as "CodeFill: Multi-token Code Completion by Jointly Learning from Structure and Naming Sequences"

CodeFill This repository contains the code for our paper titled as "CodeFill: Multi-token Code Completion by Jointly Learning from Structure and Namin

As-ViT: Auto-scaling Vision Transformers without Training

As-ViT: Auto-scaling Vision Transformers without Training [PDF] Wuyang Chen, Wei Huang, Xianzhi Du, Xiaodan Song, Zhangyang Wang, Denny Zhou In ICLR 2

QuadTree Attention for Vision Transformers (ICLR2022)

This repository contains codes for quadtree attention. This repo contains codes for feature matching, image classficiation, object detection and seman

(CVPR 2022) Pytorch implementation of "Self-supervised transformers for unsupervised object discovery using normalized cut"

(CVPR 2022) TokenCut Pytorch implementation of Tokencut: Self-supervised Transformers for Unsupervised Object Discovery using Normalized Cut Yangtao W

CLIP (Contrastive Language–Image Pre-training) for Italian

Italian CLIP CLIP (Radford et al., 2021) is a multimodal model that can learn to represent images and text jointly in the same space. In this project,

🏎️ Accelerate training and inference of 🤗 Transformers with easy to use hardware optimization tools

Hugging Face Optimum 🤗 Optimum is an extension of 🤗 Transformers, providing a set of performance optimization tools enabling maximum efficiency to t

This is a pytorch implementation for the BST model from Alibaba https://arxiv.org/pdf/1905.06874.pdf

Behavior-Sequence-Transformer-Pytorch This is a pytorch implementation for the BST model from Alibaba https://arxiv.org/pdf/1905.06874.pdf This model

A repository to run gpt-j-6b on low vram machines (4.2 gb minimum vram for 2000 token context, 3.5 gb for 1000 token context). Model loading takes 12gb free ram.

Basic-UI-for-GPT-J-6B-with-low-vram A repository to run GPT-J-6B on low vram systems by using both ram, vram and pinned memory. There seem to be some

Persian Bert For Long-Range Sequences

ParsBigBird: Persian Bert For Long-Range Sequences The Bert and ParsBert algorithms can handle texts with token lengths of up to 512, however, many ta

PyTorch implementation of Anomaly Transformer: Time Series Anomaly Detection with Association Discrepancy

Anomaly Transformer in PyTorch This is an implementation of Anomaly Transformer: Time Series Anomaly Detection with Association Discrepancy. This pape

Fast SHAP value computation for interpreting tree-based models

FastTreeSHAP FastTreeSHAP package is built based on the paper Fast TreeSHAP: Accelerating SHAP Value Computation for Trees published in NeurIPS 2021 X

Self-Supervised Vision Transformers Learn Visual Concepts in Histopathology (LMRL Workshop, NeurIPS 2021)

Self-Supervised Vision Transformers Learn Visual Concepts in Histopathology Self-Supervised Vision Transformers Learn Visual Concepts in Histopatholog

.png)

Contextual Attention Network: Transformer Meets U-Net

Contextual Attention Network: Transformer Meets U-Net Contexual attention network for medical image segmentation with state of the art results on skin

Dome - Subdomain Enumeration Tool. Fast and reliable python script that makes active and/or passive scan to obtain subdomains and search for open ports.

DOME - A subdomain enumeration tool Check the Spanish Version Dome is a fast and reliable python script that makes active and/or passive scan to obtai

[CVPR 2022] Official Pytorch code for OW-DETR: Open-world Detection Transformer

OW-DETR: Open-world Detection Transformer (CVPR 2022) [Paper] Akshita Gupta*, Sanath Narayan*, K J Joseph, Salman Khan, Fahad Shahbaz Khan, Mubarak Sh

[CVPR 2022 Oral] TubeDETR: Spatio-Temporal Video Grounding with Transformers

TubeDETR: Spatio-Temporal Video Grounding with Transformers Website • STVG Demo • Paper This repository provides the code for our paper. This includes

REGTR: End-to-end Point Cloud Correspondences with Transformers

REGTR: End-to-end Point Cloud Correspondences with Transformers This repository contains the source code for REGTR. REGTR utilizes multiple transforme

⚡TIKTOK BOT - FAST OPTIMIZED ZEFOY SCRIPT

⚡ ZEFOY [ TikTok Zefoy Bot ] Get the script in: discord.gg/onlp !! Official shop: onlp.sellix.io Newest version v.9.0.0 Requirements pip install p

iSTFTNet : Fast and Lightweight Mel-spectrogram Vocoder Incorporating Inverse Short-time Fourier Transform

iSTFTNet : Fast and Lightweight Mel-spectrogram Vocoder Incorporating Inverse Short-time Fourier Transform This repo try to implement iSTFTNet : Fast

Code for "MetaMorph: Learning Universal Controllers with Transformers", Gupta et al, ICLR 2022

MetaMorph: Learning Universal Controllers with Transformers This is the code for the paper MetaMorph: Learning Universal Controllers with Transformers

Official Implementation of DE-DETR and DELA-DETR in "Towards Data-Efficient Detection Transformers"

DE-DETRs By Wen Wang, Jing Zhang, Yang Cao, Yongliang Shen, and Dacheng Tao This repository is an official implementation of DE-DETR and DELA-DETR in

![[ICLR 2022] Pretraining Text Encoders with Adversarial Mixture of Training Signal Generators](https://github.com/microsoft/AMOS/raw/main/AMOS.png)

[ICLR 2022] Pretraining Text Encoders with Adversarial Mixture of Training Signal Generators

AMOS This repository contains the scripts for fine-tuning AMOS pretrained models on GLUE and SQuAD 2.0 benchmarks. Paper: Pretraining Text Encoders wi

Official Implementation of DE-CondDETR and DELA-CondDETR in "Towards Data-Efficient Detection Transformers"

DE-DETRs By Wen Wang, Jing Zhang, Yang Cao, Yongliang Shen, and Dacheng Tao This repository is an official implementation of DE-CondDETR and DELA-Cond

FAST Aiming at the problems of cumbersome steps and slow download speed of GNSS data

FAST Aiming at the problems of cumbersome steps and slow download speed of GNSS data, a relatively complete set of integrated multi-source data download terminal software fast is developed. The software contains most of the data sources required in the process of GNSS scientific research and learning. The way of parallel download greatly improves the efficiency of download.

Beyond Masking: Demystifying Token-Based Pre-Training for Vision Transformers

beyond masking Beyond Masking: Demystifying Token-Based Pre-Training for Vision Transformers The code is coming Figure 1: Pipeline of token-based pre-

![[CVPR'22] Official PyTorch Implementation of Collaborative Transformers for Grounded Situation Recognition](https://user-images.githubusercontent.com/55849968/160762073-9a458795-03c1-4b2a-8945-2187b5a48aca.png)

[CVPR'22] Official PyTorch Implementation of Collaborative Transformers for Grounded Situation Recognition

[CVPR'22] Collaborative Transformers for Grounded Situation Recognition Paper | Model Checkpoint This is the official PyTorch implementation of Collab

Implementation of a protein autoregressive language model, but with autoregressive infilling objective (editing subsequences capability)

Protein GLM (wip) Implementation of a protein autoregressive language model, but with autoregressive infilling objective (editing subsequences capabil

Repository for fine-tuning Transformers 🤗 based seq2seq speech models in JAX/Flax.

Seq2Seq Speech in JAX A JAX/Flax repository for combining a pre-trained speech encoder model (e.g. Wav2Vec2, HuBERT, WavLM) with a pre-trained text de

Implementation of 🦩 Flamingo, state-of-the-art few-shot visual question answering attention net out of Deepmind, in Pytorch

🦩 Flamingo - Pytorch Implementation of Flamingo, state-of-the-art few-shot visual question answering attention net, in Pytorch. It will include the p

Direct application of DALLE-2 to video synthesis, using factored space-time Unet and Transformers

DALLE2 Video (wip) ** only to be built after DALLE2 image is done and replicated, and the importance of the prior network is validated ** Direct appli

Fast and multi-threaded script to automatically claim targeted username including 14 day bypass

Instagram Username Auto Claimer Fast and multi-threaded script to automatically claim targeted username. Click here to report bugs. Usage Download ZIP

Implementation of the GVP-Transformer, which was used in the paper "Learning inverse folding from millions of predicted structures" for de novo protein design alongside Alphafold2

GVP Transformer (wip) Implementation of the GVP-Transformer, which was used in the paper Learning inverse folding from millions of predicted structure

Code for the Findings of NAACL 2022(Long Paper): AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks

AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks arXiv link: upcoming To be published in Findings of NA

Fit Fast, Explain Fast

FastExplain Fit Fast, Explain Fast Installing pip install fast-explain About FastExplain FastExplain provides an out-of-the-box tool for analysts to

PSTR: End-to-End One-Step Person Search With Transformers (CVPR2022)

PSTR (CVPR2022) This code is an official implementation of "PSTR: End-to-End One-Step Person Search With Transformers (CVPR2022)". End-to-end one-step

Fast TikTok NO Watermark Video Downloader (username or url)

💎 TD [ TikDown v4 ] Star ⭐ if you want more Discord Server * discord.gg/onlp | Waxor#9999 Why not open source anymore ? * BECAUSE PEOPLE SKID, STEA

Implementation of MeMOT - Multi-Object Tracking with Memory - in Pytorch

MeMOT - Pytorch (wip) Implementation of MeMOT - Multi-Object Tracking with Memory - in Pytorch. This paper is just one in a line of work, but importan

Lane assist for ETS2, built with the ultra-fast-lane-detection model.

Euro-Truck-Simulator-2-Lane-Assist Lane assist for ETS2, built with the ultra-fast-lane-detection model. This project was made possible by the amazing

A general-purpose programming language, focused on simplicity, safety and stability.

The Rivet programming language A general-purpose programming language, focused on simplicity, safety and stability. Rivet's goal is to be a very power

UpChecker is a simple opensource project to host it fast on your server and check is server up, view statistic, get messages if it is down. UpChecker - just run file and use project easy

UpChecker UpChecker is a simple opensource project to host it fast on your server and check is server up, view statistic, get messages if it is down.

Blazing fast GraphQL endpoints finder using subdomain enumeration, scripts analysis and bruteforce.

Graphinder Graphinder is a tool that extracts all GraphQL endpoints from a given domain. Run with docker docker run -it -v $(pwd):/usr/bin/graphinder

An IPC based on Websockets, fast, stable, and reliable

winerp An IPC based on Websockets. Fast, Stable, and easy-to-use, for inter-communication between your processes or discord.py bots. Key Features Fast

Implementation of the Hybrid Perception Block and Dual-Pruned Self-Attention block from the ITTR paper for Image to Image Translation using Transformers

ITTR - Pytorch Implementation of the Hybrid Perception Block (HPB) and Dual-Pruned Self-Attention (DPSA) block from the ITTR paper for Image to Image

NoSecerets is a python script that is designed to crack hashes extremely fast. Faster even than Hashcat

NoSecerets NoSecerets is a python script that is designed to crack hashes extremely fast. Faster even than Hashcat How does it work? Instead of taking

Under the hood working of transformers, fine-tuning GPT-3 models, DeBERTa, vision models, and the start of Metaverse, using a variety of NLP platforms: Hugging Face, OpenAI API, Trax, and AllenNLP

Transformers-for-NLP-2nd-Edition @copyright 2022, Packt Publishing, Denis Rothman Contact me for any question you have on LinkedIn Get the book on Ama

![[CVPR 2022]](https://github.com/VITA-Group/Diverse-ViT/raw/main/Figs/Diversity_overview.png)

[CVPR 2022] "The Principle of Diversity: Training Stronger Vision Transformers Calls for Reducing All Levels of Redundancy" by Tianlong Chen, Zhenyu Zhang, Yu Cheng, Ahmed Awadallah, Zhangyang Wang

The Principle of Diversity: Training Stronger Vision Transformers Calls for Reducing All Levels of Redundancy Codes for this paper: [CVPR 2022] The Pr

Fast and customizable reconnaissance workflow tool based on simple YAML based DSL.

Fast and customizable reconnaissance workflow tool based on simple YAML based DSL, with support of notifications and distributed workload of that work

This is a general repo that helps you develop fast/effective NLP classifiers using Huggingface

NLP Classifier Introduction This project trains a bert model on any NLP classifcation model. And uses the model in make predictions on new data using

Implementation of the state-of-the-art vision transformers with tensorflow

ViT Tensorflow This repository contains the tensorflow implementation of the state-of-the-art vision transformers (a category of computer vision model

Transformers-regression - Regression Bugs Are In Your Model! Measuring, Reducing and Analyzing Regressions In NLP Model Updates

Regression Free Model Update Code for the paper: Regression Bugs Are In Your Mod

Super-Fast-Adversarial-Training - A PyTorch Implementation code for developing super fast adversarial training

Super-Fast-Adversarial-Training This is a PyTorch Implementation code for develo

Instant-nerf-pytorch - NeRF trained SUPER FAST in pytorch

instant-nerf-pytorch This is WORK IN PROGRESS, please feel free to contribute vi

Python code for ICLR 2022 spotlight paper EViT: Expediting Vision Transformers via Token Reorganizations

Expediting Vision Transformers via Token Reorganizations This repository contain

GeoTransformer - Geometric Transformer for Fast and Robust Point Cloud Registration

Geometric Transformer for Fast and Robust Point Cloud Registration PyTorch imple

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers Authors: Jaemin Cho, Abhay Zala, and Mohit Bansal (

TorchMD-Net provides state-of-the-art graph neural networks and equivariant transformer neural networks potentials for learning molecular potentials

TorchMD-net TorchMD-Net provides state-of-the-art graph neural networks and equivariant transformer neural networks potentials for learning molecular

Convert BART models to ONNX with quantization. 3X reduction in size, and upto 3X boost in inference speed

fast-Bart Reduction of BART model size by 3X, and boost in inference speed up to 3X BART implementation of the fastT5 library (https://github.com/Ki6a

CATE: Computation-aware Neural Architecture Encoding with Transformers

CATE: Computation-aware Neural Architecture Encoding with Transformers Code for paper: CATE: Computation-aware Neural Architecture Encoding with Trans

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers Authors: Jaemin Cho, Abhay Zala, and Mohit Bansal (

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers This repo contains our codes for the paper "No Parameters Left Behind: Sensitivity Gu

PLStream: A Framework for Fast Polarity Labelling of Massive Data Streams

PLStream: A Framework for Fast Polarity Labelling of Massive Data Streams Motivation When dataset freshness is critical, the annotating of high speed

Rank-One Model Editing for Locating and Editing Factual Knowledge in GPT

Rank-One Model Editing (ROME) This repository provides an implementation of Rank-One Model Editing (ROME) on auto-regressive transformers (GPU-only).

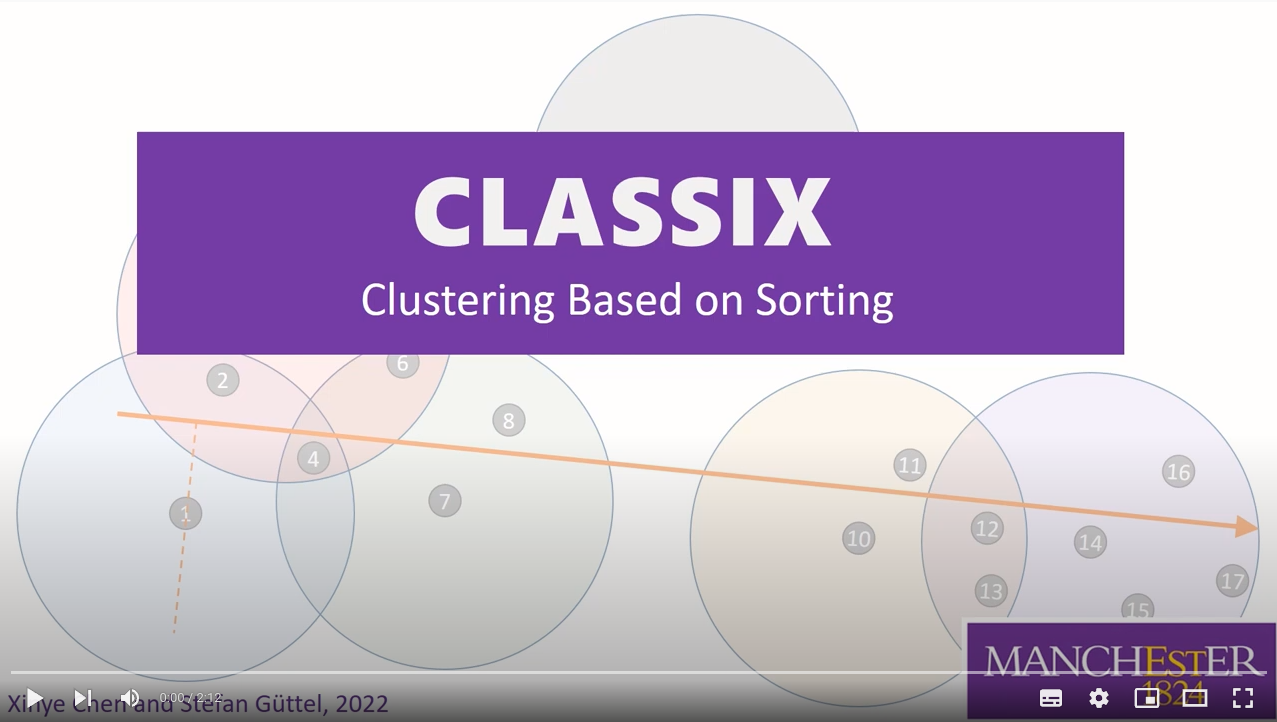

CLASSIX is a fast and explainable clustering algorithm based on sorting

CLASSIX Fast and explainable clustering based on sorting CLASSIX is a fast and explainable clustering algorithm based on sorting. Here are a few highl

MCNameBot is a fast discord bot that is used to check the availability of a Minecraft name with a simple command.

MCNameBot MCNameBot is a fast discord bot that is used to check the availability of a Minecraft name with a simple command. If you would like to just

RipsNet: a general architecture for fast and robust estimation of the persistent homology of point clouds

RipsNet: a general architecture for fast and robust estimation of the persistent homology of point clouds This repository contains the code asscoiated

Transformers provides thousands of pretrained models to perform tasks on different modalities such as text, vision, and audio.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Official Implementation of "Transformers Can Do Bayesian Inference"

Official Code for the Paper "Transformers Can Do Bayesian Inference" We train Transformers to do Bayesian Prediction on novel datasets for a large var

YOLOv7 - Framework Beyond Detection

🔥🔥🔥🔥 YOLO with Transformers and Instance Segmentation, with TensorRT acceleration! 🔥🔥🔥