1366 Repositories

Python generalized-linear-mixed-models Libraries

tf2onnx - Convert TensorFlow, Keras and Tflite models to ONNX.

tf2onnx converts TensorFlow (tf-1.x or tf-2.x), tf.keras and tflite models to ONNX via command line or python api.

This repo contains the official code and pre-trained models for the Dynamic Vision Transformer (DVT).

Dynamic-Vision-Transformer (Pytorch) This repo contains the official code and pre-trained models for the Dynamic Vision Transformer (DVT). Not All Ima

Repository for the "Gotta Go Fast When Generating Data with Score-Based Models" paper

Gotta Go Fast When Generating Data with Score-Based Models This repo contains the official implementation for the paper Gotta Go Fast When Generating

SparseML is a libraries for applying sparsification recipes to neural networks with a few lines of code, enabling faster and smaller models

SparseML is a toolkit that includes APIs, CLIs, scripts and libraries that apply state-of-the-art sparsification algorithms such as pruning and quantization to any neural network. General, recipe-driven approaches built around these algorithms enable the simplification of creating faster and smaller models for the ML performance community at large.

Anonymize BLM Protest Images

Anonymize BLM Protest Images This repository automates @BLMPrivacyBot, a Twitter bot that shows the anonymized images to help keep protesters safe. Us

This project provides an unsupervised framework for mining and tagging quality phrases on text corpora with pretrained language models (KDD'21).

UCPhrase: Unsupervised Context-aware Quality Phrase Tagging To appear on KDD'21...[pdf] This project provides an unsupervised framework for mining and

A New, Interactive Approach to Learning Python

This is the repository for The Python Workshop, published by Packt. It contains all the supporting project files necessary to work through the course from start to finish.

Official PyTorch implementation for "Mixed supervision for surface-defect detection: from weakly to fully supervised learning"

Mixed supervision for surface-defect detection: from weakly to fully supervised learning [Computers in Industry 2021] Official PyTorch implementation

This repository contains the implementations related to the experiments of a set of publicly available datasets that are used in the time series forecasting research space.

TSForecasting This repository contains the implementations related to the experiments of a set of publicly available datasets that are used in the tim

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models.

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models. The library is a collection of Keras models and supports classification, regression and ranking. TF-DF is a TensorFlow wrapper around the Yggdrasil Decision Forests C++ libraries. Models trained with TF-DF are compatible with Yggdrasil Decision Forests' models, and vice versa.

True Few-Shot Learning with Language Models

This codebase supports using language models (LMs) for true few-shot learning: learning to perform a task using a limited number of examples from a single task distribution.

Code for the paper "Adapting Monolingual Models: Data can be Scarce when Language Similarity is High"

Wietse de Vries • Martijn Bartelds • Malvina Nissim • Martijn Wieling Adapting Monolingual Models: Data can be Scarce when Language Similarity is High

An evaluation toolkit for voice conversion models.

Voice-conversion-evaluation An evaluation toolkit for voice conversion models. Sample test pair Generate the metadata for evaluating models. The direc

JMP is a Mixed Precision library for JAX.

Mixed precision training [0] is a technique that mixes the use of full and half precision floating point numbers during training to reduce the memory bandwidth requirements and improve the computational efficiency of a given model.

covid question answering datasets and fine tuned models

Covid-QA Fine tuned models for question answering on Covid-19 data. Hosted Inference This model has been contributed to huggingface.Click here to see

Generate lookml for views from dbt models

dbt2looker Use dbt2looker to generate Looker view files automatically from dbt models. Features Column descriptions synced to looker Dimension for eac

Finding an Unsupervised Image Segmenter in each of your Deep Generative Models

Finding an Unsupervised Image Segmenter in each of your Deep Generative Models Description Recent research has shown that numerous human-interpretable

Lipstick ain't enough: Beyond Color-Matching for In-the-Wild Makeup Transfer (CVPR 2021)

Table of Content Introduction Datasets Getting Started Requirements Usage Example Training & Evaluation CPM: Color-Pattern Makeup Transfer CPM is a ho

Apache Liminal is an end-to-end platform for data engineers & scientists, allowing them to build, train and deploy machine learning models in a robust and agile way

Apache Liminals goal is to operationalise the machine learning process, allowing data scientists to quickly transition from a successful experiment to an automated pipeline of model training, validation, deployment and inference in production. Liminal provides a Domain Specific Language to build ML workflows on top of Apache Airflow.

A Lucid Framework for Transparent and Interpretable Machine Learning Models.

Currently a Beta-Version lucidmode is an open-source, low-code and lightweight Python framework for transparent and interpretable machine learning mod

An evaluation toolkit for voice conversion models.

Voice-conversion-evaluation An evaluation toolkit for voice conversion models. Sample test pair Generate the metadata for evaluating models. The direc

XtremeDistil framework for distilling/compressing massive multilingual neural network models to tiny and efficient models for AI at scale

XtremeDistilTransformers for Distilling Massive Multilingual Neural Networks ACL 2020 Microsoft Research [Paper] [Video] Releasing [XtremeDistilTransf

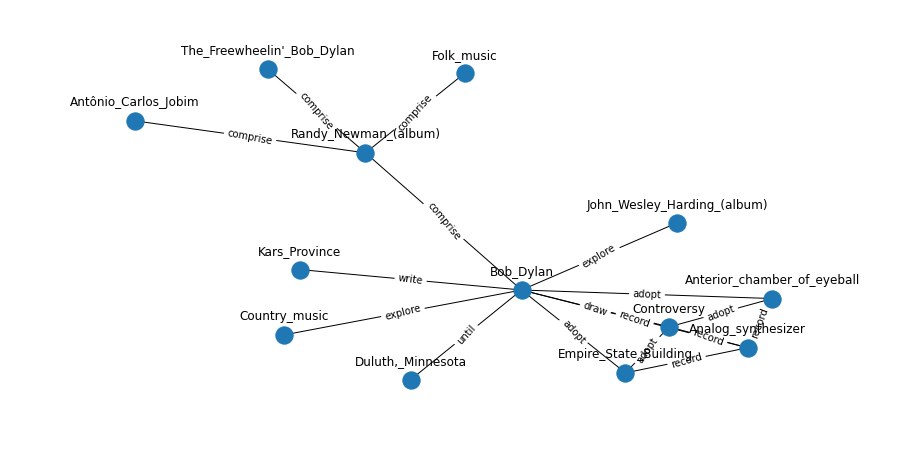

Language models are open knowledge graphs ( non official implementation )

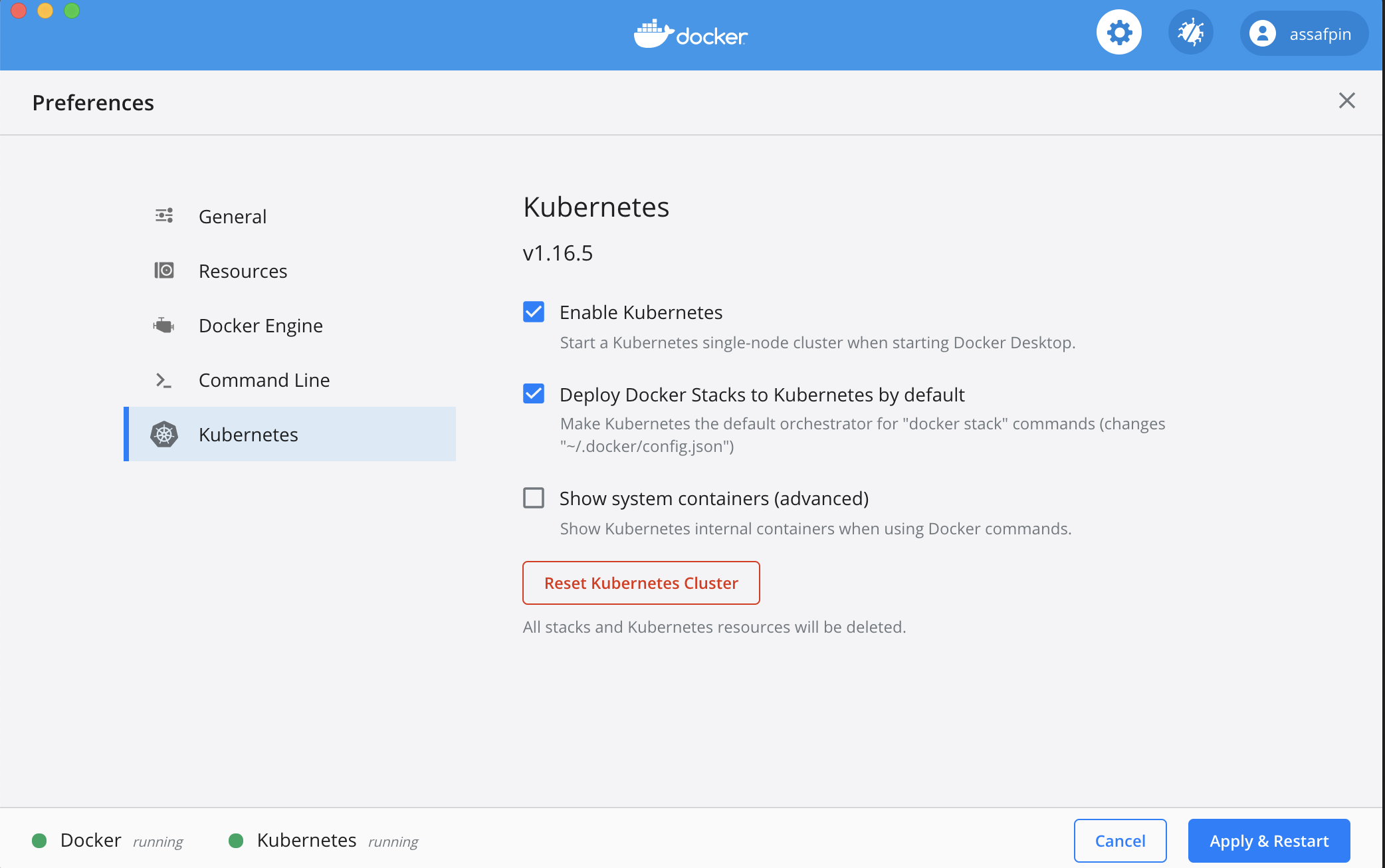

language-models-are-knowledge-graphs-pytorch Language models are open knowledge graphs ( work in progress ) A non official reimplementation of Languag

Pretrained models for Jax/Flax: StyleGAN2, GPT2, VGG, ResNet.

Pretrained models for Jax/Flax: StyleGAN2, GPT2, VGG, ResNet.

PyTorch Implementation for AAAI'21 "Do Response Selection Models Really Know What's Next? Utterance Manipulation Strategies for Multi-turn Response Selection"

UMS for Multi-turn Response Selection Implements the model described in the following paper Do Response Selection Models Really Know What's Next? Utte

Code and models used in "MUSS Multilingual Unsupervised Sentence Simplification by Mining Paraphrases".

Multilingual Unsupervised Sentence Simplification Code and pretrained models to reproduce experiments in "MUSS: Multilingual Unsupervised Sentence Sim

Ever felt tired after preprocessing the dataset, and not wanting to write any code further to train your model? Ever encountered a situation where you wanted to record the hyperparameters of the trained model and able to retrieve it afterward? Models Playground is here to help you do that. Models playground allows you to train your models right from the browser.

Models Playground 🗂️ Upload a Preprocessed Dataset 🌠 Choose whether to perform Classification or Regression 🦹 Enter the Dependent Variable ?

This is the codebase for Diffusion Models Beat GANS on Image Synthesis.

This is the codebase for Diffusion Models Beat GANS on Image Synthesis.

A collection of GNN-based fake news detection models.

This repo includes the Pytorch-Geometric implementation of a series of Graph Neural Network (GNN) based fake news detection models. All GNN models are implemented and evaluated under the User Preference-aware Fake News Detection (UPFD) framework. The fake news detection problem is instantiated as a graph classification task under the UPFD framework.

LaneDet is an open source lane detection toolbox based on PyTorch that aims to pull together a wide variety of state-of-the-art lane detection models

LaneDet is an open source lane detection toolbox based on PyTorch that aims to pull together a wide variety of state-of-the-art lane detection models. Developers can reproduce these SOTA methods and build their own methods.

a CLI that provides a generic automation layer for assessing the security of ML models

Counterfit About | Getting Started | Learn More | Acknowledgments | Contributing | Trademarks | Contact Us -------------------------------------------

Objective of the repository is to learn and build machine learning models using Pytorch. 30DaysofML Using Pytorch

30 Days Of Machine Learning Using Pytorch Objective of the repository is to learn and build machine learning models using Pytorch. List of Algorithms

a CLI that provides a generic automation layer for assessing the security of ML models

a CLI that provides a generic automation layer for assessing the security of ML models

Repository for XLM-T, a framework for evaluating multilingual language models on Twitter data

This is the XLM-T repository, which includes data, code and pre-trained multilingual language models for Twitter. XLM-T - A Multilingual Language Mode

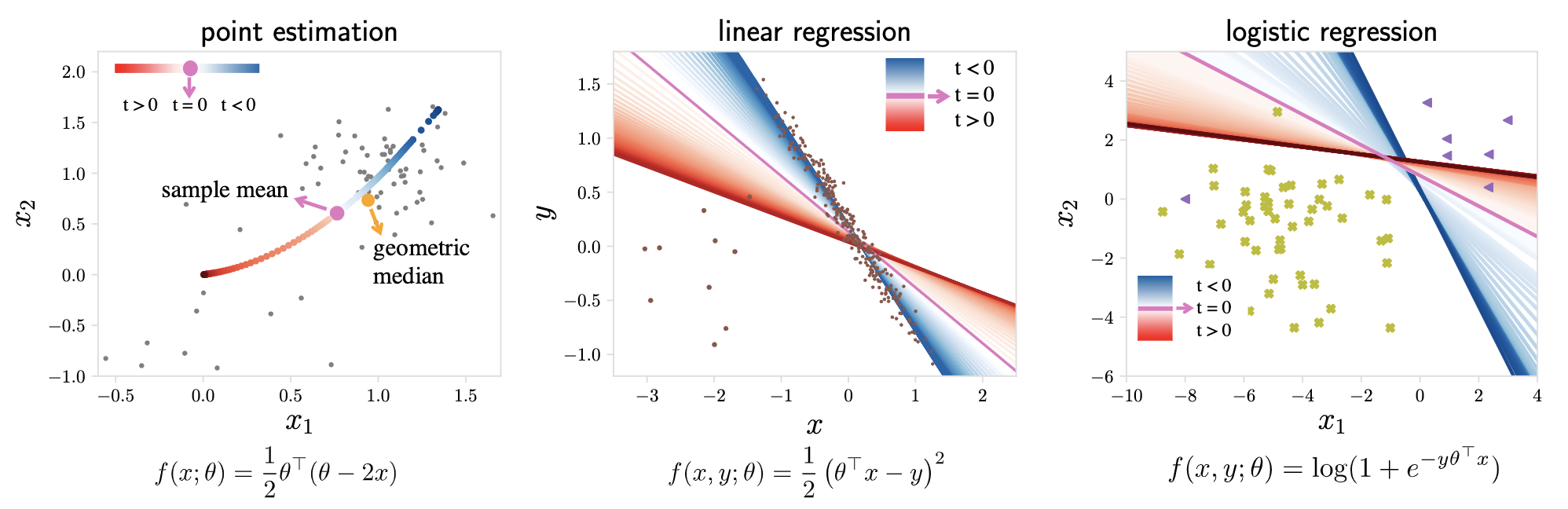

Tilted Empirical Risk Minimization (ICLR '21)

Tilted Empirical Risk Minimization This repository contains the implementation for the paper Tilted Empirical Risk Minimization ICLR 2021 Empirical ri

Official repository for the ICLR 2021 paper Evaluating the Disentanglement of Deep Generative Models with Manifold Topology

Official repository for the ICLR 2021 paper Evaluating the Disentanglement of Deep Generative Models with Manifold Topology Sharon Zhou, Eric Zelikman

Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models

Parrot is a paraphrase based utterance augmentation framework purpose built to accelerate training NLU models. A paraphrase framework is more than just a paraphrasing model.

Pytorch-Named-Entity-Recognition-with-BERT

BERT NER Use google BERT to do CoNLL-2003 NER ! Train model using Python and Inference using C++ ALBERT-TF2.0 BERT-NER-TENSORFLOW-2.0 BERT-SQuAD Requi

A modular framework for vision & language multimodal research from Facebook AI Research (FAIR)

MMF is a modular framework for vision and language multimodal research from Facebook AI Research. MMF contains reference implementations of state-of-t

Neural building blocks for speaker diarization: speech activity detection, speaker change detection, overlapped speech detection, speaker embedding

⚠️ Checkout develop branch to see what is coming in pyannote.audio 2.0: a much smaller and cleaner codebase Python-first API (the good old pyannote-au

A PyTorch Implementation of End-to-End Models for Speech-to-Text

speech Speech is an open-source package to build end-to-end models for automatic speech recognition. Sequence-to-sequence models with attention, Conne

Interpretable Models for NLP using PyTorch

This repo is deprecated. Please find the updated package here. https://github.com/EdGENetworks/anuvada Anuvada: Interpretable Models for NLP using PyT

An open source framework for seq2seq models in PyTorch.

pytorch-seq2seq Documentation This is a framework for sequence-to-sequence (seq2seq) models implemented in PyTorch. The framework has modularized and

Statsmodels: statistical modeling and econometrics in Python

About statsmodels statsmodels is a Python package that provides a complement to scipy for statistical computations including descriptive statistics an

Seamlessly integrate pydantic models in your Sphinx documentation.

Seamlessly integrate pydantic models in your Sphinx documentation.

SMPLpix: Neural Avatars from 3D Human Models

subject0_validation_poses.mp4 Left: SMPL-X human mesh registered with SMPLify-X, middle: SMPLpix render, right: ground truth video. SMPLpix: Neural Av

Generative Models for Graph-Based Protein Design

Graph-Based Protein Design This repo contains code for Generative Models for Graph-Based Protein Design by John Ingraham, Vikas Garg, Regina Barzilay

[EMNLP 2020] Keep CALM and Explore: Language Models for Action Generation in Text-based Games

Contextual Action Language Model (CALM) and the ClubFloyd Dataset Code and data for paper Keep CALM and Explore: Language Models for Action Generation

Codes for our paper "SentiLARE: Sentiment-Aware Language Representation Learning with Linguistic Knowledge" (EMNLP 2020)

SentiLARE: Sentiment-Aware Language Representation Learning with Linguistic Knowledge Introduction SentiLARE is a sentiment-aware pre-trained language

Meta Language-Specific Layers in Multilingual Language Models

Meta Language-Specific Layers in Multilingual Language Models This repo contains the source codes for our paper On Negative Interference in Multilingu

Django queries

Djaq Djaq - pronounced “Jack” - provides an instant remote API to your Django models data with a powerful query language. No server-side code beyond t

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

An Easy-to-use, Modular and Prolongable package of deep-learning based Named Entity Recognition Models.

DeepNER An Easy-to-use, Modular and Prolongable package of deep-learning based Named Entity Recognition Models. This repository contains complex Deep

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

ArviZ is a Python package for exploratory analysis of Bayesian models

ArviZ (pronounced "AR-vees") is a Python package for exploratory analysis of Bayesian models. Includes functions for posterior analysis, data storage, model checking, comparison and diagnostics

Task-based datasets, preprocessing, and evaluation for sequence models.

SeqIO: Task-based datasets, preprocessing, and evaluation for sequence models. SeqIO is a library for processing sequential data to be fed into downst

Create a netflix-like service using Django, React.js, & More.

Create a netflix-like service using Django. Learn advanced Django techniques to achieve amazing results like never before.

TorchFlare is a simple, beginner-friendly, and easy-to-use PyTorch Framework train your models effortlessly.

TorchFlare TorchFlare is a simple, beginner-friendly and an easy-to-use PyTorch Framework train your models without much effort. It provides an almost

Learning Energy-Based Models by Diffusion Recovery Likelihood

Learning Energy-Based Models by Diffusion Recovery Likelihood Ruiqi Gao, Yang Song, Ben Poole, Ying Nian Wu, Diederik P. Kingma Paper: https://arxiv.o

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

MMGeneration is a powerful toolkit for generative models, based on PyTorch and MMCV.

Documentation: https://mmgeneration.readthedocs.io/ Introduction English | 简体中文 MMGeneration is a powerful toolkit for generative models, especially f

Attack classification models with transferability, black-box attack; unrestricted adversarial attacks on imagenet

Attack classification models with transferability, black-box attack; unrestricted adversarial attacks on imagenet, CVPR2021 安全AI挑战者计划第六期:ImageNet无限制对抗攻击 决赛第四名(team name: Advers)

OCTIS: Comparing Topic Models is Simple! A python package to optimize and evaluate topic models (accepted at EACL2021 demo track)

OCTIS : Optimizing and Comparing Topic Models is Simple! OCTIS (Optimizing and Comparing Topic models Is Simple) aims at training, analyzing and compa

A simple way to train and use PyTorch models with multi-GPU, TPU, mixed-precision

🤗 Accelerate was created for PyTorch users who like to write the training loop of PyTorch models but are reluctant to write and maintain the boilerplate code needed to use multi-GPUs/TPU/fp16.

Pytorch implementation of our paper under review — Lottery Jackpots Exist in Pre-trained Models

Lottery Jackpots Exist in Pre-trained Models (Paper Link) Requirements Python = 3.7.4 Pytorch = 1.6.1 Torchvision = 0.4.1 Reproduce the Experiment

This repository contains the code for "Generating Datasets with Pretrained Language Models".

Datasets from Instructions (DINO 🦕 ) This repository contains the code for Generating Datasets with Pretrained Language Models. The paper introduces

A complete guide to start and improve in machine learning (ML)

A complete guide to start and improve in machine learning (ML), artificial intelligence (AI) in 2021 without ANY background in the field and stay up-to-date with the latest news and state-of-the-art techniques!

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

Bayesian Image Reconstruction using Deep Generative Models

Bayesian Image Reconstruction using Deep Generative Models R. Marinescu, D. Moyer, P. Golland For technical inquiries, please create a Github issue. F

Pretrained Pytorch face detection (MTCNN) and recognition (InceptionResnet) models

Face Recognition Using Pytorch Python 3.7 3.6 3.5 Status This is a repository for Inception Resnet (V1) models in pytorch, pretrained on VGGFace2 and

Sandbox for training deep learning networks

Deep learning networks This repo is used to research convolutional networks primarily for computer vision tasks. For this purpose, the repo contains (

Fine-tune pretrained Convolutional Neural Networks with PyTorch

Fine-tune pretrained Convolutional Neural Networks with PyTorch. Features Gives access to the most popular CNN architectures pretrained on ImageNet. A

Quickly comparing your image classification models with the state-of-the-art models (such as DenseNet, ResNet, ...)

Image Classification Project Killer in PyTorch This repo is designed for those who want to start their experiments two days before the deadline and ki

A Pythonic introduction to methods for scaling your data science and machine learning work to larger datasets and larger models, using the tools and APIs you know and love from the PyData stack (such as numpy, pandas, and scikit-learn).

This tutorial's purpose is to introduce Pythonistas to methods for scaling their data science and machine learning work to larger datasets and larger models, using the tools and APIs they know and love from the PyData stack (such as numpy, pandas, and scikit-learn).

xitorch: differentiable scientific computing library

xitorch is a PyTorch-based library of differentiable functions and functionals that can be widely used in scientific computing applications as well as deep learning.

Python library containing BART query generation and BERT-based Siamese models for neural retrieval.

Neural Retrieval Embedding-based Zero-shot Retrieval through Query Generation leverages query synthesis over large corpuses of unlabeled text (such as

QA-GNN: Question Answering using Language Models and Knowledge Graphs

QA-GNN: Question Answering using Language Models and Knowledge Graphs This repo provides the source code & data of our paper: QA-GNN: Reasoning with L

Try out deep learning models online on Google Colab

Try out deep learning models online on Google Colab

Mind the Trade-off: Debiasing NLU Models without Degrading the In-distribution Performance

Models for natural language understanding (NLU) tasks often rely on the idiosyncratic biases of the dataset, which make them brittle against test cases outside the training distribution.

Newt - a Gaussian process library in JAX.

Newt __ \/_ (' \`\ _\, \ \\/ /`\/\ \\ \ \\

Russian GPT3 models.

Russian GPT-3 models (ruGPT3XL, ruGPT3Large, ruGPT3Medium, ruGPT3Small) trained with 2048 sequence length with sparse and dense attention blocks. We also provide Russian GPT-2 large model (ruGPT2Large) trained with 1024 sequence length.

Introducing neural networks to predict stock prices

IntroNeuralNetworks in Python: A Template Project IntroNeuralNetworks is a project that introduces neural networks and illustrates an example of how o

Platform for building statistical models of cities and regions

UrbanSim UrbanSim is a platform for building statistical models of cities and regions. These models help forecast long-range patterns in real estate d

PyTorch implementation of Algorithm 1 of "On the Anatomy of MCMC-Based Maximum Likelihood Learning of Energy-Based Models"

Code for On the Anatomy of MCMC-Based Maximum Likelihood Learning of Energy-Based Models This repository will reproduce the main results from our pape

PyTorch implementation of the Deep SLDA method from our CVPRW-2020 paper "Lifelong Machine Learning with Deep Streaming Linear Discriminant Analysis"

Lifelong Machine Learning with Deep Streaming Linear Discriminant Analysis This is a PyTorch implementation of the Deep Streaming Linear Discriminant

《K-Adapter: Infusing Knowledge into Pre-Trained Models with Adapters》(2020)

K-Adapter: Infusing Knowledge into Pre-Trained Models with Adapters This repository is the implementation of the paper "K-Adapter: Infusing Knowledge

Learning recognition/segmentation models without end-to-end training. 40%-60% less GPU memory footprint. Same training time. Better performance.

InfoPro-Pytorch The Information Propagation algorithm for training deep networks with local supervision. (ICLR 2021) Revisiting Locally Supervised Lea

Set of models for classifcation of 3D volumes

Classification models 3D Zoo - Keras and TF.Keras This repository contains 3D variants of popular CNN models for classification like ResNets, DenseNet

Code for pre-training CharacterBERT models (as well as BERT models).

Pre-training CharacterBERT (and BERT) This is a repository for pre-training BERT and CharacterBERT. DISCLAIMER: The code was largely adapted from an o

Official PyTorch implementation of MX-Font (Multiple Heads are Better than One: Few-shot Font Generation with Multiple Localized Experts)

Introduction Pytorch implementation of Multiple Heads are Better than One: Few-shot Font Generation with Multiple Localized Expert. | paper Song Park1

A collection of scripts to preprocess ASR datasets and finetune language-specific Wav2Vec2 XLSR models

wav2vec-toolkit A collection of scripts to preprocess ASR datasets and finetune language-specific Wav2Vec2 XLSR models This repository accompanies the

Source code for the GPT-2 story generation models in the EMNLP 2020 paper "STORIUM: A Dataset and Evaluation Platform for Human-in-the-Loop Story Generation"

Storium GPT-2 Models This is the official repository for the GPT-2 models described in the EMNLP 2020 paper [STORIUM: A Dataset and Evaluation Platfor

Code, Data and Demo for Paper: Controllable Generation from Pre-trained Language Models via Inverse Prompting

InversePrompting Paper: Controllable Generation from Pre-trained Language Models via Inverse Prompting Code: The code is provided in the "chinese_ip"

Official code for the CVPR 2021 paper "How Well Do Self-Supervised Models Transfer?"

How Well Do Self-Supervised Models Transfer? This repository hosts the code for the experiments in the CVPR 2021 paper How Well Do Self-Supervised Mod

monolish: MONOlithic Liner equation Solvers for Highly-parallel architecture

monolish is a linear equation solver library that monolithically fuses variable data type, matrix structures, matrix data format, vendor specific data transfer APIs, and vendor specific numerical algebra libraries.

(ImageNet pretrained models) The official pytorch implemention of the TPAMI paper "Res2Net: A New Multi-scale Backbone Architecture"

Res2Net The official pytorch implemention of the paper "Res2Net: A New Multi-scale Backbone Architecture" Our paper is accepted by IEEE Transactions o

Top2Vec is an algorithm for topic modeling and semantic search.

Top2Vec is an algorithm for topic modeling and semantic search. It automatically detects topics present in text and generates jointly embedded topic, document and word vectors.

Neural models of common sense. 🤖

Unicorn on Rainbow Neural models of common sense. This repository is for the paper: Unicorn on Rainbow: A Universal Commonsense Reasoning Model on a N

This project is part of Eleuther AI's quest to create a massive repository of high quality text data for training language models.

This project is part of Eleuther AI's quest to create a massive repository of high quality text data for training language models.

A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''.

P-tuning A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''. How to use our code We have released the code