106 Repositories

Python gpt-j Libraries

Code for CVPR 2022 paper "Bailando: 3D dance generation via Actor-Critic GPT with Choreographic Memory"

Bailando Code for CVPR 2022 (oral) paper "Bailando: 3D dance generation via Actor-Critic GPT with Choreographic Memory" [Paper] | [Project Page] | [Vi

Lyrics generation with GPT2-based Transformer

HuggingArtists - Train a model to generate lyrics Create AI-Artist in just 5 minutes! 🚀 Run the demo notebook to train 🚀 Run the GUI demo to test Di

Language Models Can See: Plugging Visual Controls in Text Generation

Language Models Can See: Plugging Visual Controls in Text Generation Authors: Yixuan Su, Tian Lan, Yahui Liu, Fangyu Liu, Dani Yogatama, Yan Wang, Lin

Contains the code and data for our #ICSE2022 paper titled as "CodeFill: Multi-token Code Completion by Jointly Learning from Structure and Naming Sequences"

CodeFill This repository contains the code for our paper titled as "CodeFill: Multi-token Code Completion by Jointly Learning from Structure and Namin

Guide to using pre-trained large language models of source code

Large Models of Source Code I occasionally train and publicly release large neural language models on programs, including PolyCoder. Here, I describe

A repository to run gpt-j-6b on low vram machines (4.2 gb minimum vram for 2000 token context, 3.5 gb for 1000 token context). Model loading takes 12gb free ram.

Basic-UI-for-GPT-J-6B-with-low-vram A repository to run GPT-J-6B on low vram systems by using both ram, vram and pinned memory. There seem to be some

Prompt tuning toolkit for GPT-2 and GPT-Neo

mkultra mkultra is a prompt tuning toolkit for GPT-2 and GPT-Neo. Prompt tuning injects a string of 20-100 special tokens into the context in order to

MAGMA - a GPT-style multimodal model that can understand any combination of images and language

MAGMA -- Multimodal Augmentation of Generative Models through Adapter-based Finetuning Authors repo (alphabetical) Constantin (CoEich), Mayukh (Mayukh

Simple Annotated implementation of GPT-NeoX in PyTorch

Simple Annotated implementation of GPT-NeoX in PyTorch This is a simpler implementation of GPT-NeoX in PyTorch. We have taken out several optimization

Creating a chess engine using GPT-3

GPT3Chess Creating a chess engine using GPT-3 Code for my article : https://towardsdatascience.com/gpt-3-play-chess-d123a96096a9 My game (white) vs GP

Under the hood working of transformers, fine-tuning GPT-3 models, DeBERTa, vision models, and the start of Metaverse, using a variety of NLP platforms: Hugging Face, OpenAI API, Trax, and AllenNLP

Transformers-for-NLP-2nd-Edition @copyright 2022, Packt Publishing, Denis Rothman Contact me for any question you have on LinkedIn Get the book on Ama

Building Ellee — A GPT-3 and Computer Vision Powered Talking Robotic Teddy Bear With Human Level Conversation Intelligence

Using an object detection and facial recognition system built on MobileNetSSDV2 and Dlib and running on an NVIDIA Jetson Nano, a GPT-3 model, Google Speech Recognition, Amazon Polly and servo motors, I built Ellee - a robotic teddy bear who can move her head and converse naturally.

Rank-One Model Editing for Locating and Editing Factual Knowledge in GPT

Rank-One Model Editing (ROME) This repository provides an implementation of Rank-One Model Editing (ROME) on auto-regressive transformers (GPU-only).

Galois is an auto code completer for code editors (or any text editor) based on OpenAI GPT-2.

Galois is an auto code completer for code editors (or any text editor) based on OpenAI GPT-2. It is trained (finetuned) on a curated list of approximately 45K Python (~470MB) files gathered from the Github. Currently, it just works properly on Python but not bad at other languages (thanks to GPT-2's power).

AI-Bot - 一个基于watermelon改造的OpenAI-GPT-2的智能机器人

AI-Bot 一个基于watermelon改造的OpenAI-GPT-2的智能机器人 在Binder上直接运行测试 目前有两种实现方式 TF2的GPT-2 TF

Backend for the Autocomplete platform. An AI assisted coding platform.

Introduction A custom predictor allows you to deploy your own prediction implementation, useful when the existing serving implementations don't fit yo

This repository serves as a place to document a toy attempt on how to create a generative text model in Catalan, based on GPT-2

GPT-2 Catalan playground and scripts to train a GPT-2 model either from scrath or from another pretrained model.

Weaviate demo with the text2vec-openai module

Weaviate demo with the text2vec-openai module This repository contains an example of how to use the Weaviate text2vec-openai module. When using this d

Use Tensorflow2.7.0 Build OpenAI'GPT-2

TF2_GPT-2 Use Tensorflow2.7.0 Build OpenAI'GPT-2 使用最新tensorflow2.7.0构建openai官方的GPT-2 NLP模型 优点 使用无监督技术 拥有大量词汇量 可实现续写(堪比“xx梦续写”) 实现对话后续将应用于FloatTech的Bot

ChatBot-Pytorch - A GPT-2 ChatBot implemented using Pytorch and Huggingface-transformers

ChatBot-Pytorch A GPT-2 ChatBot implemented using Pytorch and Huggingface-transf

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents

Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents [Project Page] [Paper] [Video] Wenlong Huang1, Pieter Abbee

This repository contains demos I made with the Transformers library by HuggingFace.

Transformers-Tutorials Hi there! This repository contains demos I made with the Transformers library by 🤗 HuggingFace. Currently, all of them are imp

Retraining OpenAI's GPT-2 on Discord Chats

Train OpenAI's GPT-2 on Discord Chats Retraining a Text Generation Model on Discord Chats using gpt-2-simple that wraps existing model fine-tuning and

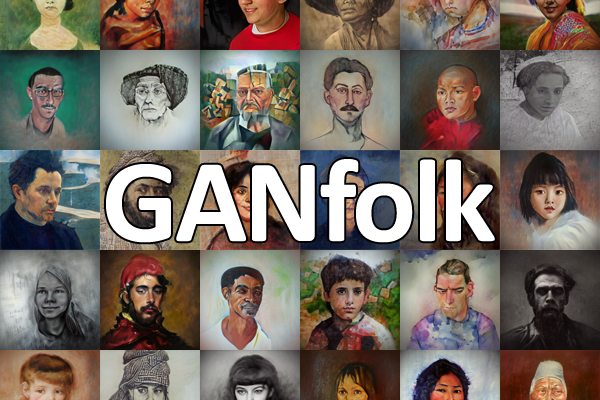

GANfolk: Using AI to create portraits of fictional people to sell as NFTs

GANfolk are AI-generated renderings of fictional people. Each image in the collection was created by a pair of Generative Adversarial Networks (GANs) with names and backstories also created with AI. The GANs were trained using portraits from artists like Renoir, Turner, and Modigliani in addition to open-source, modern photos.

Chinese version of GPT2 training code, using BERT tokenizer.

GPT2-Chinese Description Chinese version of GPT2 training code, using BERT tokenizer or BPE tokenizer. It is based on the extremely awesome repository

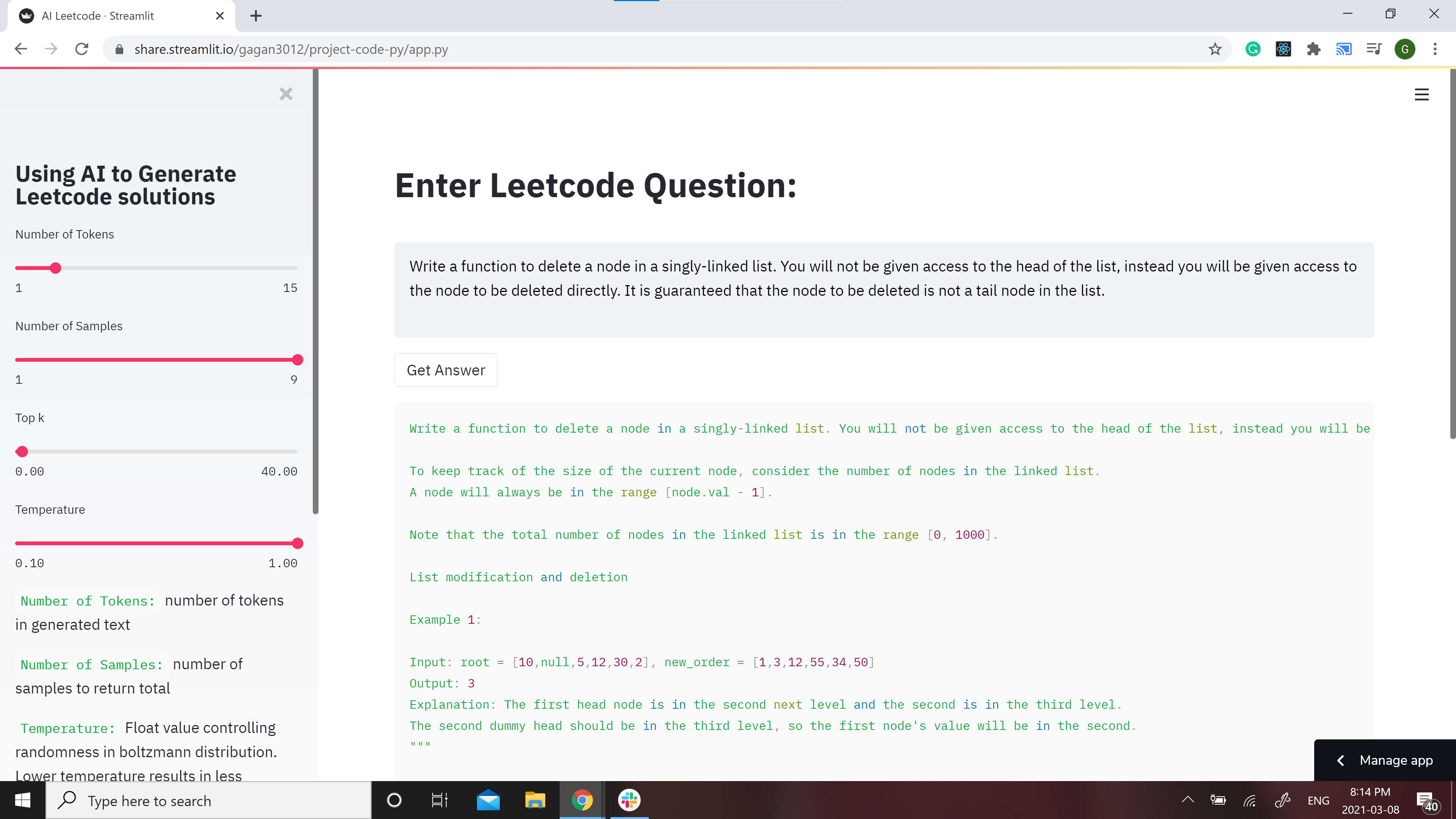

GPT-2 Model for Leetcode Questions in python

Leetcode using AI 🤖 GPT-2 Model for Leetcode Questions in python New demo here: https://huggingface.co/spaces/gagan3012/project-code-py Note: the Ans

Honor's thesis project analyzing whether the GPT-2 model can more effectively generate free-verse or structured poetry.

gpt2-poetry The following code is for my senior honor's thesis project, under the guidance of Dr. Keith Holyoak at the University of California, Los A

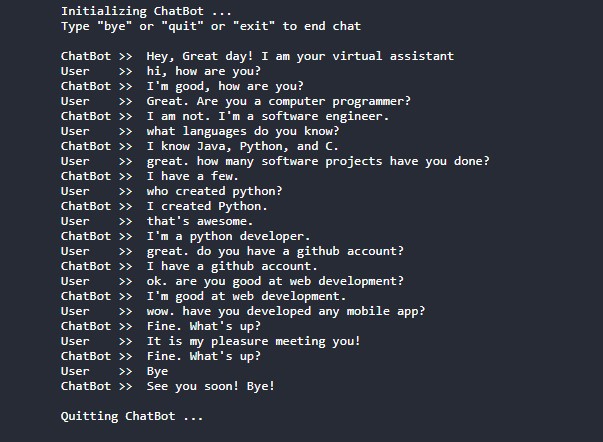

Conversational-AI-ChatBot - Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users!

Conversational AI ChatBot Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users! In this project? Thi

Twewy-discord-chatbot - Build a Discord AI Chatbot that Speaks like Your Favorite Character

Build a Discord AI Chatbot that Speaks like Your Favorite Character! This is a Discord AI Chatbot that uses the Microsoft DialoGPT conversational mode

Bot to connect a real Telegram user, simulating responses with OpenAI's davinci GPT-3 model.

AI-BOT Bot to connect a real Telegram user, simulating responses with OpenAI's davinci GPT-3 model.

A website which allows you to play with the GPT-2 transformer

transformers A website which allows you to play with the GPT-2 model Built with ❤️ by raphtlw Table of contents Model Setup About Contributors Model T

📜 GPT-2 Rhyming Limerick and Haiku models using data augmentation

Well-formed Limericks and Haikus with GPT2 📜 GPT-2 Rhyming Limerick and Haiku models using data augmentation In collaboration with Matthew Korahais &

The source code of "Language Models are Few-shot Multilingual Learners" (MRL @ EMNLP 2021)

Language Models are Few-shot Multilingual Learners Paper This is the source code of the paper [Arxiv] [ACL Anthology]: This code has been written usin

Large-scale pretraining for dialogue

A State-of-the-Art Large-scale Pretrained Response Generation Model (DialoGPT) This repository contains the source code and trained model for a large-

Few-shot Natural Language Generation for Task-Oriented Dialog

Few-shot Natural Language Generation for Task-Oriented Dialog This repository contains the dataset, source code and trained model for the following pa

An implementation of model parallel GPT-2 and GPT-3-style models using the mesh-tensorflow library.

GPT Neo 🎉 1T or bust my dudes 🎉 An implementation of model & data parallel GPT3-like models using the mesh-tensorflow library. If you're just here t

The projects lets you extract glossary words and their definitions from a given piece of text automatically using NLP techniques

Unsupervised technique to Glossary and Definition Extraction Code Files GPT2-DefinitionModel.ipynb - GPT-2 model for definition generation. Data_Gener

Train and use generative text models in a few lines of code.

blather Train and use generative text models in a few lines of code. To see blather in action check out the colab notebook! Installation Use the packa

GPT-3: Language Models are Few-Shot Learners

GPT-3: Language Models are Few-Shot Learners arXiv link Recent work has demonstrated substantial gains on many NLP tasks and benchmarks by pre-trainin

Modified GPT using average pooling to reduce the softmax attention memory constraints.

NLP-GPT-Upsampling This repository contains an implementation of Open AI's GPT Model. In particular, this implementation takes inspiration from the Ny

A paper list of pre-trained language models (PLMs).

Large-scale pre-trained language models (PLMs) such as BERT and GPT have achieved great success and become a milestone in NLP.

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

Code Generation using a large neural network called GPT-J

CodeGenX is a Code Generation system powered by Artificial Intelligence! It is delivered to you in the form of a Visual Studio Code Extension and is Free and Open-source!

An easy to use Natural Language Processing library and framework for predicting, training, fine-tuning, and serving up state-of-the-art NLP models.

Welcome to AdaptNLP A high level framework and library for running, training, and deploying state-of-the-art Natural Language Processing (NLP) models

Finetune gpt-2 in google colab

gpt-2-colab finetune gpt-2 in google colab sample result (117M) from retraining on A Tale of Two Cities by Charles Di

A python project made to generate code using either OpenAI's codex or GPT-J (Although not as good as codex)

CodeJ A python project made to generate code using either OpenAI's codex or GPT-J (Although not as good as codex) Install requirements pip install -r

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

Host your own GPT-3 Discord bot

GPT3 Discord Bot Host your own GPT-3 Discord bot i'd host and make the bot invitable myself, however GPT3 terms of service prohibit public use of GPT3

Explore different way to mix speech model(wav2vec2, hubert) and nlp model(BART,T5,GPT) together

SpeechMix Explore different way to mix speech model(wav2vec2, hubert) and nlp model(BART,T5,GPT) together. Introduction For the same input: from datas

Fine-tune GPT-3 with a Google Chat conversation history

Google Chat GPT-3 This repo will help you fine-tune GPT-3 with a Google Chat conversation history. The trained model will be able to converse as one o

Simple CLI prompt for easy I/O with OpenAI's API

openai-cli-prompt Simple CLI prompt for easy I/O with OpenAI's API Quickstart Create a .env file with: OPENAI_API_KEY=Your OpenAI API Key Configure

PatrickStar enables Larger, Faster, Greener Pretrained Models for NLP. Democratize AI for everyone.

PatrickStar: Parallel Training of Large Language Models via a Chunk-based Memory Management Meeting PatrickStar Pre-Trained Models (PTM) are becoming

PatrickStar enables Larger, Faster, Greener Pretrained Models for NLP. Democratize AI for everyone.

PatrickStar enables Larger, Faster, Greener Pretrained Models for NLP. Democratize AI for everyone.

Web Scraping, Document Deduplication & GPT-2 Fine-tuning with a newly created scam dataset.

Web Scraping, Document Deduplication & GPT-2 Fine-tuning with a newly created scam dataset.

Neural text generators like the GPT models promise a general-purpose means of manipulating texts.

Boolean Prompting for Neural Text Generators Neural text generators like the GPT models promise a general-purpose means of manipulating texts. These m

GPT-3 command line interaction

Writer_unblock Straight-forward command line interfacing with GPT-3. Finding yourself stuck at a conceptual stage? Spinning your wheels needlessly on

Simple Text-Generator with OpenAI gpt-2 Pytorch Implementation

GPT2-Pytorch with Text-Generator Better Language Models and Their Implications Our model, called GPT-2 (a successor to GPT), was trained simply to pre

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

Train GPT-3 model on V100(16GB Mem) Using improved Transformer.

GPT-X using transformer pytorch

Ongoing research training transformer language models at scale, including: BERT & GPT-2

Megatron (1 and 2) is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA.

Implementation of Token Shift GPT - An autoregressive model that solely relies on shifting the sequence space for mixing

Token Shift GPT Implementation of Token Shift GPT - An autoregressive model that relies solely on shifting along the sequence dimension and feedforwar

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

GPT-Code-Clippy (GPT-CC) is an open source version of GitHub Copilot

GPT-Code-Clippy (GPT-CC) is an open source version of GitHub Copilot, a language model -- based on GPT-3, called GPT-Codex -- that is fine-tuned on publicly available code from GitHub.

Ongoing research training transformer language models at scale, including: BERT & GPT-2

What is this fork of Megatron-LM and Megatron-DeepSpeed This is a detached fork of https://github.com/microsoft/Megatron-DeepSpeed, which in itself is

LightSeq is a high performance training and inference library for sequence processing and generation implemented in CUDA

LightSeq: A High Performance Library for Sequence Processing and Generation

API for the GPT-J language model 🦜. Including a FastAPI backend and a streamlit frontend

gpt-j-api 🦜 An API to interact with the GPT-J language model. You can use and test the model in two different ways: Streamlit web app at http://api.v

Submit issues and feature requests for our API here.

AIx GPT API Submit issues and feature requests for our API here. See https://apps.aixsolutionsgroup.com for more info. Python Quick Start pip install

GPT-Code-Clippy (GPT-CC) is an open source version of GitHub Copilot, a language model

GPT-Code-Clippy (GPT-CC) is an open source version of GitHub Copilot, a language model -- based on GPT-3, called GPT-Codex -- that is fine-tuned on publicly available code from GitHub.

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

Simple Text-Generator with OpenAI gpt-2 Pytorch Implementation

GPT2-Pytorch with Text-Generator Better Language Models and Their Implications Our model, called GPT-2 (a successor to GPT), was trained simply to pre

Code for "LoRA: Low-Rank Adaptation of Large Language Models"

LoRA: Low-Rank Adaptation of Large Language Models This repo contains the implementation of LoRA in GPT-2 and steps to replicate the results in our re

Easy-to-use CPM for Chinese text generation

CPM 项目描述 CPM(Chinese Pretrained Models)模型是北京智源人工智能研究院和清华大学发布的中文大规模预训练模型。官方发布了三种规模的模型,参数量分别为109M、334M、2.6B,用户需申请与通过审核,方可下载。 由于原项目需要考虑大模型的训练和使用,需要安装较为复杂

Generate product descriptions, blogs, ads and more using GPT architecture with a single request to TextCortex API a.k.a Hemingwai

TextCortex - HemingwAI Generate product descriptions, blogs, ads and more using GPT architecture with a single request to TextCortex API a.k.a Hemingw

Training data extraction on GPT-2

Training data extraction from GPT-2 This repository contains code for extracting training data from GPT-2, following the approach outlined in the foll

Fine-Tune EleutherAI GPT-Neo to Generate Netflix Movie Descriptions in Only 47 Lines of Code Using Hugginface And DeepSpeed

GPT-Neo-2.7B Fine-Tuning Example Using HuggingFace & DeepSpeed Installation cd venv/bin ./pip install -r ../../requirements.txt ./pip install deepspe

A GPT, made only of MLPs, in Jax

MLP GPT - Jax (wip) A GPT, made only of MLPs, in Jax. The specific MLP to be used are gMLPs with the Spatial Gating Units. Working Pytorch implementat

GPT, but made only out of gMLPs

GPT - gMLP This repository will attempt to crack long context autoregressive language modeling (GPT) using variations of gMLPs. Specifically, it will

Integrating the Best of TF into PyTorch, for Machine Learning, Natural Language Processing, and Text Generation. This is part of the CASL project: http://casl-project.ai/

Texar-PyTorch is a toolkit aiming to support a broad set of machine learning, especially natural language processing and text generation tasks. Texar

[EMNLP 2020] Keep CALM and Explore: Language Models for Action Generation in Text-based Games

Contextual Action Language Model (CALM) and the ClubFloyd Dataset Code and data for paper Keep CALM and Explore: Language Models for Action Generation

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

LightSeq: A High-Performance Inference Library for Sequence Processing and Generation

LightSeq is a high performance inference library for sequence processing and generation implemented in CUDA. It enables highly efficient computation of modern NLP models such as BERT, GPT2, Transformer, etc. It is therefore best useful for Machine Translation, Text Generation, Dialog, Language Modelling, and other related tasks using these models.

KE-Dialogue: Injecting knowledge graph into a fully end-to-end dialogue system.

Learning Knowledge Bases with Parameters for Task-Oriented Dialogue Systems This is the implementation of the paper: Learning Knowledge Bases with Par

Code for producing Japanese GPT-2 provided by rinna Co., Ltd.

japanese-gpt2 This repository provides the code for training Japanese GPT-2 models. This code has been used for producing japanese-gpt2-medium release

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Source code for the GPT-2 story generation models in the EMNLP 2020 paper "STORIUM: A Dataset and Evaluation Platform for Human-in-the-Loop Story Generation"

Storium GPT-2 Models This is the official repository for the GPT-2 models described in the EMNLP 2020 paper [STORIUM: A Dataset and Evaluation Platfor

Transformer related optimization, including BERT, GPT

This repository provides a script and recipe to run the highly optimized transformer-based encoder and decoder component, and it is tested and maintained by NVIDIA.

A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''.

P-tuning A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''. How to use our code We have released the code

Guide: Finetune GPT2-XL (1.5 Billion Parameters) and GPT-NEO (2.7 B) on a single 16 GB VRAM V100 Google Cloud instance with Huggingface Transformers using DeepSpeed

Guide: Finetune GPT2-XL (1.5 Billion Parameters) and GPT-NEO (2.7 Billion Parameters) on a single 16 GB VRAM V100 Google Cloud instance with Huggingfa

Code associated with the "Data Augmentation using Pre-trained Transformer Models" paper

Data Augmentation using Pre-trained Transformer Models Code associated with the Data Augmentation using Pre-trained Transformer Models paper Code cont

Few-shot Learning of GPT-3

Few-shot Learning With Language Models This is a codebase to perform few-shot "in-context" learning using language models similar to the GPT-3 paper.

Kashgari is a production-level NLP Transfer learning framework built on top of tf.keras for text-labeling and text-classification, includes Word2Vec, BERT, and GPT2 Language Embedding.

Kashgari Overview | Performance | Installation | Documentation | Contributing 🎉 🎉 🎉 We released the 2.0.0 version with TF2 Support. 🎉 🎉 🎉 If you

Python package to easily retrain OpenAI's GPT-2 text-generating model on new texts

gpt-2-simple A simple Python package that wraps existing model fine-tuning and generation scripts for OpenAI's GPT-2 text generation model (specifical

Toolkit for Machine Learning, Natural Language Processing, and Text Generation, in TensorFlow. This is part of the CASL project: http://casl-project.ai/

Texar is a toolkit aiming to support a broad set of machine learning, especially natural language processing and text generation tasks. Texar provides

🛸 Use pretrained transformers like BERT, XLNet and GPT-2 in spaCy

spacy-transformers: Use pretrained transformers like BERT, XLNet and GPT-2 in spaCy This package provides spaCy components and architectures to use tr

💥 Fast State-of-the-Art Tokenizers optimized for Research and Production

Provides an implementation of today's most used tokenizers, with a focus on performance and versatility. Main features: Train new vocabularies and tok

🤗Transformers: State-of-the-art Natural Language Processing for Pytorch and TensorFlow 2.0.

State-of-the-art Natural Language Processing for PyTorch and TensorFlow 2.0 🤗 Transformers provides thousands of pretrained models to perform tasks o

Shirt Bot is a discord bot which uses GPT-3 to generate text

SHIRT BOT · Shirt Bot is a discord bot which uses GPT-3 to generate text. Made by Cyclcrclicly#3420 (474183744685604865) on Discord. Support Server EX

Kashgari is a production-level NLP Transfer learning framework built on top of tf.keras for text-labeling and text-classification, includes Word2Vec, BERT, and GPT2 Language Embedding.

Kashgari Overview | Performance | Installation | Documentation | Contributing 🎉 🎉 🎉 We released the 2.0.0 version with TF2 Support. 🎉 🎉 🎉 If you

Python package to easily retrain OpenAI's GPT-2 text-generating model on new texts

gpt-2-simple A simple Python package that wraps existing model fine-tuning and generation scripts for OpenAI's GPT-2 text generation model (specifical