197 Repositories

Python gpu-dataframes Libraries

impy is an all-in-one image analysis library, equipped with parallel processing, GPU support, GUI based tools and so on.

impy is All You Need in Image Analysis impy is an all-in-one image analysis library, equipped with parallel processing, GPU support, GUI based tools a

Eland is a Python Elasticsearch client for exploring and analyzing data in Elasticsearch with a familiar Pandas-compatible API.

Python Client and Toolkit for DataFrames, Big Data, Machine Learning and ETL in Elasticsearch

Huggingface inference with GPU Docker on AWS

This repository contains code to containerize and deploy a GPU docker on AWS for summarization task. Find a detailed blogpost here Youtube Video Versi

Blue noise image stippling in Processing (requires GPU)

Blue noise image stippling in Processing (requires GPU)

A GUI for Face Recognition, based upon Docker, Tkinter, GPU and a camera device.

Face Recognition GUI This repository is a GUI version of Face Recognition by Adam Geitgey, where e.g. Docker and Tkinter are utilized. All the materia

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

📊📈 Serves up Pandas dataframes via the Django REST Framework for use in client-side (i.e. d3.js) visualizations and offline analysis (e.g. Excel)

📊📈 Serves up Pandas dataframes via the Django REST Framework for use in client-side (i.e. d3.js) visualizations and offline analysis (e.g. Excel)

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

BMInf (Big Model Inference) is a low-resource inference package for large-scale pretrained language models (PLMs).

GPU-accelerated PyTorch implementation of Zero-shot User Intent Detection via Capsule Neural Networks

GPU-accelerated PyTorch implementation of Zero-shot User Intent Detection via Capsule Neural Networks This repository implements a capsule model Inten

PyTorch Language Model for 1-Billion Word (LM1B / GBW) Dataset

PyTorch Large-Scale Language Model A Large-Scale PyTorch Language Model trained on the 1-Billion Word (LM1B) / (GBW) dataset Latest Results 39.98 Perp

📊📈 Serves up Pandas dataframes via the Django REST Framework for use in client-side (i.e. d3.js) visualizations and offline analysis (e.g. Excel)

Django REST Pandas Django REST Framework + pandas = A Model-driven Visualization API Django REST Pandas (DRP) provides a simple way to generate and se

Implementation of a Transformer, but completely in Triton

Transformer in Triton (wip) Implementation of a Transformer, but completely in Triton. I'm completely new to lower-level neural net code, so this repo

WarpDrive: Extremely Fast End-to-End Deep Multi-Agent Reinforcement Learning on a GPU

WarpDrive is a flexible, lightweight, and easy-to-use open-source reinforcement learning (RL) framework that implements end-to-end multi-agent RL on a single GPU (Graphics Processing Unit).

A3C LSTM Atari with Pytorch plus A3G design

NEWLY ADDED A3G A NEW GPU/CPU ARCHITECTURE OF A3C FOR SUBSTANTIALLY ACCELERATED TRAINING!! RL A3C Pytorch NEWLY ADDED A3G!! New implementation of A3C

Run Effective Large Batch Contrastive Learning on Limited Memory GPU

Gradient Cache Gradient Cache is a simple technique for unlimitedly scaling contrastive learning batch far beyond GPU memory constraint. This means tr

A modified version of DeepMind's Alphafold2 to divide CPU part (MSA and template searching) and GPU part (prediction model)

ParallelFold Author: Bozitao Zhong This is a modified version of DeepMind's Alphafold2 to divide CPU part (MSA and template searching) and GPU part (p

This demo showcase the use of onnxruntime-rs with a GPU on CUDA 11 to run Bert in a data pipeline with Rust.

Demo BERT ONNX pipeline written in rust This demo showcase the use of onnxruntime-rs with a GPU on CUDA 11 to run Bert in a data pipeline with Rust. R

PyTorch Language Model for 1-Billion Word (LM1B / GBW) Dataset

PyTorch Large-Scale Language Model A Large-Scale PyTorch Language Model trained on the 1-Billion Word (LM1B) / (GBW) dataset Latest Results 39.98 Perp

GPU-accelerated PyTorch implementation of Zero-shot User Intent Detection via Capsule Neural Networks

GPU-accelerated PyTorch implementation of Zero-shot User Intent Detection via Capsule Neural Networks This repository implements a capsule model Inten

The MLOps platform for innovators 🚀

DS2.ai is an integrated AI operation solution that supports all stages from custom AI development to deployment. It is an AI-specialized platform service that collects data, builds a training dataset through data labeling, and enables automatic development of artificial intelligence and easy deployment and operation.

Convert Python 3 code to CUDA code.

Py2CUDA Convert python code to CUDA. Usage To convert a python file say named py_file.py to CUDA, run python generate_cuda.py --file py_file.py --arch

A community run, 5-day PyTorch Deep Learning Bootcamp

Deep Learning Winter School, November 2107. Tel Aviv Deep Learning Bootcamp : http://deep-ml.com. About Tel-Aviv Deep Learning Bootcamp is an intensiv

A very simple and small path tracer written in pytorch meant to be run on the GPU

MentisOculi Pytorch Path Tracer A very simple and small path tracer written in pytorch meant to be run on the GPU Why use pytorch and not some other c

ocaml-torch provides some ocaml bindings for the PyTorch tensor library.

ocaml-torch provides some ocaml bindings for the PyTorch tensor library. This brings to OCaml NumPy-like tensor computations with GPU acceleration and tape-based automatic differentiation.

🛠 All-in-one web-based IDE specialized for machine learning and data science.

All-in-one web-based development environment for machine learning Getting Started • Features & Screenshots • Support • Report a Bug • FAQ • Known Issu

QPT-Quick packaging tool 前项式Python环境快捷封装工具

QPT - Quick packaging tool 快捷封装工具 GitHub主页 | Gitee主页 QPT是一款可以“模拟”开发环境的多功能封装工具,一行命令即可将普通的Python脚本打包成EXE可执行程序,与此同时还可轻松引入CUDA等深度学习加速库, 尽可能在用户使用时复现您的开发环境。

Complete U-net Implementation with keras

U Net Lowered with Keras Complete U-net Implementation with keras Original Paper Link : https://arxiv.org/abs/1505.04597 Special Implementations : The

DistML is a Ray extension library to support large-scale distributed ML training on heterogeneous multi-node multi-GPU clusters

DistML is a Ray extension library to support large-scale distributed ML training on heterogeneous multi-node multi-GPU clusters

Request based Python module(s) to help with the Newegg raffle.

Newegg Shuffle Python module(s) to help you with the Newegg raffle How to use $ git clone https://github.com/Matthew17-21/Newegg-Shuffle $ cd Newegg-S

🔮 Execution time predictions for deep neural network training iterations across different GPUs.

Habitat: A Runtime-Based Computational Performance Predictor for Deep Neural Network Training Habitat is a tool that predicts a deep neural network's

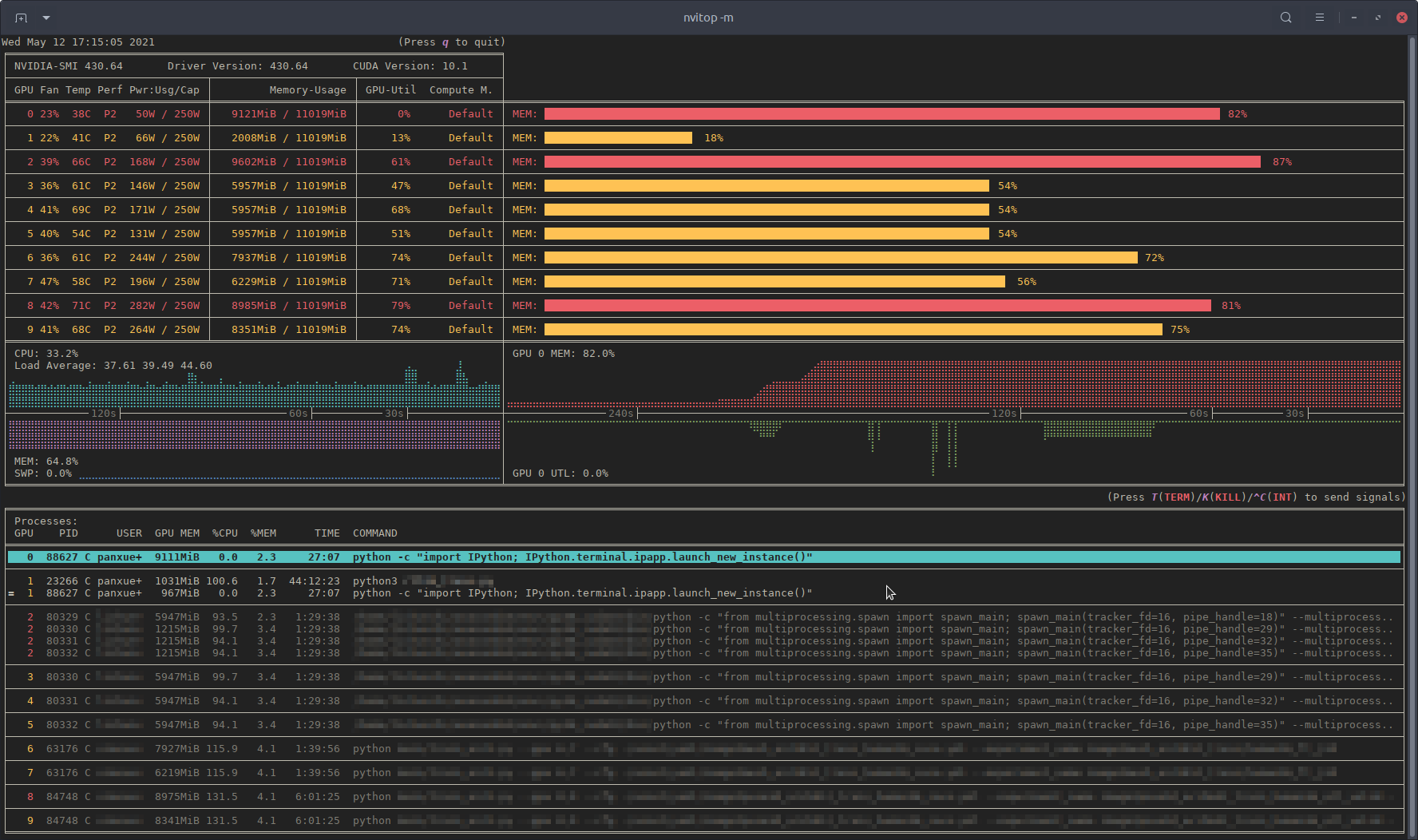

nvitop, an interactive NVIDIA-GPU process viewer, the one-stop solution for GPU process management

An interactive NVIDIA-GPU process viewer, the one-stop solution for GPU process management.

A lightweight (serverless) native python parallel processing framework based on simple decorators and call graphs.

A lightweight (serverless) native python parallel processing framework based on simple decorators and call graphs, supporting both control flow and dataflow execution paradigms as well as de-centralized CPU & GPU scheduling.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

TorchPQ is a python library for Approximate Nearest Neighbor Search (ANNS) and Maximum Inner Product Search (MIPS) on GPU using Product Quantization (PQ) algorithm.

Efficient implementations of Product Quantization and its variants using Pytorch and CUDA

A simple way to train and use PyTorch models with multi-GPU, TPU, mixed-precision

🤗 Accelerate was created for PyTorch users who like to write the training loop of PyTorch models but are reluctant to write and maintain the boilerplate code needed to use multi-GPUs/TPU/fp16.

Learn computer graphics by writing GPU shaders!

This repo contains a selection of projects designed to help you learn the basics of computer graphics. We'll be writing shaders to render interactive two-dimensional and three-dimensional scenes.

![[Open Source]. The improved version of AnimeGAN. Landscape photos/videos to anime](https://github.com/TachibanaYoshino/AnimeGANv2/raw/master/AnimeGANv2.png)

[Open Source]. The improved version of AnimeGAN. Landscape photos/videos to anime

[Open Source]. The improved version of AnimeGAN. Landscape photos/videos to anime

Learning recognition/segmentation models without end-to-end training. 40%-60% less GPU memory footprint. Same training time. Better performance.

InfoPro-Pytorch The Information Propagation algorithm for training deep networks with local supervision. (ICLR 2021) Revisiting Locally Supervised Lea

monolish: MONOlithic Liner equation Solvers for Highly-parallel architecture

monolish is a linear equation solver library that monolithically fuses variable data type, matrix structures, matrix data format, vendor specific data transfer APIs, and vendor specific numerical algebra libraries.

An open-source library of algorithms to analyse time series in GPU and CPU.

An open-source library of algorithms to analyse time series in GPU and CPU.

Guide: Finetune GPT2-XL (1.5 Billion Parameters) and GPT-NEO (2.7 B) on a single 16 GB VRAM V100 Google Cloud instance with Huggingface Transformers using DeepSpeed

Guide: Finetune GPT2-XL (1.5 Billion Parameters) and GPT-NEO (2.7 Billion Parameters) on a single 16 GB VRAM V100 Google Cloud instance with Huggingfa

A highly efficient and modular implementation of Gaussian Processes in PyTorch

GPyTorch GPyTorch is a Gaussian process library implemented using PyTorch. GPyTorch is designed for creating scalable, flexible, and modular Gaussian

Differentiable SDE solvers with GPU support and efficient sensitivity analysis.

PyTorch Implementation of Differentiable SDE Solvers This library provides stochastic differential equation (SDE) solvers with GPU support and efficie

Differentiable ODE solvers with full GPU support and O(1)-memory backpropagation.

PyTorch Implementation of Differentiable ODE Solvers This library provides ordinary differential equation (ODE) solvers implemented in PyTorch. Backpr

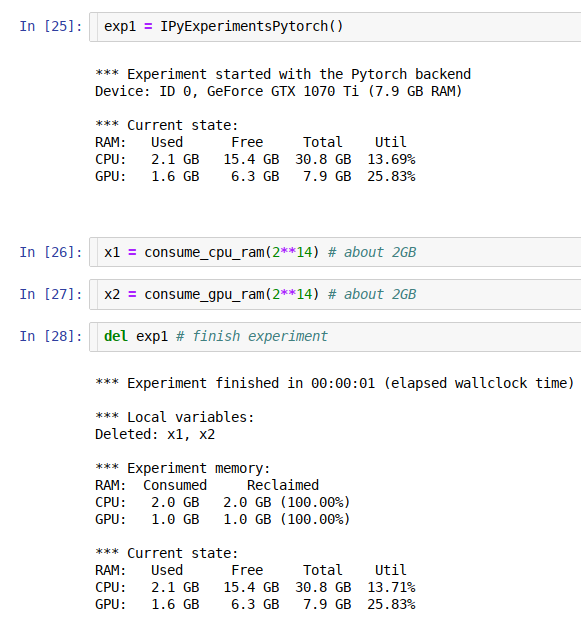

jupyter/ipython experiment containers for GPU and general RAM re-use

ipyexperiments jupyter/ipython experiment containers and utils for profiling and reclaiming GPU and general RAM, and detecting memory leaks. About Thi

Library for faster pinned CPU - GPU transfer in Pytorch

SpeedTorch Faster pinned CPU tensor - GPU Pytorch variabe transfer and GPU tensor - GPU Pytorch variable transfer, in certain cases. Update 9-29-1

A Python module for getting the GPU status from NVIDA GPUs using nvidia-smi programmically in Python

GPUtil GPUtil is a Python module for getting the GPU status from NVIDA GPUs using nvidia-smi. GPUtil locates all GPUs on the computer, determines thei

General purpose GPU compute framework for cross vendor graphics cards (AMD, Qualcomm, NVIDIA & friends). Blazing fast, mobile-enabled, asynchronous and optimized for advanced GPU data processing usecases.

Vulkan Kompute The general purpose GPU compute framework for cross vendor graphics cards (AMD, Qualcomm, NVIDIA & friends). Blazing fast, mobile-enabl

BlazingSQL is a lightweight, GPU accelerated, SQL engine for Python. Built on RAPIDS cuDF.

A lightweight, GPU accelerated, SQL engine built on the RAPIDS.ai ecosystem. Get Started on app.blazingsql.com Getting Started | Documentation | Examp

cuML - RAPIDS Machine Learning Library

cuML - GPU Machine Learning Algorithms cuML is a suite of libraries that implement machine learning algorithms and mathematical primitives functions t

A GPU-accelerated library containing highly optimized building blocks and an execution engine for data processing to accelerate deep learning training and inference applications.

NVIDIA DALI The NVIDIA Data Loading Library (DALI) is a library for data loading and pre-processing to accelerate deep learning applications. It provi

Python 3 Bindings for NVML library. Get NVIDIA GPU status inside your program.

py3nvml Documentation also available at readthedocs. Python 3 compatible bindings to the NVIDIA Management Library. Can be used to query the state of

cuDF - GPU DataFrame Library

cuDF - GPU DataFrames NOTE: For the latest stable README.md ensure you are on the main branch. Resources cuDF Reference Documentation: Python API refe

Python interface to GPU-powered libraries

Package Description scikit-cuda provides Python interfaces to many of the functions in the CUDA device/runtime, CUBLAS, CUFFT, and CUSOLVER libraries

ArrayFire: a general purpose GPU library.

ArrayFire is a general-purpose library that simplifies the process of developing software that targets parallel and massively-parallel architectures i

CUDA integration for Python, plus shiny features

PyCUDA lets you access Nvidia's CUDA parallel computation API from Python. Several wrappers of the CUDA API already exist-so what's so special about P

📊 A simple command-line utility for querying and monitoring GPU status

gpustat Just less than nvidia-smi? NOTE: This works with NVIDIA Graphics Devices only, no AMD support as of now. Contributions are welcome! Self-Promo

A NumPy-compatible array library accelerated by CUDA

CuPy : A NumPy-compatible array library accelerated by CUDA Website | Docs | Install Guide | Tutorial | Examples | API Reference | Forum CuPy is an im

[DEPRECATED] Tensorflow wrapper for DataFrames on Apache Spark

TensorFrames (Deprecated) Note: TensorFrames is deprecated. You can use pandas UDF instead. Experimental TensorFlow binding for Scala and Apache Spark

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective. 10x Larger Models 10x Faster Trainin

Time series forecasting with PyTorch

Our article on Towards Data Science introduces the package and provides background information. Pytorch Forecasting aims to ease state-of-the-art time

High-performance TensorFlow library for quantitative finance.

TF Quant Finance: TensorFlow based Quant Finance Library Table of contents Introduction Installation TensorFlow training Development roadmap Examples

Tensorflow 2 Object Detection API kurulumu, GPU desteği, custom model hazırlama

Tensorflow 2 Object Detection API Bu tutorial, TensorFlow 2.x'in kararlı sürümü olan TensorFlow 2.3'ye yöneliktir. Bu, görüntülerde / videoda nesne a

An extension to pandas dataframes describe function.

pandas_summary An extension to pandas dataframes describe function. The module contains DataFrameSummary object that extend describe() with: propertie

A Genetic Programming platform for Python with TensorFlow for wicked-fast CPU and GPU support.

Karoo GP Karoo GP is an evolutionary algorithm, a genetic programming application suite written in Python which supports both symbolic regression and

A highly efficient and modular implementation of Gaussian Processes in PyTorch

GPyTorch GPyTorch is a Gaussian process library implemented using PyTorch. GPyTorch is designed for creating scalable, flexible, and modular Gaussian

Easy pipelines for pandas DataFrames.

pdpipe ˨ Easy pipelines for pandas DataFrames (learn how!). Website: https://pdpipe.github.io/pdpipe/ Documentation: https://pdpipe.github.io/pdpipe/d

Out-of-Core DataFrames for Python, ML, visualize and explore big tabular data at a billion rows per second 🚀

What is Vaex? Vaex is a high performance Python library for lazy Out-of-Core DataFrames (similar to Pandas), to visualize and explore big tabular data

cuDF - GPU DataFrame Library

cuDF - GPU DataFrames NOTE: For the latest stable README.md ensure you are on the main branch. Built based on the Apache Arrow columnar memory format,

ThunderGBM: Fast GBDTs and Random Forests on GPUs

Documentations | Installation | Parameters | Python (scikit-learn) interface What's new? ThunderGBM won 2019 Best Paper Award from IEEE Transactions o

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

ThunderSVM: A Fast SVM Library on GPUs and CPUs

What's new We have recently released ThunderGBM, a fast GBDT and Random Forest library on GPUs. add scikit-learn interface, see here Overview The miss

50% faster, 50% less RAM Machine Learning. Numba rewritten Sklearn. SVD, NNMF, PCA, LinearReg, RidgeReg, Randomized, Truncated SVD/PCA, CSR Matrices all 50+% faster

[Due to the time taken @ uni, work + hell breaking loose in my life, since things have calmed down a bit, will continue commiting!!!] [By the way, I'm

cuML - RAPIDS Machine Learning Library

cuML - GPU Machine Learning Algorithms cuML is a suite of libraries that implement machine learning algorithms and mathematical primitives functions t

Lazy Profiler is a simple utility to collect CPU, GPU, RAM and GPU Memory stats while the program is running.

lazyprofiler Lazy Profiler is a simple utility to collect CPU, GPU, RAM and GPU Memory stats while the program is running. Installation Use the packag

A GUI for Pandas DataFrames

PandasGUI A GUI for analyzing Pandas DataFrames. Demo Installation Install latest release from PyPi: pip install pandasgui Install directly from Githu

DeepSpeech is an open source embedded (offline, on-device) speech-to-text engine which can run in real time on devices ranging from a Raspberry Pi 4 to high power GPU servers.

Project DeepSpeech DeepSpeech is an open-source Speech-To-Text engine, using a model trained by machine learning techniques based on Baidu's Deep Spee

Ivy is a templated deep learning framework which maximizes the portability of deep learning codebases.

Ivy is a templated deep learning framework which maximizes the portability of deep learning codebases. Ivy wraps the functional APIs of existing frameworks. Framework-agnostic functions, libraries and layers can then be written using Ivy, with simultaneous support for all frameworks. Ivy currently supports Jax, TensorFlow, PyTorch, MXNet and Numpy. Check out the docs for more info!

A GUI for Pandas DataFrames

PandasGUI A GUI for analyzing Pandas DataFrames. Demo Installation Install latest release from PyPi: pip install pandasgui Install directly from Githu

ThunderGBM: Fast GBDTs and Random Forests on GPUs

Documentations | Installation | Parameters | Python (scikit-learn) interface What's new? ThunderGBM won 2019 Best Paper Award from IEEE Transactions o

ThunderSVM: A Fast SVM Library on GPUs and CPUs

What's new We have recently released ThunderGBM, a fast GBDT and Random Forest library on GPUs. add scikit-learn interface, see here Overview The miss

A flexible framework of neural networks for deep learning

Chainer: A deep learning framework Website | Docs | Install Guide | Tutorials (ja) | Examples (Official, External) | Concepts | ChainerX Forum (en, ja

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

Composable transformations of Python+NumPy programs: differentiate, vectorize, JIT to GPU/TPU, and more

JAX: Autograd and XLA Quickstart | Transformations | Install guide | Neural net libraries | Change logs | Reference docs | Code search News: JAX tops

The fastai deep learning library

Welcome to fastai fastai simplifies training fast and accurate neural nets using modern best practices Important: This documentation covers fastai v2,

Tensors and Dynamic neural networks in Python with strong GPU acceleration

PyTorch is a Python package that provides two high-level features: Tensor computation (like NumPy) with strong GPU acceleration Deep neural networks b

[ICLR 2021] "Neural Architecture Search on ImageNet in Four GPU Hours: A Theoretically Inspired Perspective" by Wuyang Chen, Xinyu Gong, Zhangyang Wang

Neural Architecture Search on ImageNet in Four GPU Hours: A Theoretically Inspired Perspective [PDF] Wuyang Chen, Xinyu Gong, Zhangyang Wang In ICLR 2

Torchserve server using a YoloV5 model running on docker with GPU and static batch inference to perform production ready inference.

Yolov5 running on TorchServe (GPU compatible) ! This is a dockerfile to run TorchServe for Yolo v5 object detection model. (TorchServe (PyTorch librar

Python bindings for ArrayFire: A general purpose GPU library.

ArrayFire Python Bindings ArrayFire is a high performance library for parallel computing with an easy-to-use API. It enables users to write scientific

The fastai deep learning library

Welcome to fastai fastai simplifies training fast and accurate neural nets using modern best practices Important: This documentation covers fastai v2,

Composable transformations of Python+NumPy programs: differentiate, vectorize, JIT to GPU/TPU, and more

JAX: Autograd and XLA Quickstart | Transformations | Install guide | Neural net libraries | Change logs | Reference docs | Code search News: JAX tops

A fast, scalable, high performance Gradient Boosting on Decision Trees library, used for ranking, classification, regression and other machine learning tasks for Python, R, Java, C++. Supports computation on CPU and GPU.

Website | Documentation | Tutorials | Installation | Release Notes CatBoost is a machine learning method based on gradient boosting over decision tree

Deep Learning GPU Training System

DIGITS DIGITS (the Deep Learning GPU Training System) is a webapp for training deep learning models. The currently supported frameworks are: Caffe, To

A flexible framework of neural networks for deep learning

Chainer: A deep learning framework Website | Docs | Install Guide | Tutorials (ja) | Examples (Official, External) | Concepts | ChainerX Forum (en, ja

GPU-Accelerated Deep Learning Library in Python

Hebel GPU-Accelerated Deep Learning Library in Python Hebel is a library for deep learning with neural networks in Python using GPU acceleration with

H2O is an Open Source, Distributed, Fast & Scalable Machine Learning Platform: Deep Learning, Gradient Boosting (GBM) & XGBoost, Random Forest, Generalized Linear Modeling (GLM with Elastic Net), K-Means, PCA, Generalized Additive Models (GAM), RuleFit, Support Vector Machine (SVM), Stacked Ensembles, Automatic Machine Learning (AutoML), etc.

H2O H2O is an in-memory platform for distributed, scalable machine learning. H2O uses familiar interfaces like R, Python, Scala, Java, JSON and the Fl

Tensors and Dynamic neural networks in Python with strong GPU acceleration

PyTorch is a Python package that provides two high-level features: Tensor computation (like NumPy) with strong GPU acceleration Deep neural networks b