55 Repositories

Python interpretability Libraries

B-cos Networks: Attention is All we Need for Interpretability

Convolutional Dynamic Alignment Networks for Interpretable Classifications M. Böhle, M. Fritz, B. Schiele. B-cos Networks: Alignment is All we Need fo

![[ICML 2022] The official implementation of Graph Stochastic Attention (GSAT).](https://github.com/Graph-COM/GSAT/raw/main/data/arch.png)

[ICML 2022] The official implementation of Graph Stochastic Attention (GSAT).

Graph Stochastic Attention (GSAT) The official implementation of GSAT for our paper: Interpretable and Generalizable Graph Learning via Stochastic Att

Rank-One Model Editing for Locating and Editing Factual Knowledge in GPT

Rank-One Model Editing (ROME) This repository provides an implementation of Rank-One Model Editing (ROME) on auto-regressive transformers (GPU-only).

Adaout is a practical and flexible regularization method with high generalization and interpretability

Adaout Adaout is a practical and flexible regularization method with high generalization and interpretability. Requirements python 3.6 (Anaconda versi

Python package for concise, transparent, and accurate predictive modeling

Python package for concise, transparent, and accurate predictive modeling. All sklearn-compatible and easy to use. 📚 docs • 📖 demo notebooks Modern

This component provides a wrapper to display SHAP plots in Streamlit.

streamlit-shap This component provides a wrapper to display SHAP plots in Streamlit.

Which Style Makes Me Attractive? Interpretable Control Discovery and Counterfactual Explanation on StyleGAN

Interpretable Control Exploration and Counterfactual Explanation (ICE) on StyleGAN Which Style Makes Me Attractive? Interpretable Control Discovery an

Model Agnostic Interpretability for Multiple Instance Learning

MIL Model Agnostic Interpretability This repo contains the code for "Model Agnostic Interpretability for Multiple Instance Learning". Overview Executa

Toward Model Interpretability in Medical NLP

Toward Model Interpretability in Medical NLP LING380: Topics in Computational Linguistics Final Project James Cross ([email protected]) and Daniel Kim

Official implementation for "Symbolic Learning to Optimize: Towards Interpretability and Scalability"

Symbolic Learning to Optimize This is the official implementation for ICLR-2022 paper "Symbolic Learning to Optimize: Towards Interpretability and Sca

A curated list of awesome open source libraries to deploy, monitor, version and scale your machine learning

Awesome production machine learning This repository contains a curated list of awesome open source libraries that will help you deploy, monitor, versi

🧮 Matrix Factorization for Collaborative Filtering is just Solving an Adjoint Latent Dirichlet Allocation Model after All

Accompanying source code to the paper "Matrix Factorization for Collaborative Filtering is just Solving an Adjoint Latent Dirichlet Allocation Model A

Pytorch implementation of NEGEV method. Paper: "Negative Evidence Matters in Interpretable Histology Image Classification".

Pytorch 1.10.0 code for: Negative Evidence Matters in Interpretable Histology Image Classification (https://arxiv. org/abs/xxxx.xxxxx) Citation: @arti

🔅 Shapash makes Machine Learning models transparent and understandable by everyone

🎉 What's new ? Version New Feature Description Tutorial 1.6.x Explainability Quality Metrics To help increase confidence in explainability methods, y

PCACE: A Statistical Approach to Ranking Neurons for CNN Interpretability

PCACE: A Statistical Approach to Ranking Neurons for CNN Interpretability PCACE is a new algorithm for ranking neurons in a CNN architecture in order

Resources for our AAAI 2022 paper: "LOREN: Logic-Regularized Reasoning for Interpretable Fact Verification".

LOREN Resources for our AAAI 2022 paper (pre-print): "LOREN: Logic-Regularized Reasoning for Interpretable Fact Verification". DEMO System Check out o

Why Are You Weird? Infusing Interpretability in Isolation Forest for Anomaly Detection

Why, hello there! This is the supporting notebook for the research paper — Why Are You Weird? Infusing Interpretability in Isolation Forest for Anomal

HIVE: Evaluating the Human Interpretability of Visual Explanations

HIVE: Evaluating the Human Interpretability of Visual Explanations Project Page | Paper This repo provides the code for HIVE, a human evaluation frame

Interpretable and Generalizable Person Re-Identification with Query-Adaptive Convolution and Temporal Lifting

QAConv Interpretable and Generalizable Person Re-Identification with Query-Adaptive Convolution and Temporal Lifting This PyTorch code is proposed in

Code implementing "Improving Deep Learning Interpretability by Saliency Guided Training"

Saliency Guided Training Code implementing "Improving Deep Learning Interpretability by Saliency Guided Training" by Aya Abdelsalam Ismail, Hector Cor

Code for the TCAV ML interpretability project

Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV) Been Kim, Martin Wattenberg, Justin Gilmer, C

The code of NeurIPS 2021 paper "Scalable Rule-Based Representation Learning for Interpretable Classification".

Rule-based Representation Learner This is a PyTorch implementation of Rule-based Representation Learner (RRL) as described in NeurIPS 2021 paper: Scal

Responsible Machine Learning with Python

Examples of techniques for training interpretable ML models, explaining ML models, and debugging ML models for accuracy, discrimination, and security.

Python package to visualize and cluster partial dependence.

partial_dependence A python library for plotting partial dependence patterns of machine learning classifiers. The technique is a black box approach to

XAI - An eXplainability toolbox for machine learning

XAI - An eXplainability toolbox for machine learning XAI is a Machine Learning library that is designed with AI explainability in its core. XAI contai

moDel Agnostic Language for Exploration and eXplanation

moDel Agnostic Language for Exploration and eXplanation Overview Unverified black box model is the path to the failure. Opaqueness leads to distrust.

Fit interpretable models. Explain blackbox machine learning.

InterpretML - Alpha Release In the beginning machines learned in darkness, and data scientists struggled in the void to explain them. Let there be lig

Measuring if attention is explanation with ROAR

NLP ROAR Interpretability Official code for: Evaluating the Faithfulness of Importance Measures in NLP by Recursively Masking Allegedly Important Toke

SimplEx - Explaining Latent Representations with a Corpus of Examples

SimplEx - Explaining Latent Representations with a Corpus of Examples Code Author: Jonathan Crabbé ([email protected]) This repository contains the imp

Estimating Example Difficulty using Variance of Gradients

Estimating Example Difficulty using Variance of Gradients This repository contains source code necessary to reproduce some of the main results in the

Adaptive, interpretable wavelets across domains (NeurIPS 2021)

Adaptive wavelets Wavelets which adapt given data (and optionally a pre-trained model). This yields models which are faster, more compressible, and mo

Class activation maps for your PyTorch models (CAM, Grad-CAM, Grad-CAM++, Smooth Grad-CAM++, Score-CAM, SS-CAM, IS-CAM, XGrad-CAM, Layer-CAM)

TorchCAM: class activation explorer Simple way to leverage the class-specific activation of convolutional layers in PyTorch. Quick Tour Setting your C

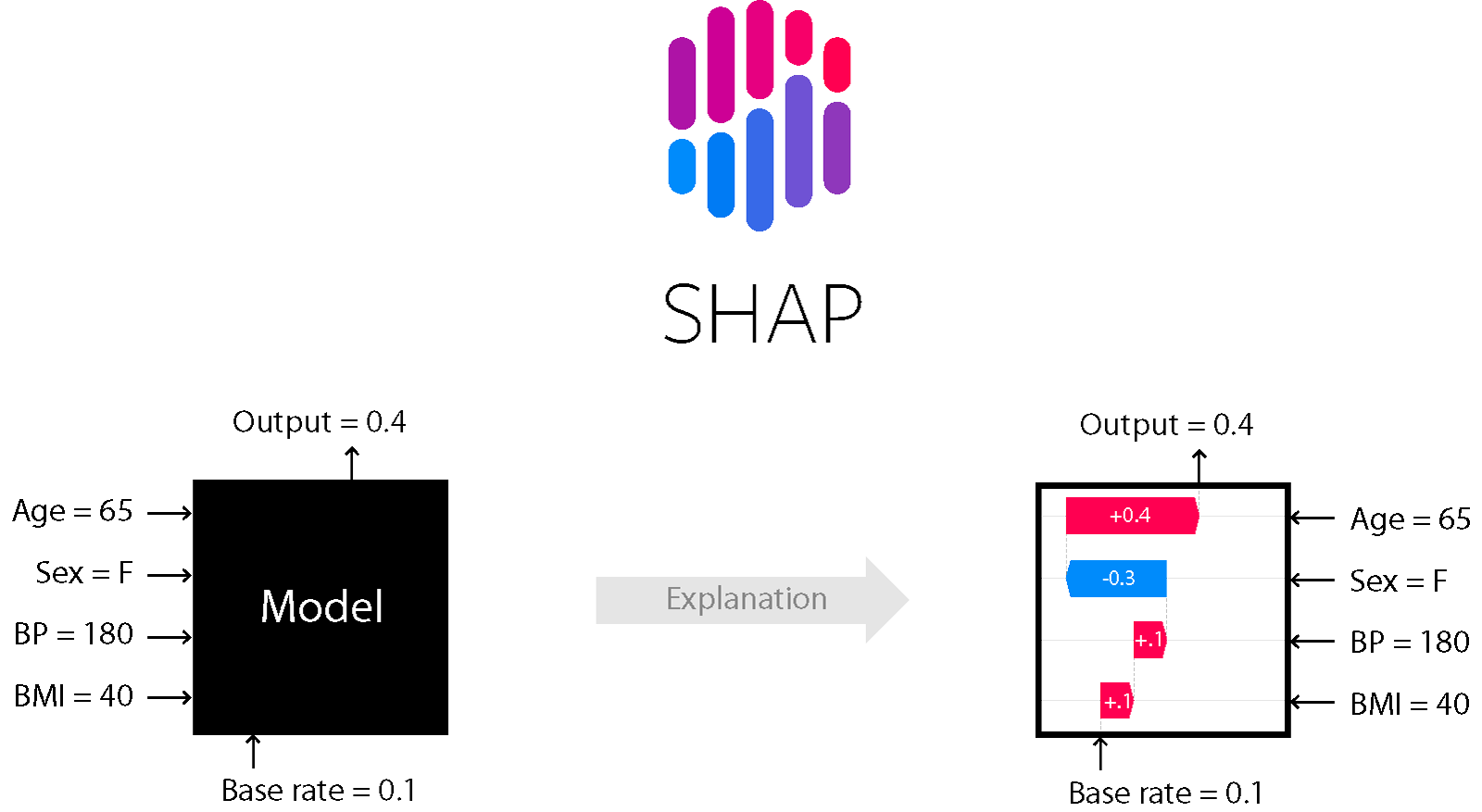

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo

PyTorch implementation of the WarpedGANSpace: Finding non-linear RBF paths in GAN latent space (ICCV 2021)

Authors official PyTorch implementation of the "WarpedGANSpace: Finding non-linear RBF paths in GAN latent space" [ICCV 2021].

👋🦊 Xplique is a Python toolkit dedicated to explainability, currently based on Tensorflow.

👋🦊 Xplique is a Python toolkit dedicated to explainability, currently based on Tensorflow.

Using / reproducing ACD from the paper "Hierarchical interpretations for neural network predictions" 🧠 (ICLR 2019)

Hierarchical neural-net interpretations (ACD) 🧠 Produces hierarchical interpretations for a single prediction made by a pytorch neural network. Offic

Making decision trees competitive with neural networks on CIFAR10, CIFAR100, TinyImagenet200, Imagenet

Neural-Backed Decision Trees · Site · Paper · Blog · Video Alvin Wan, *Lisa Dunlap, *Daniel Ho, Jihan Yin, Scott Lee, Henry Jin, Suzanne Petryk, Sarah

Visualization toolkit for neural networks in PyTorch! Demo --

FlashTorch A Python visualization toolkit, built with PyTorch, for neural networks in PyTorch. Neural networks are often described as "black box". The

Many Class Activation Map methods implemented in Pytorch for CNNs and Vision Transformers. Including Grad-CAM, Grad-CAM++, Score-CAM, Ablation-CAM and XGrad-CAM

Class Activation Map methods implemented in Pytorch pip install grad-cam ⭐ Comprehensive collection of Pixel Attribution methods for Computer Vision.

Collection of NLP model explanations and accompanying analysis tools

Thermostat is a large collection of NLP model explanations and accompanying analysis tools. Combines explainability methods from the captum library wi

Many Class Activation Map methods implemented in Pytorch for CNNs and Vision Transformers. Including Grad-CAM, Grad-CAM++, Score-CAM, Ablation-CAM and XGrad-CAM

Class Activation Map methods implemented in Pytorch pip install grad-cam ⭐ Tested on many Common CNN Networks and Vision Transformers. ⭐ Includes smoo

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

PyTorch implementation of "Transparency by Design: Closing the Gap Between Performance and Interpretability in Visual Reasoning"

Transparency-by-Design networks (TbD-nets) This repository contains code for replicating the experiments and visualizations from the paper Transparenc

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models.

TensorFlow Decision Forests (TF-DF) is a collection of state-of-the-art algorithms for the training, serving and interpretation of Decision Forest models. The library is a collection of Keras models and supports classification, regression and ranking. TF-DF is a TensorFlow wrapper around the Yggdrasil Decision Forests C++ libraries. Models trained with TF-DF are compatible with Yggdrasil Decision Forests' models, and vice versa.

Visualization toolkit for neural networks in PyTorch! Demo --

FlashTorch A Python visualization toolkit, built with PyTorch, for neural networks in PyTorch. Neural networks are often described as "black box". The

Official PyTorch implementation for Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers, a novel method to visualize any Transformer-based network. Including examples for DETR, VQA.

PyTorch Implementation of Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers 1 Using Colab Please notic

A collection of infrastructure and tools for research in neural network interpretability.

Lucid Lucid is a collection of infrastructure and tools for research in neural network interpretability. We're not currently supporting tensorflow 2!

Interpretability and explainability of data and machine learning models

AI Explainability 360 (v0.2.1) The AI Explainability 360 toolkit is an open-source library that supports interpretability and explainability of datase

⬛ Python Individual Conditional Expectation Plot Toolbox

⬛ PyCEbox Python Individual Conditional Expectation Plot Toolbox A Python implementation of individual conditional expecation plots inspired by R's IC

A game theoretic approach to explain the output of any machine learning model.

SHAP (SHapley Additive exPlanations) is a game theoretic approach to explain the output of any machine learning model. It connects optimal credit allo

Contrastive Explanation (Foil Trees), developed at TNO/Utrecht University

Contrastive Explanation (Foil Trees) Contrastive and counterfactual explanations for machine learning (ML) Marcel Robeer (2018-2020), TNO/Utrecht Univ

Algorithms for monitoring and explaining machine learning models

Alibi is an open source Python library aimed at machine learning model inspection and interpretation. The focus of the library is to provide high-qual

A Python package implementing a new model for text classification with visualization tools for Explainable AI :octocat:

A Python package implementing a new model for text classification with visualization tools for Explainable AI 🍣 Online live demos: http://tworld.io/s

StellarGraph - Machine Learning on Graphs

StellarGraph Machine Learning Library StellarGraph is a Python library for machine learning on graphs and networks. Table of Contents Introduction Get