167 Repositories

Python lazy-evaluation Libraries

Evaluation of a Monocular Eye Tracking Set-Up

Evaluation of a Monocular Eye Tracking Set-Up As part of my master thesis, I implemented a new state-of-the-art model that is based on the work of Che

A fast implementation of bss_eval metrics for blind source separation

fast_bss_eval Do you have a zillion BSS audio files to process and it is taking days ? Is your simulation never ending ? Fear no more! fast_bss_eval i

ImageNet-CoG is a benchmark for concept generalization. It provides a full evaluation framework for pre-trained visual representations which measure how well they generalize to unseen concepts.

The ImageNet-CoG Benchmark Project Website Paper (arXiv) Code repository for the ImageNet-CoG Benchmark introduced in the paper "Concept Generalizatio

fast_bss_eval is a fast implementation of the bss_eval metrics for the evaluation of blind source separation.

fast_bss_eval Do you have a zillion BSS audio files to process and it is taking days ? Is your simulation never ending ? Fear no more! fast_bss_eval i

Supplementary materials for ISMIR 2021 LBD paper "Evaluation of Latent Space Disentanglement in the Presence of Interdependent Attributes"

Evaluation of Latent Space Disentanglement in the Presence of Interdependent Attributes Supplementary materials for ISMIR 2021 LBD submission: K. N. W

Implementation of temporal pooling methods studied in [ICIP'20] A Comparative Evaluation Of Temporal Pooling Methods For Blind Video Quality Assessment

Implementation of temporal pooling methods studied in [ICIP'20] A Comparative Evaluation Of Temporal Pooling Methods For Blind Video Quality Assessment

Novel Instances Mining with Pseudo-Margin Evaluation for Few-Shot Object Detection

Novel Instances Mining with Pseudo-Margin Evaluation for Few-Shot Object Detection (NimPme) The official implementation of Novel Instances Mining with

lazy_table - a python-tabulate wrapper for producing tables from generators

A python-tabulate wrapper for producing tables from generators. Motivation lazy_table is useful when (i) each row of your table is generated by a poss

Lazy package to start your project using FastAPI✨

Fastapi-lazy 🦥 Utilities that you use in various projects made in FastAPI. Source Code: https://github.com/yezz123/fastapi-lazy Install the project:

Demonstration that AWS IAM policy evaluation docs are incorrect

The flowchart from the AWS IAM policy evaluation documentation page, as of 2021-09-12, and dating back to at least 2018-12-27, is the following: The f

Implementation of Common Image Evaluation Metrics by Sayed Nadim (sayednadim.github.io). The repo is built based on full reference image quality metrics such as L1, L2, PSNR, SSIM, LPIPS. and feature-level quality metrics such as FID, IS. It can be used for evaluating image denoising, colorization, inpainting, deraining, dehazing etc. where we have access to ground truth.

Image Quality Evaluation Metrics Implementation of some common full reference image quality metrics. The repo is built based on full reference image q

Cross Quality LFW: A database for Analyzing Cross-Resolution Image Face Recognition in Unconstrained Environments

Cross-Quality Labeled Faces in the Wild (XQLFW) Here, we release the database, evaluation protocol and code for the following paper: Cross Quality LFW

Our CIKM21 Paper "Incorporating Query Reformulating Behavior into Web Search Evaluation"

Reformulation-Aware-Metrics Introduction This codebase contains source-code of the Python-based implementation of our CIKM 2021 paper. Chen, Jia, et a

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics.

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics. Jury offers a smooth and easy-to-use interface. It uses datasets for underlying metric computation, and hence adding custom metric is easy as adopting datasets.Metric.

Evaluation suite for large-scale language models.

This repo contains code for running the evaluations and reproducing the results from the Jurassic-1 Technical Paper (see blog post), with current support for running the tasks through both the AI21 Studio API and OpenAI's GPT3 API.

Summary Explorer is a tool to visually explore the state-of-the-art in text summarization.

Summary Explorer Summary Explorer is a tool to visually inspect the summaries from several state-of-the-art neural summarization models across multipl

Action Segmentation Evaluation

Reference Action Segmentation Evaluation Code This repository contains the reference code for action segmentation evaluation. If you have a bug-fix/im

Summary Explorer is a tool to visually explore the state-of-the-art in text summarization.

Summary Explorer is a tool to visually explore the state-of-the-art in text summarization.

Benchmark for evaluating open-ended generation

OpenMEVA Contributed by Jian Guan, Zhexin Zhang. Thank Jiaxin Wen for DeBugging. OpenMEVA is a benchmark for evaluating open-ended story generation me

A TensorFlow implementation of SOFA, the Simulator for OFfline LeArning and evaluation.

SOFA This repository is the implementation of SOFA, the Simulator for OFfline leArning and evaluation. Keeping Dataset Biases out of the Simulation: A

Active and Sample-Efficient Model Evaluation

Active Testing: Sample-Efficient Model Evaluation Hi, good to see you here! 👋 This is code for "Active Testing: Sample-Efficient Model Evaluation". P

Lazy airdrop based on private temporary ids

LobsterDAO This uses a modified MerkleDistributor, which allows to issue a lazy airdrop using temporary IDs. In this example it uses Telegram chat_id

KLUE-baseline contains the baseline code for the Korean Language Understanding Evaluation (KLUE) benchmark.

KLUE Baseline Korean(한국어) KLUE-baseline contains the baseline code for the Korean Language Understanding Evaluation (KLUE) benchmark. See our paper fo

Reference implementation of code generation projects from Facebook AI Research. General toolkit to apply machine learning to code, from dataset creation to model training and evaluation. Comes with pretrained models.

This repository is a toolkit to do machine learning for programming languages. It implements tokenization, dataset preprocessing, model training and m

The Hailo Model Zoo includes pre-trained models and a full building and evaluation environment

Hailo Model Zoo The Hailo Model Zoo provides pre-trained models for high-performance deep learning applications. Using the Hailo Model Zoo you can mea

A Toolbox for Image Feature Matching and Evaluations

This is a toolbox repository to help evaluate various methods that perform image matching from a pair of images.

Semantic Scholar's Author Disambiguation Algorithm & Evaluation Suite

S2AND This repository provides access to the S2AND dataset and S2AND reference model described in the paper S2AND: A Benchmark and Evaluation System f

A fast python implementation of DTU MVS 2014 evaluation

DTUeval-python A python implementation of DTU MVS 2014 evaluation. It only takes 1min for each mesh evaluation. And the gap between the two implementa

SIMULEVAL A General Evaluation Toolkit for Simultaneous Translation

SimulEval SimulEval is a general evaluation framework for simultaneous translation on text and speech. Requirement python = 3.7.0 Installation git cl

YoloV5 implemented by TensorFlow2 , with support for training, evaluation and inference.

Efficient implementation of YOLOV5 in TensorFlow2

This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard evaluation metric to measure the accuracy and robustness of 3D face reconstruction methods from a single image under variations in viewing angle, lighting, and common occlusions.

NoW Evaluation This is the official repository for evaluation on the NoW Benchmark Dataset. The goal of the NoW benchmark is to introduce a standard e

A large-scale video dataset for the training and evaluation of 3D human pose estimation models

ASPset-510 (Australian Sports Pose Dataset) is a large-scale video dataset for the training and evaluation of 3D human pose estimation models. It contains 17 different amateur subjects performing 30 sports-related actions each, for a total of 510 action clips.

A large-scale video dataset for the training and evaluation of 3D human pose estimation models

ASPset-510 ASPset-510 (Australian Sports Pose Dataset) is a large-scale video dataset for the training and evaluation of 3D human pose estimation mode

PyTorch evaluation code for Delving Deep into the Generalization of Vision Transformers under Distribution Shifts.

Out-of-distribution Generalization Investigation on Vision Transformers This repository contains PyTorch evaluation code for Delving Deep into the Gen

NAS Benchmark in "Prioritized Architecture Sampling with Monto-Carlo Tree Search", CVPR2021

NAS-Bench-Macro This repository includes the benchmark and code for NAS-Bench-Macro in paper "Prioritized Architecture Sampling with Monto-Carlo Tree

Lazy, a tool for running things in idle time

Lazy, a tool for running things in idle time Mostly used to stop CUDA ML model training from making my desktop unusable. Simply monitors keyboard/mous

中文医疗信息处理基准CBLUE: A Chinese Biomedical LanguageUnderstanding Evaluation Benchmark

English | 中文说明 CBLUE AI (Artificial Intelligence) is playing an indispensabe role in the biomedical field, helping improve medical technology. For fur

Evaluation Pipeline for our ECCV2020: Journey Towards Tiny Perceptual Super-Resolution.

Journey Towards Tiny Perceptual Super-Resolution Test code for our ECCV2020 paper: https://arxiv.org/abs/2007.04356 Our x4 upscaling pre-trained model

QuanTaichi evaluation suite

QuanTaichi: A Compiler for Quantized Simulations (SIGGRAPH 2021) Yuanming Hu, Jiafeng Liu, Xuanda Yang, Mingkuan Xu, Ye Kuang, Weiwei Xu, Qiang Dai, W

Framework for joint representation learning, evaluation through multimodal registration and comparison with image translation based approaches

CoMIR: Contrastive Multimodal Image Representation for Registration Framework 🖼 Registration of images in different modalities with Deep Learning 🤖

The repo of the preprinting paper "Labels Are Not Perfect: Inferring Spatial Uncertainty in Object Detection"

Inferring Spatial Uncertainty in Object Detection A teaser version of the code for the paper Labels Are Not Perfect: Inferring Spatial Uncertainty in

An evaluation toolkit for voice conversion models.

Voice-conversion-evaluation An evaluation toolkit for voice conversion models. Sample test pair Generate the metadata for evaluating models. The direc

An evaluation toolkit for voice conversion models.

Voice-conversion-evaluation An evaluation toolkit for voice conversion models. Sample test pair Generate the metadata for evaluating models. The direc

Resources for the "Evaluating the Factual Consistency of Abstractive Text Summarization" paper

Evaluating the Factual Consistency of Abstractive Text Summarization Authors: Wojciech Kryściński, Bryan McCann, Caiming Xiong, and Richard Socher Int

Findings of ACL 2021

Assessing Dialogue Systems with Distribution Distances [arXiv][code] We propose to measure the performance of a dialogue system by computing the distr

laTEX is awesome but we are lazy - groff with markdown syntax and inline code execution

pyGroff A wrapper for groff using python to have a nicer syntax for groff documents DOCUMENTATION Very similar to markdown. So if you know what that i

Task-based datasets, preprocessing, and evaluation for sequence models.

SeqIO: Task-based datasets, preprocessing, and evaluation for sequence models. SeqIO is a library for processing sequential data to be fed into downst

[CVPR 2021] MiVOS - Mask Propagation module. Reproduced STM (and better) with training code :star2:. Semi-supervised video object segmentation evaluation.

MiVOS (CVPR 2021) - Mask Propagation Ho Kei Cheng, Yu-Wing Tai, Chi-Keung Tang [arXiv] [Paper PDF] [Project Page] [Papers with Code] This repo impleme

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

OCTIS: Comparing Topic Models is Simple! A python package to optimize and evaluate topic models (accepted at EACL2021 demo track)

OCTIS : Optimizing and Comparing Topic Models is Simple! OCTIS (Optimizing and Comparing Topic models Is Simple) aims at training, analyzing and compa

A Python package that provides evaluation and visualization tools for the DexYCB dataset

DexYCB Toolkit DexYCB Toolkit is a Python package that provides evaluation and visualization tools for the DexYCB dataset. The dataset and results wer

Provided is code that demonstrates the training and evaluation of the work presented in the paper: "On the Detection of Digital Face Manipulation" published in CVPR 2020.

FFD Source Code Provided is code that demonstrates the training and evaluation of the work presented in the paper: "On the Detection of Digital Face M

中文空间语义理解评测

中文空间语义理解评测 最新消息 2021-04-10 🚩 排行榜发布: Leaderboard 2021-04-05 基线系统发布: SpaCE2021-Baseline 2021-04-05 开放数据提交: 提交结果 2021-04-01 开放报名: 我要报名 2021-04-01 数据集 pa

Source code for the GPT-2 story generation models in the EMNLP 2020 paper "STORIUM: A Dataset and Evaluation Platform for Human-in-the-Loop Story Generation"

Storium GPT-2 Models This is the official repository for the GPT-2 models described in the EMNLP 2020 paper [STORIUM: A Dataset and Evaluation Platfor

TextFlint is a multilingual robustness evaluation platform for natural language processing tasks,

TextFlint is a multilingual robustness evaluation platform for natural language processing tasks, which unifies general text transformation, task-specific transformation, adversarial attack, sub-population, and their combinations to provide a comprehensive robustness analysis.

Code for paper "A Critical Assessment of State-of-the-Art in Entity Alignment" (https://arxiv.org/abs/2010.16314)

A Critical Assessment of State-of-the-Art in Entity Alignment This repository contains the source code for the paper A Critical Assessment of State-of

Petastorm library enables single machine or distributed training and evaluation of deep learning models from datasets in Apache Parquet format. It supports ML frameworks such as Tensorflow, Pytorch, and PySpark and can be used from pure Python code.

Petastorm Contents Petastorm Installation Generating a dataset Plain Python API Tensorflow API Pytorch API Spark Dataset Converter API Analyzing petas

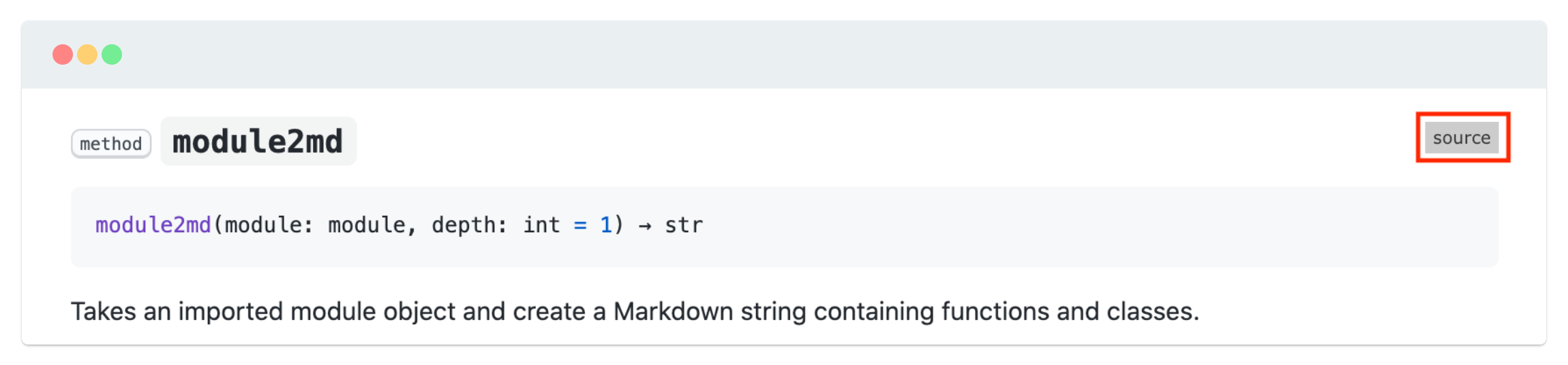

📖 Generate markdown API documentation from Google-style Python docstring. The lazy alternative to Sphinx.

lazydocs Generate markdown API documentation for Google-style Python docstring. Getting Started • Features • Documentation • Support • Contribution •

CDIoU and CDIoU loss is like a convenient plug-in that can be used in multiple models. CDIoU and CDIoU loss have different excellent performances in several models such as Faster R-CNN, YOLOv4, RetinaNet and . There is a maximum AP improvement of 1.9% and an average AP of 0.8% improvement on MS COCO dataset, compared to traditional evaluation-feedback modules. Here we just use as an example to illustrate the code.

CDIoU-CDIoUloss CDIoU and CDIoU loss is like a convenient plug-in that can be used in multiple models. CDIoU and CDIoU loss have different excellent p

UNION: An Unreferenced Metric for Evaluating Open-ended Story Generation

UNION Automatic Evaluation Metric described in the paper UNION: An UNreferenced MetrIc for Evaluating Open-eNded Story Generation (EMNLP 2020). Please

An OCR evaluation tool

dinglehopper dinglehopper is an OCR evaluation tool and reads ALTO, PAGE and text files. It compares a ground truth (GT) document page with a OCR resu

TedEval: A Fair Evaluation Metric for Scene Text Detectors

TedEval: A Fair Evaluation Metric for Scene Text Detectors Official Python 3 implementation of TedEval | paper | slides Chae Young Lee, Youngmin Baek,

This repository provides train&test code, dataset, det.&rec. annotation, evaluation script, annotation tool, and ranking.

SCUT-CTW1500 Datasets We have updated annotations for both train and test set. Train: 1000 images [images][annos] Additional point annotation for each

Deploy tensorflow graphs for fast evaluation and export to tensorflow-less environments running numpy.

Deploy tensorflow graphs for fast evaluation and export to tensorflow-less environments running numpy. Now with tensorflow 1.0 support. Evaluation usa

Lazy Profiler is a simple utility to collect CPU, GPU, RAM and GPU Memory stats while the program is running.

lazyprofiler Lazy Profiler is a simple utility to collect CPU, GPU, RAM and GPU Memory stats while the program is running. Installation Use the packag

Multi-class confusion matrix library in Python

Table of contents Overview Installation Usage Document Try PyCM in Your Browser Issues & Bug Reports Todo Outputs Dependencies Contribution References

Machine learning evaluation metrics, implemented in Python, R, Haskell, and MATLAB / Octave

Note: the current releases of this toolbox are a beta release, to test working with Haskell's, Python's, and R's code repositories. Metrics provides i