189 Repositories

Python bias-evaluation Libraries

Open-Source CI/CD platform for ML teams. Deliver ML products, better & faster. ⚡️🧑🔧

Deliver ML products, better & faster Giskard is an Open-Source CI/CD platform for ML teams. Inspect ML models visually from your Python notebook 📗 Re

KwaiRec: A Fully-observed Dataset for Recommender Systems (Density: Almost 100%)

KuaiRec: A Fully-observed Dataset for Recommender Systems (Density: Almost 100%) KuaiRec is a real-world dataset collected from the recommendation log

Evaluation and Benchmarking of Speech Super-resolution Methods

Speech Super-resolution Evaluation and Benchmarking What this repo do: A toolbox for the evaluation of speech super-resolution algorithms. Unify the e

A benchmark for evaluation and comparison of various NLP tasks in Persian language.

Persian NLP Benchmark The repository aims to track existing natural language processing models and evaluate their performance on well-known datasets.

APEACH: Attacking Pejorative Expressions with Analysis on Crowd-generated Hate Speech Evaluation Datasets

APEACH - Korean Hate Speech Evaluation Datasets APEACH is the first crowd-generated Korean evaluation dataset for hate speech detection. Sentences of

codebase for "A Theory of the Inductive Bias and Generalization of Kernel Regression and Wide Neural Networks"

Eigenlearning This repo contains code for replicating the experiments of the paper A Theory of the Inductive Bias and Generalization of Kernel Regress

Provide baselines and evaluation metrics of the task: traffic flow prediction

Note: This repo is adpoted from https://github.com/UNIMIBInside/Smart-Mobility-Prediction. Due to technical reasons, I did not fork their code. Introd

Object detection evaluation metrics using Python.

Object detection evaluation metrics using Python.

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers Authors: Jaemin Cho, Abhay Zala, and Mohit Bansal (

The Implicit Bias of Gradient Descent on Generalized Gated Linear Networks

The Implicit Bias of Gradient Descent on Generalized Gated Linear Networks This folder contains the code to reproduce the data in "The Implicit Bias o

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers

DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generative Transformers Authors: Jaemin Cho, Abhay Zala, and Mohit Bansal (

Doubly Robust Off-Policy Evaluation for Ranking Policies under the Cascade Behavior Model

Doubly Robust Off-Policy Evaluation for Ranking Policies under the Cascade Behavior Model About This repository contains the code to replicate the syn

Image-based Navigation in Real-World Environments via Multiple Mid-level Representations: Fusion Models Benchmark and Efficient Evaluation

Image-based Navigation in Real-World Environments via Multiple Mid-level Representations: Fusion Models Benchmark and Efficient Evaluation This reposi

Evaluation framework for testing segmentation networks in PyTorch

Evaluation framework for testing segmentation networks in PyTorch. What segmentation network to choose for next Kaggle competition? This benchmark knows the answer!

A python package to adjust the bias of probabilistic forecasts/hindcasts using "Mean and Variance Adjustment" method.

Documentation A python package to adjust the bias of probabilistic forecasts/hindcasts using "Mean and Variance Adjustment" method. Read documentation

This is the repository for our paper Ditch the Gold Standard: Re-evaluating Conversational Question Answering

Ditch the Gold Standard: Re-evaluating Conversational Question Answering This is the repository for our paper Ditch the Gold Standard: Re-evaluating C

FAIR Enough Metrics is an API for various FAIR Metrics Tests, written in python

☑️ FAIR Enough metrics for research FAIR Enough Metrics is an API for various FAIR Metrics Tests, written in python, conforming to the specifications

SuRE Evaluation: A Supplementary Material

SuRE Evaluation: A Supplementary Material This repository contains supplementary material regarding the evaluations presented in the paper Visual Expl

The open-source and free to use Python package miseval was developed to establish a standardized medical image segmentation evaluation procedure

miseval: a metric library for Medical Image Segmentation EVALuation The open-source and free to use Python package miseval was developed to establish

Official repository for the paper "On Evaluation Metrics for Graph Generative Models"

On Evaluation Metrics for Graph Generative Models Authors: Rylee Thompson, Boris Knyazev, Elahe Ghalebi, Jungtaek Kim, Graham Taylor This is the offic

This repository contains pre-trained models and some evaluation code for our paper Towards Unsupervised Dense Information Retrieval with Contrastive Learning

Contriever: Towards Unsupervised Dense Information Retrieval with Contrastive Learning This repository contains pre-trained models and some evaluation

Image Segmentation Evaluation

Image Segmentation Evaluation Martin Keršner, [email protected] Evaluation metrics for image segmentation inspired by paper Fully Convolutional Netw

FairLens is an open source Python library for automatically discovering bias and measuring fairness in data

FairLens FairLens is an open source Python library for automatically discovering bias and measuring fairness in data. The package can be used to quick

On Evaluation Metrics for Graph Generative Models

On Evaluation Metrics for Graph Generative Models Authors: Rylee Thompson, Boris Knyazev, Elahe Ghalebi, Jungtaek Kim, Graham Taylor This is the offic

Project made in Qt Designer + Python, for evaluation in the subject Introduction to Programming in IFPE - Paulista campus.

Project made in Qt Designer + Python, for evaluation in the subject Introduction to Programming in IFPE - Paulista campus.

NLG evaluation via Statistical Measures of Similarity: BaryScore, DepthScore, InfoLM

NLG evaluation via Statistical Measures of Similarity: BaryScore, DepthScore, InfoLM Automatic Evaluation Metric described in the papers BaryScore (EM

PyTorch code for the NAACL 2021 paper "Improving Generation and Evaluation of Visual Stories via Semantic Consistency"

Improving Generation and Evaluation of Visual Stories via Semantic Consistency PyTorch code for the NAACL 2021 paper "Improving Generation and Evaluat

An Evaluation of Generative Adversarial Networks for Collaborative Filtering.

An Evaluation of Generative Adversarial Networks for Collaborative Filtering. This repository was developed by Fernando B. Pérez Maurera. Fernando is

The Turing Change Point Detection Benchmark: An Extensive Benchmark Evaluation of Change Point Detection Algorithms on real-world data

Turing Change Point Detection Benchmark Welcome to the repository for the Turing Change Point Detection Benchmark, a benchmark evaluation of change po

General Assembly's 2015 Data Science course in Washington, DC

DAT8 Course Repository Course materials for General Assembly's Data Science course in Washington, DC (8/18/15 - 10/29/15). Instructor: Kevin Markham (

Automatic caption evaluation metric based on typicality analysis.

SeMantic and linguistic UndeRstanding Fusion (SMURF) Automatic caption evaluation metric described in the paper "SMURF: SeMantic and linguistic UndeRs

Text Summarization - WCN — Weighted Contextual N-gram method for evaluation of Text Summarization

Text Summarization WCN — Weighted Contextual N-gram method for evaluation of Text Summarization In this project, I fine tune T5 model on Extreme Summa

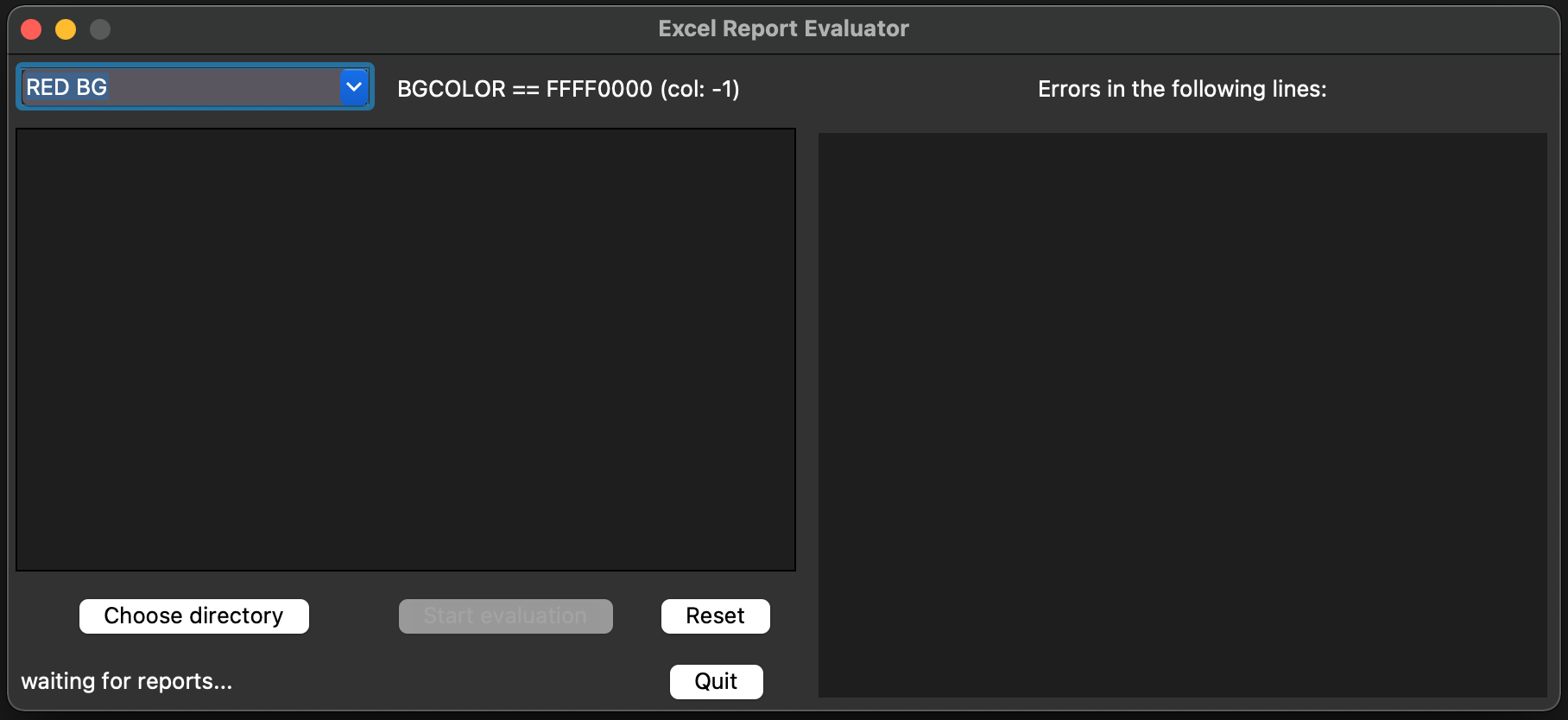

Excel-report-evaluator - A simple Python GUI application to aid with bulk evaluation of Microsoft Excel reports.

Excel Report Evaluator Simple Python GUI with Tkinter for evaluating Microsoft Excel reports (.xlsx-Files). Usage Start main.py and choose one of the

Image Matching Evaluation

Image Matching Evaluation (IME) IME provides to test any feature matching algorithm on datasets containing ground-truth homographies. Also, one can re

An efficient PyTorch implementation of the evaluation metrics in recommender systems.

recsys_metrics An efficient PyTorch implementation of the evaluation metrics in recommender systems. Overview • Installation • How to use • Benchmark

The official pytorch implementation of ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias

ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias Introduction | Updates | Usage | Results&Pretrained Models | Statement | Intr

GCRC: A Gaokao Chinese Reading Comprehension dataset for interpretable Evaluation

GCRC GCRC: A New Challenging MRC Dataset from Gaokao Chinese for Explainable Eva

Place holder for HOPE: a human-centric and task-oriented MT evaluation framework using professional post-editing

HOPE: A Task-Oriented and Human-Centric Evaluation Framework Using Professional Post-Editing Towards More Effective MT Evaluation Place holder for dat

Modeval (or Modular Eval) is a modular and secure string evaluation library that can be used to create custom parsers or interpreters.

modeval Modeval (or Modular Eval) is a modular and secure string evaluation library that can be used to create custom parsers or interpreters. Basic U

Modeval (or Modular Eval) is a modular and secure string evaluation library that can be used to create custom parsers or interpreters.

modeval Modeval (or Modular Eval) is a modular and secure string evaluation library that can be used to create custom parsers or interpreters. Basic U

Latte: Cross-framework Python Package for Evaluation of Latent-based Generative Models

Cross-framework Python Package for Evaluation of Latent-based Generative Models Latte Latte (for LATent Tensor Evaluation) is a cross-framework Python

Implementation for "Domain-Specific Bias Filtering for Single Labeled Domain Generalization"

DSBF Introduction This repository contains the implementation code for paper: Domain-Specific Bias Filtering for Single Labeled Domain Generalization

Evaluation toolkit of the informative tracking benchmark comprising 9 scenarios, 180 diverse videos, and new challenges.

Informative-tracking-benchmark Informative tracking benchmark (ITB) higher diversity. It contains 9 representative scenarios and 180 diverse videos. m

Implementation for paper BLEU: a Method for Automatic Evaluation of Machine Translation

BLEU Score Implementation for paper: BLEU: a Method for Automatic Evaluation of Machine Translation Author: Ba Ngoc from ProtonX BLEU score is a popul

Code release for General Greedy De-bias Learning

General Greedy De-bias for Dataset Biases This is an extention of "Greedy Gradient Ensemble for Robust Visual Question Answering" (ICCV 2021, Oral). T

Evaluation of file formats in the context of geo-referenced 3D geometries.

Geo-referenced Geometry File Formats Classic geometry file formats as .obj, .off, .ply, .stl or .dae do not support the utilization of coordinate syst

Official code for "On the Frequency Bias of Generative Models", NeurIPS 2021

Frequency Bias of Generative Models Generator Testbed Discriminator Testbed This repository contains official code for the paper On the Frequency Bias

Training and Evaluation Code for Neural Volumes

Neural Volumes This repository contains training and evaluation code for the paper Neural Volumes. The method learns a 3D volumetric representation of

The RDT protocol (RDT3.0,GBN,SR) implementation and performance evaluation code using socket

소켓을 이용한 RDT protocols (RDT3.0,GBN,SR) 구현 및 성능 평가 코드 입니다. 코드를 실행할때 리시버를 먼저 실행하세요. 성능 평가 코드는 패킷 전송 과정을 제외하고 시간당 전송률을 출력합니다. RDT3.0 GBN SR(버그 발견으로 구현중 입니

Evaluation toolkit of the informative tracking benchmark comprising 9 scenarios, 180 diverse videos, and new challenges.

Informative-tracking-benchmark Informative tracking benchmark (ITB) higher diversity. It contains 9 representative scenarios and 180 diverse videos. m

A PyTorch library and evaluation platform for end-to-end compression research

CompressAI CompressAI (compress-ay) is a PyTorch library and evaluation platform for end-to-end compression research. CompressAI currently provides: c

Atari2600 Training / Evaluation with RLlib

Training Atari2600 by Reinforcement Learning Train Atari2600 and check how it works! How to Setup You can setup packages on your local env. $ make set

Official implementation of the paper "Light Field Networks: Neural Scene Representations with Single-Evaluation Rendering"

Light Field Networks Project Page | Paper | Data | Pretrained Models Vincent Sitzmann*, Semon Rezchikov*, William Freeman, Joshua Tenenbaum, Frédo Dur

HDMapNet: A Local Semantic Map Learning and Evaluation Framework

HDMapNet_devkit Devkit for HDMapNet. HDMapNet: A Local Semantic Map Learning and Evaluation Framework Qi Li, Yue Wang, Yilun Wang, Hang Zhao [Paper] [

Code for "The Box Size Confidence Bias Harms Your Object Detector"

The Box Size Confidence Bias Harms Your Object Detector - Code Disclaimer: This repository is for research purposes only. It is designed to maintain r

Machine learning model evaluation made easy: plots, tables, HTML reports, experiment tracking and Jupyter notebook analysis.

sklearn-evaluation Machine learning model evaluation made easy: plots, tables, HTML reports, experiment tracking, and Jupyter notebook analysis. Suppo

A comprehensive set of fairness metrics for datasets and machine learning models, explanations for these metrics, and algorithms to mitigate bias in datasets and models.

AI Fairness 360 (AIF360) The AI Fairness 360 toolkit is an extensible open-source library containg techniques developed by the research community to h

Team collaborative evaluation tracker.

Team collaborative evaluation tracker.

TextWorld is a sandbox learning environment for the training and evaluation of reinforcement learning (RL) agents on text-based games.

TextWorld A text-based game generator and extensible sandbox learning environment for training and testing reinforcement learning (RL) agents. Also ch

Label-Free Model Evaluation with Semi-Structured Dataset Representations

Label-Free Model Evaluation with Semi-Structured Dataset Representations Prerequisites This code uses the following libraries Python 3.7 NumPy PyTorch

Code for "The Box Size Confidence Bias Harms Your Object Detector"

The Box Size Confidence Bias Harms Your Object Detector - Code Disclaimer: This repository is for research purposes only. It is designed to maintain r

A package with multiple bias correction methods for climatic variables, including the QM, DQM, QDM, UQM, and SDM methods

A package with multiple bias correction methods for climatic variables, including the QM, DQM, QDM, UQM, and SDM methods

Training and evaluation codes for the BertGen paper (ACL-IJCNLP 2021)

BERTGEN This repository is the implementation of the paper "BERTGEN: Multi-task Generation through BERT" (https://arxiv.org/abs/2106.03484). The codeb

Label-Free Model Evaluation with Semi-Structured Dataset Representations

Label-Free Model Evaluation with Semi-Structured Dataset Representations Prerequisites This code uses the following libraries Python 3.7 NumPy PyTorch

An open source bias lighting program which syncs up colored lights to the contents of your screen.

About Firelight Firelight is an open source bias lighting program which syncs up colored lights to the contents of your screen or TV, providing an imm

Official Repository for "Robust On-Policy Data Collection for Data Efficient Policy Evaluation" (NeurIPS 2021 Workshop on OfflineRL).

Robust On-Policy Data Collection for Data-Efficient Policy Evaluation Source code of Robust On-Policy Data Collection for Data-Efficient Policy Evalua

(NeurIPS 2021) Realistic Evaluation of Transductive Few-Shot Learning

Realistic evaluation of transductive few-shot learning Introduction This repo contains the code for our NeurIPS 2021 submitted paper "Realistic evalua

Python Single Object Tracking Evaluation

pysot-toolkit The purpose of this repo is to provide evaluation API of Current Single Object Tracking Dataset, including VOT2016 VOT2018 VOT2018-LT OT

Tightness-aware Evaluation Protocol for Scene Text Detection

TIoU-metric Release on 27/03/2019. This repository is built on the ICDAR 2015 evaluation code. If you propose a better metric and require further eval

Fluency ENhanced Sentence-bert Evaluation (FENSE), metric for audio caption evaluation. And Benchmark dataset AudioCaps-Eval, Clotho-Eval.

FENSE The metric, Fluency ENhanced Sentence-bert Evaluation (FENSE), for audio caption evaluation, proposed in the paper "Can Audio Captions Be Evalua

Prevent `CUDA error: out of memory` in just 1 line of code.

🐨 Koila Koila solves CUDA error: out of memory error painlessly. Fix it with just one line of code, and forget it. 🚀 Features 🙅 Prevents CUDA error

GradAttack is a Python library for easy evaluation of privacy risks in public gradients in Federated Learning

GradAttack is a Python library for easy evaluation of privacy risks in public gradients in Federated Learning, as well as corresponding mitigation strategies.

Data and evaluation code for the paper WikiNEuRal: Combined Neural and Knowledge-based Silver Data Creation for Multilingual NER (EMNLP 2021).

Data and evaluation code for the paper WikiNEuRal: Combined Neural and Knowledge-based Silver Data Creation for Multilingual NER. @inproceedings{tedes

Rethinking of Pedestrian Attribute Recognition: A Reliable Evaluation under Zero-Shot Pedestrian Identity Setting

Pytorch Pedestrian Attribute Recognition: A strong PyTorch baseline of pedestrian attribute recognition and multi-label classification.

Training code and evaluation benchmarks for the "Self-Supervised Policy Adaptation during Deployment" paper.

Self-Supervised Policy Adaptation during Deployment PyTorch implementation of PAD and evaluation benchmarks from Self-Supervised Policy Adaptation dur

Semi-Supervised Learning for Fine-Grained Classification

Semi-Supervised Learning for Fine-Grained Classification This repo contains the code of: A Realistic Evaluation of Semi-Supervised Learning for Fine-G

A Python package for causal inference using Synthetic Controls

Synthetic Control Methods A Python package for causal inference using synthetic controls This Python package implements a class of approaches to estim

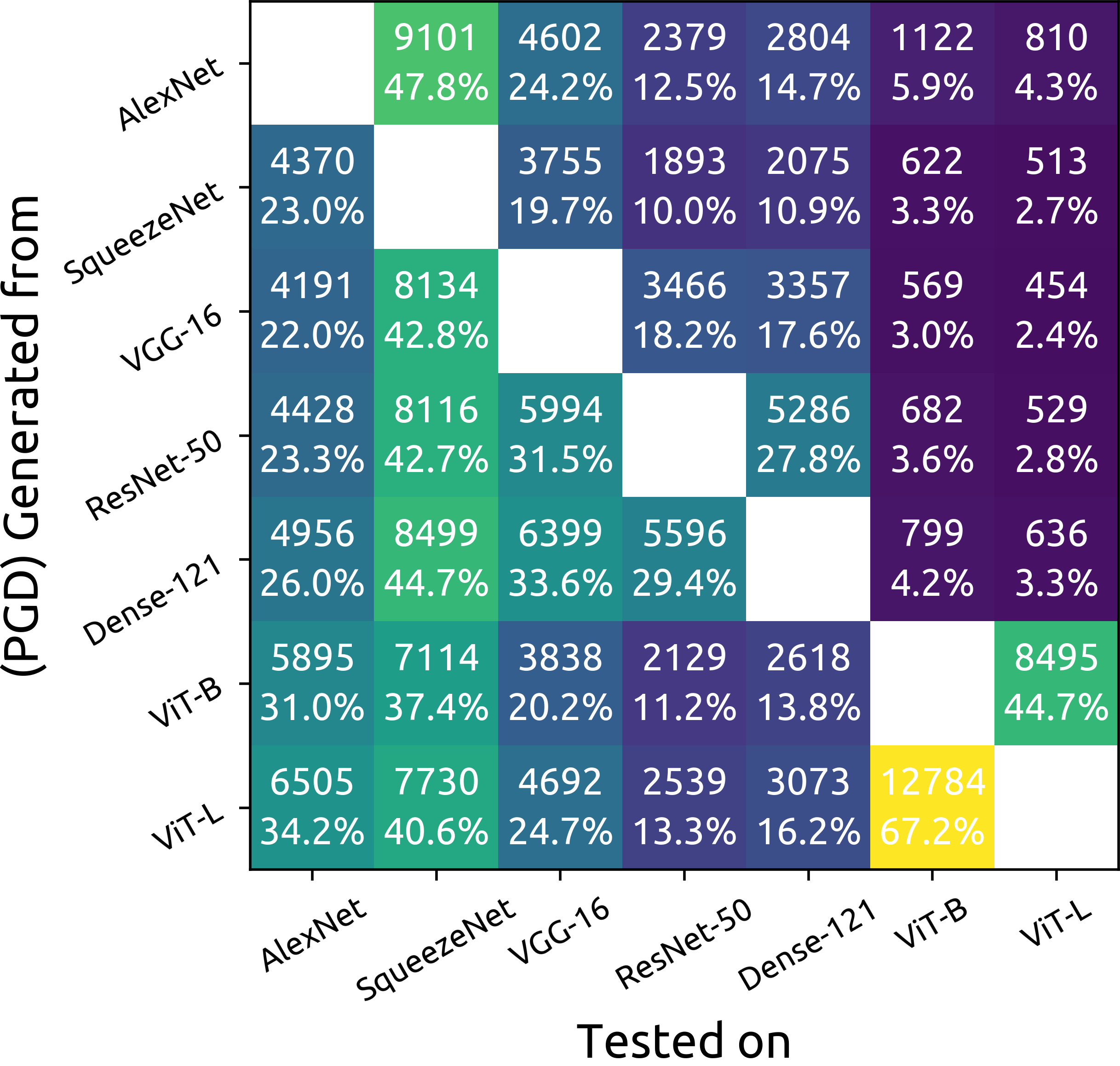

ImageNet Adversarial Image Evaluation

ImageNet Adversarial Image Evaluation This repository contains the code and some materials used in the experimental work presented in the following pa

Source code and notebooks to reproduce experiments and benchmarks on Bias Faces in the Wild (BFW).

Face Recognition: Too Bias, or Not Too Bias? Robinson, Joseph P., Gennady Livitz, Yann Henon, Can Qin, Yun Fu, and Samson Timoner. "Face recognition:

Lab Materials for MIT 6.S191: Introduction to Deep Learning

This repository contains all of the code and software labs for MIT 6.S191: Introduction to Deep Learning! All lecture slides and videos are available

Accelerating model creation and evaluation.

EmeraldML A machine learning library for streamlining the process of (1) cleaning and splitting data, (2) training, optimizing, and testing various mo

Politecnico of Turin Thesis: "Implementation and Evaluation of an Educational Chatbot based on NLP Techniques"

THESIS_CAIRONE_FIORENTINO Politecnico of Turin Thesis: "Implementation and Evaluation of an Educational Chatbot based on NLP Techniques" GENERATE TOKE

Automatic Video Captioning Evaluation Metric --- EMScore

Automatic Video Captioning Evaluation Metric --- EMScore Overview For an illustration, EMScore can be computed as: Installation modify the encode_text

Code for training and evaluation of the model from "Language Generation with Recurrent Generative Adversarial Networks without Pre-training"

Language Generation with Recurrent Generative Adversarial Networks without Pre-training Code for training and evaluation of the model from "Language G

A high performance implementation of HDBSCAN clustering.

HDBSCAN HDBSCAN - Hierarchical Density-Based Spatial Clustering of Applications with Noise. Performs DBSCAN over varying epsilon values and integrates

Non-Metric Space Library (NMSLIB): An efficient similarity search library and a toolkit for evaluation of k-NN methods for generic non-metric spaces.

Non-Metric Space Library (NMSLIB) Important Notes NMSLIB is generic but fast, see the results of ANN benchmarks. A standalone implementation of our fa

CLUES: Few-Shot Learning Evaluation in Natural Language Understanding

CLUES: Few-Shot Learning Evaluation in Natural Language Understanding This repo contains the data and source code for baseline models in the NeurIPS 2

reXmeX is recommender system evaluation metric library.

A general purpose recommender metrics library for fair evaluation.

XAI - An eXplainability toolbox for machine learning

XAI - An eXplainability toolbox for machine learning XAI is a Machine Learning library that is designed with AI explainability in its core. XAI contai

Fit interpretable models. Explain blackbox machine learning.

InterpretML - Alpha Release In the beginning machines learned in darkness, and data scientists struggled in the void to explain them. Let there be lig

The project covers common metrics for super-resolution performance evaluation.

Super-Resolution Performance Evaluation Code The project covers common metrics for super-resolution performance evaluation. Metrics support The script

Prototype application for GCM bias-correction and downscaling

dodola Prototype application for GCM bias-correction and downscaling This is an unstable prototype. This is under heavy development. Features Nothing!

Source code, data, and evaluation details for “Cross-Lingual Citations in English Papers: A Large-Scale Analysis of Prevalence, Formation, and Ramifications”

Analysis of cross-lingual citations in English papers Contents initial_analysis Source code, data, and evaluation details as published at ICADL2020 ci

Multi-Modal Fingerprint Presentation Attack Detection: Evaluation On A New Dataset

PADISI USC Dataset This repository analyzes the PADISI-Finger dataset introduced in Multi-Modal Fingerprint Presentation Attack Detection: Evaluation

Codes for the ICCV'21 paper "FREE: Feature Refinement for Generalized Zero-Shot Learning"

FREE This repository contains the reference code for the paper "FREE: Feature Refinement for Generalized Zero-Shot Learning". [arXiv][Paper] 1. Prepar

Security evaluation module with onnx, pytorch, and SecML.

🚀 🐼 🔥 PandaVision Integrate and automate security evaluations with onnx, pytorch, and SecML! Installation Starting the server without Docker If you

Web Crawlers for Data Labelling of Malicious Domain Detection & IP Reputation Evaluation

Web Crawlers for Data Labelling of Malicious Domain Detection & IP Reputation Evaluation This repository provides two web crawlers to label domain nam

CLUES: Few-Shot Learning Evaluation in Natural Language Understanding

CLUES: Few-Shot Learning Evaluation in Natural Language Understanding This repo contains the data and source code for baseline models in the NeurIPS 2

Suite of tools for retrieving USGS NWIS observations and evaluating National Water Model (NWM) data.

Documentation OWPHydroTools GitHub pages documentation Motivation We developed OWPHydroTools with data scientists in mind. We attempted to ensure the

Pipeline and Dataset helpers for complex algorithm evaluation.

tpcp - Tiny Pipelines for Complex Problems A generic way to build object-oriented datasets and algorithm pipelines and tools to evaluate them pip inst