1649 Repositories

Python natural-language-processing Libraries

EMNLP 2021 - Frustratingly Simple Pretraining Alternatives to Masked Language Modeling

Frustratingly Simple Pretraining Alternatives to Masked Language Modeling This is the official implementation for "Frustratingly Simple Pretraining Al

Türkçe küfürlü içerikleri bulan bir yapay zeka kütüphanesi / An ML library for profanity detection in Turkish sentences

"Kötü söz sahibine aittir." -Anonim Nedir? sinkaf uygunsuz yorumların bulunmasını sağlayan bir python kütüphanesidir. Farkı nedir? Diğer algoritmalard

Dataloader tools for language modelling

Installation: pip install lm_dataloader Design Philosophy A library to unify lm dataloading at large scale Simple interface, any tokenizer can be inte

🌈 PyTorch Implementation for EMNLP'21 Findings "Reasoning Visual Dialog with Sparse Graph Learning and Knowledge Transfer"

SGLKT-VisDial Pytorch Implementation for the paper: Reasoning Visual Dialog with Sparse Graph Learning and Knowledge Transfer Gi-Cheon Kang, Junseok P

EMNLP 2021 Adapting Language Models for Zero-shot Learning by Meta-tuning on Dataset and Prompt Collections

Adapting Language Models for Zero-shot Learning by Meta-tuning on Dataset and Prompt Collections Ruiqi Zhong, Kristy Lee*, Zheng Zhang*, Dan Klein EMN

An original implementation of "Noisy Channel Language Model Prompting for Few-Shot Text Classification"

Channel LM Prompting (and beyond) This includes an original implementation of Sewon Min, Mike Lewis, Hannaneh Hajishirzi, Luke Zettlemoyer. "Noisy Cha

Ongoing research training transformer language models at scale, including: BERT & GPT-2

Megatron (1 and 2) is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA.

The source code of CVPR 2019 paper "Deep Exemplar-based Video Colorization".

Deep Exemplar-based Video Colorization (Pytorch Implementation) Paper | Pretrained Model | Youtube video 🔥 | Colab demo Deep Exemplar-based Video Col

Code for our ALiBi method for transformer language models.

Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation This repository contains the code and models for our paper Tra

CrossNorm and SelfNorm for Generalization under Distribution Shifts (ICCV 2021)

CrossNorm (CN) and SelfNorm (SN) (Accepted at ICCV 2021) This is the official PyTorch implementation of our CNSN paper, in which we propose CrossNorm

CrossNorm and SelfNorm for Generalization under Distribution Shifts (ICCV 2021)

CrossNorm (CN) and SelfNorm (SN) (Accepted at ICCV 2021) This is the official PyTorch implementation of our CNSN paper, in which we propose CrossNorm

StarGANv2-VC: A Diverse, Unsupervised, Non-parallel Framework for Natural-Sounding Voice Conversion

StarGANv2-VC: A Diverse, Unsupervised, Non-parallel Framework for Natural-Sounding Voice Conversion Yinghao Aaron Li, Ali Zare, Nima Mesgarani We pres

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

Python library for the DeepL language translation API.

The DeepL API is a language translation API that allows other computer programs to send texts and documents to DeepL's servers and receive high-quality translations. This opens a whole universe of opportunities for developers: any translation product you can imagine can now be built on top of DeepL's best-in-class translation technology.

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics.

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics. Jury offers a smooth and easy-to-use interface. It uses datasets for underlying metric computation, and hence adding custom metric is easy as adopting datasets.Metric.

Using a raspberry pi, we listen to the coffee machine and count the number of coffee consumption

A typical datarootsian consumes high-quality fresh coffee in their office environment. The board of dataroots had a very critical decision by the end of 2021-Q2 regarding coffee consumption.

This is the main repository of open-sourced speech technology by Huawei Noah's Ark Lab.

Speech-Backbones This is the main repository of open-sourced speech technology by Huawei Noah's Ark Lab. Grad-TTS Official implementation of the Grad-

Pipeline for fast building text classification TF-IDF + LogReg baselines.

Text Classification Baseline Pipeline for fast building text classification TF-IDF + LogReg baselines. Usage Instead of writing custom code for specif

A Survey of Natural Language Generation in Task-Oriented Dialogue System (TOD): Recent Advances and New Frontiers

A Survey of Natural Language Generation in Task-Oriented Dialogue System (TOD): Recent Advances and New Frontiers

X-modaler is a versatile and high-performance codebase for cross-modal analytics.

X-modaler X-modaler is a versatile and high-performance codebase for cross-modal analytics. This codebase unifies comprehensive high-quality modules i

Empower Sequence Labeling with Task-Aware Language Model

LM-LSTM-CRF Check Our New NER Toolkit 🚀 🚀 🚀 Inference: LightNER: inference w. models pre-trained / trained w. any following tools, efficiently. Tra

PyTorch implementation of Advantage Actor Critic (A2C), Proximal Policy Optimization (PPO), Scalable trust-region method for deep reinforcement learning using Kronecker-factored approximation (ACKTR) and Generative Adversarial Imitation Learning (GAIL).

pytorch-a2c-ppo-acktr Update (April 12th, 2021) PPO is great, but Soft Actor Critic can be better for many continuous control tasks. Please check out

An almost fully customizable language made in python!

Whython is a project language, the idea of it is that anyone can download and edit the language to make it suitable to what they want.

DEMix Layers for Modular Language Modeling

DEMix This repository contains modeling utilities for "DEMix Layers: Disentangling Domains for Modular Language Modeling" (Gururangan et. al, 2021). T

Evaluation suite for large-scale language models.

This repo contains code for running the evaluations and reproducing the results from the Jurassic-1 Technical Paper (see blog post), with current support for running the tasks through both the AI21 Studio API and OpenAI's GPT3 API.

IMS-Toucan is a toolkit to train state-of-the-art Speech Synthesis models

IMS-Toucan is a toolkit to train state-of-the-art Speech Synthesis models. Everything is pure Python and PyTorch based to keep it as simple and beginner-friendly, yet powerful as possible.

Korean Simple Contrastive Learning of Sentence Embeddings using SKT KoBERT and kakaobrain KorNLU dataset

KoSimCSE Korean Simple Contrastive Learning of Sentence Embeddings implementation using pytorch SimCSE Installation git clone https://github.com/BM-K/

Composed Image Retrieval using Pretrained LANguage Transformers (CIRPLANT)

CIRPLANT This repository contains the code and pre-trained models for Composed Image Retrieval using Pretrained LANguage Transformers (CIRPLANT) For d

Guesslang detects the programming language of a given source code

Detect the programming language of a source code

Data Preparation, Processing, and Visualization for MoVi Data

MoVi-Toolbox Data Preparation, Processing, and Visualization for MoVi Data, https://www.biomotionlab.ca/movi/ MoVi is a large multipurpose dataset of

The official code for paper "R2D2: Recursive Transformer based on Differentiable Tree for Interpretable Hierarchical Language Modeling".

R2D2 This is the official code for paper titled "R2D2: Recursive Transformer based on Differentiable Tree for Interpretable Hierarchical Language Mode

Towers of Babel: Combining Images, Language, and 3D Geometry for Learning Multimodal Vision. ICCV 2021.

Towers of Babel: Combining Images, Language, and 3D Geometry for Learning Multimodal Vision Download links and PyTorch implementation of "Towers of Ba

Library for processing molecules and reactions in python way

Chython [ˈkʌɪθ(ə)n] Library for processing molecules and reactions in python way. Features: Read/write/convert formats: MDL .RDF (.RXN) and .SDF (.MOL

3D position tracking for soccer players with multi-camera videos

This repo contains a full pipeline to support 3D position tracking of soccer players, with multi-view calibrated moving/fixed video sequences as inputs.

Vision-Language Transformer and Query Generation for Referring Segmentation (ICCV 2021)

Vision-Language Transformer and Query Generation for Referring Segmentation Please consider citing our paper in your publications if the project helps

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

Learn meanings behind words is a key element in NLP. This project concentrates on the disambiguation of preposition senses. Therefore, we train a bert-transformer model and surpass the state-of-the-art.

New State-of-the-Art in Preposition Sense Disambiguation Supervisor: Prof. Dr. Alexander Mehler Alexander Henlein Institutions: Goethe University TTLa

😇A pyTorch implementation of the DeepMoji model: state-of-the-art deep learning model for analyzing sentiment, emotion, sarcasm etc

------ Update September 2018 ------ It's been a year since TorchMoji and DeepMoji were released. We're trying to understand how it's being used such t

Ongoing research training transformer language models at scale, including: BERT & GPT-2

What is this fork of Megatron-LM and Megatron-DeepSpeed This is a detached fork of https://github.com/microsoft/Megatron-DeepSpeed, which in itself is

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

Natural Language Processing library built with AllenNLP 🌲🌱

Custom Natural Language Processing with big and small models 🌲🌱

PyTorch implementation for Stochastic Fine-grained Labeling of Multi-state Sign Glosses for Continuous Sign Language Recognition.

Stochastic CSLR This is the PyTorch implementation for the ECCV 2020 paper: Stochastic Fine-grained Labeling of Multi-state Sign Glosses for Continuou

Source code for "Progressive Transformers for End-to-End Sign Language Production" (ECCV 2020)

Progressive Transformers for End-to-End Sign Language Production Source code for "Progressive Transformers for End-to-End Sign Language Production" (B

Sign Language Translation with Transformers (COLING'2020, ECCV'20 SLRTP Workshop)

transformer-slt This repository gathers data and code supporting the experiments in the paper Better Sign Language Translation with STMC-Transformer.

Sign Language Transformers (CVPR'20)

Sign Language Transformers (CVPR'20) This repo contains the training and evaluation code for the paper Sign Language Transformers: Sign Language Trans

Pre-Trained Image Processing Transformer (IPT)

Pre-Trained Image Processing Transformer (IPT) By Hanting Chen, Yunhe Wang, Tianyu Guo, Chang Xu, Yiping Deng, Zhenhua Liu, Siwei Ma, Chunjing Xu, Cha

Code for the paper "VisualBERT: A Simple and Performant Baseline for Vision and Language"

This repository contains code for the following two papers: VisualBERT: A Simple and Performant Baseline for Vision and Language (arxiv) with a short

Multi Task Vision and Language

12-in-1: Multi-Task Vision and Language Representation Learning Please cite the following if you use this code. Code and pre-trained models for 12-in-

(CVPR2021) Kaleido-BERT: Vision-Language Pre-training on Fashion Domain

Kaleido-BERT: Vision-Language Pre-training on Fashion Domain Mingchen Zhuge*, Dehong Gao*, Deng-Ping Fan#, Linbo Jin, Ben Chen, Haoming Zhou, Minghui

VisualGPT: Data-efficient Adaptation of Pretrained Language Models for Image Captioning

VisualGPT Our Paper VisualGPT: Data-efficient Adaptation of Pretrained Language Models for Image Captioning Main Architecture of Our VisualGPT Downloa

The RWKV Language Model

RWKV-LM We propose the RWKV language model, with alternating time-mix and channel-mix layers: The R, K, V are generated by linear transforms of input,

LightSeq is a high performance training and inference library for sequence processing and generation implemented in CUDA

LightSeq: A High Performance Library for Sequence Processing and Generation

Sum-Product Probabilistic Language

Sum-Product Probabilistic Language SPPL is a probabilistic programming language that delivers exact solutions to a broad range of probabilistic infere

Codes for processing meeting summarization datasets AMI and ICSI.

Meeting Summarization Dataset Meeting plays an essential part in our daily life, which allows us to share information and collaborate with others. Wit

esguard provides a Python decorator that waits for processing while monitoring the load of Elasticsearch.

esguard esguard provides a Python decorator that waits for processing while monitoring the load of Elasticsearch. Quick Start You need to launch elast

Implementation of the paper: "SinGAN: Learning a Generative Model from a Single Natural Image"

SinGAN This is an unofficial implementation of SinGAN from someone who's been sitting right next to SinGAN's creator for almost five years. Please ref

API for the GPT-J language model 🦜. Including a FastAPI backend and a streamlit frontend

gpt-j-api 🦜 An API to interact with the GPT-J language model. You can use and test the model in two different ways: Streamlit web app at http://api.v

Implementation for our ICCV2021 paper: Internal Video Inpainting by Implicit Long-range Propagation

Implicit Internal Video Inpainting Implementation for our ICCV2021 paper: Internal Video Inpainting by Implicit Long-range Propagation paper | project

A Repository of Community-Driven Natural Instructions

A Repository of Community-Driven Natural Instructions TLDR; this repository maintains a community effort to create a large collection of tasks and the

This repository is home to the Optimus data transformation plugins for various data processing needs.

Transformers Optimus's transformation plugins are implementations of Task and Hook interfaces that allows execution of arbitrary jobs in optimus. To i

[ICCV, 2021] Cloud Transformers: A Universal Approach To Point Cloud Processing Tasks

Cloud Transformers: A Universal Approach To Point Cloud Processing Tasks This is an official PyTorch code repository of the paper "Cloud Transformers:

Byte-based multilingual transformer TTS for low-resource/few-shot language adaptation.

One model to speak them all 🌎 Audio Language Text ▷ Chinese 人人生而自由,在尊严和权利上一律平等。 ▷ English All human beings are born free and equal in dignity and rig

Benchmark for evaluating open-ended generation

OpenMEVA Contributed by Jian Guan, Zhexin Zhang. Thank Jiaxin Wen for DeBugging. OpenMEVA is a benchmark for evaluating open-ended story generation me

Data and Code for ACL 2021 Paper "Inter-GPS: Interpretable Geometry Problem Solving with Formal Language and Symbolic Reasoning"

Introduction Code and data for ACL 2021 Paper "Inter-GPS: Interpretable Geometry Problem Solving with Formal Language and Symbolic Reasoning". We cons

Code Repo for the ACL21 paper "Common Sense Beyond English: Evaluating and Improving Multilingual LMs for Commonsense Reasoning"

Common Sense Beyond English: Evaluating and Improving Multilingual LMs for Commonsense Reasoning This is the Github repository of our paper, "Common S

![[IJCAI'21] Deep Automatic Natural Image Matting](https://github.com/JizhiziLi/AIM/raw/master/demo/network.png)

[IJCAI'21] Deep Automatic Natural Image Matting

Deep Automatic Natural Image Matting [IJCAI-21] This is the official repository of the paper Deep Automatic Natural Image Matting. Introduction | Netw

Versatile Generative Language Model

Versatile Generative Language Model This is the implementation of the paper: Exploring Versatile Generative Language Model Via Parameter-Efficient Tra

A PyTorch implementation of Radio Transformer Networks from the paper "An Introduction to Deep Learning for the Physical Layer".

An Introduction to Deep Learning for the Physical Layer An usable PyTorch implementation of the noisy autoencoder infrastructure in the paper "An Intr

Implementation of EMNLP 2017 Paper "Natural Language Does Not Emerge 'Naturally' in Multi-Agent Dialog" using PyTorch and ParlAI

Language Emergence in Multi Agent Dialog Code for the Paper Natural Language Does Not Emerge 'Naturally' in Multi-Agent Dialog Satwik Kottur, José M.

KLUE-baseline contains the baseline code for the Korean Language Understanding Evaluation (KLUE) benchmark.

KLUE Baseline Korean(한국어) KLUE-baseline contains the baseline code for the Korean Language Understanding Evaluation (KLUE) benchmark. See our paper fo

performing moving objects segmentation using image processing techniques with opencv and numpy

Moving Objects Segmentation On this project I tried to perform moving objects segmentation using background subtraction technique. the introduced meth

MASS: Masked Sequence to Sequence Pre-training for Language Generation

MASS: Masked Sequence to Sequence Pre-training for Language Generation

Active learning for text classification in Python

Active Learning allows you to efficiently label training data in a small-data scenario.

meProp: Sparsified Back Propagation for Accelerated Deep Learning

meProp The codes were used for the paper meProp: Sparsified Back Propagation for Accelerated Deep Learning with Reduced Overfitting (ICML 2017) [pdf]

Image Deblurring using Generative Adversarial Networks

DeblurGAN arXiv Paper Version Pytorch implementation of the paper DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks. Our netwo

Pytorch Implementation of paper "Noisy Natural Gradient as Variational Inference"

Noisy Natural Gradient as Variational Inference PyTorch implementation of Noisy Natural Gradient as Variational Inference. Requirements Python 3 Pytor

Train an RL agent to execute natural language instructions in a 3D Environment (PyTorch)

Gated-Attention Architectures for Task-Oriented Language Grounding This is a PyTorch implementation of the AAAI-18 paper: Gated-Attention Architecture

PyTorch implementation of the wavelet analysis from Torrence & Compo

Continuous Wavelet Transforms in PyTorch This is a PyTorch implementation for the wavelet analysis outlined in Torrence and Compo (BAMS, 1998). The co

PyTorch implementation of convolutional neural networks-based text-to-speech synthesis models

Deepvoice3_pytorch PyTorch implementation of convolutional networks-based text-to-speech synthesis models: arXiv:1710.07654: Deep Voice 3: Scaling Tex

🐥A PyTorch implementation of OpenAI's finetuned transformer language model with a script to import the weights pre-trained by OpenAI

PyTorch implementation of OpenAI's Finetuned Transformer Language Model This is a PyTorch implementation of the TensorFlow code provided with OpenAI's

NLPShala , the best IDE for all Natural language processing tasks.

The revolutionary IDE for all NLP (Natural language processing) stuffs on the internet.

Simple reuse of partial HTML page templates in the Jinja template language for Python web frameworks.

Jinja Partials Simple reuse of partial HTML page templates in the Jinja template language for Python web frameworks. (There is also a Pyramid/Chameleo

Write reproducible code for getting and processing ChEMBL

chembl_downloader Don't worry about downloading/extracting ChEMBL or versioning - just use chembl_downloader to write code that knows how to download

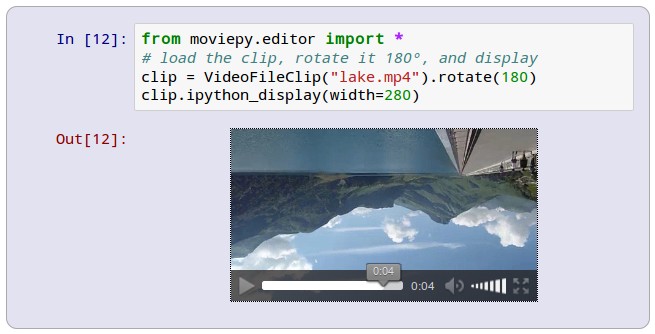

MoviePy is a Python library for video editing, can read and write all the most common audio and video formats

MoviePy is a Python library for video editing: cutting, concatenations, title insertions, video compositing (a.k.a. non-linear editing), video processing, and creation of custom effects. See the gallery for some examples of use.

GPT-Code-Clippy (GPT-CC) is an open source version of GitHub Copilot, a language model

GPT-Code-Clippy (GPT-CC) is an open source version of GitHub Copilot, a language model -- based on GPT-3, called GPT-Codex -- that is fine-tuned on publicly available code from GitHub.

Learned Token Pruning for Transformers

LTP: Learned Token Pruning for Transformers Check our paper for more details. Installation We follow the same installation procedure as the original H

ICML 21 - Voice2Series: Reprogramming Acoustic Models for Time Series Classification

Voice2Series-Reprogramming Voice2Series: Reprogramming Acoustic Models for Time Series Classification International Conference on Machine Learning (IC

Deduplicating Training Data Makes Language Models Better

Deduplicating Training Data Makes Language Models Better This repository contains code to deduplicate language model datasets as descrbed in the paper

[ICML 2021] Towards Understanding and Mitigating Social Biases in Language Models

Towards Understanding and Mitigating Social Biases in Language Models This repo contains code and data for evaluating and mitigating bias from generat

QVHighlights: Detecting Moments and Highlights in Videos via Natural Language Queries

Moment-DETR QVHighlights: Detecting Moments and Highlights in Videos via Natural Language Queries Jie Lei, Tamara L. Berg, Mohit Bansal For dataset de

A text augmentation tool for named entity recognition.

neraug This python library helps you with augmenting text data for named entity recognition. Augmentation Example Reference from An Analysis of Simple

Rubrix is a free and open-source tool for exploring and iterating on data for artificial intelligence projects.

Open-source tool for exploring, labeling, and monitoring data for AI projects

An implementation of DeepMind's Relational Recurrent Neural Networks in PyTorch.

relational-rnn-pytorch An implementation of DeepMind's Relational Recurrent Neural Networks (Santoro et al. 2018) in PyTorch. Relational Memory Core (

PyTorch Language Model for 1-Billion Word (LM1B / GBW) Dataset

PyTorch Large-Scale Language Model A Large-Scale PyTorch Language Model trained on the 1-Billion Word (LM1B) / (GBW) dataset Latest Results 39.98 Perp

The Noise Contrastive Estimation for softmax output written in Pytorch

An NCE implementation in pytorch About NCE Noise Contrastive Estimation (NCE) is an approximation method that is used to work around the huge computat

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

Implementation of character based convolutional neural network

Character Based CNN This repo contains a PyTorch implementation of a character-level convolutional neural network for text classification. The model a

PyTorch original implementation of Cross-lingual Language Model Pretraining.

XLM NEW: Added XLM-R model. PyTorch original implementation of Cross-lingual Language Model Pretraining. Includes: Monolingual language model pretrain