1557 Repositories

Python phrase-bert-topic-model Libraries

Source code for "Efficient Training of BERT by Progressively Stacking"

Introduction This repository is the code to reproduce the result of Efficient Training of BERT by Progressively Stacking. The code is based on Fairseq

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed+Megatron trained the world's most powerful language model: MT-530B DeepSpeed is hiring, come join us! DeepSpeed is a deep learning optimizat

[ACL-IJCNLP 2021] "EarlyBERT: Efficient BERT Training via Early-bird Lottery Tickets"

EarlyBERT This is the official implementation for the paper in ACL-IJCNLP 2021 "EarlyBERT: Efficient BERT Training via Early-bird Lottery Tickets" by

Code for the paper "BERT Loses Patience: Fast and Robust Inference with Early Exit".

Patience-based Early Exit Code for the paper "BERT Loses Patience: Fast and Robust Inference with Early Exit". NEWS: We now have a better and tidier i

ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

ALBERT ***************New March 28, 2020 *************** Add a colab tutorial to run fine-tuning for GLUE datasets. ***************New January 7, 2020

This is the code for Compressing BERT: Studying the Effects of Weight Pruning on Transfer Learning

This is the code for Compressing BERT: Studying the Effects of Weight Pruning on Transfer Learning It includes /bert, which is the original BERT repos

Source code for "FastBERT: a Self-distilling BERT with Adaptive Inference Time".

FastBERT Source code for "FastBERT: a Self-distilling BERT with Adaptive Inference Time". Good News 2021/10/29 - Code: Code of FastPLM is released on

DeeBERT: Dynamic Early Exiting for Accelerating BERT Inference

DeeBERT This is the code base for the paper DeeBERT: Dynamic Early Exiting for Accelerating BERT Inference. Code in this repository is also available

Repo for EMNLP 2021 paper "Beyond Preserved Accuracy: Evaluating Loyalty and Robustness of BERT Compression"

beyond-preserved-accuracy Repo for EMNLP 2021 paper "Beyond Preserved Accuracy: Evaluating Loyalty and Robustness of BERT Compression" How to implemen

Super Tickets in Pre-Trained Language Models: From Model Compression to Improving Generalization (ACL 2021)

Structured Super Lottery Tickets in BERT This repo contains our codes for the paper "Super Tickets in Pre-Trained Language Models: From Model Compress

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators This is our Pytorch implementation for t

Code associated with the Don't Stop Pretraining ACL 2020 paper

dont-stop-pretraining Code associated with the Don't Stop Pretraining ACL 2020 paper Citation @inproceedings{dontstoppretraining2020, author = {Suchi

Research code for "What to Pre-Train on? Efficient Intermediate Task Selection", EMNLP 2021

efficient-task-transfer This repository contains code for the experiments in our paper "What to Pre-Train on? Efficient Intermediate Task Selection".

Multi-Task Deep Neural Networks for Natural Language Understanding

New Release We released Adversarial training for both LM pre-training/finetuning and f-divergence. Large-scale Adversarial training for LMs: ALUM code

Training and evaluation codes for the BertGen paper (ACL-IJCNLP 2021)

BERTGEN This repository is the implementation of the paper "BERTGEN: Multi-task Generation through BERT" (https://arxiv.org/abs/2106.03484). The codeb

Pytorch implementation of Bert and Pals: Projected Attention Layers for Efficient Adaptation in Multi-Task Learning

PyTorch implementation of BERT and PALs Introduction Work by Asa Cooper Stickland and Iain Murray, University of Edinburgh. Code for BERT and PALs; mo

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

Adapter-BERT: Parameter-Efficient Transfer Learning for NLP.

EasyTransfer is designed to make the development of transfer learning in NLP applications easier.

EasyTransfer is designed to make the development of transfer learning in NLP applications easier. The literature has witnessed the success of applying

Revisiting Self-Training for Few-Shot Learning of Language Model.

SFLM This is the implementation of the paper Revisiting Self-Training for Few-Shot Learning of Language Model. SFLM is short for self-training for few

Information Gain Filtration (IGF) is a method for filtering domain-specific data during language model finetuning. IGF shows significant improvements over baseline fine-tuning without data filtration.

Information Gain Filtration Information Gain Filtration (IGF) is a method for filtering domain-specific data during language model finetuning. IGF sho

Code for paper "Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs"

This is the codebase for the paper: Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs Directory Structur

A kedro-plugin to serve Kedro Pipelines as API

General informations Software repository Latest release Total downloads Pypi Code health Branch Tests Coverage Links Documentation Deployment Activity

TensorFlow (Python) implementation of DeepTCN model for multivariate time series forecasting.

DeepTCN TensorFlow TensorFlow (Python) implementation of multivariate time series forecasting model introduced in Chen, Y., Kang, Y., Chen, Y., & Wang

DeepFill v1/v2 with Contextual Attention and Gated Convolution, CVPR 2018, and ICCV 2019 Oral

Generative Image Inpainting An open source framework for generative image inpainting task, with the support of Contextual Attention (CVPR 2018) and Ga

Label-Free Model Evaluation with Semi-Structured Dataset Representations

Label-Free Model Evaluation with Semi-Structured Dataset Representations Prerequisites This code uses the following libraries Python 3.7 NumPy PyTorch

Word and phrase lists in CSV

Word Lists Word and phrase lists in CSV, collected from different sources. Oxford Word Lists: oxford-5k.csv - Oxford 3000 and 5000 oxford-opal.csv - O

Train the HRNet model on ImageNet

High-resolution networks (HRNets) for Image classification News [2021/01/20] Add some stronger ImageNet pretrained models, e.g., the HRNet_W48_C_ssld_

This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

BALLAD This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model. Requirements Python3 Pytorch(1.7.

EdiBERT, a generative model for image editing

EdiBERT, a generative model for image editing EdiBERT is a generative model based on a bi-directional transformer, suited for image manipulation. The

CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer

CycleTransGAN-EVC CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer Demo emotion CycleTransGAN CycleTransGAN Cycle

This repository is for the preprint "A generative nonparametric Bayesian model for whole genomes"

BEAR Overview This repository contains code associated with the preprint A generative nonparametric Bayesian model for whole genomes (2021), which pro

Code for "On Memorization in Probabilistic Deep Generative Models"

On Memorization in Probabilistic Deep Generative Models This repository contains the code necessary to reproduce the experiments in On Memorization in

Pytorch implementation of the paper "Topic Modeling Revisited: A Document Graph-based Neural Network Perspective"

Graph Neural Topic Model (GNTM) This is the pytorch implementation of the paper "Topic Modeling Revisited: A Document Graph-based Neural Network Persp

Toolbox to analyze temporal context invariance of deep neural networks

PyTCI A toolbox that estimates the integration window of a sensory response using the "Temporal Context Invariance" paradigm (TCI). The TCI method Int

Code for models used in Bashiri et al., "A Flow-based latent state generative model of neural population responses to natural images".

A Flow-based latent state generative model of neural population responses to natural images Code for "A Flow-based latent state generative model of ne

[NeurIPS 2021] "G-PATE: Scalable Differentially Private Data Generator via Private Aggregation of Teacher Discriminators"

G-PATE This is the official code base for our NeurIPS 2021 paper: "G-PATE: Scalable Differentially Private Data Generator via Private Aggregation of T

Mouse Brain in the Model Zoo

Deep Neural Mouse Brain Modeling This is the repository for the ongoing deep neural mouse modeling project, an attempt to characterize the representat

Official Implementation for Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation

Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation We present a generic image-to-image translation framework, pixel2style2pixel (pSp

A Simple Long-Tailed Rocognition Baseline via Vision-Language Model

BALLAD This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model. Requirements Python3 Pytorch(1.7.

Official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

A paper list of pre-trained language models (PLMs).

Large-scale pre-trained language models (PLMs) such as BERT and GPT have achieved great success and become a milestone in NLP.

Search with BERT vectors in Solr and Elasticsearch

Search with BERT vectors in Solr and Elasticsearch

Various Algorithms for Short Text Mining

Short Text Mining in Python Introduction This package shorttext is a Python package that facilitates supervised and unsupervised learning for short te

A lightweight interface for reading in output from the Weather Research and Forecasting (WRF) model into xarray Dataset

xwrf A lightweight interface for reading in output from the Weather Research and Forecasting (WRF) model into xarray Dataset. The primary objective of

Fluency ENhanced Sentence-bert Evaluation (FENSE), metric for audio caption evaluation. And Benchmark dataset AudioCaps-Eval, Clotho-Eval.

FENSE The metric, Fluency ENhanced Sentence-bert Evaluation (FENSE), for audio caption evaluation, proposed in the paper "Can Audio Captions Be Evalua

Project to deploy a machine learning model based on Titanic dataset from Kaggle

kaggle_titanic_deploy Project to deploy a machine learning model based on Titanic dataset from Kaggle In this project we used the Titanic dataset from

Official Implementation of SWAGAN: A Style-based Wavelet-driven Generative Model

Official Implementation of SWAGAN: A Style-based Wavelet-driven Generative Model SWAGAN: A Style-based Wavelet-driven Generative Model Rinon Gal, Dana

Official implementation of VQ-Diffusion: Vector Quantized Diffusion Model for Text-to-Image Synthesis

Official implementation of VQ-Diffusion: Vector Quantized Diffusion Model for Text-to-Image Synthesis

![[NeurIPS 2021] Deceive D: Adaptive Pseudo Augmentation for GAN Training with Limited Data](https://github.com/EndlessSora/DeceiveD/raw/main/resources/teaser.jpg)

[NeurIPS 2021] Deceive D: Adaptive Pseudo Augmentation for GAN Training with Limited Data

Deceive D: Adaptive Pseudo Augmentation for GAN Training with Limited Data (NeurIPS 2021) This repository provides the official PyTorch implementation

Code for paper "Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs"

This is the codebase for the paper: Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs Directory Structur

Code for unmixing audio signals in four different stems "drums, bass, vocals, others". The code is adapted from "Jukebox: A Generative Model for Music"

Status: Archive (code is provided as-is, no updates expected) Disclaimer This code is a based on "Jukebox: A Generative Model for Music" Paper We adju

Pre-Training 3D Point Cloud Transformers with Masked Point Modeling

Point-BERT: Pre-Training 3D Point Cloud Transformers with Masked Point Modeling Created by Xumin Yu*, Lulu Tang*, Yongming Rao*, Tiejun Huang, Jie Zho

Anomaly Localization in Model Gradients Under Backdoor Attacks Against Federated Learning

Federated_Learning This repo provides a federated learning framework that allows to carry out backdoor attacks under varying conditions. This is a ker

A simple rest api serving a deep learning model that classifies human gender based on their faces. (vgg16 transfare learning)

this is a simple rest api serving a deep learning model that classifies human gender based on their faces. (vgg16 transfare learning)

Train an imgs.ai model on your own dataset

imgs.ai is a fast, dataset-agnostic, deep visual search engine for digital art history based on neural network embeddings.

LAnguage Model Analysis

LAMA: LAnguage Model Analysis LAMA is a probe for analyzing the factual and commonsense knowledge contained in pretrained language models. The dataset

Count the MACs / FLOPs of your PyTorch model.

THOP: PyTorch-OpCounter How to install pip install thop (now continously intergrated on Github actions) OR pip install --upgrade git+https://github.co

Pre-trained Deep Learning models and demos (high quality and extremely fast)

OpenVINO™ Toolkit - Open Model Zoo repository This repository includes optimized deep learning models and a set of demos to expedite development of hi

Selenium Page Object Model with Python

Page-object-model (POM) is a pattern that you can apply it to develop efficient automation framework.

IMBENS: class-imbalanced ensemble learning in Python.

IMBENS: class-imbalanced ensemble learning in Python. Links: [Documentation] [Gallery] [PyPI] [Changelog] [Source] [Download] [知乎/Zhihu] [中文README] [a

Research code for the paper "Variational Gibbs inference for statistical estimation from incomplete data".

Variational Gibbs inference (VGI) This repository contains the research code for Simkus, V., Rhodes, B., Gutmann, M. U., 2021. Variational Gibbs infer

An open-source Kazakh named entity recognition dataset (KazNERD), annotation guidelines, and baseline NER models.

Kazakh Named Entity Recognition This repository contains an open-source Kazakh named entity recognition dataset (KazNERD), named entity annotation gui

Codebase for Amodal Segmentation through Out-of-Task andOut-of-Distribution Generalization with a Bayesian Model

Codebase for Amodal Segmentation through Out-of-Task andOut-of-Distribution Generalization with a Bayesian Model

An optimized prompt tuning strategy comparable to fine-tuning across model scales and tasks.

P-tuning v2 P-Tuning v2: Prompt Tuning Can Be Comparable to Finetuning Universally Across Scales and Tasks An optimized prompt tuning strategy achievi

Redis OM Python makes it easy to model Redis data in your Python applications.

Object mapping, and more, for Redis and Python Redis OM Python makes it easy to model Redis data in your Python applications. Redis OM Python | Redis

Tools to create pixel-wise object masks, bounding box labels (2D and 3D) and 3D object model (PLY triangle mesh) for object sequences filmed with an RGB-D camera.

Tools to create pixel-wise object masks, bounding box labels (2D and 3D) and 3D object model (PLY triangle mesh) for object sequences filmed with an RGB-D camera. This project prepares training and testing data for various deep learning projects such as 6D object pose estimation projects singleshotpose, as well as object detection and instance segmentation projects.

Pytorch implementation for Patient Knowledge Distillation for BERT Model Compression

Patient Knowledge Distillation for BERT Model Compression Knowledge distillation for BERT model Installation Run command below to install the environm

An updated version of virtual model making

Model-Swap-Face v2 这个项目是基于stylegan2 pSp制作的,比v1版本Model-Swap-Face在推理速度和图像质量上有一定提升。主要的功能是将虚拟模特进行环球不同区域的风格转换,目前转换器提供西欧模特、东亚模特和北非模特三种主流的风格样式,可帮我们实现生产资料零成

Keras Model Implementation Walkthrough

Keras Model Implementation Walkthrough

Python scripts for performing stereo depth estimation using the MobileStereoNet model in Tensorflow Lite.

TFLite-MobileStereoNet Python scripts for performing stereo depth estimation using the MobileStereoNet model in Tensorflow Lite. Stereo depth estimati

A logistic regression model for health insurance purchasing prediction

Logistic_Regression_Model A logistic regression model for health insurance purchasing prediction This code is using these packages, so please make sur

Haystack is an open source NLP framework that leverages Transformer models.

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want

Simple and Distributed Machine Learning

Synapse Machine Learning SynapseML (previously MMLSpark) is an open source library to simplify the creation of scalable machine learning pipelines. Sy

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

Conversational text Analysis using various NLP techniques

PyConverse Let me try first Installation pip install pyconverse Usage Please try this notebook that demos the core functionalities: basic usage noteb

Official pytorch implementation of the paper: "SinGAN: Learning a Generative Model from a Single Natural Image"

SinGAN Project | Arxiv | CVF | Supplementary materials | Talk (ICCV`19) Official pytorch implementation of the paper: "SinGAN: Learning a Generative M

SMPL-X: A new joint 3D model of the human body, face and hands together

SMPL-X: A new joint 3D model of the human body, face and hands together [Paper Page] [Paper] [Supp. Mat.] Table of Contents License Description News I

Python scripts for performing stereo depth estimation using the MobileStereoNet model in ONNX

ONNX-MobileStereoNet Python scripts for performing stereo depth estimation using the MobileStereoNet model in ONNX Stereo depth estimation on the cone

Forecasting prices using Facebook/Meta's Prophet model

CryptoForecasting using Machine and Deep learning (Part 1) CryptoForecasting using Machine Learning The main aspect of predicting the stock-related da

Generate text captions for images from their CLIP embeddings. Includes PyTorch model code and example training script.

clip-text-decoder Generate text captions for images from their CLIP embeddings. Includes PyTorch model code and example training script. Example Predi

A linear regression model for house price prediction

Linear_Regression_Model A linear regression model for house price prediction. This code is using these packages, so please make sure your have install

HuggingTweets - Train a model to generate tweets

HuggingTweets - Train a model to generate tweets Create in 5 minutes a tweet generator based on your favorite Tweeter Make my own model with the demo

Reproduction repository for the MDX 2021 Hybrid Demucs model

Submission This is the submission for MDX 2021 Track A, for Track B go to the track_b branch. Submission Summary Submission ID: 151378 Submitter: defo

Simple converter for deploying Stable-Baselines3 model to TFLite and/or Coral

Running SB3 developed agents on TFLite or Coral Introduction I've been using Stable-Baselines3 to train agents against some custom Gyms, some of which

PeCo: Perceptual Codebook for BERT Pre-training of Vision Transformers

PeCo: Perceptual Codebook for BERT Pre-training of Vision Transformers

The code for the NeurIPS 2021 paper "A Unified View of cGANs with and without Classifiers".

Energy-based Conditional Generative Adversarial Network (ECGAN) This is the code for the NeurIPS 2021 paper "A Unified View of cGANs with and without

mPose3D, a mmWave-based 3D human pose estimation model.

mPose3D, a mmWave-based 3D human pose estimation model.

A model checker for verifying properties in epistemic models

Epistemic Model Checker This is a model checker for verifying properties in epistemic models. The goal of the model checker is to check for Pluralisti

Omnidirectional Scene Text Detection with Sequential-free Box Discretization (IJCAI 2019). Including competition model, online demo, etc.

Box_Discretization_Network This repository is built on the pytorch [maskrcnn_benchmark]. The method is the foundation of our ReCTs-competition method

Revisiting Video Saliency: A Large-scale Benchmark and a New Model (CVPR18, PAMI19)

DHF1K =========================================================================== Wenguan Wang, J. Shen, M.-M Cheng and A. Borji, Revisiting Video Sal

The Instructed Glacier Model (IGM)

The Instructed Glacier Model (IGM) Overview The Instructed Glacier Model (IGM) simulates the ice dynamics, surface mass balance, and its coupling thro

Using Language Model to Bootstrap Human Activity Recognition Ambient Sensors Based in Smart Homes

Using Language Model to Bootstrap Human Activity Recognition Ambient Sensors Based in Smart Homes This repository is the official implementation of Us

CLIPImageClassifier wraps clip image model from transformers

CLIPImageClassifier CLIPImageClassifier wraps clip image model from transformers. CLIPImageClassifier is initialized with the argument classes, these

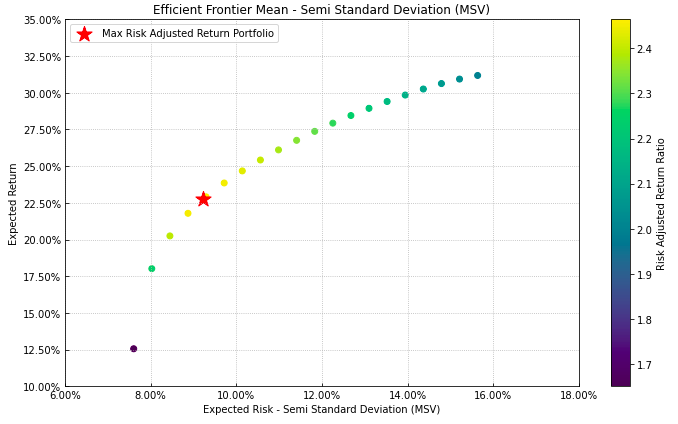

Portfolio Optimization and Quantitative Strategic Asset Allocation in Python

Riskfolio-Lib Quantitative Strategic Asset Allocation, Easy for Everyone. Description Riskfolio-Lib is a library for making quantitative strategic ass

Question and answer retrieval in Turkish with BERT

trfaq Google supported this work by providing Google Cloud credit. Thank you Google for supporting the open source! 🎉 What is this? At this repo, I'm

A naive Bayes model for cancer classification using a set of documents

Naivebayes text classifcation model for cancer and noncancer documents Author: Alex King Purpose Requirements/files included How to use 1. Purpose The

Delve is a Python package for analyzing the inference dynamics of your PyTorch model.

Delve is a Python package for analyzing the inference dynamics of your PyTorch model.

fastai ulmfit - Pretraining the Language Model, Fine-Tuning and training a Classifier

fast.ai ULMFiT with SentencePiece from pretraining to deployment Motivation: Why even bother with a non-BERT / Transformer language model? Short answe

An easy to use Natural Language Processing library and framework for predicting, training, fine-tuning, and serving up state-of-the-art NLP models.

Welcome to AdaptNLP A high level framework and library for running, training, and deploying state-of-the-art Natural Language Processing (NLP) models