104 Repositories

Python poisoning-attacks Libraries

DDoS Script (DDoS Panel) with Multiple Bypass ( Cloudflare UAM,CAPTCHA,BFM,NOSEC / DDoS Guard / Google Shield / V Shield / Amazon / etc.. )

KARMA DDoS DDoS Script (DDoS Panel) with Multiple Bypass ( Cloudflare UAM,CAPTCHA,BFM,NOSEC / DDoS Guard / Google Shield / V Shield / Amazon / etc.. )

Layer 7 DDoS Panel with Cloudflare Bypass ( UAM, CAPTCHA, BFM, etc.. )

Blood Deluxe DDoS DDoS Attack Panel includes CloudFlare Bypass (UAM, CAPTCHA, BFM, etc..)(It works intermittently. Working on it) Don't attack any web

automatically crawl every URL and find cross site scripting (XSS)

scancss Fastest tool to find XSS. scancss is a fastest tool to detect Cross Site scripting (XSS) automatically and it's also an intelligent payload ge

Source code of our TTH paper: Targeted Trojan-Horse Attacks on Language-based Image Retrieval.

Targeted Trojan-Horse Attacks on Language-based Image Retrieval Source code of our TTH paper: Targeted Trojan-Horse Attacks on Language-based Image Re

Code for the CVPR2022 paper "Frequency-driven Imperceptible Adversarial Attack on Semantic Similarity"

Introduction This is an official release of the paper "Frequency-driven Imperceptible Adversarial Attack on Semantic Similarity" (arxiv link). Abstrac

Universal Adversarial Examples in Remote Sensing: Methodology and Benchmark

Universal Adversarial Examples in Remote Sensing: Methodology and Benchmark Yong

BlockUnexpectedPackets - Preventing BungeeCord CPU overload due to Layer 7 DDoS attacks by scanning BungeeCord's logs

BlockUnexpectedPackets This script automatically blocks DDoS attacks that are sp

Dark Finix: All in one hacking framework with almost 100 tools

Dark Finix - Hacking Framework. Dark Finix is a all in one hacking framework wit

This respository contains the source code of the printjack and phonejack attacks.

Printjack-Phonejack This repository contains the source code of the printjack and phonejack attacks. The Printjack directory contains the script to ca

Demonstrating attacks, mitigations, and monitoring on AWS

About Inspectaroo is a web app which allows users to upload images to view metadata. It is designed to show off many AWS services including EC2, Lambd

Wifi-jammer - Continuously perform deauthentication attacks on all detectable stations

wifi-jammer Continuously perform deauthentication attacks on all detectable stat

AnonStress-Stored-XSS-Exploit - An exploit and demonstration on how to exploit a Stored XSS vulnerability in anonstress

AnonStress Stored XSS Exploit An exploit and demonstration on how to exploit a S

The Social-Engineer Toolkit (SET) is specifically designed to perform advanced attacks against the human element.

The Social-Engineer Toolkit (SET) The Social-Engineer Toolkit (SET) is specifically designed to perform advanced attacks against the human element. SE

Beyond imagenet attack (accepted by ICLR 2022) towards crafting adversarial examples for black-box domains.

Beyond ImageNet Attack: Towards Crafting Adversarial Examples for Black-box Domains (ICLR'2022) This is the Pytorch code for our paper Beyond ImageNet

Check out the StyleGAN repo and place it in the same directory hierarchy as the present repo

Variational Model Inversion Attacks Kuan-Chieh Wang, Yan Fu, Ke Li, Ashish Khisti, Richard Zemel, Alireza Makhzani Most commands are in run_scripts. W

Pytorch code for our paper Beyond ImageNet Attack: Towards Crafting Adversarial Examples for Black-box Domains)

Beyond ImageNet Attack: Towards Crafting Adversarial Examples for Black-box Domains (ICLR'2022) This is the Pytorch code for our paper Beyond ImageNet

Security system to prevent Shoulder Surfing Attacks

Surf_Sec Security system to prevent Shoulder Surfing Attacks. REQUIREMENTS: Python 3.6+ XAMPP INSTALLED METHOD TO CONFIGURE PROJECT: Clone the repo to

Neural Tangent Generalization Attacks (NTGA)

Neural Tangent Generalization Attacks (NTGA) ICML 2021 Video | Paper | Quickstart | Results | Unlearnable Datasets | Competitions | Citation Overview

A notebook explaining the principle of adversarial attacks and their defences

TL;DR: A notebook explaining the principle of adversarial attacks and their defences Abstract: Deep neural networks models have been wildly successful

HatSploit collection of generic payloads designed to provide a wide range of attacks without having to spend time writing new ones.

HatSploit collection of generic payloads designed to provide a wide range of attacks without having to spend time writing new ones.

This suite consists of two different scripts, made to automate attacks against NoSQL databases.

NoSQL-Attack-Suite This suite consists of two different scripts, made to automate attacks against NoSQL databases. The first one looks for a NoSQL Aut

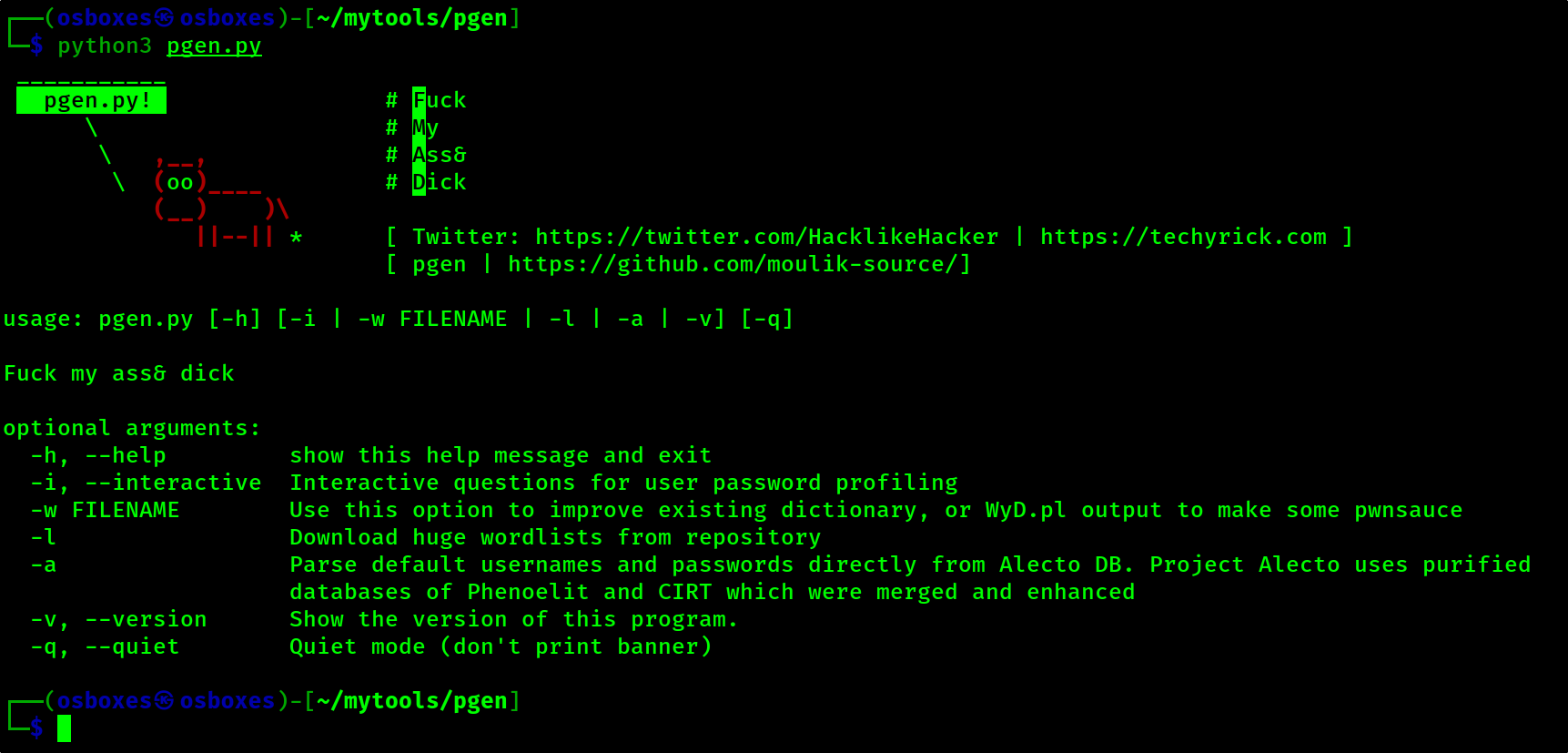

Pgen is the best brute force password generator and it is improved from the cupp.py

pgen Pgen is the best brute force password generator and it is improved from the cupp.py The pgen tool is dedicated to Leonardo da Vinci -Time stays l

Transferable Unrestricted Attacks, which won 1st place in CVPR’21 Security AI Challenger: Unrestricted Adversarial Attacks on ImageNet.

Transferable Unrestricted Adversarial Examples This is the PyTorch implementation of the Arxiv paper: Towards Transferable Unrestricted Adversarial Ex

Learnable Boundary Guided Adversarial Training (ICCV2021)

Learnable Boundary Guided Adversarial Training This repository contains the implementation code for the ICCV2021 paper: Learnable Boundary Guided Adve

Find-Xss - Termux Kurulum Dosyası Eklendi Eğer Hata Alıyorsanız Lütfen Resmini Çekip İnstagramdan Bildiriniz

FindXss Waf Bypass Eklendi !!! PRODUCER: Saep UPDATER: Aser-Vant Download: git c

Advbox is a toolbox to generate adversarial examples that fool neural networks in PaddlePaddle、PyTorch、Caffe2、MxNet、Keras、TensorFlow and Advbox can benchmark the robustness of machine learning models.

Advbox is a toolbox to generate adversarial examples that fool neural networks in PaddlePaddle、PyTorch、Caffe2、MxNet、Keras、TensorFlow and Advbox can benchmark the robustness of machine learning models. Advbox give a command line tool to generate adversarial examples with Zero-Coding.

Adversarial Attacks are Reversible via Natural Supervision

Adversarial Attacks are Reversible via Natural Supervision ICCV2021 Citation @InProceedings{Mao_2021_ICCV, author = {Mao, Chengzhi and Chiquier

Gmail account using brute force attack

Programmed in Python | PySimpleGUI Gmail Hack Python script with PySimpleGUI for hack gmail account using brute force attack If you like it give it

Denial Attacks by Various Methods

Denial Service Attack Denial Attacks by Various Methods IIIIIIIIIIIIIIIIIIII PPPPPPPPPPPPPPPPP VVVVVVVV VVVVVVVV I::

Stable Neural ODE with Lyapunov-Stable Equilibrium Points for Defending Against Adversarial Attacks

Stable Neural ODE with Lyapunov-Stable Equilibrium Points for Defending Against Adversarial Attacks Stable Neural ODE with Lyapunov-Stable Equilibrium

I hacked my own webcam from a Kali Linux VM in my local network, using Ettercap to do the MiTM ARP poisoning attack, sniffing with Wireshark, and using metasploit

plan I - Linux Fundamentals Les utilisateurs et les droits Installer des programmes avec apt-get Surveiller l'activité du système Exécuter des program

A repository built on the Flow software package to explore cyber-security attacks on intelligent transportation systems.

A repository built on the Flow software package to explore cyber-security attacks on intelligent transportation systems.

Official implementation of the paper "Backdoor Attacks on Self-Supervised Learning".

SSL-Backdoor Abstract Large-scale unlabeled data has allowed recent progress in self-supervised learning methods that learn rich visual representation

Official PyTorch implementation of "Preemptive Image Robustification for Protecting Users against Man-in-the-Middle Adversarial Attacks" (AAAI 2022)

Preemptive Image Robustification for Protecting Users against Man-in-the-Middle Adversarial Attacks This is the code for reproducing the results of th

SeqAttack: a framework for adversarial attacks on token classification models

A framework for adversarial attacks against token classification models

Python Toolkit containing different Cyber Attacks Tools

Helikopter Python Toolkit containing different Cyber Attacks Tools. Tools in Helikopter Toolkit 1. FattyNigger (PYTHON WORM) 2. Taxes (PYTHON PASS EXT

S-attack library. Official implementation of two papers "Are socially-aware trajectory prediction models really socially-aware?" and "Vehicle trajectory prediction works, but not everywhere".

S-attack library: A library for evaluating trajectory prediction models This library contains two research projects to assess the trajectory predictio

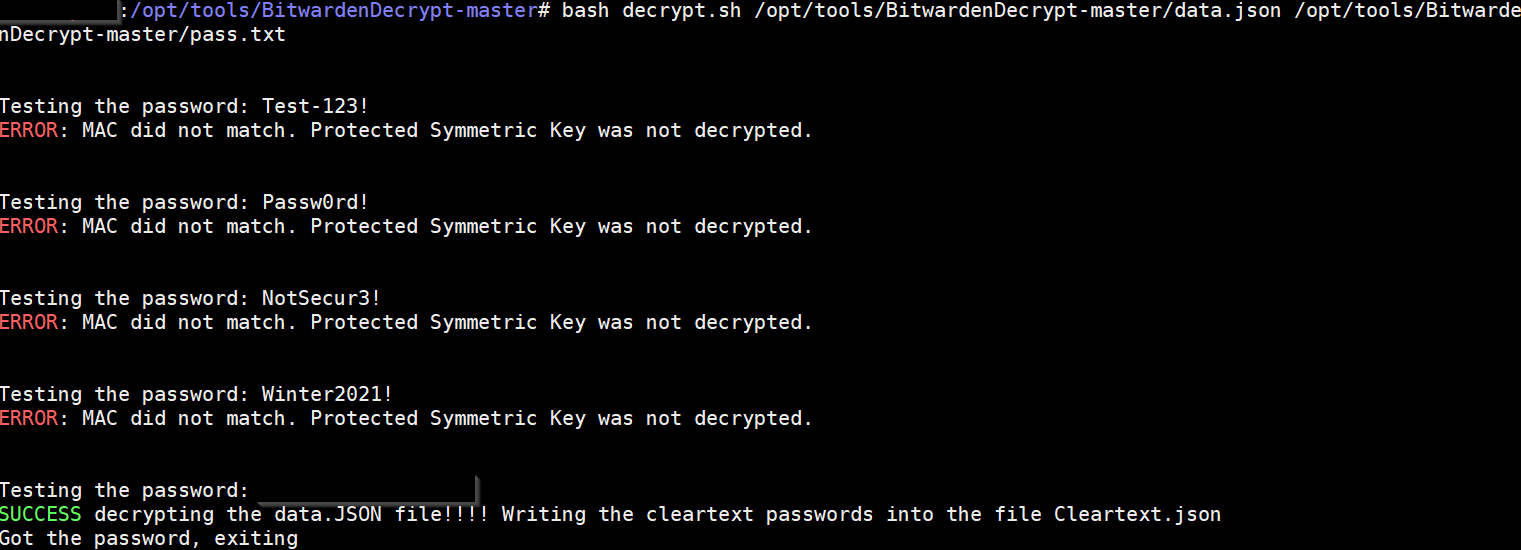

Wordlist attacks on Bitwarden data.json files

BitwardenDecryptBrute This is a slightly modified version of BitwardenDecrypt. In addition to the decryption this version can do wordlist attacks for

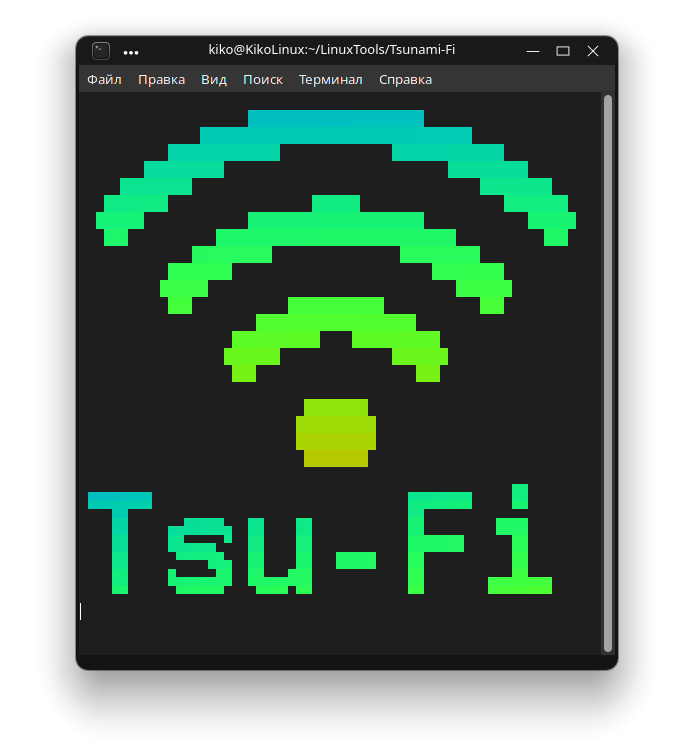

Tsunami-Fi is simple multi-tool bash application for Wi-Fi attacks

🪴 Tsunami-Fi 🪴 Русская версия README 🌿 Description 🌿 Tsunami-Fi is simple multi-tool bash application for Wi-Fi WPS PixieDust and NullPIN attack,

MS in Data Science capstone project. Studying attacks on autonomous vehicles.

Surveying Attack Models for CAVs Guide to Installing CARLA and Collecting Data Our project focuses on surveying attack models for Connveced Autonomous

A protocol or procedure that connects an ever-changing IP address to a fixed physical machine address

p0znMITM ARP Poisoning Tool What is ARP? Address Resolution Protocol (ARP) is a protocol or procedure that connects an ever-changing IP address to a f

Simulate Attacks With Mininet And Hping3

Miniattack Simulate Attacks With Mininet And Hping3 It measures network load with bwm-ng when the net is under attack and plots the result. This demo

A Model for Natural Language Attack on Text Classification and Inference

TextFooler A Model for Natural Language Attack on Text Classification and Inference This is the source code for the paper: Jin, Di, et al. "Is BERT Re

![[NeurIPS '21] Adversarial Attacks on Graph Classification via Bayesian Optimisation (GRABNEL)](https://github.com/xingchenwan/grabnel/raw/main/figs/overall_grabnel.png)

[NeurIPS '21] Adversarial Attacks on Graph Classification via Bayesian Optimisation (GRABNEL)

Adversarial Attacks on Graph Classification via Bayesian Optimisation @ NeurIPS 2021 This repository contains the official implementation of GRABNEL,

[NeurIPS2021] Code Release of Learning Transferable Perturbations

Learning Transferable Adversarial Perturbations This is an official release of the paper Learning Transferable Adversarial Perturbations. The code is

![[NeurIPS 2021] Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples | ⛰️⚠️](https://github.com/sungyoon-lee/LossLandscapeMatters/raw/master/media/fig.png)

[NeurIPS 2021] Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples | ⛰️⚠️

Towards Better Understanding of Training Certifiably Robust Models against Adversarial Examples This repository is the official implementation of "Tow

Anomaly Localization in Model Gradients Under Backdoor Attacks Against Federated Learning

Federated_Learning This repo provides a federated learning framework that allows to carry out backdoor attacks under varying conditions. This is a ker

Codes for NeurIPS 2021 paper "Adversarial Neuron Pruning Purifies Backdoored Deep Models"

Adversarial Neuron Pruning Purifies Backdoored Deep Models Code for NeurIPS 2021 "Adversarial Neuron Pruning Purifies Backdoored Deep Models" by Dongx

Resilience from Diversity: Population-based approach to harden models against adversarial attacks

Resilience from Diversity: Population-based approach to harden models against adversarial attacks Requirements To install requirements: pip install -r

A Python toolbox to create adversarial examples that fool neural networks in PyTorch, TensorFlow, and JAX

Foolbox Native: Fast adversarial attacks to benchmark the robustness of machine learning models in PyTorch, TensorFlow, and JAX Foolbox is a Python li

Using LSTM to detect spoofing attacks in an Air-Ground network

Using LSTM to detect spoofing attacks in an Air-Ground network Specifications IDE: Spider Packages: Tensorflow 2.1.0 Keras NumPy Scikit-learn Matplotl

Source code for our paper "Do Not Trust Prediction Scores for Membership Inference Attacks"

Do Not Trust Prediction Scores for Membership Inference Attacks Abstract: Membership inference attacks (MIAs) aim to determine whether a specific samp

Machine Learning Privacy Meter: A tool to quantify the privacy risks of machine learning models with respect to inference attacks, notably membership inference attacks

ML Privacy Meter Machine learning is playing a central role in automated decision making in a wide range of organization and service providers. The da

Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models (published in ICLR2018)

Defense-GAN: Protecting Classifiers Against Adversarial Attacks Using Generative Models Pouya Samangouei*, Maya Kabkab*, Rama Chellappa [*: authors co

The official implementation of NeurIPS 2021 paper: Finding Optimal Tangent Points for Reducing Distortions of Hard-label Attacks

Introduction This repository includes the source code for "Finding Optimal Tangent Points for Reducing Distortions of Hard-label Attacks", which is pu

RMTD: Robust Moving Target Defence Against False Data Injection Attacks in Power Grids

RMTD: Robust Moving Target Defence Against False Data Injection Attacks in Power Grids Real-time detection performance. This repo contains the code an

The official implementation of NeurIPS 2021 paper: Finding Optimal Tangent Points for Reducing Distortions of Hard-label Attacks

The official implementation of NeurIPS 2021 paper: Finding Optimal Tangent Points for Reducing Distortions of Hard-label Attacks

Graph Robustness Benchmark: A scalable, unified, modular, and reproducible benchmark for evaluating the adversarial robustness of Graph Machine Learning.

Homepage | Paper | Datasets | Leaderboard | Documentation Graph Robustness Benchmark (GRB) provides scalable, unified, modular, and reproducible evalu

Official implementation of "Membership Inference Attacks Against Self-supervised Speech Models"

Introduction Official implementation of "Membership Inference Attacks Against Self-supervised Speech Models". In this work, we demonstrate that existi

Dependency Combobulator is an Open-Source, modular and extensible framework to detect and prevent dependency confusion leakage and potential attacks.

Dependency Combobulator Dependency Combobulator is an Open-Source, modular and extensible framework to detect and prevent dependency confusion leakage

Code for "Adversarial attack by dropping information." (ICCV 2021)

AdvDrop Code for "AdvDrop: Adversarial Attack to DNNs by Dropping Information(ICCV 2021)." Human can easily recognize visual objects with lost informa

code for "Feature Importance-aware Transferable Adversarial Attacks"

Feature Importance-aware Attack(FIA) This repository contains the code for the paper: Feature Importance-aware Transferable Adversarial Attacks (ICCV

Code repository for EMNLP 2021 paper 'Adversarial Attacks on Knowledge Graph Embeddings via Instance Attribution Methods'

Adversarial Attacks on Knowledge Graph Embeddings via Instance Attribution Methods This is the code repository to accompany the EMNLP 2021 paper on ad

Companion code for the paper "Meta-Learning the Search Distribution of Black-Box Random Search Based Adversarial Attacks" by Yatsura et al.

META-RS This is the companion code for the paper "Meta-Learning the Search Distribution of Black-Box Random Search Based Adversarial Attacks" by Yatsu

Data Poisoning based on Adversarial Attacks using Non-Robust Features

Data Poisoning based on Adversarial Attacks using Non-Robust Features Usage python main.py [-h] [--gpu | -g GPU] [--eps |-e EPSILON] [--pert | -p PER

LeLeLe: A tool to simplify the application of Lattice attacks.

LeLeLe is a very simple library (300 lines) to help you more easily implement lattice attacks, the library is inspired by Z3Py (python interfa

Short PhD seminar on Machine Learning Security (Adversarial Machine Learning)

Short PhD seminar on Machine Learning Security (Adversarial Machine Learning)

FL-WBC: Enhancing Robustness against Model Poisoning Attacks in Federated Learning from a Client Perspective

FL-WBC: Enhancing Robustness against Model Poisoning Attacks in Federated Learning from a Client Perspective Official implementation of "FL-WBC: Enhan

[NeurIPS 2021] Source code for the paper "Qu-ANTI-zation: Exploiting Neural Network Quantization for Achieving Adversarial Outcomes"

Qu-ANTI-zation This repository contains the code for reproducing the results of our paper: Qu-ANTI-zation: Exploiting Quantization Artifacts for Achie

Code for the prototype tool in our paper "CoProtector: Protect Open-Source Code against Unauthorized Training Usage with Data Poisoning".

CoProtector Code for the prototype tool in our paper "CoProtector: Protect Open-Source Code against Unauthorized Training Usage with Data Poisoning".

Code for the prototype tool in our paper "CoProtector: Protect Open-Source Code against Unauthorized Training Usage with Data Poisoning".

CoProtector Code for the prototype tool in our paper "CoProtector: Protect Open-Source Code against Unauthorized Training Usage with Data Poisoning".

Bark Toolkit is a toolkit wich provides Denial-of-service attacks, SMS attacks and more.

Bark Toolkit About Bark Toolkit Bark Toolkit is a set of tools that provides denial of service attacks. Bark Toolkit includes SMS attack tool, HTTP

A Python library for adversarial machine learning focusing on benchmarking adversarial robustness.

ARES This repository contains the code for ARES (Adversarial Robustness Evaluation for Safety), a Python library for adversarial machine learning rese

PyTorch implementation of our method for adversarial attacks and defenses in hyperspectral image classification.

Self-Attention Context Network for Hyperspectral Image Classification PyTorch implementation of our method for adversarial attacks and defenses in hyp

Boosting Adversarial Attacks with Enhanced Momentum (BMVC 2021)

EMI-FGSM This repository contains code to reproduce results from the paper: Boosting Adversarial Attacks with Enhanced Momentum (BMVC 2021) Xiaosen Wa

SocksFlood, a DoS tools that sends attacks using Socks5 & Socks4

Information SocksFlood, a DoS tools that sends attacks using Socks5 and Socks4 Requirements Python 3.10.0 A little bit knowledge of sockets IDE / Code

SCAAML is a deep learning framwork dedicated to side-channel attacks run on top of TensorFlow 2.x.

SCAAML (Side Channel Attacks Assisted with Machine Learning) is a deep learning framwork dedicated to side-channel attacks. It is written in python and run on top of TensorFlow 2.x.

Code and data of the EMNLP 2021 paper "Mind the Style of Text! Adversarial and Backdoor Attacks Based on Text Style Transfer"

StyleAttack Code and data of the EMNLP 2021 paper "Mind the Style of Text! Adversarial and Backdoor Attacks Based on Text Style Transfer" Prepare Pois

Code for the preprint "Well-classified Examples are Underestimated in Classification with Deep Neural Networks"

This is a repository for the paper of "Well-classified Examples are Underestimated in Classification with Deep Neural Networks" The implementation and

Code repository for the paper "Doubly-Trained Adversarial Data Augmentation for Neural Machine Translation" with instructions to reproduce the results.

Doubly Trained Neural Machine Translation System for Adversarial Attack and Data Augmentation Languages Experimented: Data Overview: Source Target Tra

Toolkit for building machine learning models that generalize to unseen domains and are robust to privacy and other attacks.

Toolkit for Building Robust ML models that generalize to unseen domains (RobustDG) Divyat Mahajan, Shruti Tople, Amit Sharma Privacy & Causal Learning

TextAttack 🐙 is a Python framework for adversarial attacks, data augmentation, and model training in NLP

TextAttack 🐙 Generating adversarial examples for NLP models [TextAttack Documentation on ReadTheDocs] About • Setup • Usage • Design About TextAttack

Efficient Sparse Attacks on Videos using Reinforcement Learning

EARL This repository provides a simple implementation of the work "Efficient Sparse Attacks on Videos using Reinforcement Learning" Example: Demo: Her

G-NIA model from "Single Node Injection Attack against Graph Neural Networks" (CIKM 2021)

Single Node Injection Attack against Graph Neural Networks This repository is our Pytorch implementation of our paper: Single Node Injection Attack ag

theHasher Tool created for generate strong and unbreakable passwords by using Hash Functions.Generate Hashes and store them in txt files.Use the txt files as lists to execute Brute Force Attacks!

$theHasher theHasher is a Tool for generating hashes using some of the most Famous Hashes Functions ever created. You can save your hashes to correspo

DIT is a DTLS MitM proxy implemented in Python 3. It can intercept, manipulate and suppress datagrams between two DTLS endpoints and supports psk-based and certificate-based authentication schemes (RSA + ECC).

DIT - DTLS Interception Tool DIT is a MitM proxy tool to intercept DTLS traffic. It can intercept, manipulate and/or suppress DTLS datagrams between t

Some Attacks of Exchange SSRF ProxyLogon&ProxyShell

Some Attacks of Exchange SSRF This project is heavily replicated in ProxyShell, NtlmRelayToEWS https://mp.weixin.qq.com/s/GFcEKA48bPWsezNdVcrWag Get 1

Emulate and Dissect MSF and *other* attacks

Need help in analyzing Windows shellcode or attack coming from Metasploit Framework or Cobalt Strike (or may be also other malicious or obfuscated code)? Do you need to automate tasks with simple scripting? Do you want help to decrypt MSF generated traffic by extracting keys from payloads?

Defending graph neural networks against adversarial attacks (NeurIPS 2020)

GNNGuard: Defending Graph Neural Networks against Adversarial Attacks Authors: Xiang Zhang ([email protected]), Marinka Zitnik (marinka@hms.

This framework implements the data poisoning method found in the paper Adversarial Examples Make Strong Poisons

Adversarial poison generation and evaluation. This framework implements the data poisoning method found in the paper Adversarial Examples Make Strong

Adversarial Robustness Toolbox (ART) - Python Library for Machine Learning Security - Evasion, Poisoning, Extraction, Inference - Red and Blue Teams

Adversarial Robustness Toolbox (ART) is a Python library for Machine Learning Security. ART provides tools that enable developers and researchers to defend and evaluate Machine Learning models and applications against the adversarial threats of Evasion, Poisoning, Extraction, and Inference. ART supports all popular machine learning frameworks (TensorFlow, Keras, PyTorch, MXNet, scikit-learn, XGBoost, LightGBM, CatBoost, GPy, etc.), all data types (images, tables, audio, video, etc.) and machine learning tasks (classification, object detection, speech recognition, generation, certification, etc.).

We will see a basic program that is basically a hint to brute force attack to crack passwords. In other words, we will make a program to Crack Any Password Using Python. Show some ❤️ by starring this repository!

Crack Any Password Using Python We will see a basic program that is basically a hint to brute force attack to crack passwords. In other words, we will

Adversarial Attacks on Probabilistic Autoregressive Forecasting Models.

Attack-Probabilistic-Models This is the source code for Adversarial Attacks on Probabilistic Autoregressive Forecasting Models. This repository contai

An implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep Neural Networks in PyTorch.

Neural Attention Distillation This is an implementation demo of the ICLR 2021 paper Neural Attention Distillation: Erasing Backdoor Triggers from Deep

Implementation of Wasserstein adversarial attacks.

Stronger and Faster Wasserstein Adversarial Attacks Code for Stronger and Faster Wasserstein Adversarial Attacks, appeared in ICML 2020. This reposito

Code for "Diversity can be Transferred: Output Diversification for White- and Black-box Attacks"

Output Diversified Sampling (ODS) This is the github repository for the NeurIPS 2020 paper "Diversity can be Transferred: Output Diversification for W

transfer attack; adversarial examples; black-box attack; unrestricted Adversarial Attacks on ImageNet; CVPR2021 天池黑盒竞赛

transfer_adv CVPR-2021 AIC-VI: unrestricted Adversarial Attacks on ImageNet CVPR2021 安全AI挑战者计划第六期赛道2:ImageNet无限制对抗攻击 介绍 : 深度神经网络已经在各种视觉识别问题上取得了最先进的性能。

Attack classification models with transferability, black-box attack; unrestricted adversarial attacks on imagenet

Attack classification models with transferability, black-box attack; unrestricted adversarial attacks on imagenet, CVPR2021 安全AI挑战者计划第六期:ImageNet无限制对抗攻击 决赛第四名(team name: Advers)

Image-Scaling Attacks and Defenses

Image-Scaling Attacks & Defenses This repository belongs to our publication: Erwin Quiring, David Klein, Daniel Arp, Martin Johns and Konrad Rieck. Ad

Big-Papa Integrates Javascript and python for remote cookie stealing which then can be used for session hijacking

Big-Papa is a remote cookie stealer which can then be used for session hijacking and Bypassing 2 Factor Authentication