166 Repositories

Python prompt-tuning Libraries

Code for Generating Disentangled Arguments with Prompts: A Simple Event Extraction Framework that Works

GDAP Code for Generating Disentangled Arguments with Prompts: A Simple Event Extraction Framework that Works Environment Python (verified: v3.8) CUDA

Example Of Fine-Tuning BERT For Named-Entity Recognition Task And Preparing For Cloud Deployment Using Flask, React, And Docker

Example Of Fine-Tuning BERT For Named-Entity Recognition Task And Preparing For Cloud Deployment Using Flask, React, And Docker This repository contai

This repository is the official implementation of Unleashing the Power of Contrastive Self-Supervised Visual Models via Contrast-Regularized Fine-Tuning (NeurIPS21).

Core-tuning This repository is the official implementation of ``Unleashing the Power of Contrastive Self-Supervised Visual Models via Contrast-Regular

Code and datasets for the paper "KnowPrompt: Knowledge-aware Prompt-tuning with Synergistic Optimization for Relation Extraction"

KnowPrompt Code and datasets for our paper "KnowPrompt: Knowledge-aware Prompt-tuning with Synergistic Optimization for Relation Extraction" Requireme

Fine-tuning StyleGAN2 for Cartoon Face Generation

Cartoon-StyleGAN 🙃 : Fine-tuning StyleGAN2 for Cartoon Face Generation Abstract Recent studies have shown remarkable success in the unsupervised imag

🔥🔥High-Performance Face Recognition Library on PaddlePaddle & PyTorch🔥🔥

face.evoLVe: High-Performance Face Recognition Library based on PaddlePaddle & PyTorch Evolve to be more comprehensive, effective and efficient for fa

Learning to Prompt for Vision-Language Models.

CoOp Paper: Learning to Prompt for Vision-Language Models Authors: Kaiyang Zhou, Jingkang Yang, Chen Change Loy, Ziwei Liu CoOp (Context Optimization)

Finetuner allows one to tune the weights of any deep neural network for better embeddings on search tasks

Finetuner allows one to tune the weights of any deep neural network for better embeddings on search tasks

Prefix-Tuning: Optimizing Continuous Prompts for Generation

Prefix Tuning Files: . ├── gpt2 # Code for GPT2 style autoregressive LM │ ├── train_e2e.py # high-level script

Web Scraping, Document Deduplication & GPT-2 Fine-tuning with a newly created scam dataset.

Web Scraping, Document Deduplication & GPT-2 Fine-tuning with a newly created scam dataset.

Lale is a Python library for semi-automated data science.

Lale is a Python library for semi-automated data science. Lale makes it easy to automatically select algorithms and tune hyperparameters of pipelines that are compatible with scikit-learn, in a type-safe fashion.

P-Tuning v2: Prompt Tuning Can Be Comparable to Finetuning Universally Across Scales and Tasks

P-tuning v2 P-Tuning v2: Prompt Tuning Can Be Comparable to Finetuning Universally Across Scales and Tasks An optimized prompt tuning strategy for sma

Hypernets: A General Automated Machine Learning framework to simplify the development of End-to-end AutoML toolkits in specific domains.

A General Automated Machine Learning framework to simplify the development of End-to-end AutoML toolkits in specific domains.

The Few-Shot Bot: Prompt-Based Learning for Dialogue Systems

Few-Shot Bot: Prompt-Based Learning for Dialogue Systems This repository includes the dataset, experiments results, and code for the paper: Few-Shot B

Official codebase for Legged Robots that Keep on Learning: Fine-Tuning Locomotion Policies in the Real World

Legged Robots that Keep on Learning Official codebase for Legged Robots that Keep on Learning: Fine-Tuning Locomotion Policies in the Real World, whic

p-tuning for few-shot NLU task

p-tuning_NLU Overview 这个小项目是受乐于分享的苏剑林大佬这篇p-tuning 文章启发,也实现了个使用P-tuning进行NLU分类的任务, 思路是一样的,prompt实现方式有不同,这里是将[unused*]的embeddings参数抽取出用于初始化prompt_embed后

TLDR; Train custom adaptive filter optimizers without hand tuning or extra labels.

AutoDSP TLDR; Train custom adaptive filter optimizers without hand tuning or extra labels. About Adaptive filtering algorithms are commonplace in sign

Prompt-learning is the latest paradigm to adapt pre-trained language models (PLMs) to downstream NLP tasks

Prompt-learning is the latest paradigm to adapt pre-trained language models (PLMs) to downstream NLP tasks, which modifies the input text with a textual template and directly uses PLMs to conduct pre-trained tasks. This library provides a standard, flexible and extensible framework to deploy the prompt-learning pipeline. OpenPrompt supports loading PLMs directly from huggingface transformers. In the future, we will also support PLMs implemented by other libraries.

🔥🔥High-Performance Face Recognition Library on PaddlePaddle & PyTorch🔥🔥

face.evoLVe: High-Performance Face Recognition Library based on PaddlePaddle & PyTorch Evolve to be more comprehensive, effective and efficient for fa

![A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]](https://user-images.githubusercontent.com/33194443/104581604-2592cb00-56a2-11eb-9610-5eaa0afb6e1f.png)

A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]

PINTO_model_zoo Please read the contents of the LICENSE file located directly under each folder before using the model. My model conversion scripts ar

Example of network fine-tuning in pytorch for the kaggle competition Dogs vs. Cats Redux: Kernels Edition

Example of network fine-tuning in pytorch for the kaggle competition Dogs vs. Cats Redux: Kernels Edition Currently

easyopt is a super simple yet super powerful optuna-based Hyperparameters Optimization Framework that requires no coding.

easyopt is a super simple yet super powerful optuna-based Hyperparameters Optimization Framework that requires no coding.

Vehicle Detection Using Deep Learning and YOLO Algorithm

VehicleDetection Vehicle Detection Using Deep Learning and YOLO Algorithm Dataset take or find vehicle images for create a special dataset for fine-tu

Feed forward VQGAN-CLIP model, where the goal is to eliminate the need for optimizing the latent space of VQGAN for each input prompt

Feed forward VQGAN-CLIP model, where the goal is to eliminate the need for optimizing the latent space of VQGAN for each input prompt. This is done by

This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced in the paper titled "BanglaBERT: Combating Embedding Barrier in Multilingual Models for Low-Resource Language Understanding".

BanglaBERT This repository contains the official release of the model "BanglaBERT" and associated downstream finetuning code and datasets introduced i

The code for our paper "NSP-BERT: A Prompt-based Zero-Shot Learner Through an Original Pre-training Task —— Next Sentence Prediction"

The code for our paper "NSP-BERT: A Prompt-based Zero-Shot Learner Through an Original Pre-training Task —— Next Sentence Prediction"

This repository accompanies our paper “Do Prompt-Based Models Really Understand the Meaning of Their Prompts?”

This repository accompanies our paper “Do Prompt-Based Models Really Understand the Meaning of Their Prompts?” Usage To replicate our results in Secti

EMNLP 2021 Adapting Language Models for Zero-shot Learning by Meta-tuning on Dataset and Prompt Collections

Adapting Language Models for Zero-shot Learning by Meta-tuning on Dataset and Prompt Collections Ruiqi Zhong, Kristy Lee*, Zheng Zhang*, Dan Klein EMN

Source Code For Template-Based Named Entity Recognition Using BART

Template-Based NER Source Code For Template-Based Named Entity Recognition Using BART Training Training train.py Inference inference.py Corpus ATIS (h

auto-tuning momentum SGD optimizer

YellowFin YellowFin is an auto-tuning optimizer based on momentum SGD which requires no manual specification of learning rate and momentum. It measure

Code release for NeurIPS 2020 paper "Co-Tuning for Transfer Learning"

CoTuning Official implementation for NeurIPS 2020 paper Co-Tuning for Transfer Learning. [News] 2021/01/13 The COCO 70 dataset used in the paper is av

Bear-Shell is a shell based in the terminal or command prompt.

Bear-Shell is a shell based in the terminal or command prompt. You can navigate files, run python files, create files via the BearUtils text editor, and a lot more coming up!

Bear-Shell is a shell based in the terminal or command prompt.

Bear-Shell is a shell based in the terminal or command prompt. You can navigate files, run python files, create files via the BearUtils text editor, and a lot more coming up!

This is an online course where you can learn and master the skill of low-level performance analysis and tuning.

Performance Ninja Class This is an online course where you can learn to find and fix low-level performance issues, for example CPU cache misses and br

Code release for "Self-Tuning for Data-Efficient Deep Learning" (ICML 2021)

Self-Tuning for Data-Efficient Deep Learning This repository contains the implementation code for paper: Self-Tuning for Data-Efficient Deep Learning

The Power of Scale for Parameter-Efficient Prompt Tuning

The Power of Scale for Parameter-Efficient Prompt Tuning Implementation of soft embeddings from https://arxiv.org/abs/2104.08691v1 using Pytorch and H

Automated modeling and machine learning framework FEDOT

This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML). It can build custom modeling pipelines for different real-world processes in an automated way using an evolutionary approach. FEDOT supports classification (binary and multiclass), regression, clustering, and time series prediction tasks.

Cartoon-StyleGan2 🙃 : Fine-tuning StyleGAN2 for Cartoon Face Generation

Fine-tuning StyleGAN2 for Cartoon Face Generation

Code and datasets for our paper "PTR: Prompt Tuning with Rules for Text Classification"

PTR Code and datasets for our paper "PTR: Prompt Tuning with Rules for Text Classification" If you use the code, please cite the following paper: @art

Code for ACL2021 paper Consistency Regularization for Cross-Lingual Fine-Tuning.

xTune Code for ACL2021 paper Consistency Regularization for Cross-Lingual Fine-Tuning. Environment DockerFile: dancingsoul/pytorch:xTune Install the f

Fine-Tune EleutherAI GPT-Neo to Generate Netflix Movie Descriptions in Only 47 Lines of Code Using Hugginface And DeepSpeed

GPT-Neo-2.7B Fine-Tuning Example Using HuggingFace & DeepSpeed Installation cd venv/bin ./pip install -r ../../requirements.txt ./pip install deepspe

simpleT5 is built on top of PyTorch-lightning⚡️ and Transformers🤗 that lets you quickly train your T5 models.

Quickly train T5 models in just 3 lines of code + ONNX support simpleT5 is built on top of PyTorch-lightning ⚡️ and Transformers 🤗 that lets you quic

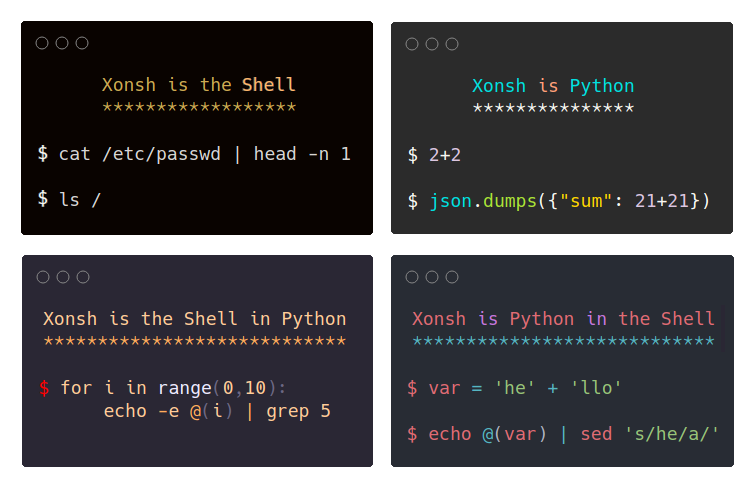

xonsh is a Python-powered, cross-platform, Unix-gazing shell language and command prompt.

xonsh xonsh is a Python-powered, cross-platform, Unix-gazing shell language and command prompt. The language is a superset of Python 3.6+ with additio

The project help you to quickly build layouts in terminal,cross-platform

The project help you to quickly build layouts in terminal,cross-platform

Word2Wave: a framework for generating short audio samples from a text prompt using WaveGAN and COALA.

Word2Wave is a simple method for text-controlled GAN audio generation. You can either follow the setup instructions below and use the source code and CLI provided in this repo or you can have a play around in the Colab notebook provided. Note that, in both cases, you will need to train a WaveGAN model first

![NAACL'2021: Factual Probing Is [MASK]: Learning vs. Learning to Recall](https://github.com/princeton-nlp/OptiPrompt/raw/main/figure/optiprompt.png)

NAACL'2021: Factual Probing Is [MASK]: Learning vs. Learning to Recall

OptiPrompt This is the PyTorch implementation of the paper Factual Probing Is [MASK]: Learning vs. Learning to Recall. We propose OptiPrompt, a simple

OCTIS: Comparing Topic Models is Simple! A python package to optimize and evaluate topic models (accepted at EACL2021 demo track)

OCTIS : Optimizing and Comparing Topic Models is Simple! OCTIS (Optimizing and Comparing Topic models Is Simple) aims at training, analyzing and compa

A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''.

P-tuning A novel method to tune language models. Codes and datasets for paper ``GPT understands, too''. How to use our code We have released the code

Automated Machine Learning Pipeline with Feature Engineering and Hyper-Parameters Tuning

The mljar-supervised is an Automated Machine Learning Python package that works with tabular data. I

An open source framework that provides a simple, universal API for building distributed applications. Ray is packaged with RLlib, a scalable reinforcement learning library, and Tune, a scalable hyperparameter tuning library.

Ray provides a simple, universal API for building distributed applications. Ray is packaged with the following libraries for accelerating machine lear

optimization routines for hyperparameter tuning

Optunity is a library containing various optimizers for hyperparameter tuning. Hyperparameter tuning is a recurrent problem in many machine learning t

Sequential Model-based Algorithm Configuration

SMAC v3 Project Copyright (C) 2016-2018 AutoML Group Attention: This package is a reimplementation of the original SMAC tool (see reference below). Ho

Automated Machine Learning with scikit-learn

auto-sklearn auto-sklearn is an automated machine learning toolkit and a drop-in replacement for a scikit-learn estimator. Find the documentation here

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista

A beautiful and useful prompt for your shell

A Powerline style prompt for your shell A beautiful and useful prompt generator for Bash, ZSH, Fish, and tcsh: Shows some important details about the

Docker image with Uvicorn managed by Gunicorn for high-performance FastAPI web applications in Python 3.6 and above with performance auto-tuning. Optionally with Alpine Linux.

Supported tags and respective Dockerfile links python3.8, latest (Dockerfile) python3.7, (Dockerfile) python3.6 (Dockerfile) python3.8-slim (Dockerfil

Library for building powerful interactive command line applications in Python

Python Prompt Toolkit prompt_toolkit is a library for building powerful interactive command line applications in Python. Read the documentation on rea

Framework for fine-tuning pretrained transformers for Named-Entity Recognition (NER) tasks

NERDA Not only is NERDA a mesmerizing muppet-like character. NERDA is also a python package, that offers a slick easy-to-use interface for fine-tuning

Determined: Deep Learning Training Platform

Determined: Deep Learning Training Platform Determined is an open-source deep learning training platform that makes building models fast and easy. Det

Automates Machine Learning Pipeline with Feature Engineering and Hyper-Parameters Tuning :rocket:

MLJAR Automated Machine Learning Documentation: https://supervised.mljar.com/ Source Code: https://github.com/mljar/mljar-supervised Table of Contents

A Sklearn-like Framework for Hyperparameter Tuning and AutoML in Deep Learning projects. Finally have the right abstractions and design patterns to properly do AutoML. Let your pipeline steps have hyperparameter spaces. Enable checkpoints to cut duplicate calculations. Go from research to production environment easily.

Neuraxle Pipelines Code Machine Learning Pipelines - The Right Way. Neuraxle is a Machine Learning (ML) library for building machine learning pipeline

A web-based application for quick, scalable, and automated hyperparameter tuning and stacked ensembling in Python.

Xcessiv Xcessiv is a tool to help you create the biggest, craziest, and most excessive stacked ensembles you can think of. Stacked ensembles are simpl

A Python Automated Machine Learning tool that optimizes machine learning pipelines using genetic programming.

Master status: Development status: Package information: TPOT stands for Tree-based Pipeline Optimization Tool. Consider TPOT your Data Science Assista

An open source framework that provides a simple, universal API for building distributed applications. Ray is packaged with RLlib, a scalable reinforcement learning library, and Tune, a scalable hyperparameter tuning library.

Ray provides a simple, universal API for building distributed applications. Ray is packaged with the following libraries for accelerating machine lear

CLI for SQLite Databases with auto-completion and syntax highlighting

litecli Docs A command-line client for SQLite databases that has auto-completion and syntax highlighting. Installation If you already know how to inst

xonsh is a Python-powered, cross-platform, Unix-gazing shell

xonsh is a Python-powered, cross-platform, Unix-gazing shell language and command prompt.