513 Repositories

Python parameter-efficient-tuning Libraries

VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training

Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training [Arxiv] VideoMAE: Masked Autoencoders are Data-Efficient Learne

Temporally Efficient Vision Transformer for Video Instance Segmentation, CVPR 2022, Oral

Temporally Efficient Vision Transformer for Video Instance Segmentation Temporally Efficient Vision Transformer for Video Instance Segmentation (CVPR

"MST++: Multi-stage Spectral-wise Transformer for Efficient Spectral Reconstruction" (CVPRW 2022) & (Winner of NTIRE 2022 Challenge on Spectral Reconstruction from RGB)

MST++: Multi-stage Spectral-wise Transformer for Efficient Spectral Reconstruction (CVPRW 2022) Yuanhao Cai, Jing Lin, Zudi Lin, Haoqian Wang, Yulun Z

Official Pytorch and JAX implementation of "Efficient-VDVAE: Less is more"

The Official Pytorch and JAX implementation of "Efficient-VDVAE: Less is more" Arxiv preprint Louay Hazami · Rayhane Mama · Ragavan Thurairatn

Not All Points Are Equal: Learning Highly Efficient Point-based Detectors for 3D LiDAR Point Clouds (CVPR 2022, Oral)

Not All Points Are Equal: Learning Highly Efficient Point-based Detectors for 3D LiDAR Point Clouds (CVPR 2022, Oral) This is the official implementat

Implementation of the Transformer variant proposed in "Transformer Quality in Linear Time"

FLASH - Pytorch Implementation of the Transformer variant proposed in the paper Transformer Quality in Linear Time Install $ pip install FLASH-pytorch

Official implementation of the paper 'Efficient and Degradation-Adaptive Network for Real-World Image Super-Resolution'

DASR Paper Efficient and Degradation-Adaptive Network for Real-World Image Super-Resolution Jie Liang, Hui Zeng, and Lei Zhang. In arxiv preprint. Abs

Code for T-Few from "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learning"

T-Few This repository contains the official code for the paper: "Few-Shot Parameter-Efficient Fine-Tuning is Better and Cheaper than In-Context Learni

gget is a free and open-source command-line tool and Python package that enables efficient querying of genomic databases.

gget is a free and open-source command-line tool and Python package that enables efficient querying of genomic databases. gget consists of a collection of separate but interoperable modules, each designed to facilitate one type of database querying in a single line of code.

A Python library that enables ML teams to share, load, and transform data in a collaborative, flexible, and efficient way :chestnut:

Squirrel Core Share, load, and transform data in a collaborative, flexible, and efficient way What is Squirrel? Squirrel is a Python library that enab

FastAPI-Amis-Admin is a high-performance, efficient and easily extensible FastAPI admin framework. Inspired by django-admin, and has as many powerful functions as django-admin.

简体中文 | English 项目介绍 FastAPI-Amis-Admin fastapi-amis-admin是一个拥有高性能,高效率,易拓展的fastapi管理后台框架. 启发自Django-Admin,并且拥有不逊色于Django-Admin的强大功能. 源码 · 在线演示 · 文档 · 文

Easy Parallel Library (EPL) is a general and efficient deep learning framework for distributed model training.

English | 简体中文 Easy Parallel Library Overview Easy Parallel Library (EPL) is a general and efficient library for distributed model training. Usability

Implementation of CaiT models in TensorFlow and ImageNet-1k checkpoints. Includes code for inference and fine-tuning.

CaiT-TF (Going deeper with Image Transformers) This repository provides TensorFlow / Keras implementations of different CaiT [1] variants from Touvron

The code for our paper submitted to RAL/IROS 2022: OverlapTransformer: An Efficient and Rotation-Invariant Transformer Network for LiDAR-Based Place Recognition.

OverlapTransformer The code for our paper submitted to RAL/IROS 2022: OverlapTransformer: An Efficient and Rotation-Invariant Transformer Network for

Codes for "Efficient Long-Range Attention Network for Image Super-resolution"

ELAN Codes for "Efficient Long-Range Attention Network for Image Super-resolution", arxiv link. Dependencies & Installation Please refer to the follow

![[ICLR 2022 Oral] F8Net: Fixed-Point 8-bit Only Multiplication for Network Quantization](https://user-images.githubusercontent.com/25779973/164990377-bb692b26-4c7c-41bb-be8c-d91bdc6e8715.png)

[ICLR 2022 Oral] F8Net: Fixed-Point 8-bit Only Multiplication for Network Quantization

F8Net Fixed-Point 8-bit Only Multiplication for Network Quantization (ICLR 2022 Oral) OpenReview | arXiv | PDF | Model Zoo | BibTex PyTorch implementa

Includes PyTorch - Keras model porting code for ConvNeXt family of models with fine-tuning and inference notebooks.

ConvNeXt-TF This repository provides TensorFlow / Keras implementations of different ConvNeXt [1] variants. It also provides the TensorFlow / Keras mo

Learn Data Science with focus on adding value with the most efficient tech stack.

DataScienceWithPython Get started with Data Science with Python An engaging journey to become a Data Scientist with Python TL;DR Download all Jupyter

Prompt tuning toolkit for GPT-2 and GPT-Neo

mkultra mkultra is a prompt tuning toolkit for GPT-2 and GPT-Neo. Prompt tuning injects a string of 20-100 special tokens into the context in order to

Multilingual Emotion classification using BERT (fine-tuning). Published at the WASSA workshop (ACL2022).

XLM-EMO: Multilingual Emotion Prediction in Social Media Text Abstract Detecting emotion in text allows social and computational scientists to study h

Official Implementation of DE-DETR and DELA-DETR in "Towards Data-Efficient Detection Transformers"

DE-DETRs By Wen Wang, Jing Zhang, Yang Cao, Yongliang Shen, and Dacheng Tao This repository is an official implementation of DE-DETR and DELA-DETR in

Official Implementation of DE-CondDETR and DELA-CondDETR in "Towards Data-Efficient Detection Transformers"

DE-DETRs By Wen Wang, Jing Zhang, Yang Cao, Yongliang Shen, and Dacheng Tao This repository is an official implementation of DE-CondDETR and DELA-Cond

Lowest memory consumption and second shortest runtime in NTIRE 2022 challenge on Efficient Super-Resolution

FMEN Lowest memory consumption and second shortest runtime in NTIRE 2022 on Efficient Super-Resolution. Our paper: Fast and Memory-Efficient Network T

Repository for fine-tuning Transformers 🤗 based seq2seq speech models in JAX/Flax.

Seq2Seq Speech in JAX A JAX/Flax repository for combining a pre-trained speech encoder model (e.g. Wav2Vec2, HuBERT, WavLM) with a pre-trained text de

Code for the Findings of NAACL 2022(Long Paper): AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks

AdapterBias: Parameter-efficient Token-dependent Representation Shift for Adapters in NLP Tasks arXiv link: upcoming To be published in Findings of NA

This is an official implementation for "DeciWatch: A Simple Baseline for 10x Efficient 2D and 3D Pose Estimation"

DeciWatch: A Simple Baseline for 10× Efficient 2D and 3D Pose Estimation This repo is the official implementation of "DeciWatch: A Simple Baseline for

Code for our SIGIR 2022 accepted paper : P3 Ranker: Mitigating the Gaps between Pre-training and Ranking Fine-tuning with Prompt-based Learning and Pre-finetuning

P3 Ranker Implementation for our SIGIR2022 accepted paper: P3 Ranker: Mitigating the Gaps between Pre-training and Ranking Fine-tuning with Prompt-bas

In this project we predict the forest cover type using the cartographic variables in the training/test datasets.

Kaggle Competition: Forest Cover Type Prediction In this project we predict the forest cover type (the predominant kind of tree cover) using the carto

Under the hood working of transformers, fine-tuning GPT-3 models, DeBERTa, vision models, and the start of Metaverse, using a variety of NLP platforms: Hugging Face, OpenAI API, Trax, and AllenNLP

Transformers-for-NLP-2nd-Edition @copyright 2022, Packt Publishing, Denis Rothman Contact me for any question you have on LinkedIn Get the book on Ama

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning This repository is official Tensorflow implementation of paper: Ensemb

code for the ICLR'22 paper: On Robust Prefix-Tuning for Text Classification

On Robust Prefix-Tuning for Text Classification Prefix-tuning has drawed much attention as it is a parameter-efficient and modular alternative to adap

Implementation of a memory efficient multi-head attention as proposed in the paper, "Self-attention Does Not Need O(n²) Memory"

Memory Efficient Attention Pytorch Implementation of a memory efficient multi-head attention as proposed in the paper, Self-attention Does Not Need O(

Colar: Effective and Efficient Online Action Detection by Consulting Exemplars, CVPR 2022.

Colar: Effective and Efficient Online Action Detection by Consulting Exemplars This repository is the official implementation of Colar. In this work,

Codes for "Template-free Prompt Tuning for Few-shot NER".

EntLM The source codes for EntLM. Dependencies: Cuda 10.1, python 3.6.5 To install the required packages by following commands: $ pip3 install -r requ

a simple, efficient, and intuitive text editor

Oxygen beta a simple, efficient, and intuitive text editor Overview oxygen is a simple, efficient, and intuitive text editor designed as more featured

PyTorch Implementation of DiffGAN-TTS: High-Fidelity and Efficient Text-to-Speech with Denoising Diffusion GANs

DiffGAN-TTS - PyTorch Implementation PyTorch implementation of DiffGAN-TTS: High

ZeroGen: Efficient Zero-shot Learning via Dataset Generation

ZEROGEN This repository contains the code for our paper “ZeroGen: Efficient Zero

HashNeRF-pytorch - Pure PyTorch Implementation of NVIDIA paper on Instant Training of Neural Graphics primitives

HashNeRF-pytorch Instant-NGP recently introduced a Multi-resolution Hash Encodin

SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING - The Facebook paper about fine tuning RoBERTa with contrastive loss

"# SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING" i

FAMIE is a comprehensive and efficient active learning (AL) toolkit for multilingual information extraction (IE)

FAMIE: A Fast Active Learning Framework for Multilingual Information Extraction

OpenDelta - An Open-Source Framework for Paramter Efficient Tuning.

OpenDelta is a toolkit for parameter efficient methods (we dub it as delta tuning), by which users could flexibly assign (or add) a small amount parameters to update while keeping the most paramters frozen. By using OpenDelta, users could easily implement prefix-tuning, adapters, Lora, or any other types of delta tuning with preferred PTMs.

Theory-inspired Parameter Control Benchmarks for Dynamic Algorithm Configuration

This repo is for the paper: Theory-inspired Parameter Control Benchmarks for Dynamic Algorithm Configuration The DAC environment is based on the Dynam

HCQ: Hybrid Contrastive Quantization for Efficient Cross-View Video Retrieval

HCQ: Hybrid Contrastive Quantization for Efficient Cross-View Video Retrieval [toc] 1. Introduction This repository provides the code for our paper at

Learning Features with Parameter-Free Layers, ICLR 2022

Learning Features with Parameter-Free Layers (ICLR 2022) Dongyoon Han, YoungJoon Yoo, Beomyoung Kim, Byeongho Heo | Paper NAVER AI Lab, NAVER CLOVA Up

A curated list of automated deep learning (including neural architecture search and hyper-parameter optimization) resources.

Awesome AutoDL A curated list of automated deep learning related resources. Inspired by awesome-deep-vision, awesome-adversarial-machine-learning, awe

Monocular 3D Object Detection: An Extrinsic Parameter Free Approach (CVPR2021)

Monocular 3D Object Detection: An Extrinsic Parameter Free Approach (CVPR2021) Yunsong Zhou, Yuan He, Hongzi Zhu, Cheng Wang, Hongyang Li, Qinhong Jia

Learning Features with Parameter-Free Layers (ICLR 2022)

Learning Features with Parameter-Free Layers (ICLR 2022) Dongyoon Han, YoungJoon Yoo, Beomyoung Kim, Byeongho Heo | Paper NAVER AI Lab, NAVER CLOVA Up

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers This repo contains our codes for the paper "No Parameters Left Behind: Sensitivity Gu

SGPT: Multi-billion parameter models for semantic search

SGPT: Multi-billion parameter models for semantic search This repository contains code, results and pre-trained models for the paper SGPT: Multi-billi

Learning Efficient Online 3D Bin Packing on Packing Configuration Trees

Learning Efficient Online 3D Bin Packing on Packing Configuration Trees This repository is being continuously updated, please stay tuned! Any code con

Gold(Gold) is a modern cryptocurrency built from scratch, designed to be efficient, decentralized, and secure

gold-blockchain (Gold) Gold(Gold) is a modern cryptocurrency built from scratch, designed to be efficient, decentralized, and secure. Here are some of

Large-scale Knowledge Graph Construction with Prompting

Large-scale Knowledge Graph Construction with Prompting across tasks (predictive and generative), and modalities (language, image, vision + language, etc.)

Youtube Downloader is a simple but highly efficient Youtube Video Downloader, made completly using Python

Youtube Downloader is a simple but highly efficient Youtube Video Downloader, made completly using Python

Code Impementation for "Mold into a Graph: Efficient Bayesian Optimization over Mixed Spaces"

Code Impementation for "Mold into a Graph: Efficient Bayesian Optimization over Mixed Spaces" This repo contains the implementation of GEBO algorithm.

Efficient Deep Learning Systems course

Efficient Deep Learning Systems This repository contains materials for the Efficient Deep Learning Systems course taught at the Faculty of Computer Sc

Image-based Navigation in Real-World Environments via Multiple Mid-level Representations: Fusion Models Benchmark and Efficient Evaluation

Image-based Navigation in Real-World Environments via Multiple Mid-level Representations: Fusion Models Benchmark and Efficient Evaluation This reposi

FewBit — a library for memory efficient training of large neural networks

FewBit FewBit — a library for memory efficient training of large neural networks. Its efficiency originates from storage optimizations applied to back

This repository provides an efficient PyTorch-based library for training deep models.

An Efficient Library for Training Deep Models This repository provides an efficient PyTorch-based library for training deep models. Installation Make

Pytorch Performace Tuning, WandB, AMP, Multi-GPU, TensorRT, Triton

Plant Pathology 2020 FGVC7 Introduction A deep learning model pipeline for training, experimentaiton and deployment for the Kaggle Competition, Plant

Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners

DART Implementation for ICLR2022 paper Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners. Environment [email protected] Use pi

Generate fine-tuning samples & Fine-tuning the model & Generate samples by transferring Note On

UPMT Generate fine-tuning samples & Fine-tuning the model & Generate samples by transferring Note On See main.py as an example: from model import PopM

Hyperparameters tuning and features selection are two common steps in every machine learning pipeline.

shap-hypetune A python package for simultaneous Hyperparameters Tuning and Features Selection for Gradient Boosting Models. Overview Hyperparameters t

Efficient-GlobalPointer - Pytorch Efficient GlobalPointer

引言 感谢苏神带来的模型,原文地址:https://spaces.ac.cn/archives/8877 如何运行 对应模型EfficientGlobalPoi

EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks

EncT5 (Unofficial) Pytorch Implementation of EncT5: Fine-tuning T5 Encoder for Non-autoregressive Tasks About Finetune T5 model for classification & r

"Structure-Augmented Text Representation Learning for Efficient Knowledge Graph Completion"(WWW 2021)

STAR_KGC This repo contains the source code of the paper accepted by WWW'2021. "Structure-Augmented Text Representation Learning for Efficient Knowled

A "multiclipboards" script for an efficient way to improve the original clipboards which are only able to save one string at a time

A "multiclipboards" script for an efficient way to improve the original clipboards which are only able to save one string at a time. Works on both Windows and Linux.

Efficient Multi Collection Style Transfer Using GAN

Proposed a new model that can make style transfer from single style image, and allow to transfer into multiple different styles in a single model.

TheTimeMachine - Weaponizing WaybackUrls for Recon, BugBounties , OSINT, Sensitive Endpoints and what not

The Time Machine - Weaponizing WaybackUrls for Recon, BugBounties , OSINT, Sensi

Pynomial - a lightweight python library for implementing the many confidence intervals for the risk parameter of a binomial model

Pynomial - a lightweight python library for implementing the many confidence intervals for the risk parameter of a binomial model

This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML)

package tests docs license stats support This repository contains FEDOT - an open-source framework for automated modeling and machine learning (AutoML

Peek-a-Boo: What (More) is Disguised in a Randomly Weighted Neural Network, and How to Find It Efficiently

Peek-a-Boo: What (More) is Disguised in a Randomly Weighted Neural Network, and How to Find It Efficiently This repository is the official implementat

A functional and efficient python implementation of the 3D version of Maxwell's equations

py-maxwell-fdfd Solving Maxwell's equations via A python implementation of the 3D curl-curl E-field equations. This code contains additional work to e

Utilities to make function-based views cleaner, more efficient, and better tasting.

django-fbv Utilities to make Django function-based views cleaner, more efficient, and better tasting. 💥 📖 Complete documentation: https://django-fbv

A curated list of efficient attention modules

awesome-fast-attention A curated list of efficient attention modules

Local-Global Stratified Transformer for Efficient Video Recognition

DualFormer This repo is the implementation of our manuscript entitled "Local-Global Stratified Transformer for Efficient Video Recognition". Our model

Efficient Online Bayesian Inference for Neural Bandits

Efficient Online Bayesian Inference for Neural Bandits By Gerardo Durán-Martín, Aleyna Kara, and Kevin Murphy AISTATS 2022.

Original Implementation of Prompt Tuning from Lester, et al, 2021

Prompt Tuning This is the code to reproduce the experiments from the EMNLP 2021 paper "The Power of Scale for Parameter-Efficient Prompt Tuning" (Lest

A handy tool for common machine learning models' hyper-parameter tuning.

Common machine learning models' hyperparameter tuning This repo is for a collection of hyper-parameter tuning for "common" machine learning models, in

In this project, we compared Spanish BERT and Multilingual BERT in the Sentiment Analysis task.

Applying BERT Fine Tuning to Sentiment Classification on Amazon Reviews Abstract Sentiment analysis has made great progress in recent years, due to th

Official PyTorch Implementation of paper EAN: Event Adaptive Network for Efficient Action Recognition

Official PyTorch Implementation of paper EAN: Event Adaptive Network for Efficient Action Recognition

"Moshpit SGD: Communication-Efficient Decentralized Training on Heterogeneous Unreliable Devices", official implementation

Moshpit SGD: Communication-Efficient Decentralized Training on Heterogeneous Unreliable Devices This repository contains the official PyTorch implemen

An implementation of the efficient attention module.

Efficient Attention An implementation of the efficient attention module. Description Efficient attention is an attention mechanism that substantially

![[NeurIPS 2020] Official Implementation:](https://github.com/giannisdaras/smyrf/raw/master/visuals/quality_degradation.png)

[NeurIPS 2020] Official Implementation: "SMYRF: Efficient Attention using Asymmetric Clustering".

SMYRF: Efficient attention using asymmetric clustering Get started: Abstract We propose a novel type of balanced clustering algorithm to approximate a

Source code for paper "Black-Box Tuning for Language-Model-as-a-Service"

Black-Box-Tuning Source code for paper "Black-Box Tuning for Language-Model-as-a-Service". Being busy recently, the code in this repo and this tutoria

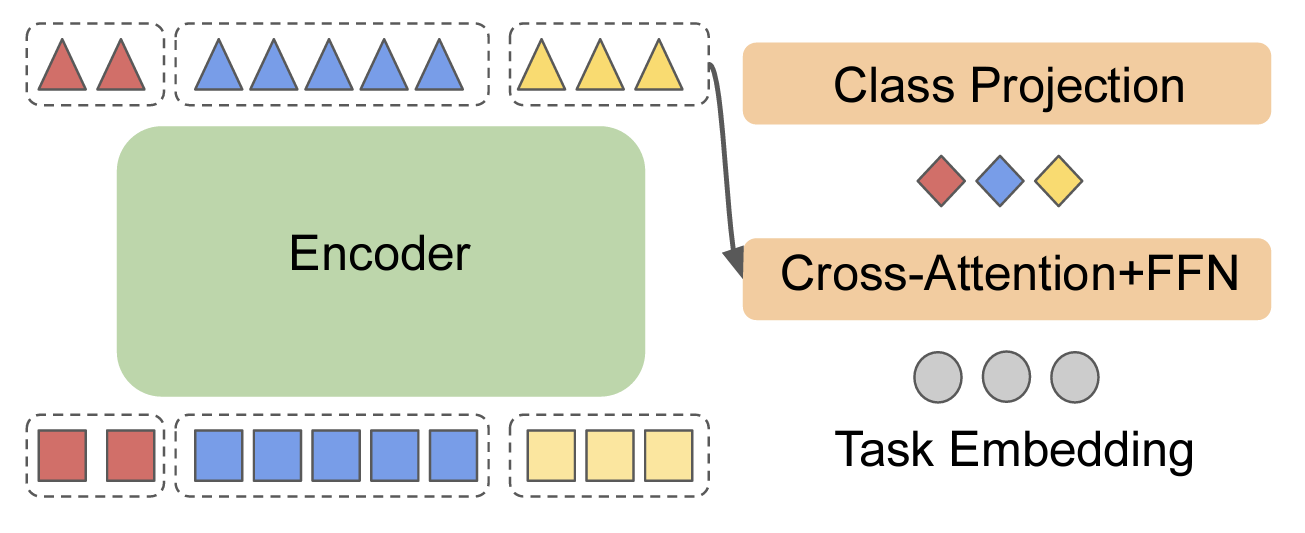

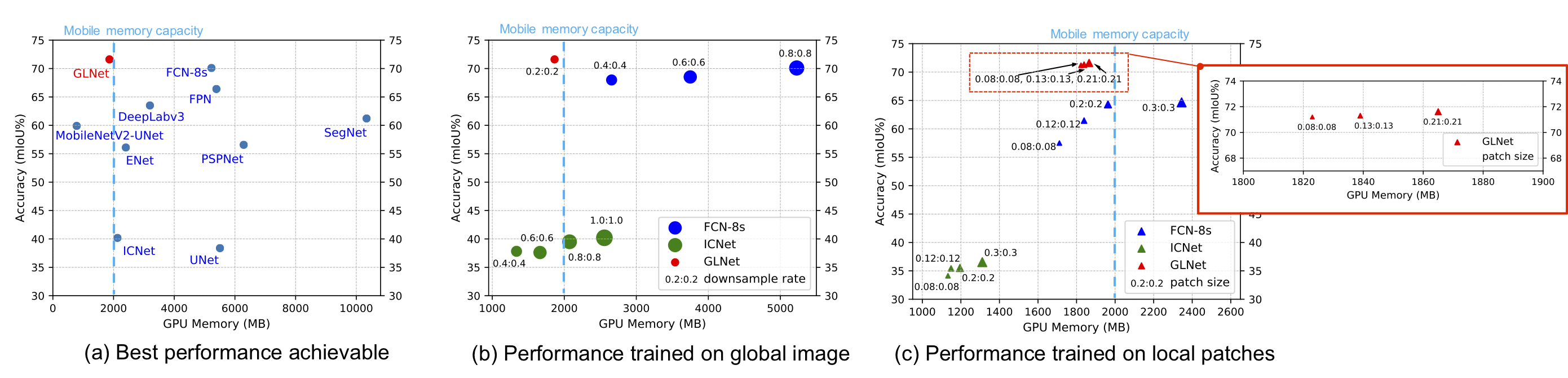

GLNet for Memory-Efficient Segmentation of Ultra-High Resolution Images

GLNet for Memory-Efficient Segmentation of Ultra-High Resolution Images Collaborative Global-Local Networks for Memory-Efficient Segmentation of Ultra-

Eff video representation - Efficient video representation through neural fields

Neural Residual Flow Fields for Efficient Video Representations 1. Download MPI

Black-Box-Tuning - Black-Box Tuning for Language-Model-as-a-Service

Black-Box-Tuning Source code for paper "Black-Box Tuning for Language-Model-as-a

Official Code For TDEER: An Efficient Translating Decoding Schema for Joint Extraction of Entities and Relations (EMNLP2021)

TDEER 🦌 🦒 Official Code For TDEER: An Efficient Translating Decoding Schema for Joint Extraction of Entities and Relations (EMNLP2021) Overview TDEE

We present a regularized self-labeling approach to improve the generalization and robustness properties of fine-tuning.

Overview This repository provides the implementation for the paper "Improved Regularization and Robustness for Fine-tuning in Neural Networks", which

K Closest Points and Maximum Clique Pruning for Efficient and Effective 3D Laser Scan Matching (To appear in RA-L 2022)

KCP The official implementation of KCP: k Closest Points and Maximum Clique Pruning for Efficient and Effective 3D Laser Scan Matching, accepted for p

Intel® Neural Compressor is an open-source Python library running on Intel CPUs and GPUs

Intel® Neural Compressor targeting to provide unified APIs for network compression technologies, such as low precision quantization, sparsity, pruning, knowledge distillation, across different deep learning frameworks to pursue optimal inference performance.

Source code of paper: "HRegNet: A Hierarchical Network for Efficient and Accurate Outdoor LiDAR Point Cloud Registration".

HRegNet: A Hierarchical Network for Efficient and Accurate Outdoor LiDAR Point Cloud Registration Environments The code mainly requires the following

Financial portfolio optimisation in python, including classical efficient frontier, Black-Litterman, Hierarchical Risk Parity

PyPortfolioOpt has recently been published in the Journal of Open Source Software 🎉 PyPortfolioOpt is a library that implements portfolio optimizatio

Fast, efficient Blowfish cipher implementation in pure Python (3.4+).

blowfish This module implements the Blowfish cipher using only Python (3.4+). Blowfish is a block cipher that can be used for symmetric-key encryption

Logistic Bandit experiments. Official code for the paper "Jointly Efficient and Optimal Algorithms for Logistic Bandits".

Code for the paper Jointly Efficient and Optimal Algorithms for Logistic Bandits, by Louis Faury, Marc Abeille, Clément Calauzènes and Kwang-Sun Jun.

Easy and Efficient Object Detector

EOD Easy and Efficient Object Detector EOD (Easy and Efficient Object Detection) is a general object detection model production framework. It aim on p

MVS2D: Efficient Multi-view Stereo via Attention-Driven 2D Convolutions

MVS2D: Efficient Multi-view Stereo via Attention-Driven 2D Convolutions Project Page | Paper If you find our work useful for your research, please con

Make YouTube videos tasks in Todoist faster and time efficient!

Youtubist Basically fork of yt-dlp python module to my needs. You can paste playlist or channel link on the YouTube. It will automatically format to s

Open-source implementation of Google Vizier for hyper parameters tuning

Advisor Introduction Advisor is the hyper parameters tuning system for black box optimization. It is the open-source implementation of Google Vizier w

Very efficient backup system based on the git packfile format, providing fast incremental saves and global deduplication

Very efficient backup system based on the git packfile format, providing fast incremental saves and global deduplication (among and within files, including virtual machine images). Current release is 0.31, and the development branch is master. Please post problems or patches to the mailing list for discussion (see the end of the README below).