2006 Repositories

Python radio-transformer-networks Libraries

LynxKite: a complete graph data science platform for very large graphs and other datasets.

LynxKite is a complete graph data science platform for very large graphs and other datasets. It seamlessly combines the benefits of a friendly graphical interface and a powerful Python API.

Group Activity Recognition with Clustered Spatial Temporal Transformer

GroupFormer Group Activity Recognition with Clustered Spatial-TemporalTransformer Backbone Style Action Acc Activity Acc Config Download Inv3+flow+pos

Source code of SIGIR2021 Paper 'One Chatbot Per Person: Creating Personalized Chatbots based on Implicit Profiles'

DHAP Source code of SIGIR2021 Long Paper: One Chatbot Per Person: Creating Personalized Chatbots based on Implicit User Profiles . Preinstallation Fir

An End-to-End Machine Learning Library to Optimize AUC (AUROC, AUPRC).

Logo by Zhuoning Yuan LibAUC: A Machine Learning Library for AUC Optimization Website | Updates | Installation | Tutorial | Research | Github LibAUC a

ttslearn: Library for Pythonで学ぶ音声合成 (Text-to-speech with Python)

ttslearn: Library for Pythonで学ぶ音声合成 (Text-to-speech with Python) 日本語は以下に続きます (Japanese follows) English: This book is written in Japanese and primaril

Code implementation from my Medium blog post: [Transformers from Scratch in PyTorch]

transformer-from-scratch Code for my Medium blog post: Transformers from Scratch in PyTorch Note: This Transformer code does not include masked attent

Code for MentorNet: Learning Data-Driven Curriculum for Very Deep Neural Networks

MentorNet: Learning Data-Driven Curriculum for Very Deep Neural Networks This is the code for the paper: MentorNet: Learning Data-Driven Curriculum fo

Curriculum Domain Adaptation for Semantic Segmentation of Urban Scenes, ICCV 2017

AdaptationSeg This is the Python reference implementation of AdaptionSeg proposed in "Curriculum Domain Adaptation for Semantic Segmentation of Urban

This repository contains a CBIR system that uses swin transformer to extract image's feature.

Swin-transformer based CBIR This repository contains a CBIR(content-based image retrieval) system. Here we use Swin-transformer to extract query image

ONNX Runtime: cross-platform, high performance ML inferencing and training accelerator

ONNX Runtime is a cross-platform inference and training machine-learning accelerator. ONNX Runtime inference can enable faster customer experiences an

PyTorch implementation of Super SloMo by Jiang et al.

Super-SloMo PyTorch implementation of "Super SloMo: High Quality Estimation of Multiple Intermediate Frames for Video Interpolation" by Jiang H., Sun

Source code for Fathony, Sahu, Willmott, & Kolter, "Multiplicative Filter Networks", ICLR 2021.

Multiplicative Filter Networks This repository contains a PyTorch MFN implementation and code to perform & reproduce experiments from the ICLR 2021 pa

Machine learning algorithms for many-body quantum systems

NetKet NetKet is an open-source project delivering cutting-edge methods for the study of many-body quantum systems with artificial neural networks and

StyleSwin: Transformer-based GAN for High-resolution Image Generation

StyleSwin This repo is the official implementation of "StyleSwin: Transformer-based GAN for High-resolution Image Generation". By Bowen Zhang, Shuyang

Official Pytorch implementation of the paper: "Locally Shifted Attention With Early Global Integration"

Locally-Shifted-Attention-With-Early-Global-Integration Pretrained models You can download all the models from here. Training Imagenet python -m torch

Source Code for AAAI 2022 paper "Graph Convolutional Networks with Dual Message Passing for Subgraph Isomorphism Counting and Matching"

Graph Convolutional Networks with Dual Message Passing for Subgraph Isomorphism Counting and Matching This repository is an official implementation of

A Japanese tokenizer based on recurrent neural networks

Nagisa is a python module for Japanese word segmentation/POS-tagging. It is designed to be a simple and easy-to-use tool. This tool has the following

Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021).

STAR-pytorch Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021). CVF (pdf) STAR-DC

This project aims to explore the deployment of Swin-Transformer based on TensorRT, including the test results of FP16 and INT8.

Swin Transformer This project aims to explore the deployment of SwinTransformer based on TensorRT, including the test results of FP16 and INT8. Introd

OSLO: Open Source framework for Large-scale transformer Optimization

O S L O Open Source framework for Large-scale transformer Optimization What's New: December 21, 2021 Released OSLO 1.0. What is OSLO about? OSLO is a

Source Code for AAAI 2022 paper "Graph Convolutional Networks with Dual Message Passing for Subgraph Isomorphism Counting and Matching"

Graph Convolutional Networks with Dual Message Passing for Subgraph Isomorphism Counting and Matching This repository is an official implementation of

TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning

TransZero++ This repository contains the testing code for the paper "TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning" submitted

MISSFormer: An Effective Medical Image Segmentation Transformer

MISSFormer Code for paper "MISSFormer: An Effective Medical Image Segmentation Transformer". Please read our preprint at the following link: paper_add

Header-only library for using Keras models in C++.

frugally-deep Use Keras models in C++ with ease Table of contents Introduction Usage Performance Requirements and Installation FAQ Introduction Would

PyTorch implementation of HDN(Homography Decomposition Networks) for planar object tracking

Homography Decomposition Networks for Planar Object Tracking This project is the offical PyTorch implementation of HDN(Homography Decomposition Networ

Code for 2021 NeurIPS --- Towards Multi-Grained Explainability for Graph Neural Networks

ReFine: Multi-Grained Explainability for GNNs This is the official code for Towards Multi-Grained Explainability for Graph Neural Networks (NeurIPS 20

TensorFlow implementation of "TokenLearner: What Can 8 Learned Tokens Do for Images and Videos?"

TokenLearner: What Can 8 Learned Tokens Do for Images and Videos? Source: Improving Vision Transformer Efficiency and Accuracy by Learning to Tokenize

Tensorflow Tutorials using Jupyter Notebook

Tensorflow Tutorials using Jupyter Notebook TensorFlow tutorials written in Python (of course) with Jupyter Notebook. Tried to explain as kindly as po

Human Activity Recognition example using TensorFlow on smartphone sensors dataset and an LSTM RNN. Classifying the type of movement amongst six activity categories - Guillaume Chevalier

LSTMs for Human Activity Recognition Human Activity Recognition (HAR) using smartphones dataset and an LSTM RNN. Classifying the type of movement amon

A deep learning network built with TensorFlow and Keras to classify gender and estimate age.

Convolutional Neural Network (CNN). This repository contains a source code of a deep learning network built with TensorFlow and Keras to classify gend

Deep Reinforcement Learning for Keras.

Deep Reinforcement Learning for Keras What is it? keras-rl implements some state-of-the art deep reinforcement learning algorithms in Python and seaml

![[CVPR 2020] Local Class-Specific and Global Image-Level Generative Adversarial Networks for Semantic-Guided Scene Generation](https://github.com/Ha0Tang/LGGAN/raw/master/imgs/framework.jpg)

[CVPR 2020] Local Class-Specific and Global Image-Level Generative Adversarial Networks for Semantic-Guided Scene Generation

Contents Local and Global GAN Cross-View Image Translation Semantic Image Synthesis Acknowledgments Related Projects Citation Contributions Collaborat

O-CNN: Octree-based Convolutional Neural Networks for 3D Shape Analysis

O-CNN This repository contains the implementation of our papers related with O-CNN. The code is released under the MIT license. O-CNN: Octree-based Co

![[ECCV'20] Convolutional Occupancy Networks](https://github.com/autonomousvision/convolutional_occupancy_networks/raw/master/media/teaser_matterport.gif)

[ECCV'20] Convolutional Occupancy Networks

Convolutional Occupancy Networks Paper | Supplementary | Video | Teaser Video | Project Page | Blog Post This repository contains the implementation o

C3DPO - Canonical 3D Pose Networks for Non-rigid Structure From Motion.

C3DPO: Canonical 3D Pose Networks for Non-Rigid Structure From Motion By: David Novotny, Nikhila Ravi, Benjamin Graham, Natalia Neverova, Andrea Vedal

Volumetric Correspondence Networks for Optical Flow, NeurIPS 2019.

VCN: Volumetric correspondence networks for optical flow [project website] Requirements python 3.6 pytorch 1.1.0-1.3.0 pytorch correlation module (opt

Official Pytorch implementation of Scene Representation Networks: Continuous 3D-Structure-Aware Neural Scene Representations

Scene Representation Networks This is the official implementation of the NeurIPS submission "Scene Representation Networks: Continuous 3D-Structure-Aw

3D HourGlass Networks for Human Pose Estimation Through Videos

3D-HourGlass-Network 3D CNN Based Hourglass Network for Human Pose Estimation (3D Human Pose) from videos. This was my summer'18 research project. Dis

Implementing Vision Transformer (ViT) in PyTorch

Lightning-Hydra-Template A clean and scalable template to kickstart your deep learning project 🚀 ⚡ 🔥 Click on Use this template to initialize new re

Build Low Code Automated Tensorflow, What-IF explainable models in just 3 lines of code.

Build Low Code Automated Tensorflow explainable models in just 3 lines of code.

Implementation of Auto-Conditioned Recurrent Networks for Extended Complex Human Motion Synthesis

acLSTM_motion This folder contains an implementation of acRNN for the CMU motion database written in Pytorch. See the following links for more backgro

Something I built to test for Log4J vulnerabilities on customer networks.

Log4J-Scanner Something I built to test for Log4J vulnerabilities on customer networks. I'm not responsible if your computer blows up, catches fire or

pyo is a Python module written in C to help digital signal processing script creation.

pyo is a Python module written in C to help digital signal processing script creation.

Almost State-of-the-art Text Generation library

Ps: we are adding transformer model soon Text Gen 🐐 Almost State-of-the-art Text Generation library Text gen is a python library that allow you build

Codes for building and training the neural network model described in Domain-informed neural networks for interaction localization within astroparticle experiments.

Domain-informed Neural Networks Codes for building and training the neural network model described in Domain-informed neural networks for interaction

Mixed Transformer UNet for Medical Image Segmentation

MT-UNet Update 2021/11/19 Thank you for your interest in our work. We have uploaded the code of our MTUNet to help peers conduct further research on i

Pytorch implementation of our paper under review -- 1xN Pattern for Pruning Convolutional Neural Networks

1xN Pattern for Pruning Convolutional Neural Networks (paper) . This is Pytorch re-implementation of "1xN Pattern for Pruning Convolutional Neural Net

Implementation of Nalbach et al. 2017 paper.

Deep Shading Convolutional Neural Networks for Screen-Space Shading Our project is based on Nalbach et al. 2017 paper. In this project, a set of buffe

CDTrans: Cross-domain Transformer for Unsupervised Domain Adaptation

[ICCV2021] TransReID: Transformer-based Object Re-Identification [pdf] The official repository for TransReID: Transformer-based Object Re-Identificati

This repository builds a basic vision transformer from scratch so that one beginner can understand the theory of vision transformer.

vision-transformer-from-scratch This repository includes several kinds of vision transformers from scratch so that one beginner can understand the the

A Transformer-Based Feature Segmentation and Region Alignment Method For UAV-View Geo-Localization

University1652-Baseline [Paper] [Slide] [Explore Drone-view Data] [Explore Satellite-view Data] [Explore Street-view Data] [Video Sample] [中文介绍] This

Official PyTorch implementation of SegFormer

SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers Figure 1: Performance of SegFormer-B0 to SegFormer-B5. Project page

Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE)

[中文|English] Rotary Transformer Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE). The RoPE is a relative

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

FNet: Mixing Tokens with Fourier Transforms Pytorch implementation of Fnet : Mixing Tokens with Fourier Transforms. Citation: @misc{leethorp2021fnet,

GANformer: Generative Adversarial Transformers

GANformer: Generative Adversarial Transformers Drew A. Hudson* & C. Lawrence Zitnick Update: We released the new GANformer2 paper! *I wish to thank Ch

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Edge-Augmented Graph Transformer

Edge-augmented Graph Transformer Introduction This is the official implementation of the Edge-augmented Graph Transformer (EGT) as described in https:

FastFormers - highly efficient transformer models for NLU

FastFormers FastFormers provides a set of recipes and methods to achieve highly efficient inference of Transformer models for Natural Language Underst

Transformer training code for sequential tasks

Sequential Transformer This is a code for training Transformers on sequential tasks such as language modeling. Unlike the original Transformer archite

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Fine-tuning scripts for evaluating transformer-based models on KLEJ benchmark.

The KLEJ Benchmark Baselines The KLEJ benchmark (Kompleksowa Lista Ewaluacji Językowych) is a set of nine evaluation tasks for the Polish language und

Large-scale pretraining for dialogue

A State-of-the-Art Large-scale Pretrained Response Generation Model (DialoGPT) This repository contains the source code and trained model for a large-

The code for two papers: Feedback Transformer and Expire-Span.

transformer-sequential This repo contains the code for two papers: Feedback Transformer Expire-Span The training code is structured for long sequentia

Funnel-Transformer: Filtering out Sequential Redundancy for Efficient Language Processing

Introduction Funnel-Transformer is a new self-attention model that gradually compresses the sequence of hidden states to a shorter one and hence reduc

Sinkhorn Transformer - Practical implementation of Sparse Sinkhorn Attention

Sinkhorn Transformer This is a reproduction of the work outlined in Sparse Sinkhorn Attention, with additional enhancements. It includes a parameteriz

An implementation of the Pay Attention when Required transformer

Pay Attention when Required (PAR) Transformer-XL An implementation of the Pay Attention when Required transformer from the paper: https://arxiv.org/pd

Fully featured implementation of Routing Transformer

Routing Transformer A fully featured implementation of Routing Transformer. The paper proposes using k-means to route similar queries / keys into the

Reproducing the Linear Multihead Attention introduced in Linformer paper (Linformer: Self-Attention with Linear Complexity)

Linear Multihead Attention (Linformer) PyTorch Implementation of reproducing the Linear Multihead Attention introduced in Linformer paper (Linformer:

DeBERTa: Decoding-enhanced BERT with Disentangled Attention

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

Longformer: The Long-Document Transformer

Longformer Longformer and LongformerEncoderDecoder (LED) are pretrained transformer models for long documents. ***** New December 1st, 2020: Longforme

This repository contains the code for running the character-level Sandwich Transformers from our ACL 2020 paper on Improving Transformer Models by Reordering their Sublayers.

Improving Transformer Models by Reordering their Sublayers This repository contains the code for running the character-level Sandwich Transformers fro

Source code of paper "BP-Transformer: Modelling Long-Range Context via Binary Partitioning"

BP-Transformer This repo contains the code for our paper BP-Transformer: Modeling Long-Range Context via Binary Partition Zihao Ye, Qipeng Guo, Quan G

Conditional Transformer Language Model for Controllable Generation

CTRL - A Conditional Transformer Language Model for Controllable Generation Authors: Nitish Shirish Keskar, Bryan McCann, Lav Varshney, Caiming Xiong,

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

This is the repository for the NeurIPS-21 paper [Contrastive Graph Poisson Networks: Semi-Supervised Learning with Extremely Limited Labels].

CGPN This is the repository for the NeurIPS-21 paper [Contrastive Graph Poisson Networks: Semi-Supervised Learning with Extremely Limited Labels]. Req

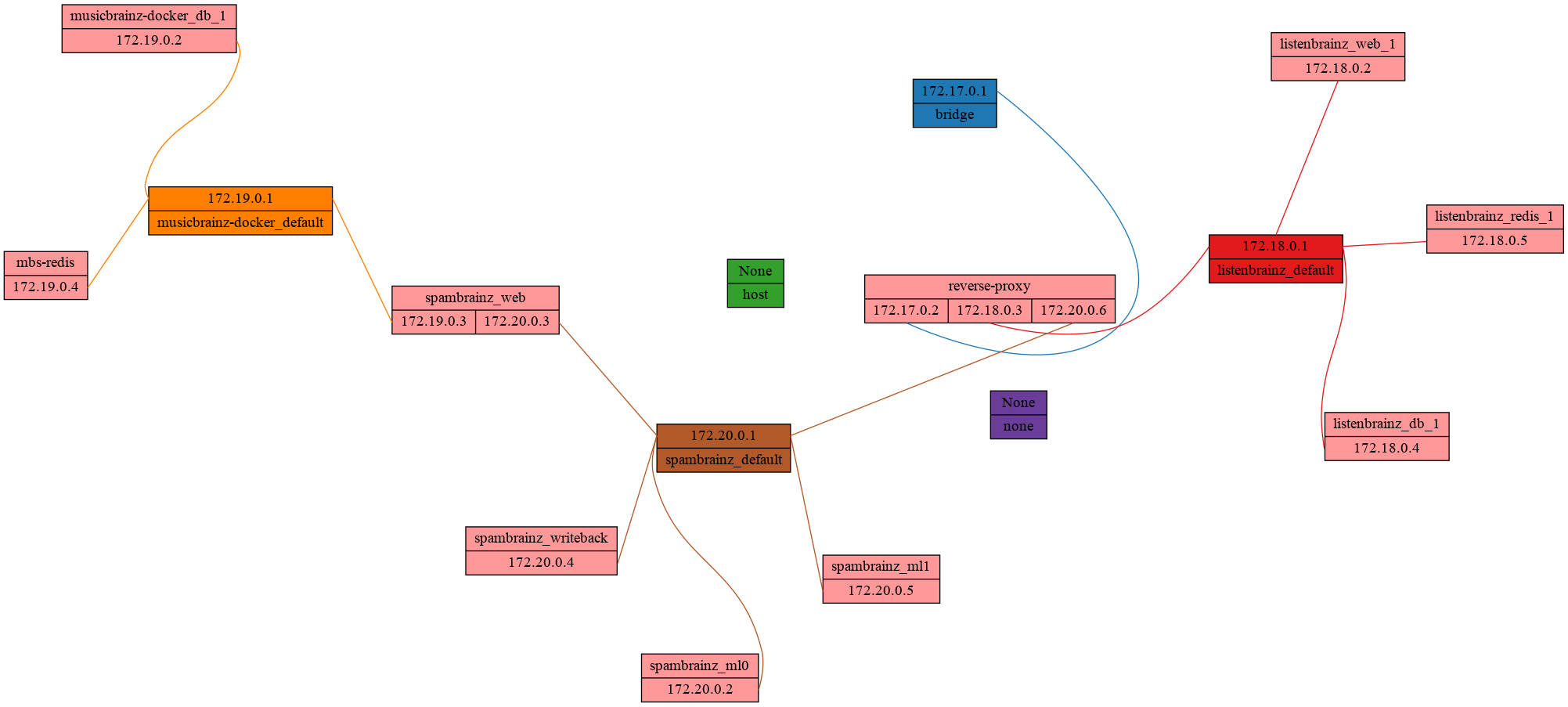

Efficient and Scalable Physics-Informed Deep Learning and Scientific Machine Learning on top of Tensorflow for multi-worker distributed computing

Notice: Support for Python 3.6 will be dropped in v.0.2.1, please plan accordingly! Efficient and Scalable Physics-Informed Deep Learning Collocation-

PyDEns is a framework for solving Ordinary and Partial Differential Equations (ODEs & PDEs) using neural networks

PyDEns PyDEns is a framework for solving Ordinary and Partial Differential Equations (ODEs & PDEs) using neural networks. With PyDEns one can solve PD

Physics-Informed Neural Networks (PINN) and Deep BSDE Solvers of Differential Equations for Scientific Machine Learning (SciML) accelerated simulation

NeuralPDE NeuralPDE.jl is a solver package which consists of neural network solvers for partial differential equations using scientific machine learni

Sparse Physics-based and Interpretable Neural Networks

Sparse Physics-based and Interpretable Neural Networks for PDEs This repository contains the code and manuscript for research done on Sparse Physics-b

Implementation of Research Paper "Learning to Enhance Low-Light Image via Zero-Reference Deep Curve Estimation"

Zero-DCE and Zero-DCE++(Lite architechture for Mobile and edge Devices) Papers Abstract The paper presents a novel method, Zero-Reference Deep Curve E

[ACM MM 2021] Yes, "Attention is All You Need", for Exemplar based Colorization

Transformer for Image Colorization This is an implemention for Yes, "Attention Is All You Need", for Exemplar based Colorization, and the current soft

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation in TensorFlow 2

Mask2Former: Masked-attention Mask Transformer for Universal Image Segmentation in TensorFlow 2 Bowen Cheng, Ishan Misra, Alexander G. Schwing, Alexan

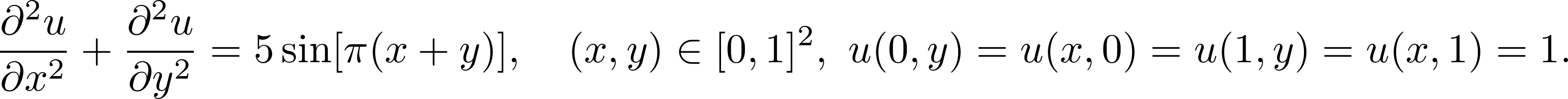

Quickly visualize docker networks with graphviz.

Docker Network Graph Visualize the relationship between Docker networks and containers as a neat graphviz graph. Example Usage usage: docker-net-graph

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm.

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm. It contains the code to reproduce the results presented in the original paper: https://arxiv.org/abs/2112.03670

An official source code for paper Deep Graph Clustering via Dual Correlation Reduction, accepted by AAAI 2022

Dual Correlation Reduction Network An official source code for paper Deep Graph Clustering via Dual Correlation Reduction, accepted by AAAI 2022. Any

Hunt down social media accounts by username across social networks

Hunt down social media accounts by username across social networks Installation | Usage | Docker Notes | Contributing Installation # clone the repo $

Recursive Bayesian Networks

Recursive Bayesian Networks This repository contains the code to reproduce the results from the NeurIPS 2021 paper Lieck R, Rohrmeier M (2021) Recursi

![[AAAI 2022] Sparse Structure Learning via Graph Neural Networks for Inductive Document Classification](https://github.com/qkrdmsghk/TextSSL/raw/master/TextSSL.png)

[AAAI 2022] Sparse Structure Learning via Graph Neural Networks for Inductive Document Classification

Sparse Structure Learning via Graph Neural Networks for inductive document classification Make graph dataset create co-occurrence graph for datasets.

Code for Contrastive-Geometry Networks for Generalized 3D Pose Transfer

CGTransformer Code for our AAAI 2022 paper "Contrastive-Geometry Transformer network for Generalized 3D Pose Transfer" Contrastive-Geometry Transforme

A high-performance distributed deep learning system targeting large-scale and automated distributed training.

HETU Documentation | Examples Hetu is a high-performance distributed deep learning system targeting trillions of parameters DL model training, develop

AI4Good project for detecting waste in the environment

Detect waste AI4Good project for detecting waste in environment. www.detectwaste.ml. Our latest results were published in Waste Management journal in

Generate high quality pictures. GAN. Generative Adversarial Networks

ESRGAN generate high quality pictures. GAN. Generative Adversarial Networks """ Super-resolution of CelebA using Generative Adversarial Networks. The

PyGAD, a Python 3 library for building the genetic algorithm and training machine learning algorithms (Keras & PyTorch).

PyGAD: Genetic Algorithm in Python PyGAD is an open-source easy-to-use Python 3 library for building the genetic algorithm and optimizing machine lear

Paper and Codes for “Embracing Single Stride 3D Object Detector with Sparse Transformer”

SST: Single-stride Sparse Transformer This is the official implementation of paper: Embracing Single Stride 3D Object Detector with Sparse Transformer

This is the official source code of "BiCAT: Bi-Chronological Augmentation of Transformer for Sequential Recommendation".

BiCAT This is our TensorFlow implementation for the paper: "BiCAT: Sequential Recommendation with Bidirectional Chronological Augmentation of Transfor

The code of "Dependency Learning for Legal Judgment Prediction with a Unified Text-to-Text Transformer".

Code data_preprocess.py: preprocess data for Dependent-T5. parameters.py: define parameters of Dependent-T5. train_tools.py: traning and evaluation co

PyG (PyTorch Geometric) - A library built upon PyTorch to easily write and train Graph Neural Networks (GNNs)

PyG (PyTorch Geometric) is a library built upon PyTorch to easily write and train Graph Neural Networks (GNNs) for a wide range of applications related to structured data.

Official code for Next Check-ins Prediction via History and Friendship on Location-Based Social Networks (MDM 2018)

MUC Next Check-ins Prediction via History and Friendship on Location-Based Social Networks (MDM 2018) Performance Details for Accuracy: | Dataset

Official implementation of the article "Unsupervised JPEG Domain Adaptation For Practical Digital Forensics"

Unsupervised JPEG Domain Adaptation for Practical Digital Image Forensics @WIFS2021 (Montpellier, France) Rony Abecidan, Vincent Itier, Jeremie Boulan