2006 Repositories

Python radio-transformer-networks Libraries

Code for the paper "Are Sixteen Heads Really Better than One?"

Are Sixteen Heads Really Better than One? This repository contains code to reproduce the experiments in our paper Are Sixteen Heads Really Better than

This is a repository with the code for the ACL 2019 paper

The Story of Heads This is the official repo for the following papers: (ACL 2019) Analyzing Multi-Head Self-Attention: Specialized Heads Do the Heavy

Code for this paper The Lottery Ticket Hypothesis for Pre-trained BERT Networks.

The Lottery Ticket Hypothesis for Pre-trained BERT Networks Code for this paper The Lottery Ticket Hypothesis for Pre-trained BERT Networks. [NeurIPS

Norm-based Analysis of Transformer

Norm-based Analysis of Transformer Implementations for 2 papers introducing to analyze Transformers using vector norms: Kobayashi+'20 Attention is Not

Understanding the Difficulty of Training Transformers

Admin Understanding the Difficulty of Training Transformers Guided by our analyses, we propose Adaptive Model Initialization (Admin), which successful

Official Pytorch Implementation of Length-Adaptive Transformer (ACL 2021)

Length-Adaptive Transformer This is the official Pytorch implementation of Length-Adaptive Transformer. For detailed information about the method, ple

Implementation of Continuous Sparsification, a method for pruning and ticket search in deep networks

Continuous Sparsification Implementation of Continuous Sparsification (CS), a method based on l_0 regularization to find sparse neural networks, propo

Multi-Task Deep Neural Networks for Natural Language Understanding

New Release We released Adversarial training for both LM pre-training/finetuning and f-divergence. Large-scale Adversarial training for LMs: ALUM code

Codes for our paper The Stem Cell Hypothesis: Dilemma behind Multi-Task Learning with Transformer Encoders published to EMNLP 2021.

The Stem Cell Hypothesis Codes for our paper The Stem Cell Hypothesis: Dilemma behind Multi-Task Learning with Transformer Encoders published to EMNLP

🥇Samsung AI Challenge 2021 1등 솔루션입니다🥇

MoT - Molecular Transformer Large-scale Pretraining for Molecular Property Prediction Samsung AI Challenge for Scientific Discovery This repository is

[KBS] Aspect-based sentiment analysis via affective knowledge enhanced graph convolutional networks

#Sentic GCN Introduction This repository was used in our paper: Aspect-Based Sentiment Analysis via Affective Knowledge Enhanced Graph Convolutional N

DeepFill v1/v2 with Contextual Attention and Gated Convolution, CVPR 2018, and ICCV 2019 Oral

Generative Image Inpainting An open source framework for generative image inpainting task, with the support of Contextual Attention (CVPR 2018) and Ga

A unified 3D Transformer Pipeline for visual synthesis

Overview This is the official repo for the paper: NÜWA: Visual Synthesis Pre-training for Neural visUal World creAtion. NÜWA is a unified multimodal p

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification [pdf] The official repository for Self-Supervised Pre-Training for Transfo

Implementation of Vaswani, Ashish, et al. "Attention is all you need."

Attention Is All You Need Paper Implementation This is my from-scratch implementation of the original transformer architecture from the following pape

Multi-path load balancing is a method used by most of the real-time network to split the packets into different paths rather than transferring it through a single path

Multipath-Load-Balancing Method of managing incoming traffic by distributing and sharing load fairly among multiple routes from source to destination

MXNet implementation for: Drop an Octave: Reducing Spatial Redundancy in Convolutional Neural Networks with Octave Convolution

Octave Convolution MXNet implementation for: Drop an Octave: Reducing Spatial Redundancy in Convolutional Neural Networks with Octave Convolution Imag

[3DV 2021] A Dataset-Dispersion Perspective on Reconstruction Versus Recognition in Single-View 3D Reconstruction Networks

dispersion-score Official implementation of 3DV 2021 Paper A Dataset-dispersion Perspective on Reconstruction versus Recognition in Single-view 3D Rec

CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer

CycleTransGAN-EVC CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer Demo emotion CycleTransGAN CycleTransGAN Cycle

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

Decoding the Protein-ligand Interactions Using Parallel Graph Neural Networks

Decoding the Protein-ligand Interactions Using Parallel Graph Neural Networks Requirements python 0.10+ rdkit 2020.03.3.0 biopython 1.78 openbabel 2.4

Codes and models of NeurIPS2021 paper - DominoSearch: Find layer-wise fine-grained N:M sparse schemes from dense neural networks

DominoSearch This is repository for codes and models of NeurIPS2021 paper - DominoSearch: Find layer-wise fine-grained N:M sparse schemes from dense n

Official implementation of Representer Point Selection via Local Jacobian Expansion for Post-hoc Classifier Explanation of Deep Neural Networks and Ensemble Models at NeurIPS 2021

Representer Point Selection via Local Jacobian Expansion for Classifier Explanation of Deep Neural Networks and Ensemble Models This repository is the

Using Random Effects to Account for High-Cardinality Categorical Features and Repeated Measures in Deep Neural Networks

LMMNN Using Random Effects to Account for High-Cardinality Categorical Features and Repeated Measures in Deep Neural Networks This is the working dire

NeurIPS 2021 paper 'Representation Learning on Spatial Networks' code

Representation Learning on Spatial Networks This repository is the official implementation of Representation Learning on Spatial Networks. Training Ex

Discovering Dynamic Salient Regions with Spatio-Temporal Graph Neural Networks

Discovering Dynamic Salient Regions with Spatio-Temporal Graph Neural Networks This is the official code for DyReg model inroduced in Discovering Dyna

Toolbox to analyze temporal context invariance of deep neural networks

PyTCI A toolbox that estimates the integration window of a sensory response using the "Temporal Context Invariance" paradigm (TCI). The TCI method Int

Representing Long-Range Context for Graph Neural Networks with Global Attention

Graph Augmentation Graph augmentation/self-supervision/etc. Algorithms gcn gcn+virtual node gin gin+virtual node PNA GraphTrans Augmentation methods N

Pytorch implementation of Integrating Tree Path in Transformer for Code Representation

This is an official Pytorch implementation of the approaches proposed in: Han Peng, Ge Li, Wenhan Wang, Yunfei Zhao, Zhi Jin “Integrating Tree Path in

Code for 2021 NeurIPS --- Towards Multi-Grained Explainability for Graph Neural Networks

ReFine: Multi-Grained Explainability for GNNs We are trying hard to update the code, but it may take a while to complete due to our tight schedule rec

The official implementation of EIGNN: Efficient Infinite-Depth Graph Neural Networks (NeurIPS 2021)

EIGNN: Efficient Infinite-Depth Graph Neural Networks The official implementation of EIGNN: Efficient Infinite-Depth Graph Neural Networks (NeurIPS 20

The PyTorch implementation of Directed Graph Contrastive Learning (DiGCL), NeurIPS-2021

Directed Graph Contrastive Learning Paper | Poster | Supplementary The PyTorch implementation of Directed Graph Contrastive Learning (DiGCL). In this

Discerning Decision-Making Process of Deep Neural Networks with Hierarchical Voting Transformation

Configurations Change HOME_PATH in CONFIG.py as the current path Data Prepare CENSINCOME Download data Put census-income.data and census-income.test i

Official implementation of the NeurIPS 2021 paper Online Learning Of Neural Computations From Sparse Temporal Feedback

Online Learning Of Neural Computations From Sparse Temporal Feedback This repository is the official implementation of the NeurIPS 2021 paper Online L

Robust & Reliable Route Recommendation on Road Networks

NeuroMLR: Robust & Reliable Route Recommendation on Road Networks This repository is the official implementation of NeuroMLR: Robust & Reliable Route

Official implementation of the NeurIPS'21 paper 'Conditional Generation Using Polynomial Expansions'.

Conditional Generation Using Polynomial Expansions Official implementation of the conditional image generation experiments as described on the NeurIPS

A simple and useful implementation of LPIPS.

lpips-pytorch Description Developing perceptual distance metrics is a major topic in recent image processing problems. LPIPS[1] is a state-of-the-art

Official implementation of "Robust channel-wise illumination estimation"

This repository provides the official implementation of "Robust channel-wise illumination estimation." accepted in BMVC (2021).

A Python library for Deep Graph Networks

PyDGN Wiki Description This is a Python library to easily experiment with Deep Graph Networks (DGNs). It provides automatic management of data splitti

Implementation of Artificial Neural Network Algorithm

Artificial Neural Network This repository contain implementation of Artificial Neural Network Algorithm in several programming languanges and framewor

Adjusting for Autocorrelated Errors in Neural Networks for Time Series

Adjusting for Autocorrelated Errors in Neural Networks for Time Series This repository is the official implementation of the paper "Adjusting for Auto

Long Expressive Memory (LEM)

Long Expressive Memory for Sequence Modeling This repository contains the implementation to reproduce the numerical experiments of the paper Long Expr

This is a collection of our NAS and Vision Transformer work.

This is a collection of our NAS and Vision Transformer work.

Swin-Transformer is basically a hierarchical Transformer whose representation is computed with shifted windows.

Swin-Transformer Swin-Transformer is basically a hierarchical Transformer whose representation is computed with shifted windows. For more details, ple

Code for A Volumetric Transformer for Accurate 3D Tumor Segmentation

VT-UNet This repo contains the supported pytorch code and configuration files to reproduce 3D medical image segmentaion results of VT-UNet. Environmen

The official implementation for "FQ-ViT: Fully Quantized Vision Transformer without Retraining".

FQ-ViT [arXiv] This repo contains the official implementation of "FQ-ViT: Fully Quantized Vision Transformer without Retraining". Table of Contents In

A benchmark dataset for emulating atmospheric radiative transfer in weather and climate models with machine learning (NeurIPS 2021 Datasets and Benchmarks Track)

ClimART - A Benchmark Dataset for Emulating Atmospheric Radiative Transfer in Weather and Climate Models Official PyTorch Implementation Using deep le

This is a collection of our NAS and Vision Transformer work.

AutoML - Neural Architecture Search This is a collection of our AutoML-NAS work iRPE (NEW): Rethinking and Improving Relative Position Encoding for Vi

Torch implementation of "Enhanced Deep Residual Networks for Single Image Super-Resolution"

NTIRE2017 Super-resolution Challenge: SNU_CVLab Introduction This is our project repository for CVPR 2017 Workshop (2nd NTIRE). We, Team SNU_CVLab, (B

A Pytorch implementation of SMU: SMOOTH ACTIVATION FUNCTION FOR DEEP NETWORKS USING SMOOTHING MAXIMUM TECHNIQUE

SMU_pytorch A Pytorch Implementation of SMU: SMOOTH ACTIVATION FUNCTION FOR DEEP NETWORKS USING SMOOTHING MAXIMUM TECHNIQUE arXiv https://arxiv.org/ab

Bonnet: An Open-Source Training and Deployment Framework for Semantic Segmentation in Robotics.

Bonnet: An Open-Source Training and Deployment Framework for Semantic Segmentation in Robotics. By Andres Milioto @ University of Bonn. (for the new P

Unsupervised Feature Ranking via Attribute Networks.

FRANe Unsupervised Feature Ranking via Attribute Networks (FRANe) converts a dataset into a network (graph) with nodes that correspond to the features

Official implementation of "Intrinsic Dimension, Persistent Homology and Generalization in Neural Networks", NeurIPS 2021.

PHDimGeneralization Official implementation of "Intrinsic Dimension, Persistent Homology and Generalization in Neural Networks", NeurIPS 2021. Overvie

Subnet Replacement Attack: Towards Practical Deployment-Stage Backdoor Attack on Deep Neural Networks

Subnet Replacement Attack: Towards Practical Deployment-Stage Backdoor Attack on Deep Neural Networks Official implementation of paper Towards Practic

A framework that constructs deep neural networks, autoencoders, logistic regressors, and linear networks

A framework that constructs deep neural networks, autoencoders, logistic regressors, and linear networks without the use of any outside machine learning libraries - all from scratch.

PointCNN: Convolution On X-Transformed Points (NeurIPS 2018)

PointCNN: Convolution On X-Transformed Points Created by Yangyan Li, Rui Bu, Mingchao Sun, Wei Wu, Xinhan Di, and Baoquan Chen. Introduction PointCNN

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

Image Super-Resolution Using Very Deep Residual Channel Attention Networks

PyTorch version repo for CSRNet: Dilated Convolutional Neural Networks for Understanding the Highly Congested Scenes

Study-CSRNet-pytorch This is the PyTorch version repo for CSRNet: Dilated Convolutional Neural Networks for Understanding the Highly Congested Scenes

Haystack is an open source NLP framework that leverages Transformer models.

Haystack is an end-to-end framework that enables you to build powerful and production-ready pipelines for different search use cases. Whether you want

Pytorch and Keras Implementations of Hyperspectral Image Classification -- Traditional to Deep Models: A Survey for Future Prospects.

The repository contains the implementations for Hyperspectral Image Classification -- Traditional to Deep Models: A Survey for Future Prospects. Model

A simple and extensible library to create Bayesian Neural Network layers on PyTorch.

Blitz - Bayesian Layers in Torch Zoo BLiTZ is a simple and extensible library to create Bayesian Neural Network Layers (based on whats proposed in Wei

KakaoBrain KoGPT (Korean Generative Pre-trained Transformer)

KoGPT KoGPT (Korean Generative Pre-trained Transformer) https://github.com/kakaobrain/kogpt https://huggingface.co/kakaobrain/kogpt Model Descriptions

SwinIR: Image Restoration Using Swin Transformer

SwinIR: Image Restoration Using Swin Transformer This repository is the official PyTorch implementation of SwinIR: Image Restoration Using Shifted Win

Listen to the radio station from your favorite broadcast

Latest news Listen to the radio station from your favorite broadcast MyCroft Radio Skill for testing and copy at docker skill About Play regional radi

Learning Convolutional Neural Networks with Interactive Visualization.

CNN Explainer An interactive visualization system designed to help non-experts learn about Convolutional Neural Networks (CNNs) For more information,

Pytorch library for end-to-end transformer models training and serving

Pytorch library for end-to-end transformer models training and serving

labelpix is a graphical image labeling interface for drawing bounding boxes

Welcome to labelpix 👋 labelpix is a graphical image labeling interface for drawing bounding boxes. 🏠 Homepage Install pip install -r requirements.tx

MobileNetV1-V2,MobileNeXt,GhostNet,AdderNet,ShuffleNetV1-V2,Mobile+ViT etc.

MobileNetV1-V2,MobileNeXt,GhostNet,AdderNet,ShuffleNetV1-V2,Mobile+ViT etc. ⭐⭐⭐⭐⭐

Official Code for Cross-Modality Fusion Transformer for Multispectral Object Detection.

Multispectral-Object-Detection Intro Official Code for Cross-Modality Fusion Transformer for Multispectral Object Detection. Multispectral Object Dete

Implementation of the Paper: "Parameterized Hypercomplex Graph Neural Networks for Graph Classification" by Tuan Le, Marco Bertolini, Frank Noé and Djork-Arné Clevert

Parameterized Hypercomplex Graph Neural Networks (PHC-GNNs) PHC-GNNs (Le et al., 2021): https://arxiv.org/abs/2103.16584 PHM Linear Layer Illustration

Prototypical Cross-Attention Networks for Multiple Object Tracking and Segmentation, NeurIPS 2021 Spotlight

PCAN for Multiple Object Tracking and Segmentation This is the offical implementation of paper PCAN for MOTS. We also present a trailer that consists

mPose3D, a mmWave-based 3D human pose estimation model.

mPose3D, a mmWave-based 3D human pose estimation model.

Code of TVT: Transferable Vision Transformer for Unsupervised Domain Adaptation

TVT Code of TVT: Transferable Vision Transformer for Unsupervised Domain Adaptation Datasets: Digit: MNIST, SVHN, USPS Object: Office, Office-Home, Vi

Softlearning is a reinforcement learning framework for training maximum entropy policies in continuous domains. Includes the official implementation of the Soft Actor-Critic algorithm.

Softlearning Softlearning is a deep reinforcement learning toolbox for training maximum entropy policies in continuous domains. The implementation is

Keyword spotting on Arm Cortex-M Microcontrollers

Keyword spotting for Microcontrollers This repository consists of the tensorflow models and training scripts used in the paper: Hello Edge: Keyword sp

Code for paper " AdderNet: Do We Really Need Multiplications in Deep Learning?"

AdderNet: Do We Really Need Multiplications in Deep Learning? This code is a demo of CVPR 2020 paper AdderNet: Do We Really Need Multiplications in De

Awesome Deep Graph Clustering is a collection of SOTA, novel deep graph clustering methods

ADGC: Awesome Deep Graph Clustering ADGC is a collection of state-of-the-art (SOTA), novel deep graph clustering methods (papers, codes and datasets).

Official implementation of SIGIR'2021 paper: "Sequential Recommendation with Graph Neural Networks".

SURGE: Sequential Recommendation with Graph Neural Networks This is our TensorFlow implementation for the paper: Sequential Recommendation with Graph

Augmenting Physical Models with Deep Networks for Complex Dynamics Forecasting

Official code of APHYNITY Augmenting Physical Models with Deep Networks for Complex Dynamics Forecasting (ICLR 2021, Oral) Yuan Yin*, Vincent Le Guen*

This repository contains the official implementation code of the paper Transformer-based Feature Reconstruction Network for Robust Multimodal Sentiment Analysis

This repository contains the official implementation code of the paper Transformer-based Feature Reconstruction Network for Robust Multimodal Sentiment Analysis, accepted at ACMMM 2021.

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification

Self-Supervised Pre-Training for Transformer-Based Person Re-Identification [pdf] The official repository for Self-Supervised Pre-Training for Transfo

Hidden-Fold Networks (HFN): Random Recurrent Residuals Using Sparse Supermasks

Hidden-Fold Networks (HFN): Random Recurrent Residuals Using Sparse Supermasks by Ángel López García-Arias, Masanori Hashimoto, Masato Motomura, and J

Official Codes for Graph Modularity:Towards Understanding the Cross-Layer Transition of Feature Representations in Deep Neural Networks.

Dynamic-Graphs-Construction Official Codes for Graph Modularity:Towards Understanding the Cross-Layer Transition of Feature Representations in Deep Ne

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing Paper Introduction Multi-task indoor scene understanding is widely considered a

This is the official PyTorch implementation for "Sharpness-aware Quantization for Deep Neural Networks".

Sharpness-aware Quantization for Deep Neural Networks This is the official repository for our paper: Sharpness-aware Quantization for Deep Neural Netw

A unified 3D Transformer Pipeline for visual synthesis

Overview This is the official repo for the paper: "NÜWA: Visual Synthesis Pre-training for Neural visUal World creAtion". NÜWA is a unified multimodal

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing

Cerberus Transformer: Joint Semantic, Affordance and Attribute Parsing Paper Introduction Multi-task indoor scene understanding is widely considered a

OpenMMLab Text Detection, Recognition and Understanding Toolbox

Introduction English | 简体中文 MMOCR is an open-source toolbox based on PyTorch and mmdetection for text detection, text recognition, and the correspondi

OpenMMLab Image Classification Toolbox and Benchmark

Introduction English | 简体中文 MMClassification is an open source image classification toolbox based on PyTorch. It is a part of the OpenMMLab project. D

YOLOv4 / Scaled-YOLOv4 / YOLO - Neural Networks for Object Detection (Windows and Linux version of Darknet )

Yolo v4, v3 and v2 for Windows and Linux (neural networks for object detection) Paper YOLO v4: https://arxiv.org/abs/2004.10934 Paper Scaled YOLO v4:

Two-Stream Adaptive Graph Convolutional Networks for Skeleton-Based Action Recognition in CVPR19

2s-AGCN Two-Stream Adaptive Graph Convolutional Networks for Skeleton-Based Action Recognition in CVPR19 Note PyTorch version should be 0.3! For PyTor

Question and answer retrieval in Turkish with BERT

trfaq Google supported this work by providing Google Cloud credit. Thank you Google for supporting the open source! 🎉 What is this? At this repo, I'm

MHFormer: Multi-Hypothesis Transformer for 3D Human Pose Estimation

MHFormer: Multi-Hypothesis Transformer for 3D Human Pose Estimation This repo is the official implementation of "MHFormer: Multi-Hypothesis Transforme

Delve is a Python package for analyzing the inference dynamics of your PyTorch model.

Delve is a Python package for analyzing the inference dynamics of your PyTorch model.

A library that integrates huggingface transformers with the world of fastai, giving fastai devs everything they need to train, evaluate, and deploy transformer specific models.

blurr A library that integrates huggingface transformers with version 2 of the fastai framework Install You can now pip install blurr via pip install

The Most Efficient Temporal Difference Learning Framework for 2048

moporgic/TDL2048+ TDL2048+ is a highly optimized temporal difference (TD) learning framework for 2048. Features Many common methods related to 2048 ar

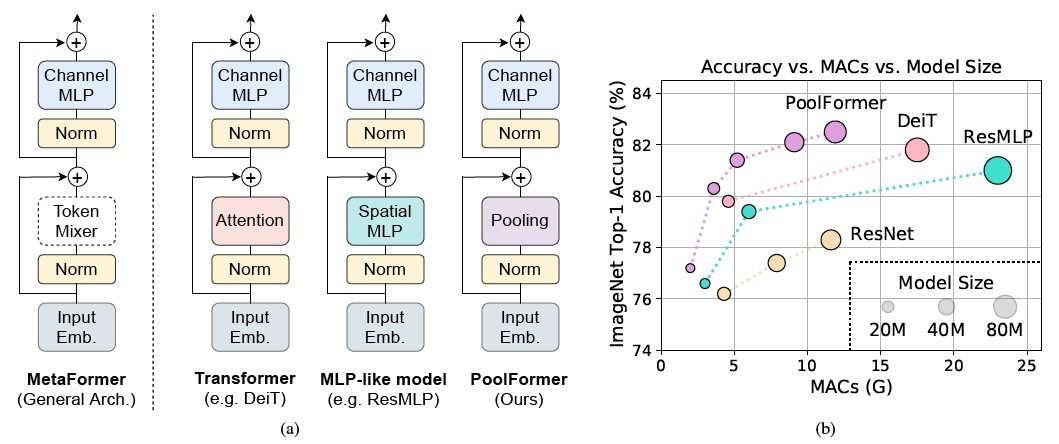

PoolFormer: MetaFormer is Actually What You Need for Vision

PoolFormer: MetaFormer is Actually What You Need for Vision (arXiv) This is a PyTorch implementation of PoolFormer proposed by our paper "MetaFormer i

Graph Convolutional Neural Networks with Data-driven Graph Filter (GCNN-DDGF)

Graph Convolutional Gated Recurrent Neural Network (GCGRNN) Improved from Graph Convolutional Neural Networks with Data-driven Graph Filter (GCNN-DDGF

Skoot is a lightweight python library of machine learning transformer classes that interact with scikit-learn and pandas.

Skoot is a lightweight python library of machine learning transformer classes that interact with scikit-learn and pandas. Its objective is to ex

PyTorch code for Composing Partial Differential Equations with Physics-Aware Neural Networks

FInite volume Neural Network (FINN) This repository contains the PyTorch code for models, training, and testing, and Python code for data generation t