1798 Repositories

Python small-pre-trained-model Libraries

Neural network chess engine trained on Gary Kasparov's games.

Neural Chess It's not the best chess engine, but it is a chess engine. Proof of concept neural network chess engine (feed-forward multi-layer perceptr

An executor that performs standard pre-processing and normalization on images.

An executor that performs standard pre-processing and normalization on images.

SEOVER: Sentence-level Emotion Orientation Vector based Conversation Emotion Recognition Model

SEOVER-Master This code is the implementation of paper: SEOVER: Sentence-level Emotion Orientation Vector based Conversation Emotion Recognition Model

Code release for SLIP Self-supervision meets Language-Image Pre-training

SLIP: Self-supervision meets Language-Image Pre-training What you can find in this repo: Pre-trained models (with ViT-Small, Base, Large) and code to

AAAI 2022 paper - Unifying Model Explainability and Robustness for Joint Text Classification and Rationale Extraction

AT-BMC Unifying Model Explainability and Robustness for Joint Text Classification and Rationale Extraction (AAAI 2022) Paper Prerequisites Install pac

A set of tests for evaluating large-scale algorithms for Wasserstein-2 transport maps computation.

Continuous Wasserstein-2 Benchmark This is the official Python implementation of the NeurIPS 2021 paper Do Neural Optimal Transport Solvers Work? A Co

⛓ marc is a small, but flexible Markov chain generator

About marc (markov chain) is a small, but flexible Markov chain generator. Usage marc is easy to use. To build a MarkovChain pass the object a sequenc

Historical battle simulation package for Python

Jomini v0.1.4 Jomini creates military simulations by using mathematical combat models. Designed to be helpful for game developers, students, history e

Model Zoo for MindSpore

Welcome to the Model Zoo for MindSpore In order to facilitate developers to enjoy the benefits of MindSpore framework, we will continue to add typical

MMRazor: a model compression toolkit for model slimming and AutoML

Documentation: https://mmrazor.readthedocs.io/ English | 简体中文 Introduction MMRazor is a model compression toolkit for model slimming and AutoML, which

Cisco IOS-XE Operations Program. Shows operational data using restconf and yang

XE-Ops View operational and config data from devices running Cisco IOS-XE software. NoteS The build folder is the latest build. All other files are fo

Some out-of-the-box hooks for pre-commit

pre-commit-hooks Some out-of-the-box hooks for pre-commit. See also: https://github.com/pre-commit/pre-commit Using pre-commit-hooks with pre-commit A

A few stylization coreML models that I've trained with CreateML

CoreML-StyleTransfer A few stylization coreML models that I've trained with CreateML You can open and use the .mlmodel files in the "models" folder in

Small binja plugin to import header file to types

binja-import-header (v1.0.0) Author: matteyeux Import header file to Binary Ninja types view Description: Binary Ninja plugin to import types from C h

A small Python app to create Notion pages from Jira issues

Jira to Notion This little program will capture a Jira issue and create a corresponding Notion subpage. Mac users can fetch the current issue from the

An embedded application for toy-car controlling based on Raspberry Pi 3 Model B and AlphaBot2-Pi.

An embedded application for toy-car controlling based on Raspberry Pi 3 Model B and AlphaBot2-Pi. This is the source codes of my programming assignmen

SIR model parameter estimation using a novel algorithm for differentiated uniformization.

TenSIR Parameter estimation on epidemic data under the SIR model using a novel algorithm for differentiated uniformization of Markov transition rate m

Simple multilingual lemmatizer for Python, especially useful for speed and efficiency

Simplemma: a simple multilingual lemmatizer for Python Purpose Lemmatization is the process of grouping together the inflected forms of a word so they

Small python wrapper around the valico rust library to provide fast JSON schema validation.

Small python wrapper around the valico rust library to provide fast JSON schema validation.

Ninja is a small build system with a focus on speed.

Ninja Ninja is a small build system with a focus on speed. https://ninja-build.org/ See the manual or doc/manual.asciidoc included in the distribution

Python KNN model: Predicting a probability of getting a work visa. Tableau: Non-immigrant visas over the years.

The value of international students to the United States. Probability of getting a non-immigrant visa. Project timeline: Jan 2021 - April 2021 Project

Credo AI Lens is a comprehensive assessment framework for AI systems. Lens standardizes model and data assessment, and acts as a central gateway to assessments created in the open source community.

Lens by Credo AI - Responsible AI Assessment Framework Lens is a comprehensive assessment framework for AI systems. Lens standardizes model and data a

Ansible Automation Example: JSNAPY PRE/POST Upgrade Validation

Ansible Automation Example: JSNAPY PRE/POST Upgrade Validation Overview This example will show how to validate the status of our firewall before and a

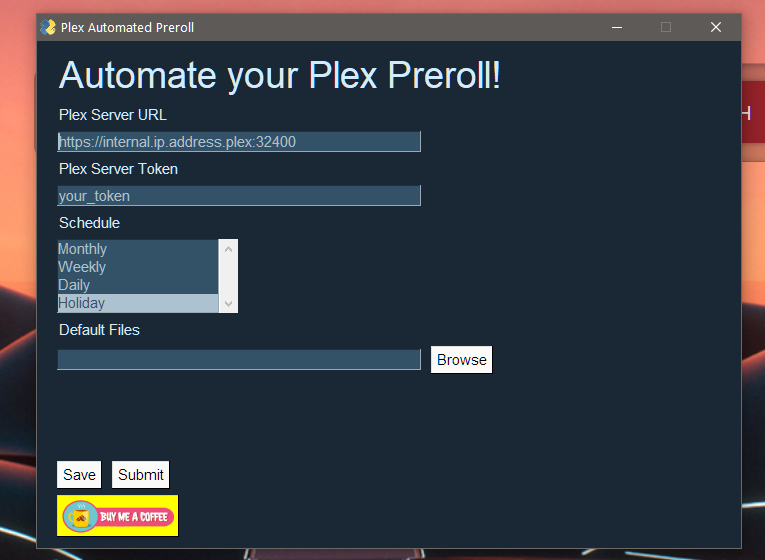

This is the new and improved Plex Automatic Pre-roll script with a GUI

Plex-Automatic-Pre-roll-GUI This is the new and improved Plex Automatic Pre-roll script with a GUI! It should be stable but if you find a bug please l

Tensorflow Implementation for "Pre-trained Deep Convolution Neural Network Model With Attention for Speech Emotion Recognition"

Tensorflow Implementation for "Pre-trained Deep Convolution Neural Network Model With Attention for Speech Emotion Recognition" Pre-trained Deep Convo

⛵️The official PyTorch implementation for "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing" (EMNLP 2020).

BERT-of-Theseus Code for paper "BERT-of-Theseus: Compressing BERT by Progressive Module Replacing". BERT-of-Theseus is a new compressed BERT by progre

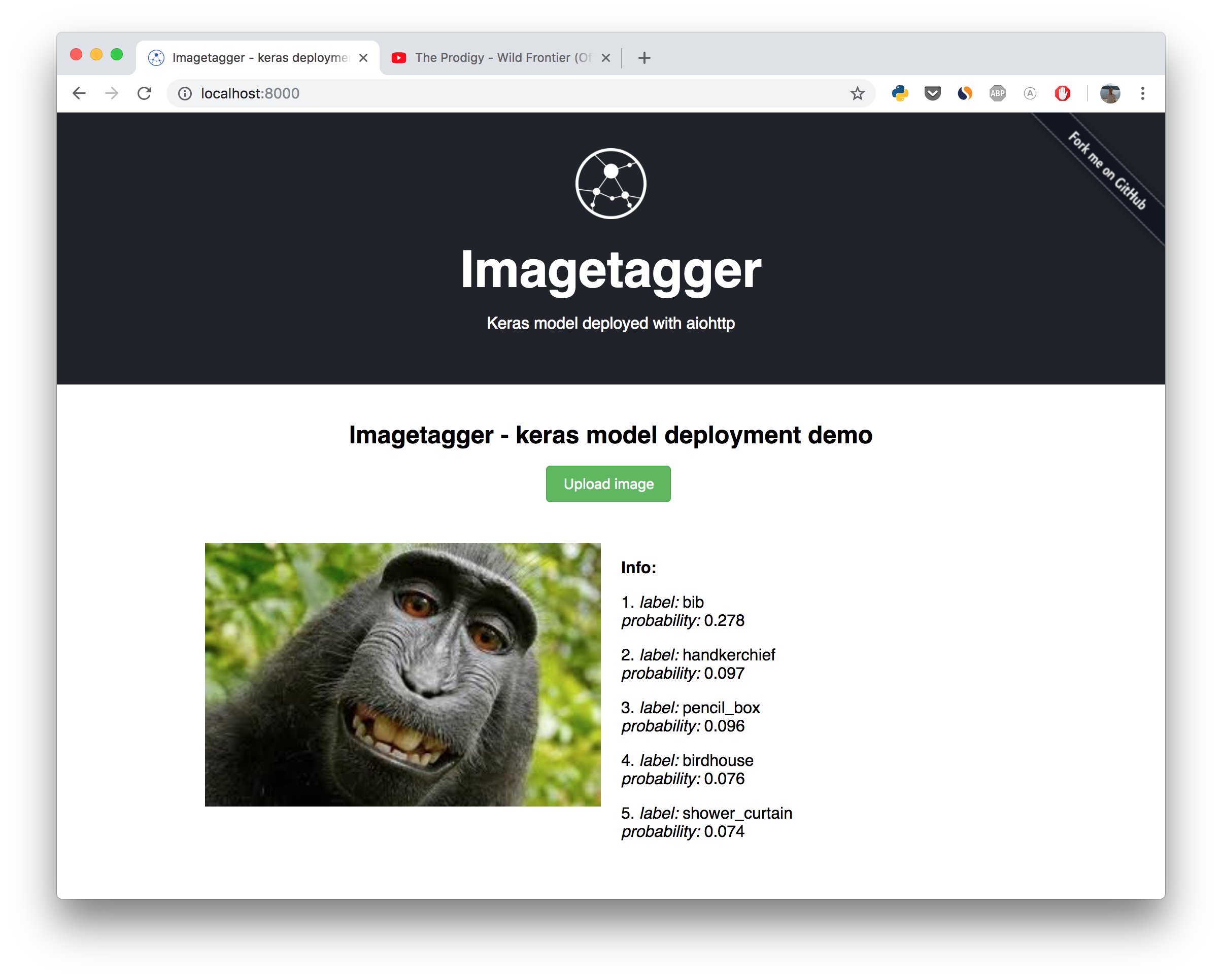

A set of demo of deploying a Machine Learning Model in production using various methods

Machine Learning Model in Production This git is for those who have concern about serving your machine learning model to production. Overview The tuto

Model Quantization Benchmark

Introduction MQBench is an open-source model quantization toolkit based on PyTorch fx. The envision of MQBench is to provide: SOTA Algorithms. With MQ

Official codebase for running the small, filtered-data GLIDE model from GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models.

GLIDE This is the official codebase for running the small, filtered-data GLIDE model from GLIDE: Towards Photorealistic Image Generation and Editing w

'Personal Finance' is a project where people can manage and track their expenses

Personal Finance by Abhiram Rishi Pratitpati 'Personal Finance' is a project where people can manage and track their expenses. It is hard to keep trac

An easy FASTA object handler, reader, writer and translator for small to medium size projects without dependencies.

miniFASTA An easy FASTA object handler, reader, writer and translator for small to medium size projects without dependencies. Installation Using pip /

Resources related to our paper "CLIN-X: pre-trained language models and a study on cross-task transfer for concept extraction in the clinical domain"

CLIN-X (CLIN-X-ES) & (CLIN-X-EN) This repository holds the companion code for the system reported in the paper: "CLIN-X: pre-trained language models a

The source code of "Language Models are Few-shot Multilingual Learners" (MRL @ EMNLP 2021)

Language Models are Few-shot Multilingual Learners Paper This is the source code of the paper [Arxiv] [ACL Anthology]: This code has been written usin

Auxiliary Raw Net (ARawNet) is a ASVSpoof detection model taking both raw waveform and handcrafted features as inputs, to balance the trade-off between performance and model complexity.

Overview This repository is an implementation of the Auxiliary Raw Net (ARawNet), which is ASVSpoof detection system taking both raw waveform and hand

Example how to deploy deep learning model with aiohttp.

aiohttp-demos Demos for aiohttp project. Contents Imagetagger Deep Learning Image Classifier URL shortener Toxic Comments Classifier Moderator Slack B

Automatic labeling, conversion of different data set formats, sample size statistics, model cascade

Simple Gadget Collection for Object Detection Tasks Automatic image annotation Conversion between different annotation formats Obtain statistical info

A warping based image translation model focusing on upper body synthesis.

Pose2Img Upper body image synthesis from skeleton(Keypoints). Sub module in the ICCV-2021 paper "Speech Drives Templates: Co-Speech Gesture Synthesis

LSTM model trained on a small dataset of 3000 names written in PyTorch

LSTM model trained on a small dataset of 3000 names. Model generates names from model by selecting one out of top 3 letters suggested by model at a time until an EOS (End Of Sentence) character is not encountered.

An end-to-end image translation model with weight-map for color constancy

CCUnet An end-to-end image translation model with weight-map for color constancy 1. Download the dataset (take Colorchecker_recommended dataset as an

DiffSinger: Singing Voice Synthesis via Shallow Diffusion Mechanism (SVS & TTS); AAAI 2022; Official code

DiffSinger: Singing Voice Synthesis via Shallow Diffusion Mechanism This repository is the official PyTorch implementation of our AAAI-2022 paper, in

BARTpho: Pre-trained Sequence-to-Sequence Models for Vietnamese

Table of contents Introduction Using BARTpho with fairseq Using BARTpho with transformers Notes BARTpho: Pre-trained Sequence-to-Sequence Models for V

CMUA-Watermark: A Cross-Model Universal Adversarial Watermark for Combating Deepfakes (AAAI2022)

CMUA-Watermark The official code for CMUA-Watermark: A Cross-Model Universal Adversarial Watermark for Combating Deepfakes (AAAI2022) arxiv. It is bas

A PyTorch-based model pruning toolkit for pre-trained language models

English | 中文说明 TextPruner是一个为预训练语言模型设计的模型裁剪工具包,通过轻量、快速的裁剪方法对模型进行结构化剪枝,从而实现压缩模型体积、提升模型速度。 其他相关资源: 知识蒸馏工具TextBrewer:https://github.com/airaria/TextBrewe

DiffSinger: Singing Voice Synthesis via Shallow Diffusion Mechanism (SVS & TTS); AAAI 2022

DiffSinger: Singing Voice Synthesis via Shallow Diffusion Mechanism This repository is the official PyTorch implementation of our AAAI-2022 paper, in

![[CVPR 2020] Local Class-Specific and Global Image-Level Generative Adversarial Networks for Semantic-Guided Scene Generation](https://github.com/Ha0Tang/LGGAN/raw/master/imgs/framework.jpg)

[CVPR 2020] Local Class-Specific and Global Image-Level Generative Adversarial Networks for Semantic-Guided Scene Generation

Contents Local and Global GAN Cross-View Image Translation Semantic Image Synthesis Acknowledgments Related Projects Citation Contributions Collaborat

GAN-based 3D human pose estimation model for 3DV'17 paper

Tensorflow implementation for 3DV 2017 conference paper "Adversarially Parameterized Optimization for 3D Human Pose Estimation". @inproceedings{jack20

This is a small project written to help build documentation for projects in less time.

Documentation-Builder This is a small project written to help build documentation for projects in less time. About This project builds documentation f

Tensorflow Implementation of ECCV'18 paper: Multimodal Human Motion Synthesis

MT-VAE for Multimodal Human Motion Synthesis This is the code for ECCV 2018 paper MT-VAE: Learning Motion Transformations to Generate Multimodal Human

Complete system for facial identity system. Include one-shot model, database operation, features visualization, monitoring

Complete system for facial identity system. Include one-shot model, database operation, features visualization, monitoring

Spatio-Temporal Entropy Model (STEM) for end-to-end leaned video compression.

Spatio-Temporal Entropy Model A Pytorch Reproduction of Spatio-Temporal Entropy Model (STEM) for end-to-end leaned video compression. More details can

scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms.

Sklearn-genetic-opt scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms. This is meant to be an alternativ

Conservative and Adaptive Penalty for Model-Based Safe Reinforcement Learning

Conservative and Adaptive Penalty for Model-Based Safe Reinforcement Learning This is the official repository for Conservative and Adaptive Penalty fo

Codes for building and training the neural network model described in Domain-informed neural networks for interaction localization within astroparticle experiments.

Domain-informed Neural Networks Codes for building and training the neural network model described in Domain-informed neural networks for interaction

The InterScript dataset contains interactive user feedback on scripts generated by a T5-XXL model.

Interscript The Interscript dataset contains interactive user feedback on a T5-11B model generated scripts. Dataset data.json contains the data in an

On the Complementarity between Pre-Training and Back-Translation for Neural Machine Translation (Findings of EMNLP 2021))

PTvsBT On the Complementarity between Pre-Training and Back-Translation for Neural Machine Translation (Findings of EMNLP 2021) Citation Please cite a

DECA: Detailed Expression Capture and Animation (SIGGRAPH 2021)

DECA: Detailed Expression Capture and Animation (SIGGRAPH2021) input image, aligned reconstruction, animation with various poses & expressions This is

This repository contains the source code for the paper First Order Motion Model for Image Animation

!!! Check out our new paper and framework improved for articulated objects First Order Motion Model for Image Animation This repository contains the s

A small python tool to get relevant values from SRI invoices

SriInvoiceProcessing A small python tool to get relevant values from SRI invoices Some useful info to run the tool Login into your SRI account and ret

A small game I made back in think 2011

Navi Network A small game I made back in think 2011. An online game inspired by the self-hosted nature of Minecraft, made with pygame, based on the Me

A small distributed download manager to help bypass device-specific bandwidth limitations.

Distributed Download Manager A small distributed download manager to help bypass device-specific bandwidth limitations. Architecture The download mana

A high-level yet extensible library for fast language model tuning via automatic prompt search

ruPrompts ruPrompts is a high-level yet extensible library for fast language model tuning via automatic prompt search, featuring integration with Hugg

Industrial Image Anomaly Localization Based on Gaussian Clustering of Pre-trained Feature

Industrial Image Anomaly Localization Based on Gaussian Clustering of Pre-trained Feature Q. Wan, L. Gao, X. Li and L. Wen, "Industrial Image Anomaly

Working demo of the Multi-class and Anomaly classification model using the CLIP feature space

👁️ Hindsight AI: Crime Classification With Clip About For Educational Purposes Only This is a recursive neural net trained to classify specific crime

Chinese NER with albert/electra or other bert descendable model (keras)

Chinese NLP (albert/electra with Keras) Named Entity Recognization Project Structure ./ ├── NER │ ├── __init__.py │ ├── log

Some pre-commit hooks for OpenMMLab projects

pre-commit-hooks Some pre-commit hooks for OpenMMLab projects. Using pre-commit-hooks with pre-commit Add this to your .pre-commit-config.yaml - rep

Interactive chemical viewer for 2D structures of small molecules

👀 mols2grid mols2grid is an interactive chemical viewer for 2D structures of small molecules, based on RDKit. ➡️ Try the demo notebook on Google Cola

A small POC plugin for launching dumpulator emulation within IDA, passing it addresses from your IDA view using the context menu.

Dumpulator-IDA Currently proof-of-concept This project is a small POC plugin for launching dumpulator emulation within IDA, passing it addresses from

A model that attempts to learn and benefit from data collected on card counting.

A model that attempts to learn and benefit from data collected on card counting. A decision tree like model is built to win more often than loose and increase the bet of the player appropriately to come out winning as much money as possible.

Dust model dichotomous performance analysis

Dust-model-dichotomous-performance-analysis Using a collated dataset of 90,000 dust point source observations from 9 drylands studies from around the

Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition

SEW (Squeezed and Efficient Wav2vec) The repo contains the code of the paper "Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speec

Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE)

[中文|English] Rotary Transformer Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE). The RoPE is a relative

Model parallel transformers in JAX and Haiku

Table of contents Mesh Transformer JAX Updates Pretrained Models GPT-J-6B Links Acknowledgments License Model Details Zero-Shot Evaluations Architectu

Unofficial implementation of Google's FNet: Mixing Tokens with Fourier Transforms

FNet: Mixing Tokens with Fourier Transforms Pytorch implementation of Fnet : Mixing Tokens with Fourier Transforms. Citation: @misc{leethorp2021fnet,

ByT5: Towards a token-free future with pre-trained byte-to-byte models

ByT5: Towards a token-free future with pre-trained byte-to-byte models ByT5 is a tokenizer-free extension of the mT5 model. Instead of using a subword

Code for CodeT5: a new code-aware pre-trained encoder-decoder model.

CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation This is the official PyTorch implementation

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Mesh TensorFlow: Model Parallelism Made Easier

Mesh TensorFlow - Model Parallelism Made Easier Introduction Mesh TensorFlow (mtf) is a language for distributed deep learning, capable of specifying

🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 | 한국어 State-of-the-art Machine Learning for JAX, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained models

Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models

PEGASUS library Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models, or PEGASUS, uses self-supervised

Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities

Hiring We are hiring at all levels (including FTE researchers and interns)! If you are interested in working with us on NLP and large-scale pre-traine

Unsupervised Language Model Pre-training for French

FlauBERT and FLUE FlauBERT is a French BERT trained on a very large and heterogeneous French corpus. Models of different sizes are trained using the n

Code for EMNLP20 paper: "ProphetNet: Predicting Future N-gram for Sequence-to-Sequence Pre-training"

ProphetNet-X This repo provides the code for reproducing the experiments in ProphetNet. In the paper, we propose a new pre-trained language model call

DeBERTa: Decoding-enhanced BERT with Disentangled Attention

DeBERTa: Decoding-enhanced BERT with Disentangled Attention This repository is the official implementation of DeBERTa: Decoding-enhanced BERT with Dis

CodeBERT: A Pre-Trained Model for Programming and Natural Languages.

CodeBERT This repo provides the code for reproducing the experiments in CodeBERT: A Pre-Trained Model for Programming and Natural Languages. CodeBERT

ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators

ELECTRA Introduction ELECTRA is a method for self-supervised language representation learning. It can be used to pre-train transformer networks using

Conditional Transformer Language Model for Controllable Generation

CTRL - A Conditional Transformer Language Model for Controllable Generation Authors: Nitish Shirish Keskar, Bryan McCann, Lav Varshney, Caiming Xiong,

An implementation of model parallel GPT-2 and GPT-3-style models using the mesh-tensorflow library.

GPT Neo 🎉 1T or bust my dudes 🎉 An implementation of model & data parallel GPT3-like models using the mesh-tensorflow library. If you're just here t

Awesome Treasure of Transformers Models Collection

💁 Awesome Treasure of Transformers Models for Natural Language processing contains papers, videos, blogs, official repo along with colab Notebooks. 🛫☑️

Memory-Augmented Model Predictive Control

Memory-Augmented Model Predictive Control This repository hosts the source code for the journal article "Composing MPC with LQR and Neural Networks fo

A program that uses an API and a AI model to get info of sotcks

Stock-Market-AI-Analysis I dont mind anyone using this code but please give me credit A program that uses an API and a AI model to get info of stocks

SeqFormer: a Frustratingly Simple Model for Video Instance Segmentation

SeqFormer: a Frustratingly Simple Model for Video Instance Segmentation SeqFormer SeqFormer: a Frustratingly Simple Model for Video Instance Segmentat

A Python library for electronic structure pre/post-processing

PyProcar PyProcar is a robust, open-source Python library used for pre- and post-processing of the electronic structure data coming from DFT calculati

A Python description of the Kinematic Bicycle Model with an animated example.

Kinematic Bicycle Model Abstract A python library for the Kinematic Bicycle model. The Kinematic Bicycle is a compromise between the non-linear and li

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm.

This repository is the official implementation of the Hybrid Self-Attention NEAT algorithm. It contains the code to reproduce the results presented in the original paper: https://arxiv.org/abs/2112.03670

Telegram chatbot created with deep learning model (LSTM) and telebot library.

Telegram chatbot Telegram chatbot created with deep learning model (LSTM) and telebot library. Description This program will allow you to create very

A toolkit for document-level event extraction, containing some SOTA model implementations

❤️ A Toolkit for Document-level Event Extraction with & without Triggers Hi, there 👋 . Thanks for your stay in this repo. This project aims at buildi

A 1.3B text-to-image generation model trained on 14 million image-text pairs

minDALL-E on Conceptual Captions minDALL-E, named after minGPT, is a 1.3B text-to-image generation model trained on 14 million image-text pairs for no

Using Bert as the backbone model for lime, designed for NLP task explanation (sentence pair text classification task)

Lime Comparing deep contextualized model for sentences highlighting task. In addition, take the classic explanation model "LIME" with bert-base model