317 Repositories

Python pre-processor Libraries

VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training

Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training [Arxiv] VideoMAE: Masked Autoencoders are Data-Efficient Learne

Code and pre-trained models for MultiMAE: Multi-modal Multi-task Masked Autoencoders

MultiMAE: Multi-modal Multi-task Masked Autoencoders Roman Bachmann*, David Mizrahi*, Andrei Atanov, Amir Zamir Website | arXiv | BibTeX Official PyTo

![[CVPR 2022 Oral] Versatile Multi-Modal Pre-Training for Human-Centric Perception](https://github.com/hongfz16/HCMoCo/raw/main/assets/teaser.png)

[CVPR 2022 Oral] Versatile Multi-Modal Pre-Training for Human-Centric Perception

Versatile Multi-Modal Pre-Training for Human-Centric Perception Fangzhou Hong1 Liang Pan1 Zhongang Cai1,2,3 Ziwei Liu1* 1S-Lab, Nanyang Technologic

Pre-crisis Risk Management for Personal Finance

Антикризисный риск-менеджмент личных финансов Риск-менеджмент личных финансов условиях санкций и/или финансового кризиса: делаем сегодня все, чтобы за

A Python package develop for transportation spatio-temporal big data processing, analysis and visualization.

English 中文版 TransBigData Introduction TransBigData is a Python package developed for transportation spatio-temporal big data processing, analysis and

BigDetection: A Large-scale Benchmark for Improved Object Detector Pre-training

BigDetection: A Large-scale Benchmark for Improved Object Detector Pre-training By Likun Cai, Zhi Zhang, Yi Zhu, Li Zhang, Mu Li, Xiangyang Xue. This

StorSeismic: An approach to pre-train a neural network to store seismic data features

StorSeismic: An approach to pre-train a neural network to store seismic data features This repository contains codes and resources to reproduce experi

Unified API to facilitate usage of pre-trained "perceptor" models, a la CLIP

mmc installation git clone https://github.com/dmarx/Multi-Modal-Comparators cd 'Multi-Modal-Comparators' pip install poetry poetry build pip install d

code for TCL: Vision-Language Pre-Training with Triple Contrastive Learning, CVPR 2022

Vision-Language Pre-Training with Triple Contrastive Learning, CVPR 2022 News (03/16/2022) upload retrieval checkpoints finetuned on COCO and Flickr T

Guide to using pre-trained large language models of source code

Large Models of Source Code I occasionally train and publicly release large neural language models on programs, including PolyCoder. Here, I describe

CLIP (Contrastive Language–Image Pre-training) for Italian

Italian CLIP CLIP (Radford et al., 2021) is a multimodal model that can learn to represent images and text jointly in the same space. In this project,

Official repository of OFA. Paper: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

Paper | Blog OFA is a unified multimodal pretrained model that unifies modalities (i.e., cross-modality, vision, language) and tasks (e.g., image gene

In this tutorial, you will perform inference across 10 well-known pre-trained object detectors and fine-tune on a custom dataset. Design and train your own object detector.

Object Detection Object detection is a computer vision task for locating instances of predefined objects in images or videos. In this tutorial, you wi

Code for CVPR'2022 paper ✨ "Predict, Prevent, and Evaluate: Disentangled Text-Driven Image Manipulation Empowered by Pre-Trained Vision-Language Model"

PPE ✨ Repository for our CVPR'2022 paper: Predict, Prevent, and Evaluate: Disentangled Text-Driven Image Manipulation Empowered by Pre-Trained Vision-

Code for our paper "Graph Pre-training for AMR Parsing and Generation" in ACL2022

AMRBART An implementation for ACL2022 paper "Graph Pre-training for AMR Parsing and Generation". You may find our paper here (Arxiv). Requirements pyt

Beyond Masking: Demystifying Token-Based Pre-Training for Vision Transformers

beyond masking Beyond Masking: Demystifying Token-Based Pre-Training for Vision Transformers The code is coming Figure 1: Pipeline of token-based pre-

Large-Scale Pre-training for Person Re-identification with Noisy Labels (LUPerson-NL)

LUPerson-NL Large-Scale Pre-training for Person Re-identification with Noisy Labels (LUPerson-NL) The repository is for our CVPR2022 paper Large-Scale

SIGIR'22 paper: Axiomatically Regularized Pre-training for Ad hoc Search

Introduction This codebase contains source-code of the Python-based implementation (ARES) of our SIGIR 2022 paper. Chen, Jia, et al. "Axiomatically Re

Cairo hooks for pre-commit

pre-commit-cairo Cairo hooks for pre-commit. See pre-commit for more details Using pre-commit-cairo with pre-commit Add this to your .pre-commit-confi

Princeton NLP's pre-training library based on fairseq with DeepSpeed kernel integration 🚃

This repository provides a library for efficient training of masked language models (MLM), built with fairseq. We fork fairseq to give researchers mor

Code for our SIGIR 2022 accepted paper : P3 Ranker: Mitigating the Gaps between Pre-training and Ranking Fine-tuning with Prompt-based Learning and Pre-finetuning

P3 Ranker Implementation for our SIGIR2022 accepted paper: P3 Ranker: Mitigating the Gaps between Pre-training and Ranking Fine-tuning with Prompt-bas

Text classification is one of the popular tasks in NLP that allows a program to classify free-text documents based on pre-defined classes.

Deep-Learning-for-Text-Document-Classification Text classification is one of the popular tasks in NLP that allows a program to classify free-text docu

PyTorch Implementation of "Bridging Pre-trained Language Models and Hand-crafted Features for Unsupervised POS Tagging" (Findings of ACL 2022)

Feature_CRF_AE Feature_CRF_AE provides a implementation of Bridging Pre-trained Language Models and Hand-crafted Features for Unsupervised POS Tagging

SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING - The Facebook paper about fine tuning RoBERTa with contrastive loss

"# SUPERVISED-CONTRASTIVE-LEARNING-FOR-PRE-TRAINED-LANGUAGE-MODEL-FINE-TUNING" i

Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

Official repository of OFA. Paper: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework

KoRean based ELECTRA pre-trained models (KR-ELECTRA) for Tensorflow and PyTorch

KoRean based ELECTRA (KR-ELECTRA) This is a release of a Korean-specific ELECTRA model with comparable or better performances developed by the Computa

BROS: A Pre-trained Language Model Focusing on Text and Layout for Better Key Information Extraction from Documents

BROS (BERT Relying On Spatiality) is a pre-trained language model focusing on text and layout for better key information extraction from documents. Given the OCR results of the document image, which are text and bounding box pairs, it can perform various key information extraction tasks, such as extracting an ordered item list from receipts

Explore extreme compression for pre-trained language models

Code for paper "Exploring extreme parameter compression for pre-trained language models ICLR2022"

/samples.jpg)

A collection of pre-trained StyleGAN2 models trained on different datasets at different resolution.

Awesome Pretrained StyleGAN2 A collection of pre-trained StyleGAN2 models trained on different datasets at different resolution. Note the readme is a

Helperpod - A CLI tool to run a Kubernetes utility pod with pre-installed tools that can be used for debugging/testing purposes inside a Kubernetes cluster

Helperpod is a CLI tool to run a Kubernetes utility pod with pre-installed tools that can be used for debugging/testing purposes inside a Kubernetes cluster.

PyTorch code for BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation

Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners

DART Implementation for ICLR2022 paper Differentiable Prompt Makes Pre-trained Language Models Better Few-shot Learners. Environment [email protected] Use pi

JASS: Japanese-specific Sequence to Sequence Pre-training for Neural Machine Translation

JASS: Japanese-specific Sequence to Sequence Pre-training for Neural Machine Translation This the repository for this paper. Find extensions of this w

Official code of Team Yao at Multi-Modal-Fact-Verification-2022

Official code of Team Yao at Multi-Modal-Fact-Verification-2022 A Multi-Modal Fact Verification dataset released as part of the De-Factify workshop in

Detic ros - A simple ROS wrapper for Detic instance segmentation using pre-trained dataset

Detic ros - A simple ROS wrapper for Detic instance segmentation using pre-trained dataset

SAS: Self-Augmentation Strategy for Language Model Pre-training

SAS: Self-Augmentation Strategy for Language Model Pre-training This repository

This repository contains pre-trained models and some evaluation code for our paper Towards Unsupervised Dense Information Retrieval with Contrastive Learning

Contriever: Towards Unsupervised Dense Information Retrieval with Contrastive Learning This repository contains pre-trained models and some evaluation

Revisiting Weakly Supervised Pre-Training of Visual Perception Models

SWAG: Supervised Weakly from hashtAGs This repository contains SWAG models from the paper Revisiting Weakly Supervised Pre-Training of Visual Percepti

A shimmer pre-load component for Plotly Dash

dash-loading-shimmer A shimmer pre-load component for Plotly Dash Installation Get it with pip: pip install dash-loading-extras Or maybe you prefer Pi

RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2

RoNER RoNER is a Named Entity Recognition model based on a pre-trained BERT transformer model trained on RONECv2. It is meant to be an easy to use, hi

CLIP (Contrastive Language-Image Pre-Training) is a neural network trained on a variety of (image, text) pairs

CLIP [Blog] [Paper] [Model Card] [Colab] CLIP (Contrastive Language-Image Pre-Training) is a neural network trained on a variety of (image, text) pair

Pre-trained models for a Cascaded-FCN in caffe and tensorflow that segments

Cascaded-FCN This repository contains the pre-trained models for a Cascaded-FCN in caffe and tensorflow that segments the liver and its lesions out of

This repository contains code, network definitions and pre-trained models for working on remote sensing images using deep learning

Deep learning for Earth Observation This repository contains code, network definitions and pre-trained models for working on remote sensing images usi

TLXZoo - Pre-trained models based on TensorLayerX

Pre-trained models based on TensorLayerX. TensorLayerX is a multi-backend AI fra

MADT: Offline Pre-trained Multi-Agent Decision Transformer

MADT: Offline Pre-trained Multi-Agent Decision Transformer A link to our paper can be found on Arxiv. Overview Official codebase for Offline Pre-train

X-VLM: Multi-Grained Vision Language Pre-Training

X-VLM: learning multi-grained vision language alignments Multi-Grained Vision Language Pre-Training: Aligning Texts with Visual Concepts. Yan Zeng, Xi

TweebankNLP - Pre-trained Tweet NLP Pipeline (NER, tokenization, lemmatization, POS tagging, dependency parsing) + Models + Tweebank-NER

TweebankNLP This repo contains the new Tweebank-NER dataset and Twitter-Stanza p

(Pre-)compromise operations for MITRE CALDERA

(Pre-)compromise operations for CALDERA Extend your CALDERA operations over the entire adversary killchain. In contrast to MITRE's access plugin, cald

A PyTorch implementation of VIOLET

VIOLET: End-to-End Video-Language Transformers with Masked Visual-token Modeling A PyTorch implementation of VIOLET Overview VIOLET is an implementati

Qcover is an open source effort to help exploring combinatorial optimization problems in Noisy Intermediate-scale Quantum(NISQ) processor.

Qcover is an open source effort to help exploring combinatorial optimization problems in Noisy Intermediate-scale Quantum(NISQ) processor. It is devel

Predicting 10 different clothing types using Xception pre-trained model.

Predicting-Clothing-Types Predicting 10 different clothing types using Xception pre-trained model from Keras library. It is reimplemented version from

Visual dialog agents with pre-trained vision-and-language encoders.

Learning Better Visual Dialog Agents with Pretrained Visual-Linguistic Representation Or READ-UP: Referring Expression Agent Dialog with Unified Pretr

POC of CVE-2021-26084, which is Atlassian Confluence Server OGNL Pre-Auth RCE Injection Vulneralibity.

CVE-2021-26084 Description POC of CVE-2021-26084, which is Atlassian Confluence Server OGNL(Object-Graph Navigation Language) Pre-Auth RCE Injection V

Pytorch implementation of MLP-Mixer with loading pre-trained models.

MLP-Mixer-Pytorch PyTorch implementation of MLP-Mixer: An all-MLP Architecture for Vision with the function of loading official ImageNet pre-trained p

A Comprehensive Empirical Study of Vision-Language Pre-trained Model for Supervised Cross-Modal Retrieval

CLIP4CMR A Comprehensive Empirical Study of Vision-Language Pre-trained Model for Supervised Cross-Modal Retrieval The original data and pre-calculate

Supervised 3D Pre-training on Large-scale 2D Natural Image Datasets for 3D Medical Image Analysis

Introduction This is an implementation of our paper Supervised 3D Pre-training on Large-scale 2D Natural Image Datasets for 3D Medical Image Analysis.

A Comprehensive Empirical Study of Vision-Language Pre-trained Model for Supervised Cross-Modal Retrieval

CLIP4CMR A Comprehensive Empirical Study of Vision-Language Pre-trained Model for Supervised Cross-Modal Retrieval The original data and pre-calculate

Official Implementation of "DialogLM: Pre-trained Model for Long Dialogue Understanding and Summarization."

DialogLM Code for AAAI 2022 paper: DialogLM: Pre-trained Model for Long Dialogue Understanding and Summarization. Pre-trained Models We release two ve

Cherche (search in French) allows you to create a neural search pipeline using retrievers and pre-trained language models as rankers.

Cherche (search in French) allows you to create a neural search pipeline using retrievers and pre-trained language models as rankers. Cherche is meant to be used with small to medium sized corpora. Cherche's main strength is its ability to build diverse and end-to-end pipelines.

Seasonal Contrast: Unsupervised Pre-Training from Uncurated Remote Sensing Data

Seasonal Contrast: Unsupervised Pre-Training from Uncurated Remote Sensing Data This is the official PyTorch implementation of the SeCo paper: @articl

Code, pre-trained models and saliency results for the paper "Boosting RGB-D Saliency Detection by Leveraging Unlabeled RGB Images".

Boosting RGB-D Saliency Detection by Leveraging Unlabeled RGB This repository is the official implementation of the paper. Our results comming soon in

UI2I via StyleGAN2 - Unsupervised image-to-image translation method via pre-trained StyleGAN2 network

We proposed an unsupervised image-to-image translation method via pre-trained StyleGAN2 network. paper: Unsupervised Image-to-Image Translation via Pr

Preprossing-loan-data-with-NumPy - In this project, I have cleaned and pre-processed the loan data that belongs to an affiliate bank based in the United States.

Preprossing-loan-data-with-NumPy In this project, I have cleaned and pre-processed the loan data that belongs to an affiliate bank based in the United

KoBERT - Korean BERT pre-trained cased (KoBERT)

KoBERT KoBERT Korean BERT pre-trained cased (KoBERT) Why'?' Training Environment Requirements How to install How to use Using with PyTorch Using with

Linescanning - Package for (pre)processing of anatomical and (linescanning) fMRI data

line scanning repository This repository contains all of the tools used during the acquisition and postprocessing of line scanning data at the Spinoza

Let's pretend you want to create a AWS Lambda project called "sns-processor".

Usage Let's pretend you want to create a AWS Lambda project called "sns-processor". Rather than using lambda and then editing the results to include y

PyTorch implementation for Graph Contrastive Learning with Augmentations

Graph Contrastive Learning with Augmentations PyTorch implementation for Graph Contrastive Learning with Augmentations [poster] [appendix] Yuning You*

CCCL: Contrastive Cascade Graph Learning.

CCGL: Contrastive Cascade Graph Learning This repo provides a reference implementation of Contrastive Cascade Graph Learning (CCGL) framework as descr

GCC: Graph Contrastive Coding for Graph Neural Network Pre-Training @ KDD 2020

GCC: Graph Contrastive Coding for Graph Neural Network Pre-Training Original implementation for paper GCC: Graph Contrastive Coding for Graph Neural N

Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking."

Expert-Linking Pytorch implementation of the paper "COAD: Contrastive Pre-training with Adversarial Fine-tuning for Zero-shot Expert Linking." This is

Library for managing git hooks

Autohooks Library for managing and writing git hooks in Python. Looking for automatic formatting or linting, e.g., with black and pylint, while creati

Pythonic event-processing library based on decorators

Process Events In Style This library aims to simplify the common pattern of event processing. It simplifies the process of filtering, dispatching and

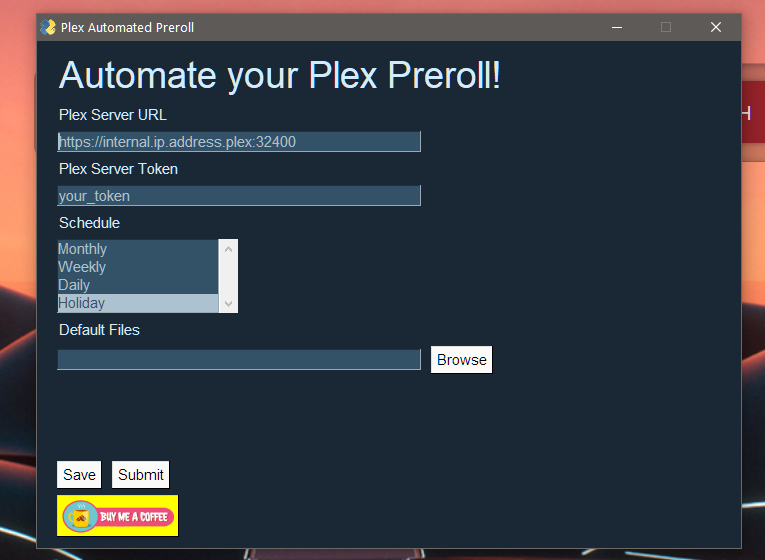

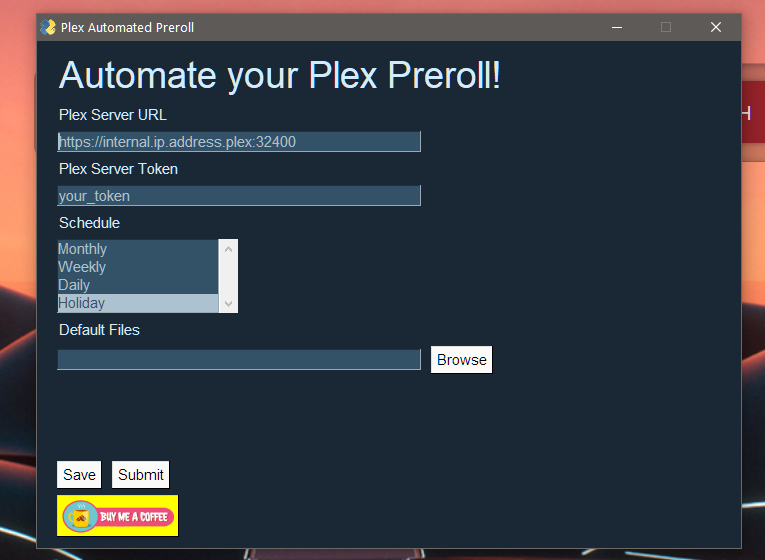

This is the new and improved Plex Automatic Pre-roll script with a GUI

Rollarr This is the new and improved Automatic Pre-roll script with a GUI for Plex now called Rollarr! It should be stable but if you find a bug pleas

Align and Prompt: Video-and-Language Pre-training with Entity Prompts

ALPRO Align and Prompt: Video-and-Language Pre-training with Entity Prompts [Paper] Dongxu Li, Junnan Li, Hongdong Li, Juan Carlos Niebles, Steven C.H

Pre-Training Graph Neural Networks for Cold-Start Users and Items Representation.

Pretrain-Recsys This is our Tensorflow implementation for our WSDM 2021 paper: Bowen Hao, Jing Zhang, Hongzhi Yin, Cuiping Li, Hong Chen. Pre-Training

Pre-training of Graph Augmented Transformers for Medication Recommendation

G-Bert Pre-training of Graph Augmented Transformers for Medication Recommendation Intro G-Bert combined the power of Graph Neural Networks and BERT (B

Code for KDD'20 "Generative Pre-Training of Graph Neural Networks"

GPT-GNN: Generative Pre-Training of Graph Neural Networks GPT-GNN is a pre-training framework to initialize GNNs by generative pre-training. It can be

![[ICML 2020] DrRepair: Learning to Repair Programs from Error Messages](https://github.com/michiyasunaga/DrRepair/raw/master/DrRepair-overview.png)

[ICML 2020] DrRepair: Learning to Repair Programs from Error Messages

DrRepair: Learning to Repair Programs from Error Messages This repo provides the source code & data of our paper: Graph-based, Self-Supervised Program

Autoregressive Predictive Coding: An unsupervised autoregressive model for speech representation learning

Autoregressive Predictive Coding This repository contains the official implementation (in PyTorch) of Autoregressive Predictive Coding (APC) proposed

A module for parsing and processing commands.

cmdtools A module for parsing and processing commands. Installation pip install --upgrade cmdtools-py install latest commit from GitHub pip install g

pypyr task-runner cli & api for automation pipelines.

pypyr task-runner cli & api for automation pipelines. Automate anything by combining commands, different scripts in different languages & applications into one pipeline process.

PyTorch implementation of Rethinking Positional Encoding in Language Pre-training

TUPE PyTorch implementation of Rethinking Positional Encoding in Language Pre-training. Quickstart Clone this repository. git clone https://github.com

An executor that performs standard pre-processing and normalization on images.

An executor that performs standard pre-processing and normalization on images.

Code release for SLIP Self-supervision meets Language-Image Pre-training

SLIP: Self-supervision meets Language-Image Pre-training What you can find in this repo: Pre-trained models (with ViT-Small, Base, Large) and code to

Some out-of-the-box hooks for pre-commit

pre-commit-hooks Some out-of-the-box hooks for pre-commit. See also: https://github.com/pre-commit/pre-commit Using pre-commit-hooks with pre-commit A

Ansible Automation Example: JSNAPY PRE/POST Upgrade Validation

Ansible Automation Example: JSNAPY PRE/POST Upgrade Validation Overview This example will show how to validate the status of our firewall before and a

This is the new and improved Plex Automatic Pre-roll script with a GUI

Plex-Automatic-Pre-roll-GUI This is the new and improved Plex Automatic Pre-roll script with a GUI! It should be stable but if you find a bug please l

Tensorflow Implementation for "Pre-trained Deep Convolution Neural Network Model With Attention for Speech Emotion Recognition"

Tensorflow Implementation for "Pre-trained Deep Convolution Neural Network Model With Attention for Speech Emotion Recognition" Pre-trained Deep Convo

Resources related to our paper "CLIN-X: pre-trained language models and a study on cross-task transfer for concept extraction in the clinical domain"

CLIN-X (CLIN-X-ES) & (CLIN-X-EN) This repository holds the companion code for the system reported in the paper: "CLIN-X: pre-trained language models a

BARTpho: Pre-trained Sequence-to-Sequence Models for Vietnamese

Table of contents Introduction Using BARTpho with fairseq Using BARTpho with transformers Notes BARTpho: Pre-trained Sequence-to-Sequence Models for V

A PyTorch-based model pruning toolkit for pre-trained language models

English | 中文说明 TextPruner是一个为预训练语言模型设计的模型裁剪工具包,通过轻量、快速的裁剪方法对模型进行结构化剪枝,从而实现压缩模型体积、提升模型速度。 其他相关资源: 知识蒸馏工具TextBrewer:https://github.com/airaria/TextBrewe

On the Complementarity between Pre-Training and Back-Translation for Neural Machine Translation (Findings of EMNLP 2021))

PTvsBT On the Complementarity between Pre-Training and Back-Translation for Neural Machine Translation (Findings of EMNLP 2021) Citation Please cite a

Industrial Image Anomaly Localization Based on Gaussian Clustering of Pre-trained Feature

Industrial Image Anomaly Localization Based on Gaussian Clustering of Pre-trained Feature Q. Wan, L. Gao, X. Li and L. Wen, "Industrial Image Anomaly

Some pre-commit hooks for OpenMMLab projects

pre-commit-hooks Some pre-commit hooks for OpenMMLab projects. Using pre-commit-hooks with pre-commit Add this to your .pre-commit-config.yaml - rep

Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition

SEW (Squeezed and Efficient Wav2vec) The repo contains the code of the paper "Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speec

Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE)

[中文|English] Rotary Transformer Rotary Transformer is an MLM pre-trained language model with rotary position embedding (RoPE). The RoPE is a relative

ByT5: Towards a token-free future with pre-trained byte-to-byte models

ByT5: Towards a token-free future with pre-trained byte-to-byte models ByT5 is a tokenizer-free extension of the mT5 model. Instead of using a subword

Code for CodeT5: a new code-aware pre-trained encoder-decoder model.

CodeT5: Identifier-aware Unified Pre-trained Encoder-Decoder Models for Code Understanding and Generation This is the official PyTorch implementation

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models

PEGASUS library Pre-training with Extracted Gap-sentences for Abstractive SUmmarization Sequence-to-sequence models, or PEGASUS, uses self-supervised