1820 Repositories

Python transformer-models Libraries

Meshed-Memory Transformer for Image Captioning. CVPR 2020

M²: Meshed-Memory Transformer This repository contains the reference code for the paper Meshed-Memory Transformer for Image Captioning (CVPR 2020). Pl

PyTorch code for MART: Memory-Augmented Recurrent Transformer for Coherent Video Paragraph Captioning

MART: Memory-Augmented Recurrent Transformer for Coherent Video Paragraph Captioning PyTorch code for our ACL 2020 paper "MART: Memory-Augmented Recur

Implementation of the Object Relation Transformer for Image Captioning

Object Relation Transformer This is a PyTorch implementation of the Object Relation Transformer published in NeurIPS 2019. You can find the paper here

Pytorch-diffusion - A basic PyTorch implementation of 'Denoising Diffusion Probabilistic Models'

PyTorch implementation of 'Denoising Diffusion Probabilistic Models' This reposi

DeepSpeech - Easy-to-use Speech Toolkit including SOTA ASR pipeline, influential TTS with text frontend and End-to-End Speech Simultaneous Translation.

(简体中文|English) Quick Start | Documents | Models List PaddleSpeech is an open-source toolkit on PaddlePaddle platform for a variety of critical tasks i

Computer-Vision-Paper-Reviews - Computer Vision Paper Reviews with Key Summary along Papers & Codes

Computer-Vision-Paper-Reviews Computer Vision Paper Reviews with Key Summary along Papers & Codes. Jonathan Choi 2021 50+ Papers across Computer Visio

LightningFSL: Pytorch-Lightning implementations of Few-Shot Learning models.

LightningFSL: Few-Shot Learning with Pytorch-Lightning In this repo, a number of pytorch-lightning implementations of FSL algorithms are provided, inc

CorrProxies - Optimizing Machine Learning Inference Queries with Correlative Proxy Models

CorrProxies - Optimizing Machine Learning Inference Queries with Correlative Proxy Models

Augmented CLIP - Training simple models to predict CLIP image embeddings from text embeddings, and vice versa.

Train aug_clip against laion400m-embeddings found here: https://laion.ai/laion-400-open-dataset/ - note that this used the base ViT-B/32 CLIP model. S

TrainingBike - Code, models and schematics I've used to interface my stationary training bike with PC.

TrainingBike Code, models and schematics I've used to interface my stationary training bike with PC. You can find more information about the project i

Implementation of hyperparameter optimization/tuning methods for machine learning & deep learning models

Hyperparameter Optimization of Machine Learning Algorithms This code provides a hyper-parameter optimization implementation for machine learning algor

Transformer - A TensorFlow Implementation of the Transformer: Attention Is All You Need

[UPDATED] A TensorFlow Implementation of Attention Is All You Need When I opened this repository in 2017, there was no official code yet. I tried to i

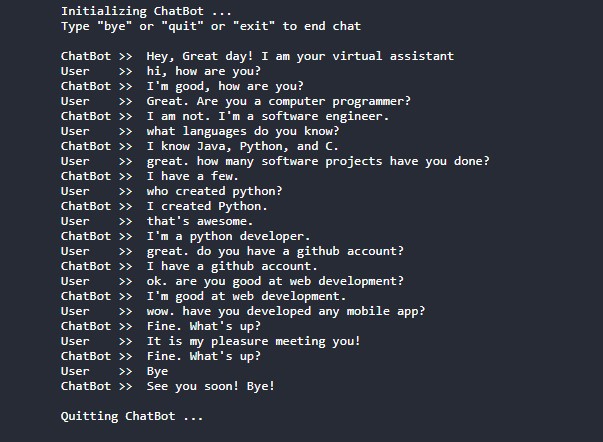

Conversational-AI-ChatBot - Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users!

Conversational AI ChatBot Intelligent ChatBot built with Microsoft's DialoGPT transformer to make conversations with human users! In this project? Thi

A collection of Machine Learning Models To Web Api which are built on open source technologies/frameworks like Django, Flask.

Author Ibrahim Koné From-Machine-Learning-Models-To-WebAPI A collection of Machine Learning Models To Web Api which are built on open source technolog

SPT_LSA_ViT - Implementation for Visual Transformer for Small-size Datasets

Vision Transformer for Small-Size Datasets Seung Hoon Lee and Seunghyun Lee and Byung Cheol Song | Paper Inha University Abstract Recently, the Vision

Time-series-deep-learning - Developing Deep learning LSTM, BiLSTM models, and NeuralProphet for multi-step time-series forecasting of stock price.

Stock Price Prediction Using Deep Learning Univariate Time Series Predicting stock price using historical data of a company using Neural networks for

Image-popularity-score - A novel deep regression method for image scoring.

Image-popularity-score - A novel deep regression method for image scoring.

Flask app to predict daily radiation from the time series of Solcast from Islamabad, Pakistan

Solar-radiation-ISB-MLOps - Flask app to predict daily radiation from the time series of Solcast from Islamabad, Pakistan.

Minimal diffusion models - Minimal code and simple experiments to play with Denoising Diffusion Probabilistic Models (DDPMs)

Minimal code and simple experiments to play with Denoising Diffusion Probabilist

SOTA easy to use PyTorch-based DL training library

Easily train or fine-tune SOTA computer vision models from one training repository. SuperGradients Introduction Welcome to SuperGradients, a free open

Offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation

Shunted Transformer This is the offical implementation of Shunted Self-Attention via Multi-Scale Token Aggregation by Sucheng Ren, Daquan Zhou, Shengf

PyTorch Implementation of SSTNs for hyperspectral image classifications from the IEEE T-GRS paper "Spectral-Spatial Transformer Network for Hyperspectral Image Classification: A FAS Framework."

PyTorch Implementation of SSTN for Hyperspectral Image Classification Paper links: SSTN published on IEEE T-GRS. Also, you can directly find the imple

Code for Universal Semi-Supervised Semantic Segmentation models paper accepted in ICCV 2019

USSS_ICCV19 Code for Universal Semi Supervised Semantic Segmentation accepted to ICCV 2019. Full Paper available at https://arxiv.org/abs/1811.10323.

Training neural models with structured signals.

Neural Structured Learning in TensorFlow Neural Structured Learning (NSL) is a new learning paradigm to train neural networks by leveraging structured

Ecco is a python library for exploring and explaining Natural Language Processing models using interactive visualizations.

Visualize, analyze, and explore NLP language models. Ecco creates interactive visualizations directly in Jupyter notebooks explaining the behavior of Transformer-based language models (like GPT2, BERT, RoBERTA, T5, and T0).

Beibo is a Python library that uses several AI prediction models to predict stocks returns over a defined period of time.

Beibo is a Python library that uses several AI prediction models to predict stocks returns over a defined period of time.

Contrastive Learning of Structured World Models

Contrastive Learning of Structured World Models This repository contains the official PyTorch implementation of: Contrastive Learning of Structured Wo

The official pytorch implementation of ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias

ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias Introduction | Updates | Usage | Results&Pretrained Models | Statement | Intr

LAVT: Language-Aware Vision Transformer for Referring Image Segmentation

LAVT: Language-Aware Vision Transformer for Referring Image Segmentation Where we are ? 12.27 目前和原论文仍有1%左右得差距,但已经力压很多SOTA了 ckpt__448_epoch_25.pth mIoU

Variable Transformer Calculator

✠ VASCO - VAriable tranSformer CalculatOr Software que calcula informações de transformadores feita para a matéria de "Conversão Eletromecânica de Ene

Official release of MSHT: Multi-stage Hybrid Transformer for the ROSE Image Analysis of Pancreatic Cancer axriv: http://arxiv.org/abs/2112.13513

MSHT: Multi-stage Hybrid Transformer for the ROSE Image Analysis This is the official page of the MSHT with its experimental script and records. We de

Quantized models with python

quantized-network download .pth files to qmodels/: googlenet : https://download.

A modular dynamical-systems model of Ethereum's validator economics.

CADLabs Ethereum Economic Model A modular dynamical-systems model of Ethereum's validator economics, based on the open-source Python library radCAD, a

Unofficial TensorFlow implementation of the Keyword Spotting Transformer model

Keyword Spotting Transformer This is the unofficial TensorFlow implementation of the Keyword Spotting Transformer model. This model is used to train o

Official repository of the paper Learning to Regress 3D Face Shape and Expression from an Image without 3D Supervision

Official repository of the paper Learning to Regress 3D Face Shape and Expression from an Image without 3D Supervision

TensorFlow implementation of "Attention is all you need (Transformer)"

[TensorFlow 2] Attention is all you need (Transformer) TensorFlow implementation of "Attention is all you need (Transformer)" Dataset The MNIST datase

XViT - Space-time Mixing Attention for Video Transformer

XViT - Space-time Mixing Attention for Video Transformer This is the official implementation of the XViT paper: @inproceedings{bulat2021space, title

GUI for visualization and interactive editing of SMPL-family body models ie. SMPL, SMPL-X, MANO, FLAME.

Body Model Visualizer Introduction This is a simple Open3D-based GUI for SMPL-family body models. This GUI lets you play with the shape, expression, a

Machine Learning Course Project, IMDB movie review sentiment analysis by lstm, cnn, and transformer

IMDB Sentiment Analysis This is the final project of Machine Learning Courses in Huazhong University of Science and Technology, School of Artificial I

GUI for visualization and interactive editing of SMPL-family body models ie. SMPL, SMPL-X, MANO, FLAME.

Body Model Visualizer Introduction This is a simple Open3D-based GUI for SMPL-family body models. This GUI lets you play with the shape, expression, a

LazyText is inspired b the idea of lazypredict, a library which helps build a lot of basic models without much code.

LazyText is inspired b the idea of lazypredict, a library which helps build a lot of basic models without much code. LazyText is for text what lazypredict is for numeric data.

PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation.

ALiBi PyTorch implementation of Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation. Quickstart Clone this reposit

A website which allows you to play with the GPT-2 transformer

transformers A website which allows you to play with the GPT-2 model Built with ❤️ by raphtlw Table of contents Model Setup About Contributors Model T

Tools to convert SQLAlchemy models to Pydantic models

Pydantic-SQLAlchemy Tools to generate Pydantic models from SQLAlchemy models. Still experimental. How to use Quick example: from typing import List f

Implementation of the final project of the course DDA6309 Probabilistic Graphical Model

Task-aware Joint CWS and POS (TCwsPos) This is the implementation of the final project of the course DDA6309 Probabilistic Graphical Models, The Chine

Tensorflow implementation for "Improved Transformer for High-Resolution GANs" (NeurIPS 2021).

HiT-GAN Official TensorFlow Implementation HiT-GAN presents a Transformer-based generator that is trained based on Generative Adversarial Networks (GA

CALPHAD tools for designing thermodynamic models, calculating phase diagrams and investigating phase equilibria.

CALPHAD tools for designing thermodynamic models, calculating phase diagrams and investigating phase equilibria.

A Python package to create, run, and post-process MODFLOW-based models.

Version 3.3.5 — release candidate Introduction FloPy includes support for MODFLOW 6, MODFLOW-2005, MODFLOW-NWT, MODFLOW-USG, and MODFLOW-2000. Other s

A minimal implementation of face-detection models using flask, gunicorn, nginx, docker, and docker-compose

Face-Detection-flask-gunicorn-nginx-docker This is a simple implementation of dockerized face-detection restful-API implemented with flask, Nginx, and

An executor that loads ONNX models and embeds documents using the ONNX runtime.

ONNXEncoder An executor that loads ONNX models and embeds documents using the ONNX runtime. Usage via Docker image (recommended) from jina import Flow

Group Activity Recognition with Clustered Spatial Temporal Transformer

GroupFormer Group Activity Recognition with Clustered Spatial-TemporalTransformer Backbone Style Action Acc Activity Acc Config Download Inv3+flow+pos

Source code of SIGIR2021 Paper 'One Chatbot Per Person: Creating Personalized Chatbots based on Implicit Profiles'

DHAP Source code of SIGIR2021 Long Paper: One Chatbot Per Person: Creating Personalized Chatbots based on Implicit User Profiles . Preinstallation Fir

Model Zoo for MindSpore

Welcome to the Model Zoo for MindSpore In order to facilitate developers to enjoy the benefits of MindSpore framework, we will continue to add typical

A few stylization coreML models that I've trained with CreateML

CoreML-StyleTransfer A few stylization coreML models that I've trained with CreateML You can open and use the .mlmodel files in the "models" folder in

Code implementation from my Medium blog post: [Transformers from Scratch in PyTorch]

transformer-from-scratch Code for my Medium blog post: Transformers from Scratch in PyTorch Note: This Transformer code does not include masked attent

Latte: Cross-framework Python Package for Evaluation of Latent-based Generative Models

Cross-framework Python Package for Evaluation of Latent-based Generative Models Latte Latte (for LATent Tensor Evaluation) is a cross-framework Python

High-Resolution Image Synthesis with Latent Diffusion Models

Latent Diffusion Models arXiv | BibTeX High-Resolution Image Synthesis with Latent Diffusion Models Robin Rombach*, Andreas Blattmann*, Dominik Lorenz

YAML-formatted plain-text file based models for Flask backed by Flask-SQLAlchemy

Flask-FileAlchemy Flask-FileAlchemy is a Flask extension that lets you use Markdown or YAML formatted plain-text files as the main data store for your

This repository contains a CBIR system that uses swin transformer to extract image's feature.

Swin-transformer based CBIR This repository contains a CBIR(content-based image retrieval) system. Here we use Swin-transformer to extract query image

Python SDK for building, training, and deploying ML models

Overview of Kubeflow Fairing Kubeflow Fairing is a Python package that streamlines the process of building, training, and deploying machine learning (

This repository contains the code needed to train Mega-NeRF models and generate the sparse voxel octrees

Mega-NeRF This repository contains the code needed to train Mega-NeRF models and generate the sparse voxel octrees used by the Mega-NeRF-Dynamic viewe

StyleSwin: Transformer-based GAN for High-resolution Image Generation

StyleSwin This repo is the official implementation of "StyleSwin: Transformer-based GAN for High-resolution Image Generation". By Bowen Zhang, Shuyang

Official Pytorch implementation of the paper: "Locally Shifted Attention With Early Global Integration"

Locally-Shifted-Attention-With-Early-Global-Integration Pretrained models You can download all the models from here. Training Imagenet python -m torch

Source code of the paper "Deep Learning of Latent Variable Models for Industrial Process Monitoring".

Source code of the paper "Deep Learning of Latent Variable Models for Industrial Process Monitoring".

This repository contains the code, data, and models of the paper titled "CrossSum: Beyond English-Centric Cross-Lingual Abstractive Text Summarization for 1500+ Language Pairs".

CrossSum This repository contains the code, data, and models of the paper titled "CrossSum: Beyond English-Centric Cross-Lingual Abstractive Text Summ

Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021).

STAR-pytorch Implementation for paper "STAR: A Structure-aware Lightweight Transformer for Real-time Image Enhancement" (ICCV 2021). CVF (pdf) STAR-DC

This project aims to explore the deployment of Swin-Transformer based on TensorRT, including the test results of FP16 and INT8.

Swin Transformer This project aims to explore the deployment of SwinTransformer based on TensorRT, including the test results of FP16 and INT8. Introd

Matching python environment code for Lux AI 2021 Kaggle competition, and a gym interface for RL models.

Lux AI 2021 python game engine and gym This is a replica of the Lux AI 2021 game ported directly over to python. It also sets up a classic Reinforceme

Official codebase for running the small, filtered-data GLIDE model from GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models.

GLIDE This is the official codebase for running the small, filtered-data GLIDE model from GLIDE: Towards Photorealistic Image Generation and Editing w

OSLO: Open Source framework for Large-scale transformer Optimization

O S L O Open Source framework for Large-scale transformer Optimization What's New: December 21, 2021 Released OSLO 1.0. What is OSLO about? OSLO is a

High-Resolution Image Synthesis with Latent Diffusion Models

Latent Diffusion Models Requirements A suitable conda environment named ldm can be created and activated with: conda env create -f environment.yaml co

Quick tutorial on orchest.io that shows how to build multiple deep learning models on your data with a single line of code using python

Deep AutoViML Pipeline for orchest.io Quickstart Build Deep Learning models with a single line of code: deep_autoviml Deep AutoViML helps you build te

📜 GPT-2 Rhyming Limerick and Haiku models using data augmentation

Well-formed Limericks and Haikus with GPT2 📜 GPT-2 Rhyming Limerick and Haiku models using data augmentation In collaboration with Matthew Korahais &

Resources related to our paper "CLIN-X: pre-trained language models and a study on cross-task transfer for concept extraction in the clinical domain"

CLIN-X (CLIN-X-ES) & (CLIN-X-EN) This repository holds the companion code for the system reported in the paper: "CLIN-X: pre-trained language models a

TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning

TransZero++ This repository contains the testing code for the paper "TransZero++: Cross Attribute-guided Transformer for Zero-Shot Learning" submitted

The source code of "Language Models are Few-shot Multilingual Learners" (MRL @ EMNLP 2021)

Language Models are Few-shot Multilingual Learners Paper This is the source code of the paper [Arxiv] [ACL Anthology]: This code has been written usin

MISSFormer: An Effective Medical Image Segmentation Transformer

MISSFormer Code for paper "MISSFormer: An Effective Medical Image Segmentation Transformer". Please read our preprint at the following link: paper_add

Header-only library for using Keras models in C++.

frugally-deep Use Keras models in C++ with ease Table of contents Introduction Usage Performance Requirements and Installation FAQ Introduction Would

Robust fine-tuning of zero-shot models

Robust fine-tuning of zero-shot models This repository contains code for the paper Robust fine-tuning of zero-shot models by Mitchell Wortsman*, Gabri

TensorFlow implementation of "TokenLearner: What Can 8 Learned Tokens Do for Images and Videos?"

TokenLearner: What Can 8 Learned Tokens Do for Images and Videos? Source: Improving Vision Transformer Efficiency and Accuracy by Learning to Tokenize

BARTpho: Pre-trained Sequence-to-Sequence Models for Vietnamese

Table of contents Introduction Using BARTpho with fairseq Using BARTpho with transformers Notes BARTpho: Pre-trained Sequence-to-Sequence Models for V

J.A.R.V.I.S is an AI virtual assistant made in python.

J.A.R.V.I.S is an AI virtual assistant made in python. Running JARVIS Without Python To run JARVIS without python: 1. Head over to our installation pa

Benchmark for the generalization of 3D machine learning models across different remeshing/samplings of a surface.

Discretization Robust Correspondence Benchmark One challenge of machine learning on 3D surfaces is that there are many different representations/sampl

The aim is to contain multiple models for materials discovery under a common interface

Aviary The aviary contains: - roost, - wren, cgcnn. The aim is to contain multiple models for materials discovery under a common interface Environment

Django models and endpoints for working with large images -- tile serving

Django Large Image Models and endpoints for working with large images in Django -- specifically geared towards geospatial tile serving. DISCLAIMER: th

Training deep models using anime, illustration images.

animeface deep models for anime images. Datasets anime-face-dataset Anime faces collected from Getchu.com. Based on Mckinsey666's dataset. 63.6K image

A PyTorch-based model pruning toolkit for pre-trained language models

English | 中文说明 TextPruner是一个为预训练语言模型设计的模型裁剪工具包,通过轻量、快速的裁剪方法对模型进行结构化剪枝,从而实现压缩模型体积、提升模型速度。 其他相关资源: 知识蒸馏工具TextBrewer:https://github.com/airaria/TextBrewe

A library that allows for inference on probabilistic models

Bean Machine Overview Bean Machine is a probabilistic programming language for inference over statistical models written in the Python language using

The code written during my Bachelor Thesis "Classification of Human Whole-Body Motion using Hidden Markov Models".

This code was written during the course of my Bachelor thesis Classification of Human Whole-Body Motion using Hidden Markov Models. Some things might

Training PyTorch models with differential privacy

Opacus is a library that enables training PyTorch models with differential privacy. It supports training with minimal code changes required on the cli

Implementing Vision Transformer (ViT) in PyTorch

Lightning-Hydra-Template A clean and scalable template to kickstart your deep learning project 🚀 ⚡ 🔥 Click on Use this template to initialize new re

Official code for "On the Frequency Bias of Generative Models", NeurIPS 2021

Frequency Bias of Generative Models Generator Testbed Discriminator Testbed This repository contains official code for the paper On the Frequency Bias

Build Low Code Automated Tensorflow, What-IF explainable models in just 3 lines of code.

Build Low Code Automated Tensorflow explainable models in just 3 lines of code.

scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms.

Sklearn-genetic-opt scikit-learn models hyperparameters tuning and feature selection, using evolutionary algorithms. This is meant to be an alternativ

Official code repository for "Exploring Neural Models for Query-Focused Summarization"

Query-Focused Summarization Official code repository for "Exploring Neural Models for Query-Focused Summarization" This is a work in progress. Expect

Lex Rosetta: Transfer of Predictive Models Across Languages, Jurisdictions, and Legal Domains

Lex Rosetta: Transfer of Predictive Models Across Languages, Jurisdictions, and Legal Domains This is an accompanying repository to the ICAIL 2021 pap

"Learning and Analyzing Generation Order for Undirected Sequence Models" in Findings of EMNLP, 2021

undirected-generation-dev This repo contains the source code of the models described in the following paper "Learning and Analyzing Generation Order f

Mixed Transformer UNet for Medical Image Segmentation

MT-UNet Update 2021/11/19 Thank you for your interest in our work. We have uploaded the code of our MTUNet to help peers conduct further research on i

Ensembling Off-the-shelf Models for GAN Training

Vision-aided GAN video (3m) | website | paper Can the collective knowledge from a large bank of pretrained vision models be leveraged to improve GAN t