1978 Repositories

Python transformer-networks Libraries

Code for Understanding Pooling in Graph Neural Networks

Select, Reduce, Connect This repository contains the code used for the experiments of: "Understanding Pooling in Graph Neural Networks" Setup Install

Official PyTorch implementation of "AASIST: Audio Anti-Spoofing using Integrated Spectro-Temporal Graph Attention Networks"

AASIST This repository provides the overall framework for training and evaluating audio anti-spoofing systems proposed in 'AASIST: Audio Anti-Spoofing

PyTorch implementation for paper StARformer: Transformer with State-Action-Reward Representations.

StARformer This repository contains the PyTorch implementation for our paper titled StARformer: Transformer with State-Action-Reward Representations.

Code for the ICCV 2021 Workshop paper: A Unified Efficient Pyramid Transformer for Semantic Segmentation.

Unified-EPT Code for the ICCV 2021 Workshop paper: A Unified Efficient Pyramid Transformer for Semantic Segmentation. Installation Linux, CUDA=10.0,

![[ICCV 2021 Oral] SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer](https://github.com/AllenXiangX/SnowflakeNet/raw/main/pics/network.png)

[ICCV 2021 Oral] SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer

This repository contains the source code for the paper SnowflakeNet: Point Cloud Completion by Snowflake Point Deconvolution with Skip-Transformer (ICCV 2021 Oral). The project page is here.

Pytorch implementation for our ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visual Question Answering".

TRAnsformer Routing Networks (TRAR) This is an official implementation for ICCV 2021 paper "TRAR: Routing the Attention Spans in Transformers for Visu

Alias-Free Generative Adversarial Networks (StyleGAN3) Official PyTorch implementation

Alias-Free Generative Adversarial Networks (StyleGAN3) Official PyTorch implementation

Permute Me Softly: Learning Soft Permutations for Graph Representations

Permute Me Softly: Learning Soft Permutations for Graph Representations

Hypercomplex Neural Networks with PyTorch

HyperNets Hypercomplex Neural Networks with PyTorch: this repository would be a container for hypercomplex neural network modules to facilitate resear

PyTorch Implementation of PortaSpeech: Portable and High-Quality Generative Text-to-Speech

PortaSpeech - PyTorch Implementation PyTorch Implementation of PortaSpeech: Portable and High-Quality Generative Text-to-Speech. Model Size Module Nor

GndNet: Fast ground plane estimation and point cloud segmentation for autonomous vehicles using deep neural networks.

GndNet: Fast Ground plane Estimation and Point Cloud Segmentation for Autonomous Vehicles. Authors: Anshul Paigwar, Ozgur Erkent, David Sierra Gonzale

Shallow Convolutional Neural Networks for Human Activity Recognition using Wearable Sensors

-IEEE-TIM-2021-1-Shallow-CNN-for-HAR [IEEE TIM 2021-1] Shallow Convolutional Neural Networks for Human Activity Recognition using Wearable Sensors All

A geometric deep learning pipeline for predicting protein interface contacts.

A geometric deep learning pipeline for predicting protein interface contacts.

HeatNet is a python package that provides tools to build, train and evaluate neural networks designed to predict extreme heat wave events globally on daily to subseasonal timescales.

HeatNet HeatNet is a python package that provides tools to build, train and evaluate neural networks designed to predict extreme heat wave events glob

Unofficial PyTorch implementation of MobileViT based on paper "MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer".

MobileViT RegNet Unofficial PyTorch implementation of MobileViT based on paper MOBILEVIT: LIGHT-WEIGHT, GENERAL-PURPOSE, AND MOBILE-FRIENDLY VISION TR

Google's Meena transformer chatbot implementation

Here's my attempt at recreating Meena, a state of the art chatbot developed by Google Research and described in the paper Towards a Human-like Open-Domain Chatbot.

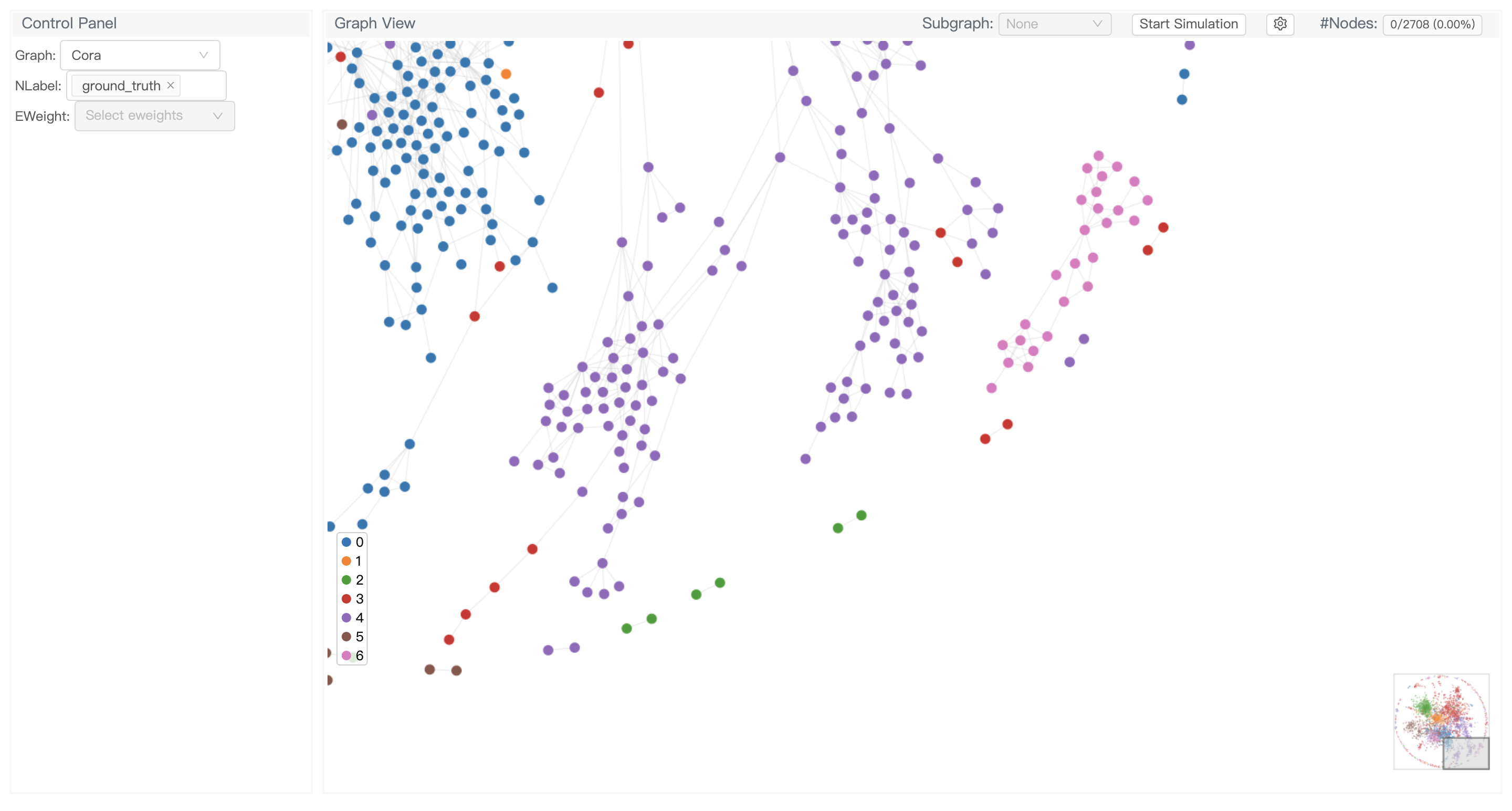

GNNLens2 is an interactive visualization tool for graph neural networks (GNN).

GNNLens2 is an interactive visualization tool for graph neural networks (GNN).

Mesh Graphormer is a new transformer-based method for human pose and mesh reconsruction from an input image

MeshGraphormer ✨ ✨ This is our research code of Mesh Graphormer. Mesh Graphormer is a new transformer-based method for human pose and mesh reconsructi

PortaSpeech - PyTorch Implementation

PortaSpeech - PyTorch Implementation PyTorch Implementation of PortaSpeech: Portable and High-Quality Generative Text-to-Speech. Model Size Module Nor

A library for performing coverage guided fuzzing of neural networks

TensorFuzz: Coverage Guided Fuzzing for Neural Networks This repository contains a library for performing coverage guided fuzzing of neural networks,

3D-Transformer: Molecular Representation with Transformer in 3D Space

3D-Transformer: Molecular Representation with Transformer in 3D Space

Physics-informed convolutional-recurrent neural networks for solving spatiotemporal PDEs

PhyCRNet Physics-informed convolutional-recurrent neural networks for solving spatiotemporal PDEs Paper link: [ArXiv] By: Pu Ren, Chengping Rao, Yang

The first public PyTorch implementation of Attentive Recurrent Comparators

arc-pytorch PyTorch implementation of Attentive Recurrent Comparators by Shyam et al. A blog explaining Attentive Recurrent Comparators Visualizing At

Code for the paper "Ordered Neurons: Integrating Tree Structures into Recurrent Neural Networks"

ON-LSTM This repository contains the code used for word-level language model and unsupervised parsing experiments in Ordered Neurons: Integrating Tree

Training RNNs as Fast as CNNs

News SRU++, a new SRU variant, is released. [tech report] [blog] The experimental code and SRU++ implementation are available on the dev branch which

A PyTorch Library for Accelerating 3D Deep Learning Research

Kaolin: A Pytorch Library for Accelerating 3D Deep Learning Research Overview NVIDIA Kaolin library provides a PyTorch API for working with a variety

![[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax](https://github.com/scaomath/galerkin-transformer/raw/main/data/simple_ft.png)

[NeurIPS 2021] Galerkin Transformer: a linear attention without softmax

[NeurIPS 2021] Galerkin Transformer: linear attention without softmax Summary A non-numerical analyst oriented explanation on Toward Data Science abou

Educational python for Neural Networks, written in pure Python/NumPy.

Educational python for Neural Networks, written in pure Python/NumPy.

Improving 3D Object Detection with Channel-wise Transformer

"Improving 3D Object Detection with Channel-wise Transformer" Thanks for the OpenPCDet, this implementation of the CT3D is mainly based on the pcdet v

Pytorch implementation of four neural network based domain adaptation techniques: DeepCORAL, DDC, CDAN and CDAN+E. Evaluated on benchmark dataset Office31.

Deep-Unsupervised-Domain-Adaptation Pytorch implementation of four neural network based domain adaptation techniques: DeepCORAL, DDC, CDAN and CDAN+E.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

An official repository for Paper "Uformer: A General U-Shaped Transformer for Image Restoration".

Uformer: A General U-Shaped Transformer for Image Restoration Zhendong Wang, Xiaodong Cun, Jianmin Bao and Jianzhuang Liu Paper: https://arxiv.org/abs

A Python library for Deep Probabilistic Modeling

Abstract DeeProb-kit is a Python library that implements deep probabilistic models such as various kinds of Sum-Product Networks, Normalizing Flows an

A simple recipe for training and inferencing Transformer architecture for Multi-Task Learning on custom datasets. You can find two approaches for achieving this in this repo.

multitask-learning-transformers A simple recipe for training and inferencing Transformer architecture for Multi-Task Learning on custom datasets. You

A curated (most recent) list of resources for Learning with Noisy Labels

A curated (most recent) list of resources for Learning with Noisy Labels

A PyTorch implementation of SlowFast based on ICCV 2019 paper "SlowFast Networks for Video Recognition"

SlowFast A PyTorch implementation of SlowFast based on ICCV 2019 paper SlowFast Networks for Video Recognition. Requirements Anaconda PyTorch conda in

Differentiable architecture search for convolutional and recurrent networks

Differentiable Architecture Search Code accompanying the paper DARTS: Differentiable Architecture Search Hanxiao Liu, Karen Simonyan, Yiming Yang. arX

PyTorch code accompanying our paper on Maximum Entropy Generators for Energy-Based Models

Maximum Entropy Generators for Energy-Based Models All experiments have tensorboard visualizations for samples / density / train curves etc. To run th

Learning Confidence for Out-of-Distribution Detection in Neural Networks

Learning Confidence Estimates for Neural Networks This repository contains the code for the paper Learning Confidence for Out-of-Distribution Detectio

Distributionally robust neural networks for group shifts

Distributionally Robust Neural Networks for Group Shifts: On the Importance of Regularization for Worst-Case Generalization This code implements the g

NEATEST: Evolving Neural Networks Through Augmenting Topologies with Evolution Strategy Training

NEATEST: Evolving Neural Networks Through Augmenting Topologies with Evolution Strategy Training

simple generative adversarial network (GAN) using PyTorch

Generative Adversarial Networks (GANs) in PyTorch Running Run the sample code by typing: ./gan_pytorch.py ...and you'll train two nets to battle it o

PyTorch Implementation of Fully Convolutional Networks. (Training code to reproduce the original result is available.)

pytorch-fcn PyTorch implementation of Fully Convolutional Networks. Requirements pytorch = 0.2.0 torchvision = 0.1.8 fcn = 6.1.5 Pillow scipy tqdm

PyTorch Tutorial for Deep Learning Researchers

This repository provides tutorial code for deep learning researchers to learn PyTorch. In the tutorial, most of the models were implemented with less

Using / reproducing ACD from the paper "Hierarchical interpretations for neural network predictions" 🧠 (ICLR 2019)

Hierarchical neural-net interpretations (ACD) 🧠 Produces hierarchical interpretations for a single prediction made by a pytorch neural network. Offic

Making decision trees competitive with neural networks on CIFAR10, CIFAR100, TinyImagenet200, Imagenet

Neural-Backed Decision Trees · Site · Paper · Blog · Video Alvin Wan, *Lisa Dunlap, *Daniel Ho, Jihan Yin, Scott Lee, Henry Jin, Suzanne Petryk, Sarah

Visualization toolkit for neural networks in PyTorch! Demo --

FlashTorch A Python visualization toolkit, built with PyTorch, for neural networks in PyTorch. Neural networks are often described as "black box". The

VSR-Transformer - This paper proposes a new Transformer for video super-resolution (called VSR-Transformer).

VSR-Transformer By Jiezhang Cao, Yawei Li, Kai Zhang, Luc Van Gool This paper proposes a new Transformer for video super-resolution (called VSR-Transf

Code release for ICCV 2021 paper "Anticipative Video Transformer"

Anticipative Video Transformer Ranked first in the Action Anticipation task of the CVPR 2021 EPIC-Kitchens Challenge! (entry: AVT-FB-UT) [project page

A simple but complete full-attention transformer with a set of promising experimental features from various papers

x-transformers A concise but fully-featured transformer, complete with a set of promising experimental features from various papers. Install $ pip ins

A python toolbox for predictive uncertainty quantification, calibration, metrics, and visualization

Website, Tutorials, and Docs Uncertainty Toolbox A python toolbox for predictive uncertainty quantification, calibration, metrics, and visualizatio

Unofficial Implementation of Zero-Shot Text-to-Speech for Text-Based Insertion in Audio Narration

Zero-Shot Text-to-Speech for Text-Based Insertion in Audio Narration This repo contains only model Implementation of Zero-Shot Text-to-Speech for Text

A Non-Autoregressive Transformer based TTS, supporting a family of SOTA transformers with supervised and unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate TTS.

A Non-Autoregressive Transformer based TTS, supporting a family of SOTA transformers with supervised and unsupervised duration modelings. This project grows with the research community, aiming to achieve the ultimate TTS.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

Lingvo is a framework for building neural networks in Tensorflow, particularly sequence models.

PyTorch Implementation of [1611.06440] Pruning Convolutional Neural Networks for Resource Efficient Inference

PyTorch implementation of [1611.06440 Pruning Convolutional Neural Networks for Resource Efficient Inference] This demonstrates pruning a VGG16 based

Code for paper "Energy-Constrained Compression for Deep Neural Networks via Weighted Sparse Projection and Layer Input Masking"

model_based_energy_constrained_compression Code for paper "Energy-Constrained Compression for Deep Neural Networks via Weighted Sparse Projection and

Learning Sparse Neural Networks through L0 regularization

Example implementation of the L0 regularization method described at Learning Sparse Neural Networks through L0 regularization, Christos Louizos, Max W

Distiller is an open-source Python package for neural network compression research.

Wiki and tutorials | Documentation | Getting Started | Algorithms | Design | FAQ Distiller is an open-source Python package for neural network compres

Tutorial for surrogate gradient learning in spiking neural networks

SpyTorch A tutorial on surrogate gradient learning in spiking neural networks Version: 0.4 This repository contains tutorial files to get you started

OptNet: Differentiable Optimization as a Layer in Neural Networks

OptNet: Differentiable Optimization as a Layer in Neural Networks This repository is by Brandon Amos and J. Zico Kolter and contains the PyTorch sourc

This repository contains an overview of important follow-up works based on the original Vision Transformer (ViT) by Google.

This repository contains an overview of important follow-up works based on the original Vision Transformer (ViT) by Google.

GluonMM is a library of transformer models for computer vision and multi-modality research

GluonMM is a library of transformer models for computer vision and multi-modality research. It contains reference implementations of widely adopted baseline models and also research work from Amazon Research.

The VeriNet toolkit for verification of neural networks

VeriNet The VeriNet toolkit is a state-of-the-art sound and complete symbolic interval propagation based toolkit for verification of neural networks.

Code and data form the paper BERT Got a Date: Introducing Transformers to Temporal Tagging

BERT Got a Date: Introducing Transformers to Temporal Tagging Satya Almasian*, Dennis Aumiller*, and Michael Gertz Heidelberg University Contact us vi

GPU-accelerated PyTorch implementation of Zero-shot User Intent Detection via Capsule Neural Networks

GPU-accelerated PyTorch implementation of Zero-shot User Intent Detection via Capsule Neural Networks This repository implements a capsule model Inten

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context Code in both PyTorch and TensorFlow

Transformer-XL: Attentive Language Models Beyond a Fixed-Length Context This repository contains the code in both PyTorch and TensorFlow for our paper

🤗 Transformers: State-of-the-art Natural Language Processing for Pytorch, TensorFlow, and JAX.

English | 简体中文 | 繁體中文 State-of-the-art Natural Language Processing for Jax, PyTorch and TensorFlow 🤗 Transformers provides thousands of pretrained mo

[ICLR'19] Trellis Networks for Sequence Modeling

TrellisNet for Sequence Modeling This repository contains the experiments done in paper Trellis Networks for Sequence Modeling by Shaojie Bai, J. Zico

Pervasive Attention: 2D Convolutional Networks for Sequence-to-Sequence Prediction

This is a fork of Fairseq(-py) with implementations of the following models: Pervasive Attention - 2D Convolutional Neural Networks for Sequence-to-Se

PyTorch implementation of convolutional neural networks-based text-to-speech synthesis models

Deepvoice3_pytorch PyTorch implementation of convolutional networks-based text-to-speech synthesis models: arXiv:1710.07654: Deep Voice 3: Scaling Tex

Sequence modeling benchmarks and temporal convolutional networks

Sequence Modeling Benchmarks and Temporal Convolutional Networks (TCN) This repository contains the experiments done in the work An Empirical Evaluati

pytorch implementation of Attention is all you need

A Pytorch Implementation of the Transformer: Attention Is All You Need Our implementation is largely based on Tensorflow implementation Requirements N

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Transformers4Rec is a flexible and efficient library for sequential and session-based recommendation, available for both PyTorch and Tensorflow.

Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models.

Tevatron Tevatron is a simple and efficient toolkit for training and running dense retrievers with deep language models. The toolkit has a modularized

Search and filter videos based on objects that appear in them using convolutional neural networks

Thingscoop: Utility for searching and filtering videos based on their content Description Thingscoop is a command-line utility for analyzing videos se

Pretty Tensor - Fluent Neural Networks in TensorFlow

Pretty Tensor provides a high level builder API for TensorFlow. It provides thin wrappers on Tensors so that you can easily build multi-layer neural networks.

A best practice for tensorflow project template architecture.

A best practice for tensorflow project template architecture.

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

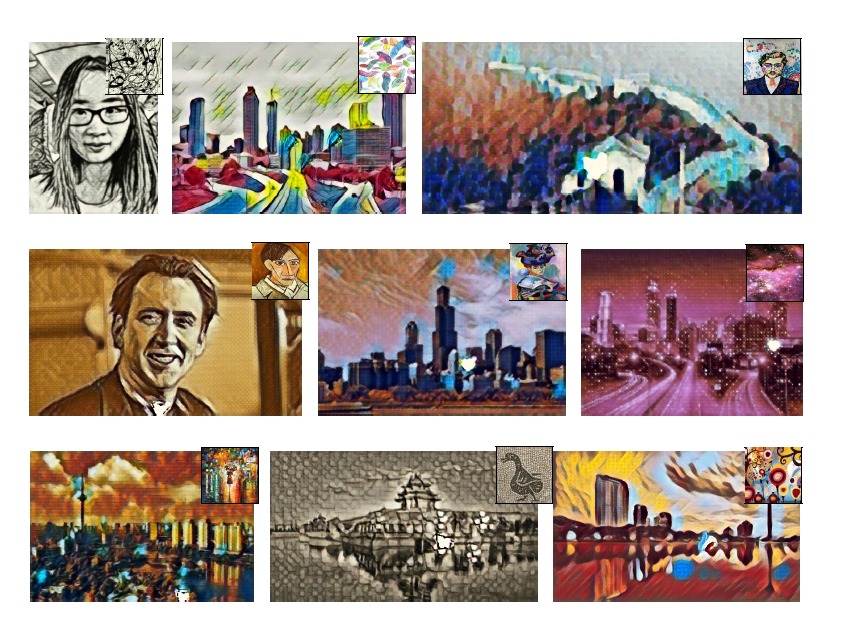

Neural Style and MSG-Net

PyTorch-Style-Transfer This repo provides PyTorch Implementation of MSG-Net (ours) and Neural Style (Gatys et al. CVPR 2016), which has been included

Pytorch implementation of Value Iteration Networks (NIPS 2016 best paper)

VIN: Value Iteration Networks A quick thank you A few others have released amazing related work which helped inspire and improve my own implementation

LeafSnap replicated using deep neural networks to test accuracy compared to traditional computer vision methods.

Deep-Leafsnap Convolutional Neural Networks have become largely popular in image tasks such as image classification recently largely due to to Krizhev

Code for the paper "Adversarial Generator-Encoder Networks"

This repository contains code for the paper "Adversarial Generator-Encoder Networks" (AAAI'18) by Dmitry Ulyanov, Andrea Vedaldi, Victor Lempitsky. Pr

Tree LSTM implementation in PyTorch

Tree-Structured Long Short-Term Memory Networks This is a PyTorch implementation of Tree-LSTM as described in the paper Improved Semantic Representati

A PyTorch implementation of Multi-digit Number Recognition from Street View Imagery using Deep Convolutional Neural Networks

SVHNClassifier-PyTorch A PyTorch implementation of Multi-digit Number Recognition from Street View Imagery using Deep Convolutional Neural Networks If

Improving Convolutional Networks via Attention Transfer (ICLR 2017)

Attention Transfer PyTorch code for "Paying More Attention to Attention: Improving the Performance of Convolutional Neural Networks via Attention Tran

PyTorch implementation of Value Iteration Networks (VIN): Clean, Simple and Modular. Visualization in Visdom.

VIN: Value Iteration Networks This is an implementation of Value Iteration Networks (VIN) in PyTorch to reproduce the results.(TensorFlow version) Key

OptNet: Differentiable Optimization as a Layer in Neural Networks

OptNet: Differentiable Optimization as a Layer in Neural Networks This repository is by Brandon Amos and J. Zico Kolter and contains the PyTorch sourc

A simple PyTorch Implementation of Generative Adversarial Networks, focusing on anime face drawing.

AnimeGAN A simple PyTorch Implementation of Generative Adversarial Networks, focusing on anime face drawing. Randomly Generated Images The images are

Highway networks implemented in PyTorch.

PyTorch Highway Networks Highway networks implemented in PyTorch. Just the MNIST example from PyTorch hacked to work with Highway layers. Todo Make th

3.8% and 18.3% on CIFAR-10 and CIFAR-100

Wide Residual Networks This code was used for experiments with Wide Residual Networks (BMVC 2016) http://arxiv.org/abs/1605.07146 by Sergey Zagoruyko

Wide Residual Networks (WideResNets) in PyTorch

Wide Residual Networks (WideResNets) in PyTorch WideResNets for CIFAR10/100 implemented in PyTorch. This implementation requires less GPU memory than

PyTorch Implementation of Fully Convolutional Networks. (Training code to reproduce the original result is available.)

pytorch-fcn PyTorch implementation of Fully Convolutional Networks. Requirements pytorch = 0.2.0 torchvision = 0.1.8 fcn = 6.1.5 Pillow scipy tqdm

A PyTorch implementation for V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation

A PyTorch implementation of V-Net Vnet is a PyTorch implementation of the paper V-Net: Fully Convolutional Neural Networks for Volumetric Medical Imag

Official implementation of "Learning to Discover Cross-Domain Relations with Generative Adversarial Networks"

DiscoGAN Official PyTorch implementation of Learning to Discover Cross-Domain Relations with Generative Adversarial Networks. Prerequisites Python 2.7

PyTorch implementation of "Learning to Discover Cross-Domain Relations with Generative Adversarial Networks"

DiscoGAN in PyTorch PyTorch implementation of Learning to Discover Cross-Domain Relations with Generative Adversarial Networks. * All samples in READM

PyTorch implementation of "Image-to-Image Translation Using Conditional Adversarial Networks".

pix2pix-pytorch PyTorch implementation of Image-to-Image Translation Using Conditional Adversarial Networks. Based on pix2pix by Phillip Isola et al.

PyTorch implementation of the Value Iteration Networks (VIN) (NIPS '16 best paper)

Value Iteration Networks in PyTorch Tamar, A., Wu, Y., Thomas, G., Levine, S., and Abbeel, P. Value Iteration Networks. Neural Information Processing

This implements one of result networks from Large-scale evolution of image classifiers

Exotic structured image classifier This implements one of result networks from Large-scale evolution of image classifiers by Esteban Real, et. al. Req

Official DGL implementation of "Rethinking High-order Graph Convolutional Networks"

SE Aggregation This is the implementation for Rethinking High-order Graph Convolutional Networks. Here we show the codes for citation networks as an e