1978 Repositories

Python transformer-networks Libraries

Official implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification

CrossViT This repository is the official implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification. ArXiv If

A model library for exploring state-of-the-art deep learning topologies and techniques for optimizing Natural Language Processing neural networks

A Deep Learning NLP/NLU library by Intel® AI Lab Overview | Models | Installation | Examples | Documentation | Tutorials | Contributing NLP Architect

Official implement of Evo-ViT: Slow-Fast Token Evolution for Dynamic Vision Transformer

Evo-ViT: Slow-Fast Token Evolution for Dynamic Vision Transformer This repository contains the PyTorch code for Evo-ViT. This work proposes a slow-fas

A machine learning library for spiking neural networks. Supports training with both torch and jax pipelines, and deployment to neuromorphic hardware.

Rockpool Rockpool is a Python package for developing signal processing applications with spiking neural networks. Rockpool allows you to build network

Learning based AI for playing multi-round Koi-Koi hanafuda card games. Have fun.

Koi-Koi AI Learning based AI for playing multi-round Koi-Koi hanafuda card games. Platform Python PyTorch PySimpleGUI (for the interface playing vs AI

It is a tool that looks for a specific username in social networks

It is a tool that looks for a specific username in social networks

Netwalk is a Python library to discover, parse, analyze and change Cisco switched networks

Netwalk is a Python library born out of a large remadiation project aimed at making network device discovery and management as fast and painless as possible.

Natural Language Processing with transformers

we want to create a repo to illustrate usage of transformers in chinese

A collection of SOTA Image Classification Models in PyTorch

A collection of SOTA Image Classification Models in PyTorch

This is the official code for the paper "Tracker Meets Night: A Transformer Enhancer for UAV Tracking".

SCT This is the official code for the paper "Tracker Meets Night: A Transformer Enhancer for UAV Tracking" The spatial-channel Transformer (SCT) enhan

Implementation of a Transformer, but completely in Triton

Transformer in Triton (wip) Implementation of a Transformer, but completely in Triton. I'm completely new to lower-level neural net code, so this repo

Code & Models for 3DETR - an End-to-end transformer model for 3D object detection

3DETR: An End-to-End Transformer Model for 3D Object Detection PyTorch implementation and models for 3DETR. 3DETR (3D DEtection TRansformer) is a simp

This is an open-source toolkit for Heterogeneous Graph Neural Network(OpenHGNN) based on DGL [Deep Graph Library] and PyTorch.

This is an open-source toolkit for Heterogeneous Graph Neural Network(OpenHGNN) based on DGL [Deep Graph Library] and PyTorch.

CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Generation

CPT This repository contains code and checkpoints for CPT. CPT: A Pre-Trained Unbalanced Transformer for Both Chinese Language Understanding and Gener

Implementation of Hierarchical Transformer Memory (HTM) for Pytorch

Hierarchical Transformer Memory (HTM) - Pytorch Implementation of Hierarchical Transformer Memory (HTM) for Pytorch. This Deepmind paper proposes a si

Must-read Papers on Physics-Informed Neural Networks.

PINNpapers Contributed by IDRL lab. Introduction Physics-Informed Neural Network (PINN) has achieved great success in scientific computing since 2017.

MVGCN: a novel multi-view graph convolutional network (MVGCN) framework for link prediction in biomedical bipartite networks.

MVGCN MVGCN: a novel multi-view graph convolutional network (MVGCN) framework for link prediction in biomedical bipartite networks. Developer: Fu Hait

Channel Pruning for Accelerating Very Deep Neural Networks (ICCV'17)

Channel Pruning for Accelerating Very Deep Neural Networks (ICCV'17)

GNPy: Optical Route Planning and DWDM Network Optimization

GNPy is an open-source, community-developed library for building route planning and optimization tools in real-world mesh optical networks

Selene is a Python library and command line interface for training deep neural networks from biological sequence data such as genomes.

Selene is a Python library and command line interface for training deep neural networks from biological sequence data such as genomes.

code for Multi-scale Matching Networks for Semantic Correspondence, ICCV

MMNet This repo is the official implementation of ICCV 2021 paper "Multi-scale Matching Networks for Semantic Correspondence.". Pre-requisite conda cr

![[ICCV 2021] Amplitude-Phase Recombination: Rethinking Robustness of Convolutional Neural Networks in Frequency Domain](https://github.com/iCGY96/APR/raw/main/img/motivation.png)

[ICCV 2021] Amplitude-Phase Recombination: Rethinking Robustness of Convolutional Neural Networks in Frequency Domain

Amplitude-Phase Recombination (ICCV'21) Official PyTorch implementation of "Amplitude-Phase Recombination: Rethinking Robustness of Convolutional Neur

G-NIA model from "Single Node Injection Attack against Graph Neural Networks" (CIKM 2021)

Single Node Injection Attack against Graph Neural Networks This repository is our Pytorch implementation of our paper: Single Node Injection Attack ag

Optimized code based on M2 for faster image captioning training

Transformer Captioning This repository contains the code for Transformer-based image captioning. Based on meshed-memory-transformer, we further optimi

Face Mask Detection is a project to determine whether someone is wearing mask or not, using deep neural network.

face-mask-detection Face Mask Detection is a project to determine whether someone is wearing mask or not, using deep neural network. It contains 3 scr

Tutorial to pretrain & fine-tune a 🤗 Flax T5 model on a TPUv3-8 with GCP

Pretrain and Fine-tune a T5 model with Flax on GCP This tutorial details how pretrain and fine-tune a FlaxT5 model from HuggingFace using a TPU VM ava

Classifying audio using Wavelet transform and deep learning

Audio Classification using Wavelet Transform and Deep Learning A step-by-step tutorial to classify audio signals using continuous wavelet transform (C

An Implementation of SiameseRPN with Feature Pyramid Networks

SiameseRPN with FPN This project is mainly based on HelloRicky123/Siamese-RPN. What I've done is just add a Feature Pyramid Network method to the orig

Code and checkpoints for training the transformer-based Table QA models introduced in the paper TAPAS: Weakly Supervised Table Parsing via Pre-training.

End-to-end neural table-text understanding models.

CTRL-C: Camera calibration TRansformer with Line-Classification

CTRL-C: Camera calibration TRansformer with Line-Classification This repository contains the official code and pretrained models for CTRL-C (Camera ca

nnFormer: Interleaved Transformer for Volumetric Segmentation Code for paper "nnFormer: Interleaved Transformer for Volumetric Segmentation "

nnFormer: Interleaved Transformer for Volumetric Segmentation Code for paper "nnFormer: Interleaved Transformer for Volumetric Segmentation ". Please

CMT: Convolutional Neural Networks Meet Vision Transformers

CMT: Convolutional Neural Networks Meet Vision Transformers [arxiv] 1. Introduction This repo is the CMT model which impelement with pytorch, no refer

Code for the paper "Reinforcement Learning as One Big Sequence Modeling Problem"

Trajectory Transformer Code release for Reinforcement Learning as One Big Sequence Modeling Problem. Installation All python dependencies are in envir

Voxel Transformer for 3D object detection

Voxel Transformer This is a reproduced repo of Voxel Transformer for 3D object detection. The code is mainly based on OpenPCDet. Introduction We provi

Adaptive Pyramid Context Network for Semantic Segmentation (APCNet CVPR'2019)

Adaptive Pyramid Context Network for Semantic Segmentation (APCNet CVPR'2019) Introduction Official implementation of Adaptive Pyramid Context Network

ZSL-KG is a general-purpose zero-shot learning framework with a novel transformer graph convolutional network (TrGCN) to learn class representation from common sense knowledge graphs.

ZSL-KG is a general-purpose zero-shot learning framework with a novel transformer graph convolutional network (TrGCN) to learn class representa

Image Captioning using CNN and Transformers

Image-Captioning Keras/Tensorflow Image Captioning application using CNN and Transformer as encoder/decoder. In particulary, the architecture consists

TorchIO is a Medical image preprocessing and augmentation toolkit for deep learning. Part of the PyTorch Ecosystem.

Medical image preprocessing and augmentation toolkit for deep learning. Part of the PyTorch Ecosystem.

Train GPT-3 model on V100(16GB Mem) Using improved Transformer.

GPT-X using transformer pytorch

Bayesian Neural Networks in PyTorch

We present the new scheme to compute Monte Carlo estimator in Bayesian VI settings with almost no memory cost in GPU, regardles of the number of sampl

超轻量级bert的pytorch版本,大量中文注释,容易修改结构,持续更新

bert4pytorch 2021年8月27更新: 感谢大家的star,最近有小伙伴反映了一些小的bug,我也注意到了,奈何这个月工作上实在太忙,更新不及时,大约会在9月中旬集中更新一个只需要pip一下就完全可用的版本,然后会新添加一些关键注释。 再增加对抗训练的内容,更新一个完整的finetune

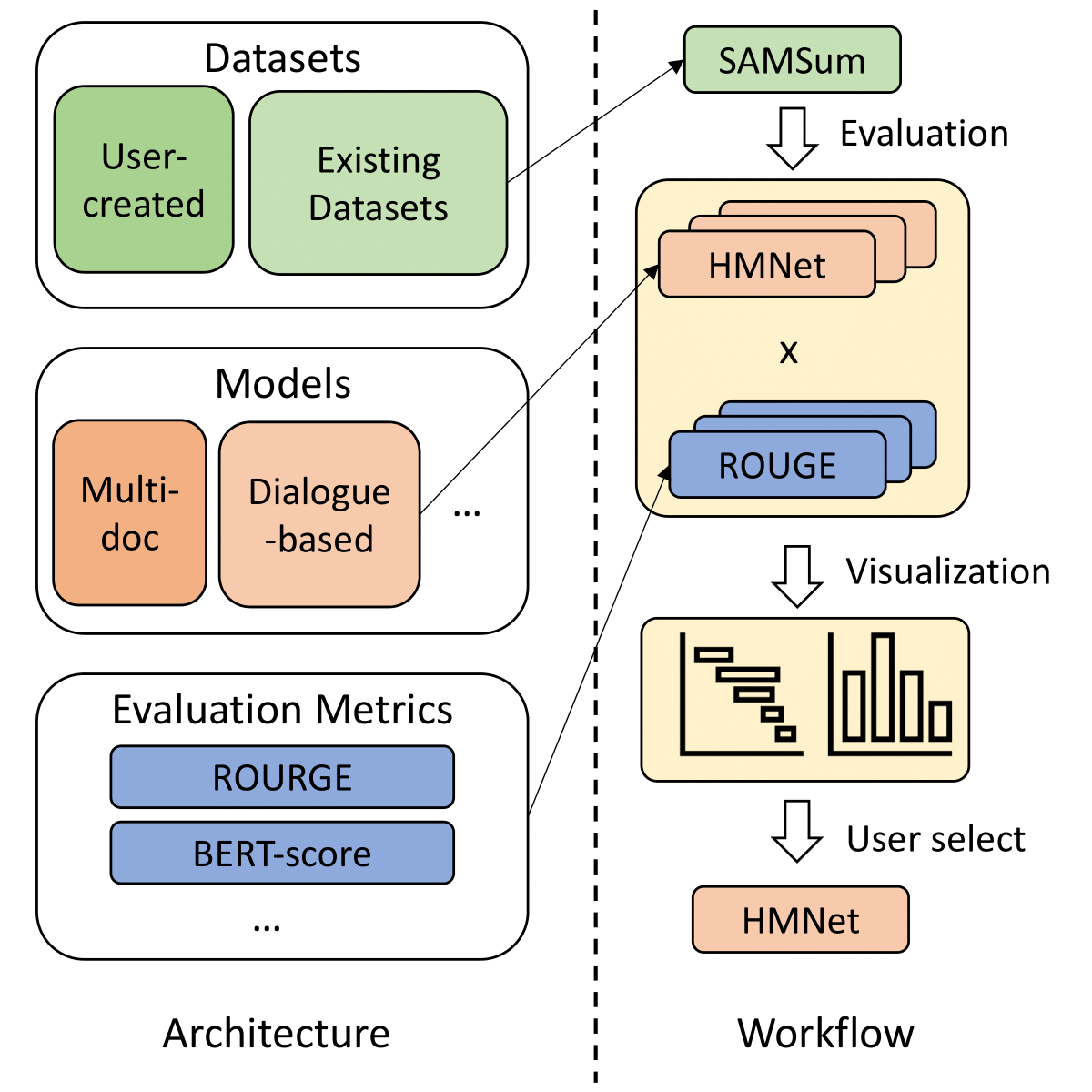

SummerTime - Text Summarization Toolkit for Non-experts

A library to help users choose appropriate summarization tools based on their specific tasks or needs. Includes models, evaluation metrics, and datasets.

Instance Segmentation in 3D Scenes using Semantic Superpoint Tree Networks

SSTNet Instance Segmentation in 3D Scenes using Semantic Superpoint Tree Networks(ICCV2021) by Zhihao Liang, Zhihao Li, Songcen Xu, Mingkui Tan, Kui J

EMNLP 2021 - Frustratingly Simple Pretraining Alternatives to Masked Language Modeling

Frustratingly Simple Pretraining Alternatives to Masked Language Modeling This is the official implementation for "Frustratingly Simple Pretraining Al

PaddleViT: State-of-the-art Visual Transformer and MLP Models for PaddlePaddle 2.0+

PaddlePaddle Vision Transformers State-of-the-art Visual Transformer and MLP Models for PaddlePaddle 🤖 PaddlePaddle Visual Transformers (PaddleViT or

Unofficial implementation of Perceiver IO: A General Architecture for Structured Inputs & Outputs

Perceiver IO Unofficial implementation of Perceiver IO: A General Architecture for Structured Inputs & Outputs Usage import torch from src.perceiver.

The official repository for our paper "The Devil is in the Detail: Simple Tricks Improve Systematic Generalization of Transformers". We significantly improve the systematic generalization of transformer models on a variety of datasets using simple tricks and careful considerations.

Codebase for training transformers on systematic generalization datasets. The official repository for our EMNLP 2021 paper The Devil is in the Detail:

Ongoing research training transformer language models at scale, including: BERT & GPT-2

Megatron (1 and 2) is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA.

(ICCV'21) Official PyTorch implementation of Relational Embedding for Few-Shot Classification

Relational Embedding for Few-Shot Classification (ICCV 2021) Dahyun Kang, Heeseung Kwon, Juhong Min, Minsu Cho [paper], [project hompage] We propose t

Implementation of a Transformer that Ponders, using the scheme from the PonderNet paper

Ponder(ing) Transformer Implementation of a Transformer that learns to adapt the number of computational steps it takes depending on the difficulty of

Code for our ALiBi method for transformer language models.

Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation This repository contains the code and models for our paper Tra

ICCV2021 Oral SA-ConvONet: Sign-Agnostic Optimization of Convolutional Occupancy Networks

Sign-Agnostic Convolutional Occupancy Networks Paper | Supplementary | Video | Teaser Video | Project Page This repository contains the implementation

Implementation of Fast Transformer in Pytorch

Fast Transformer - Pytorch Implementation of Fast Transformer in Pytorch. This only work as an encoder. Yannic video AI Epiphany Install $ pip install

SwinIR: Image Restoration Using Swin Transformer

SwinIR: Image Restoration Using Swin Transformer This repository is the official PyTorch implementation of SwinIR: Image Restoration Using Shifted Win

Code for "On the Effects of Batch and Weight Normalization in Generative Adversarial Networks"

Note: this repo has been discontinued, please check code for newer version of the paper here Weight Normalized GAN Code for the paper "On the Effects

Code used to generate the results appearing in "Train longer, generalize better: closing the generalization gap in large batch training of neural networks"

Train longer, generalize better - Big batch training This is a code repository used to generate the results appearing in "Train longer, generalize bet

Pytorch implementation of "A simple neural network module for relational reasoning" (Relational Networks)

Pytorch implementation of Relational Networks - A simple neural network module for relational reasoning Implemented & tested on Sort-of-CLEVR task. So

Principled Detection of Out-of-Distribution Examples in Neural Networks

ODIN: Out-of-Distribution Detector for Neural Networks This is a PyTorch implementation for detecting out-of-distribution examples in neural networks.

Accelerate Neural Net Training by Progressively Freezing Layers

FreezeOut A simple technique to accelerate neural net training by progressively freezing layers. This repository contains code for the extended abstra

Pytorch implementation of "Forward Thinking: Building and Training Neural Networks One Layer at a Time"

forward-thinking-pytorch Pytorch implementation of Forward Thinking: Building and Training Neural Networks One Layer at a Time Requirements Python 2.7

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

PyTorch Implementation of [1611.06440] Pruning Convolutional Neural Networks for Resource Efficient Inference

PyTorch implementation of [1611.06440 Pruning Convolutional Neural Networks for Resource Efficient Inference] This demonstrates pruning a VGG16 based

Temporal Segment Networks (TSN) in PyTorch

TSN-Pytorch We have released MMAction, a full-fledged action understanding toolbox based on PyTorch. It includes implementation for TSN as well as oth

Oriented Response Networks, in CVPR 2017

Oriented Response Networks [Home] [Project] [Paper] [Supp] [Poster] Torch Implementation The torch branch contains: the official torch implementation

This is the offical website for paper ''Category-consistent deep network learning for accurate vehicle logo recognition''

The Pytorch Implementation of Category-consistent deep network learning for accurate vehicle logo recognition This is the offical website for paper ''

Implementation of Fast Transformer in Pytorch

Fast Transformer - Pytorch Implementation of Fast Transformer in Pytorch. This only work as an encoder. Yannic video AI Epiphany Install $ pip install

Implementation of a Transformer that Ponders, using the scheme from the PonderNet paper

Ponder(ing) Transformer Implementation of a Transformer that learns to adapt the number of computational steps it takes depending on the difficulty of

ICCV2021 Oral SA-ConvONet: Sign-Agnostic Optimization of Convolutional Occupancy Networks

Sign-Agnostic Convolutional Occupancy Networks Paper | Supplementary | Video | Teaser Video | Project Page This repository contains the implementation

Code for the ICCV 2021 paper "Pixel Difference Networks for Efficient Edge Detection" (Oral).

Pixel Difference Convolution This repository contains the PyTorch implementation for "Pixel Difference Networks for Efficient Edge Detection" by Zhuo

![[Preprint]](https://github.com/VITA-Group/Deep_GCN_Benchmarking/raw/main/figs/combo.png)

[Preprint] "Bag of Tricks for Training Deeper Graph Neural Networks A Comprehensive Benchmark Study" by Tianlong Chen*, Kaixiong Zhou*, Keyu Duan, Wenqing Zheng, Peihao Wang, Xia Hu, Zhangyang Wang

Bag of Tricks for Training Deeper Graph Neural Networks: A Comprehensive Benchmark Study Codes for [Preprint] Bag of Tricks for Training Deeper Graph

Deep learning for spiking neural networks

A deep learning library for spiking neural networks. Norse aims to exploit the advantages of bio-inspired neural components, which are sparse and even

![[TIP 2021] SADRNet: Self-Aligned Dual Face Regression Networks for Robust 3D Dense Face Alignment and Reconstruction](https://github.com/MCG-NJU/SADRNet/raw/main/data/output/30904b202ac883bc14e59c1225c9316c.gif)

[TIP 2021] SADRNet: Self-Aligned Dual Face Regression Networks for Robust 3D Dense Face Alignment and Reconstruction

SADRNet Paper link: SADRNet: Self-Aligned Dual Face Regression Networks for Robust 3D Dense Face Alignment and Reconstruction Requirements python

Official code for paper "Demystifying Local Vision Transformer: Sparse Connectivity, Weight Sharing, and Dynamic Weight"

Demysitifing Local Vision Transformer, arxiv This is the official PyTorch implementation of our paper. We simply replace local self attention by (dyna

Official code for "Focal Self-attention for Local-Global Interactions in Vision Transformers"

Focal Transformer This is the official implementation of our Focal Transformer -- "Focal Self-attention for Local-Global Interactions in Vision Transf

A PyTorch implementation of "Multi-Scale Contrastive Siamese Networks for Self-Supervised Graph Representation Learning", IJCAI-21

MERIT A PyTorch implementation of our IJCAI-21 paper Multi-Scale Contrastive Siamese Networks for Self-Supervised Graph Representation Learning. Depen

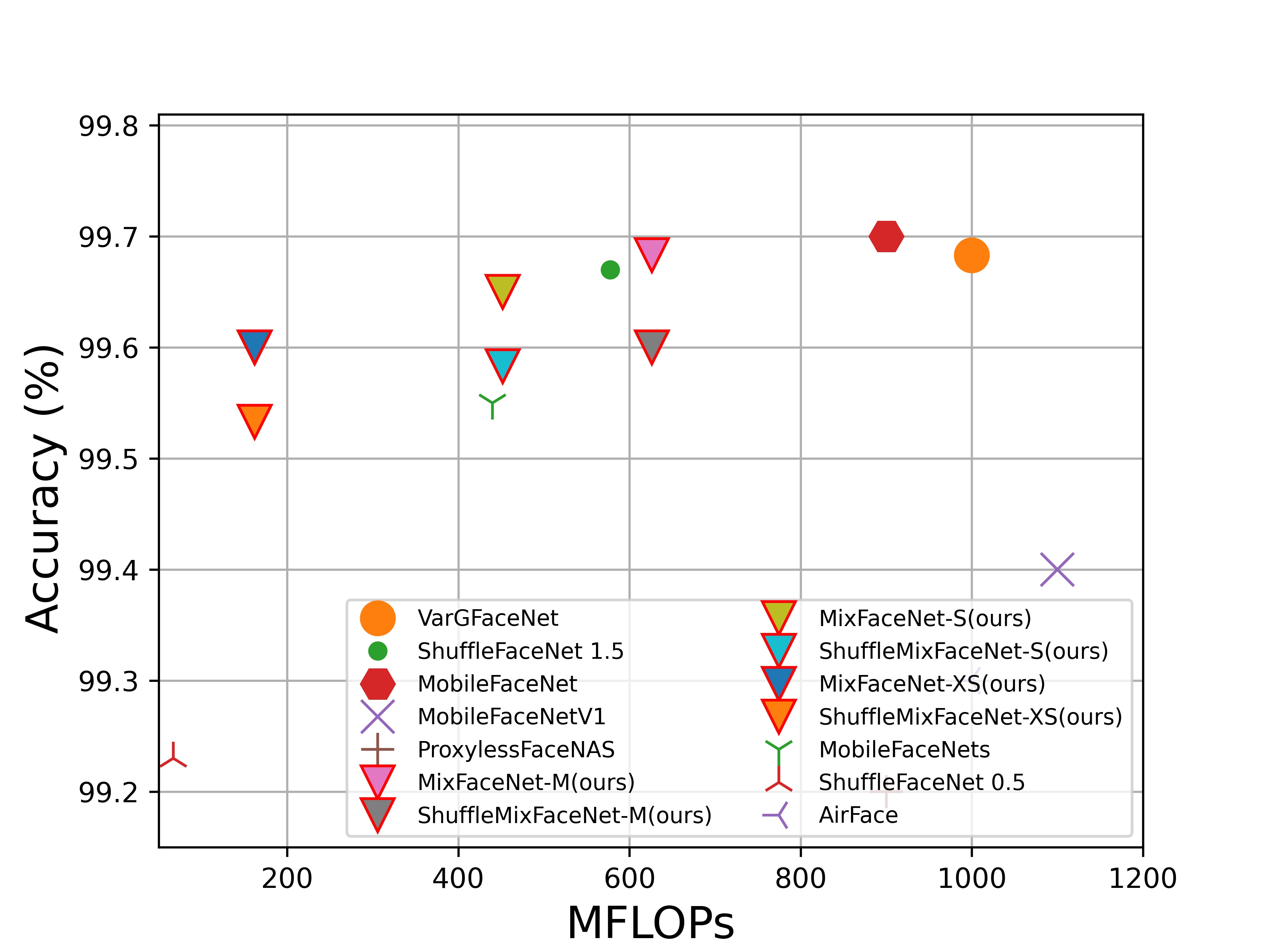

Official repository for MixFaceNets: Extremely Efficient Face Recognition Networks

MixFaceNets This is the official repository of the paper: MixFaceNets: Extremely Efficient Face Recognition Networks. (Accepted in IJCB2021) https://i

PyTorch version of the paper 'Enhanced Deep Residual Networks for Single Image Super-Resolution' (CVPRW 2017)

About PyTorch 1.2.0 Now the master branch supports PyTorch 1.2.0 by default. Due to the serious version problem (especially torch.utils.data.dataloade

yolox_backbone is a deep-learning library and is a collection of YOLOX Backbone models.

YOLOX-Backbone yolox-backbone is a deep-learning library and is a collection of YOLOX backbone models. Install pip install yolox-backbone Load a Pret

An implementation of paper `Real-time Convolutional Neural Networks for Emotion and Gender Classification` with PaddlePaddle.

简介 通过PaddlePaddle框架复现了论文 Real-time Convolutional Neural Networks for Emotion and Gender Classification 中提出的两个模型,分别是SimpleCNN和MiniXception。利用 imdb_crop

Transformer model implemented with Pytorch

transformer-pytorch Transformer model implemented with Pytorch Attention is all you need-[Paper] Architecture Self-Attention self_attention.py class

QTool: A Low-bit Quantization Toolbox for Deep Neural Networks in Computer Vision

This project provides abundant choices of quantization strategies (such as the quantization algorithms, training schedules and empirical tricks) for quantizing the deep neural networks into low-bit counterparts.

Extract knowledge from raw text

Extract knowledge from raw text This repository is a nearly copy-paste of "From Text to Knowledge: The Information Extraction Pipeline" with some cosm

PyTorch code for our ECCV 2018 paper "Image Super-Resolution Using Very Deep Residual Channel Attention Networks"

PyTorch code for our ECCV 2018 paper "Image Super-Resolution Using Very Deep Residual Channel Attention Networks"

Guesslang detects the programming language of a given source code

Detect the programming language of a source code

pix2tex: Using a ViT to convert images of equations into LaTeX code.

The goal of this project is to create a learning based system that takes an image of a math formula and returns corresponding LaTeX code.

Library for implementing reservoir computing models (echo state networks) for multivariate time series classification and clustering.

Framework overview This library allows to quickly implement different architectures based on Reservoir Computing (the family of approaches popularized

Official implementation of the ICCV 2021 paper "Conditional DETR for Fast Training Convergence".

The DETR approach applies the transformer encoder and decoder architecture to object detection and achieves promising performance. In this paper, we handle the critical issue, slow training convergence, and present a conditional cross-attention mechanism for fast DETR training. Our approach is motivated by that the cross-attention in DETR relies highly on the content embeddings and that the spatial embeddings make minor contributions, increasing the need for high-quality content embeddings and thus increasing the training difficulty.

The official code for paper "R2D2: Recursive Transformer based on Differentiable Tree for Interpretable Hierarchical Language Modeling".

R2D2 This is the official code for paper titled "R2D2: Recursive Transformer based on Differentiable Tree for Interpretable Hierarchical Language Mode

Generative Flow Networks

Flow Network based Generative Models for Non-Iterative Diverse Candidate Generation Implementation for our paper, submitted to NeurIPS 2021 (also chec

Official PyTorch implementation of the paper: Improving Graph Neural Network Expressivity via Subgraph Isomorphism Counting.

Improving Graph Neural Network Expressivity via Subgraph Isomorphism Counting Official PyTorch implementation of the paper: Improving Graph Neural Net

This is an official implementation of the High-Resolution Transformer for Dense Prediction.

High-Resolution Transformer for Dense Prediction Introduction This is the official implementation of High-Resolution Transformer (HRT). We present a H

Official PaddlePaddle implementation of Paint Transformer

Paint Transformer: Feed Forward Neural Painting with Stroke Prediction [Paper] [Paddle Implementation] Update We have optimized the serial inference p

Vision-Language Transformer and Query Generation for Referring Segmentation (ICCV 2021)

Vision-Language Transformer and Query Generation for Referring Segmentation Please consider citing our paper in your publications if the project helps

LONG-TERM SERIES FORECASTING WITH QUERYSELECTOR – EFFICIENT MODEL OF SPARSEATTENTION

Query Selector Here you can find code and data loaders for the paper https://arxiv.org/pdf/2107.08687v1.pdf . Query Selector is a novel approach to sp

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

Code for EmBERT, a transformer model for embodied, language-guided visual task completion.

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

Ἀνατομή is a PyTorch library to analyze representation of neural networks

Ἀνατομή is a PyTorch library to analyze representation of neural networks

10th place solution for Google Smartphone Decimeter Challenge at kaggle.

Under refactoring 10th place solution for Google Smartphone Decimeter Challenge at kaggle. Google Smartphone Decimeter Challenge Global Navigation Sat

Learn meanings behind words is a key element in NLP. This project concentrates on the disambiguation of preposition senses. Therefore, we train a bert-transformer model and surpass the state-of-the-art.

New State-of-the-Art in Preposition Sense Disambiguation Supervisor: Prof. Dr. Alexander Mehler Alexander Henlein Institutions: Goethe University TTLa

Pytorch implementation for reproducing StackGAN_v2 results in the paper StackGAN++: Realistic Image Synthesis with Stacked Generative Adversarial Networks

StackGAN-v2 StackGAN-v1: Tensorflow implementation StackGAN-v1: Pytorch implementation Inception score evaluation Pytorch implementation for reproduci