1356 Repositories

Python vision-transformers Libraries

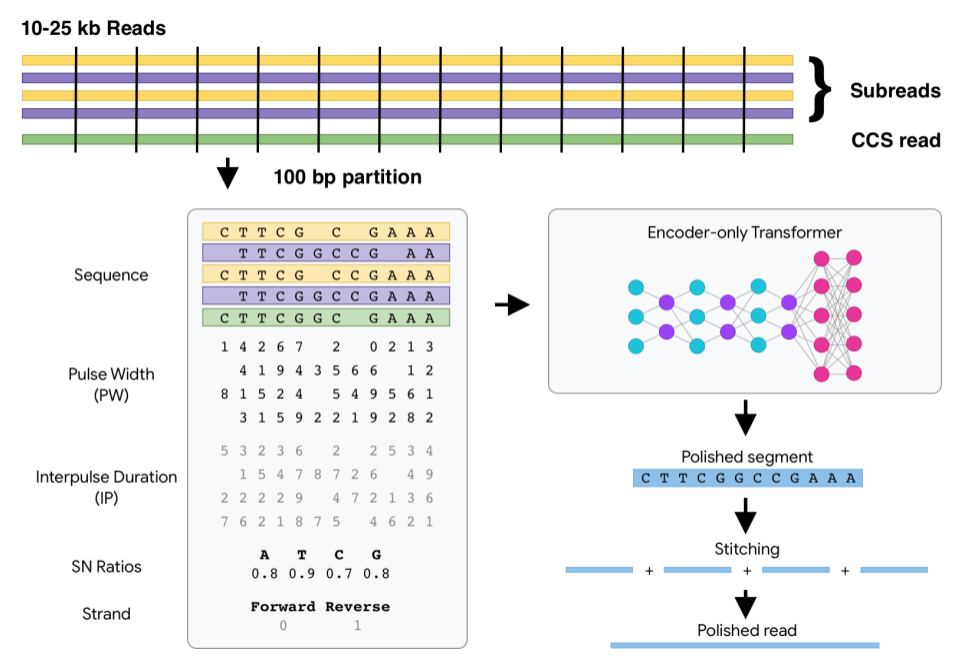

DeepConsensus uses gap-aware sequence transformers to correct errors in Pacific Biosciences (PacBio) Circular Consensus Sequencing (CCS) data.

DeepConsensus DeepConsensus uses gap-aware sequence transformers to correct errors in Pacific Biosciences (PacBio) Circular Consensus Sequencing (CCS)

![[ICCV 2021 Oral] NerfingMVS: Guided Optimization of Neural Radiance Fields for Indoor Multi-view Stereo](https://github.com/weiyithu/NerfingMVS/raw/main/imgs/demo.gif)

[ICCV 2021 Oral] NerfingMVS: Guided Optimization of Neural Radiance Fields for Indoor Multi-view Stereo

NerfingMVS Project Page | Paper | Video | Data NerfingMVS: Guided Optimization of Neural Radiance Fields for Indoor Multi-view Stereo Yi Wei, Shaohui

![[ICCV21] Self-Calibrating Neural Radiance Fields](https://github.com/POSTECH-CVLab/SCNeRF/raw/master/assets/scnerf_teaser.png)

[ICCV21] Self-Calibrating Neural Radiance Fields

Self-Calibrating Neural Radiance Fields, ICCV, 2021 Project Page | Paper | Video Author Information Yoonwoo Jeong [Google Scholar] Seokjun Ahn [Google

Code for Blind Image Decomposition (BID) and Blind Image Decomposition network (BIDeN).

arXiv, porject page, paper Blind Image Decomposition (BID) Blind Image Decomposition is a novel task. The task requires separating a superimposed imag

PaddleViT: State-of-the-art Visual Transformer and MLP Models for PaddlePaddle 2.0+

PaddlePaddle Vision Transformers State-of-the-art Visual Transformer and MLP Models for PaddlePaddle 🤖 PaddlePaddle Visual Transformers (PaddleViT or

The official repository for our paper "The Devil is in the Detail: Simple Tricks Improve Systematic Generalization of Transformers". We significantly improve the systematic generalization of transformer models on a variety of datasets using simple tricks and careful considerations.

Codebase for training transformers on systematic generalization datasets. The official repository for our EMNLP 2021 paper The Devil is in the Detail:

The Official Implementation of the ICCV-2021 Paper: Semantically Coherent Out-of-Distribution Detection.

SCOOD-UDG (ICCV 2021) This repository is the official implementation of the paper: Semantically Coherent Out-of-Distribution Detection Jingkang Yang,

Deep Unsupervised 3D SfM Face Reconstruction Based on Massive Landmark Bundle Adjustment.

(ACMMM 2021 Oral) SfM Face Reconstruction Based on Massive Landmark Bundle Adjustment This repository shows two tasks: Face landmark detection and Fac

(ICCV'21) Official PyTorch implementation of Relational Embedding for Few-Shot Classification

Relational Embedding for Few-Shot Classification (ICCV 2021) Dahyun Kang, Heeseung Kwon, Juhong Min, Minsu Cho [paper], [project hompage] We propose t

The source code of CVPR 2019 paper "Deep Exemplar-based Video Colorization".

Deep Exemplar-based Video Colorization (Pytorch Implementation) Paper | Pretrained Model | Youtube video 🔥 | Colab demo Deep Exemplar-based Video Col

Implementation of a Transformer that Ponders, using the scheme from the PonderNet paper

Ponder(ing) Transformer Implementation of a Transformer that learns to adapt the number of computational steps it takes depending on the difficulty of

LLVIP: A Visible-infrared Paired Dataset for Low-light Vision

LLVIP: A Visible-infrared Paired Dataset for Low-light Vision Project | Arxiv | Abstract It is very challenging for various visual tasks such as image

CrossNorm and SelfNorm for Generalization under Distribution Shifts (ICCV 2021)

CrossNorm (CN) and SelfNorm (SN) (Accepted at ICCV 2021) This is the official PyTorch implementation of our CNSN paper, in which we propose CrossNorm

Implementation of Fast Transformer in Pytorch

Fast Transformer - Pytorch Implementation of Fast Transformer in Pytorch. This only work as an encoder. Yannic video AI Epiphany Install $ pip install

SwinIR: Image Restoration Using Swin Transformer

SwinIR: Image Restoration Using Swin Transformer This repository is the official PyTorch implementation of SwinIR: Image Restoration Using Shifted Win

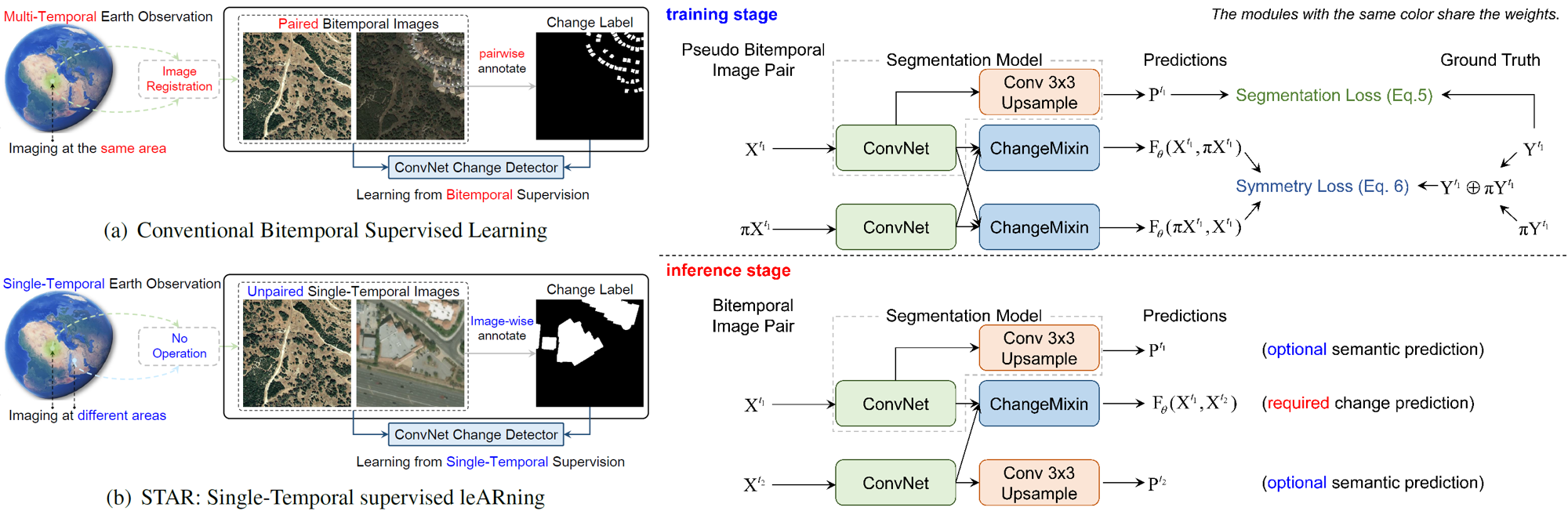

Change is Everywhere: Single-Temporal Supervised Object Change Detection in Remote Sensing Imagery (ICCV 2021)

Change is Everywhere Single-Temporal Supervised Object Change Detection in Remote Sensing Imagery by Zhuo Zheng, Ailong Ma, Liangpei Zhang and Yanfei

CrossNorm and SelfNorm for Generalization under Distribution Shifts (ICCV 2021)

CrossNorm (CN) and SelfNorm (SN) (Accepted at ICCV 2021) This is the official PyTorch implementation of our CNSN paper, in which we propose CrossNorm

Robust Video Matting in PyTorch, TensorFlow, TensorFlow.js, ONNX, CoreML!

Robust Video Matting in PyTorch, TensorFlow, TensorFlow.js, ONNX, CoreML!

Neural Message Passing for Computer Vision

Neural Message Passing for Quantum Chemistry Implementation of different models of Neural Networks on graphs as explained in the article proposed by G

Many Class Activation Map methods implemented in Pytorch for CNNs and Vision Transformers. Including Grad-CAM, Grad-CAM++, Score-CAM, Ablation-CAM and XGrad-CAM

Class Activation Map methods implemented in Pytorch pip install grad-cam ⭐ Tested on many Common CNN Networks and Vision Transformers. ⭐ Includes smoo

Implementation of Fast Transformer in Pytorch

Fast Transformer - Pytorch Implementation of Fast Transformer in Pytorch. This only work as an encoder. Yannic video AI Epiphany Install $ pip install

Implementation of a Transformer that Ponders, using the scheme from the PonderNet paper

Ponder(ing) Transformer Implementation of a Transformer that learns to adapt the number of computational steps it takes depending on the difficulty of

Deep Unsupervised 3D SfM Face Reconstruction Based on Massive Landmark Bundle Adjustment.

(ACMMM 2021 Oral) SfM Face Reconstruction Based on Massive Landmark Bundle Adjustment This repository shows two tasks: Face landmark detection and Fac

rclip - AI-Powered Command-Line Photo Search Tool

rclip is a command-line photo search tool based on the awesome OpenAI's CLIP neural network.

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics.

Simple tool/toolkit for evaluating NLG (Natural Language Generation) offering various automated metrics. Jury offers a smooth and easy-to-use interface. It uses datasets for underlying metric computation, and hence adding custom metric is easy as adopting datasets.Metric.

![SOTR: Segmenting Objects with Transformers [ICCV 2021]](https://github.com/easton-cau/SOTR/raw/main/images/overview.png)

SOTR: Segmenting Objects with Transformers [ICCV 2021]

SOTR: Segmenting Objects with Transformers [ICCV 2021] By Ruohao Guo, Dantong Niu, Liao Qu, Zhenbo Li Introduction This is the official implementation

![[ICCV 2021 Oral] PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers](https://github.com/yuxumin/PoinTr/raw/master/fig/pointr.gif)

[ICCV 2021 Oral] PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers

PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers Created by Xumin Yu*, Yongming Rao*, Ziyi Wang, Zuyan Liu, Jiwen Lu, Jie Zhou

Image transformations designed for Scene Text Recognition (STR) data augmentation. Published at ICCV 2021 Workshop on Interactive Labeling and Data Augmentation for Vision.

Data Augmentation for Scene Text Recognition (ICCV 2021 Workshop) (Pronounced as "strog") Paper Arxiv Why it matters? Scene Text Recognition (STR) req

Official code for paper "Demystifying Local Vision Transformer: Sparse Connectivity, Weight Sharing, and Dynamic Weight"

Demysitifing Local Vision Transformer, arxiv This is the official PyTorch implementation of our paper. We simply replace local self attention by (dyna

Official code for "Focal Self-attention for Local-Global Interactions in Vision Transformers"

Focal Transformer This is the official implementation of our Focal Transformer -- "Focal Self-attention for Local-Global Interactions in Vision Transf

Exploring Classification Equilibrium in Long-Tailed Object Detection, ICCV2021

Exploring Classification Equilibrium in Long-Tailed Object Detection (LOCE, ICCV 2021) Paper Introduction The conventional detectors tend to make imba

X-modaler is a versatile and high-performance codebase for cross-modal analytics.

X-modaler X-modaler is a versatile and high-performance codebase for cross-modal analytics. This codebase unifies comprehensive high-quality modules i

TOOD: Task-aligned One-stage Object Detection, ICCV2021 Oral

One-stage object detection is commonly implemented by optimizing two sub-tasks: object classification and localization, using heads with two parallel branches, which might lead to a certain level of spatial misalignment in predictions between the two tasks.

3D ResNets for Action Recognition (CVPR 2018)

3D ResNets for Action Recognition Update (2020/4/13) We published a paper on arXiv. Hirokatsu Kataoka, Tenga Wakamiya, Kensho Hara, and Yutaka Satoh,

Fog Simulation on Real LiDAR Point Clouds for 3D Object Detection in Adverse Weather

LiDAR fog simulation Created by Martin Hahner at the Computer Vision Lab of ETH Zurich. This is the official code release of the paper Fog Simulation

QTool: A Low-bit Quantization Toolbox for Deep Neural Networks in Computer Vision

This project provides abundant choices of quantization strategies (such as the quantization algorithms, training schedules and empirical tricks) for quantizing the deep neural networks into low-bit counterparts.

Composed Image Retrieval using Pretrained LANguage Transformers (CIRPLANT)

CIRPLANT This repository contains the code and pre-trained models for Composed Image Retrieval using Pretrained LANguage Transformers (CIRPLANT) For d

pix2tex: Using a ViT to convert images of equations into LaTeX code.

The goal of this project is to create a learning based system that takes an image of a math formula and returns corresponding LaTeX code.

Towards Interpretable Deep Metric Learning with Structural Matching

DIML Created by Wenliang Zhao*, Yongming Rao*, Ziyi Wang, Jiwen Lu, Jie Zhou This repository contains PyTorch implementation for paper Towards Interpr

Towers of Babel: Combining Images, Language, and 3D Geometry for Learning Multimodal Vision. ICCV 2021.

Towers of Babel: Combining Images, Language, and 3D Geometry for Learning Multimodal Vision Download links and PyTorch implementation of "Towers of Ba

This is an official implementation of the High-Resolution Transformer for Dense Prediction.

High-Resolution Transformer for Dense Prediction Introduction This is the official implementation of High-Resolution Transformer (HRT). We present a H

An organized collection of tutorials and projects created for aspriring computer vision students.

A repository created with the purpose of teaching students in BME lab 308A- Hanoi University of Science and Technology

In this project we investigate the performance of the SetCon model on realistic video footage. Therefore, we implemented the model in PyTorch and tested the model on two example videos.

Contrastive Learning of Object Representations Supervisor: Prof. Dr. Gemma Roig Institutions: Goethe University CVAI - Computational Vision & Artifici

FastReID is a research platform that implements state-of-the-art re-identification algorithms.

FastReID is a research platform that implements state-of-the-art re-identification algorithms.

Vision-Language Transformer and Query Generation for Referring Segmentation (ICCV 2021)

Vision-Language Transformer and Query Generation for Referring Segmentation Please consider citing our paper in your publications if the project helps

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

10th place solution for Google Smartphone Decimeter Challenge at kaggle.

Under refactoring 10th place solution for Google Smartphone Decimeter Challenge at kaggle. Google Smartphone Decimeter Challenge Global Navigation Sat

Learn meanings behind words is a key element in NLP. This project concentrates on the disambiguation of preposition senses. Therefore, we train a bert-transformer model and surpass the state-of-the-art.

New State-of-the-Art in Preposition Sense Disambiguation Supervisor: Prof. Dr. Alexander Mehler Alexander Henlein Institutions: Goethe University TTLa

Refactoring dalle-pytorch and taming-transformers for TPU VM

Text-to-Image Translation (DALL-E) for TPU in Pytorch Refactoring Taming Transformers and DALLE-pytorch for TPU VM with Pytorch Lightning Requirements

Learning and Building Convolutional Neural Networks using PyTorch

Image Classification Using Deep Learning Learning and Building Convolutional Neural Networks using PyTorch. Models, selected are based on number of ci

Extreme Rotation Estimation using Dense Correlation Volumes

Extreme Rotation Estimation using Dense Correlation Volumes This repository contains a PyTorch implementation of the paper: Extreme Rotation Estimatio

We evaluate our method on different datasets (including ShapeNet, CUB-200-2011, and Pascal3D+) and achieve state-of-the-art results, outperforming all the other supervised and unsupervised methods and 3D representations, all in terms of performance, accuracy, and training time.

An Effective Loss Function for Generating 3D Models from Single 2D Image without Rendering Papers with code | Paper Nikola Zubić Pietro Lio University

Vision Transformer and MLP-Mixer Architectures

Vision Transformer and MLP-Mixer Architectures Update (2.7.2021): Added the "When Vision Transformers Outperform ResNets..." paper, and SAM (Sharpness

This is the official implementation for "Do Transformers Really Perform Bad for Graph Representation?".

Graphormer By Chengxuan Ying, Tianle Cai, Shengjie Luo, Shuxin Zheng*, Guolin Ke, Di He*, Yanming Shen and Tie-Yan Liu. This repo is the official impl

Source code for "Progressive Transformers for End-to-End Sign Language Production" (ECCV 2020)

Progressive Transformers for End-to-End Sign Language Production Source code for "Progressive Transformers for End-to-End Sign Language Production" (B

Sign Language Translation with Transformers (COLING'2020, ECCV'20 SLRTP Workshop)

transformer-slt This repository gathers data and code supporting the experiments in the paper Better Sign Language Translation with STMC-Transformer.

Sign Language Transformers (CVPR'20)

Sign Language Transformers (CVPR'20) This repo contains the training and evaluation code for the paper Sign Language Transformers: Sign Language Trans

This is an official implementation for "Self-Supervised Learning with Swin Transformers".

Self-Supervised Learning with Vision Transformers By Zhenda Xie*, Yutong Lin*, Zhuliang Yao, Zheng Zhang, Qi Dai, Yue Cao and Han Hu This repo is the

Code for the paper "VisualBERT: A Simple and Performant Baseline for Vision and Language"

This repository contains code for the following two papers: VisualBERT: A Simple and Performant Baseline for Vision and Language (arxiv) with a short

Multi Task Vision and Language

12-in-1: Multi-Task Vision and Language Representation Learning Please cite the following if you use this code. Code and pre-trained models for 12-in-

(CVPR2021) Kaleido-BERT: Vision-Language Pre-training on Fashion Domain

Kaleido-BERT: Vision-Language Pre-training on Fashion Domain Mingchen Zhuge*, Dehong Gao*, Deng-Ping Fan#, Linbo Jin, Ben Chen, Haoming Zhou, Minghui

Text-to-Image generation

Generate vivid Images for Any (Chinese) text CogView is a pretrained (4B-param) transformer for text-to-image generation in general domain. Read our p

DeLighT: Very Deep and Light-Weight Transformers

DeLighT: Very Deep and Light-weight Transformers This repository contains the source code of our work on building efficient sequence models: DeFINE (I

Exploring Image Deblurring via Blur Kernel Space (CVPR'21)

Exploring Image Deblurring via Encoded Blur Kernel Space About the project We introduce a method to encode the blur operators of an arbitrary dataset

Official implementation of the paper Vision Transformer with Progressive Sampling, ICCV 2021.

Vision Transformer with Progressive Sampling This is the official implementation of the paper Vision Transformer with Progressive Sampling, ICCV 2021.

Code for "Finetuning Pretrained Transformers into Variational Autoencoders"

transformers-into-vaes Code for Finetuning Pretrained Transformers into Variational Autoencoders (our submission to NLP Insights Workshop 2021). Gathe

Code for generating a single image pretraining dataset

Single Image Pretraining of Visual Representations As shown in the paper A critical analysis of self-supervision, or what we can learn from a single i

Zero-Shot Text-to-Image Generation VQGAN+CLIP Dockerized

VQGAN-CLIP-Docker About Zero-Shot Text-to-Image Generation VQGAN+CLIP Dockerized This is a stripped and minimal dependency repository for running loca

Official implementation of the paper Visual Parser: Representing Part-whole Hierarchies with Transformers

Visual Parser (ViP) This is the official implementation of the paper Visual Parser: Representing Part-whole Hierarchies with Transformers. Key Feature

Implementation for our ICCV2021 paper: Internal Video Inpainting by Implicit Long-range Propagation

Implicit Internal Video Inpainting Implementation for our ICCV2021 paper: Internal Video Inpainting by Implicit Long-range Propagation paper | project

Video Contrastive Learning with Global Context

Video Contrastive Learning with Global Context (VCLR) This is the official PyTorch implementation of our VCLR paper. Install dependencies environments

Scenic: A Jax Library for Computer Vision and Beyond

Scenic Scenic is a codebase with a focus on research around attention-based models for computer vision. Scenic has been successfully used to develop c

[ICCV, 2021] Cloud Transformers: A Universal Approach To Point Cloud Processing Tasks

Cloud Transformers: A Universal Approach To Point Cloud Processing Tasks This is an official PyTorch code repository of the paper "Cloud Transformers:

Study of human inductive biases in CNNs and Transformers.

Are Convolutional Neural Networks or Transformers more like human vision? This repository contains the code and fine-tuned models of popular Convoluti

FPGA: Fast Patch-Free Global Learning Framework for Fully End-to-End Hyperspectral Image Classification

FPGA & FreeNet Fast Patch-Free Global Learning Framework for Fully End-to-End Hyperspectral Image Classification by Zhuo Zheng, Yanfei Zhong, Ailong M

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

Code for our CVPR 2021 Paper "Rethinking Style Transfer: From Pixels to Parameterized Brushstrokes".

Rethinking Style Transfer: From Pixels to Parameterized Brushstrokes (CVPR 2021) Project page | Paper | Colab | Colab for Drawing App Rethinking Style

Open-World Entity Segmentation

Open-World Entity Segmentation Project Website Lu Qi*, Jason Kuen*, Yi Wang, Jiuxiang Gu, Hengshuang Zhao, Zhe Lin, Philip Torr, Jiaya Jia This projec

Implementation of Multistream Transformers in Pytorch

Multistream Transformers Implementation of Multistream Transformers in Pytorch. This repository deviates slightly from the paper, where instead of usi

Dense Prediction Transformers

Vision Transformers for Dense Prediction This repository contains code and models for our paper: Vision Transformers for Dense Prediction René Ranftl,

An official implementation of the paper Exploring Sequence Feature Alignment for Domain Adaptive Detection Transformers

Sequence Feature Alignment (SFA) By Wen Wang, Yang Cao, Jing Zhang, Fengxiang He, Zheng-jun Zha, Yonggang Wen, and Dacheng Tao This repository is an o

TF2 implementation of knowledge distillation using the "function matching" hypothesis from the paper Knowledge distillation: A good teacher is patient and consistent by Beyer et al.

FunMatch-Distillation TF2 implementation of knowledge distillation using the "function matching" hypothesis from the paper Knowledge distillation: A g

A library for finding knowledge neurons in pretrained transformer models.

knowledge-neurons An open source repository replicating the 2021 paper Knowledge Neurons in Pretrained Transformers by Dai et al., and extending the t

The code for our paper CrossFormer: A Versatile Vision Transformer Based on Cross-scale Attention.

CrossFormer This repository is the code for our paper CrossFormer: A Versatile Vision Transformer Based on Cross-scale Attention. Introduction Existin

This project is a sample demo of Arxiv search related to AI/ML Papers built using Streamlit, sentence-transformers and Faiss.

This project is a sample demo of Arxiv search related to AI/ML Papers built using Streamlit, sentence-transformers and Faiss.

Active learning for text classification in Python

Active Learning allows you to efficiently label training data in a small-data scenario.

meProp: Sparsified Back Propagation for Accelerated Deep Learning

meProp The codes were used for the paper meProp: Sparsified Back Propagation for Accelerated Deep Learning with Reduced Overfitting (ICML 2017) [pdf]

Image Deblurring using Generative Adversarial Networks

DeblurGAN arXiv Paper Version Pytorch implementation of the paper DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks. Our netwo

Synthesizing and manipulating 2048x1024 images with conditional GANs

pix2pixHD Project | Youtube | Paper Pytorch implementation of our method for high-resolution (e.g. 2048x1024) photorealistic image-to-image translatio

PyTorch implementation of the YOLO (You Only Look Once) v2

PyTorch implementation of the YOLO (You Only Look Once) v2 The YOLOv2 is one of the most popular one-stage object detector. This project adopts PyTorc

PyTorch Implementation of Realtime Multi-Person Pose Estimation project.

PyTorch Realtime Multi-Person Pose Estimation This is a pytorch version of Realtime_Multi-Person_Pose_Estimation, origin code is here Realtime_Multi-P

PyTorch implementation of 1712.06087 "Zero-Shot" Super-Resolution using Deep Internal Learning

Unofficial PyTorch implementation of "Zero-Shot" Super-Resolution using Deep Internal Learning Unofficial Implementation of 1712.06087 "Zero-Shot" Sup

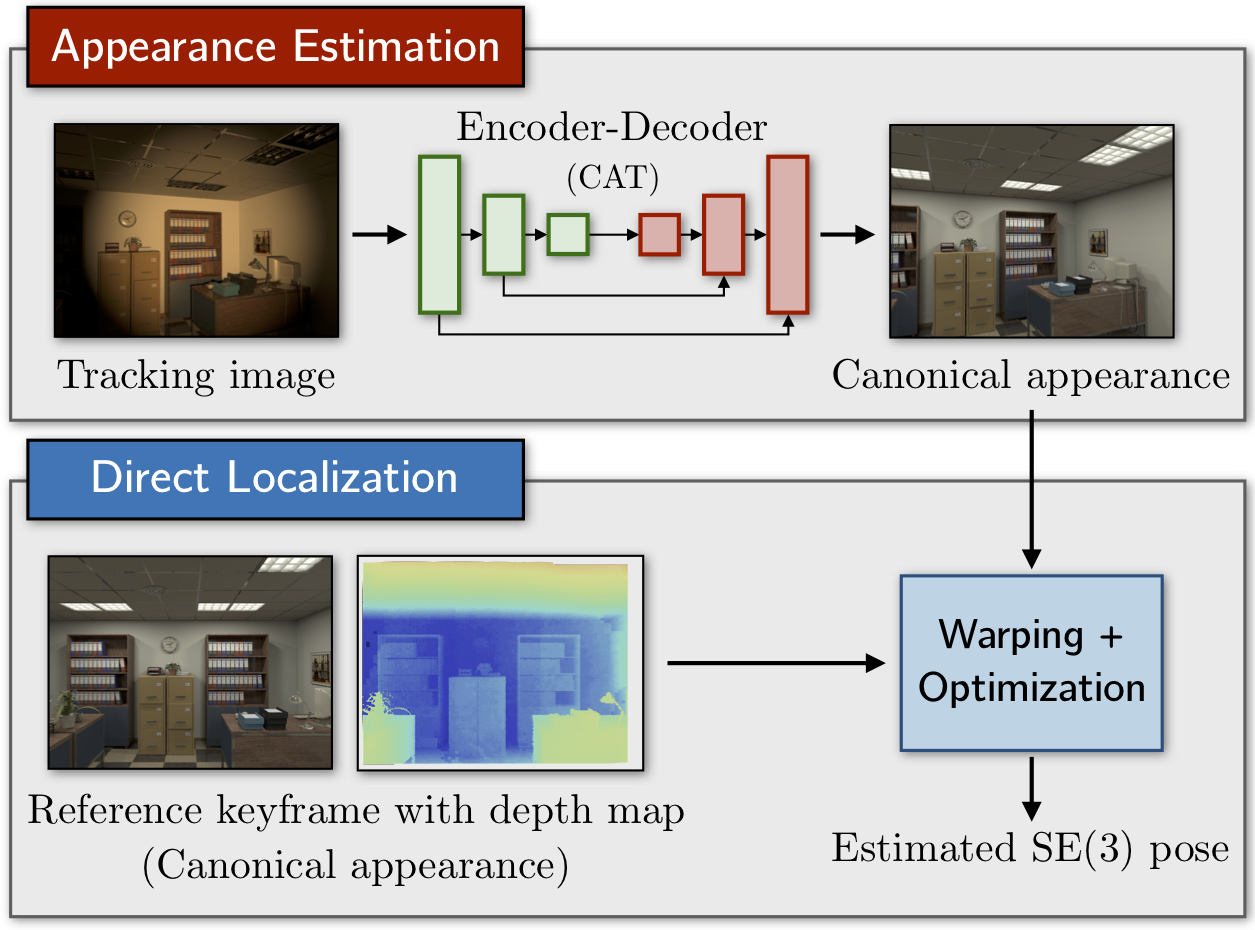

Canonical Appearance Transformations

CAT-Net: Learning Canonical Appearance Transformations Code to accompany our paper "How to Train a CAT: Learning Canonical Appearance Transformations

This's an implementation of deepmind Visual Interaction Networks paper using pytorch

Visual-Interaction-Networks An implementation of Deepmind visual interaction networks in Pytorch. Introduction For the purpose of understanding the ch

A PyTorch Implementation of Neural IMage Assessment

NIMA: Neural IMage Assessment This is a PyTorch implementation of the paper NIMA: Neural IMage Assessment (accepted at IEEE Transactions on Image Proc

NIMA: Neural IMage Assessment

PyTorch NIMA: Neural IMage Assessment PyTorch implementation of Neural IMage Assessment by Hossein Talebi and Peyman Milanfar. You can learn more from

Implementation of Multistream Transformers in Pytorch

Multistream Transformers Implementation of Multistream Transformers in Pytorch. This repository deviates slightly from the paper, where instead of usi

![[ICCV'21] PlaneTR: Structure-Guided Transformers for 3D Plane Recovery](https://github.com/IceTTTb/PlaneTR3D/raw/master/misc/network.jpg)

[ICCV'21] PlaneTR: Structure-Guided Transformers for 3D Plane Recovery

PlaneTR: Structure-Guided Transformers for 3D Plane Recovery This is the official implementation of our ICCV 2021 paper News There maybe some bugs in

Stochastic Downsampling for Cost-Adjustable Inference and Improved Regularization in Convolutional Networks

Stochastic Downsampling for Cost-Adjustable Inference and Improved Regularization in Convolutional Networks (SDPoint) This repository contains the cod

CSWin Transformer: A General Vision Transformer Backbone with Cross-Shaped

CSWin-Transformer This repo is the official implementation of "CSWin Transformer: A General Vision Transformer Backbone with Cross-Shaped Windows". Th