123 Repositories

Python weight-pruning Libraries

ACL'22: Structured Pruning Learns Compact and Accurate Models

☕ CoFiPruning: Structured Pruning Learns Compact and Accurate Models This repository contains the code and pruned models for our ACL'22 paper Structur

Code of paper: "DropAttack: A Masked Weight Adversarial Training Method to Improve Generalization of Neural Networks"

DropAttack: A Masked Weight Adversarial Training Method to Improve Generalization of Neural Networks Abstract: Adversarial training has been proven to

Official pytorch code for "APP: Anytime Progressive Pruning"

APP: Anytime Progressive Pruning Diganta Misra1,2,3, Bharat Runwal2,4, Tianlong Chen5, Zhangyang Wang5, Irina Rish1,3 1 Mila - Quebec AI Institute,2 L

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning

Ensemble Knowledge Guided Sub-network Search and Fine-tuning for Filter Pruning This repository is official Tensorflow implementation of paper: Ensemb

Mini Software that give reminder to drink water as per your weight.

Water Notification Desktop Python The Mini Software built in Python (tkinter) that will remind you to drink water on specific time span based on your

The Unreasonable Effectiveness of Random Pruning: Return of the Most Naive Baseline for Sparse Training

[ICLR 2022] The Unreasonable Effectiveness of Random Pruning: Return of the Most Naive Baseline for Sparse Training The Unreasonable Effectiveness of

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers

SAGE: Sensitivity-guided Adaptive Learning Rate for Transformers This repo contains our codes for the paper "No Parameters Left Behind: Sensitivity Gu

PyTorch Implementation of the SuRP algorithm by the authors of the AISTATS 2022 paper "An Information-Theoretic Justification for Model Pruning"

PyTorch Implementation of the SuRP algorithm by the authors of the AISTATS 2022 paper "An Information-Theoretic Justification for Model Pruning".

Structured Data Gradient Pruning (SDGP)

Structured Data Gradient Pruning (SDGP) Weight pruning is a technique to make Deep Neural Network (DNN) inference more computationally efficient by re

Predicting a person's gender based on their weight and height

Logistic Regression Advanced Case Study Gender Classification: Predicting a person's gender based on their weight and height 1. Introduction We turn o

Docov - Light-weight, recursive docstring coverage analysis for python modules

docov Light-weight, recursive docstring coverage analysis for python modules. Ov

Pytorch Implementation of Auto-Compressing Subset Pruning for Semantic Image Segmentation

Pytorch Implementation of Auto-Compressing Subset Pruning for Semantic Image Segmentation Introduction ACoSP is an online pruning algorithm that compr

This library provides an abstraction to perform Model Versioning using Weight & Biases.

Description This library provides an abstraction to perform Model Versioning using Weight & Biases. Features Version a new trained model Promote a mod

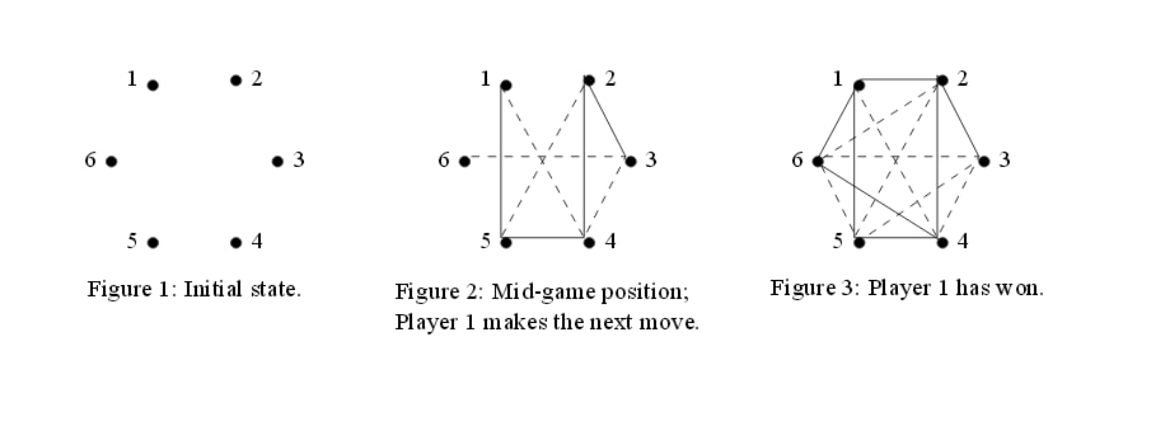

VampiresVsWerewolves - Our Implementation of a MiniMax algorithm with alpha beta pruning in the context of an in-class competition

VampiresVsWerewolves Our Implementation of a MiniMax algorithm with alpha beta pruning in the context of an in-class competition. Our Algorithm finish

Homed - Light-weight, easily configurable, dockerized homepage

homed GitHub Repo Docker Hub homed is a light-weight customizable portal primari

Official code repository of the paper Linear Transformers Are Secretly Fast Weight Programmers.

Linear Transformers Are Secretly Fast Weight Programmers This repository contains the code accompanying the paper Linear Transformers Are Secretly Fas

This repo provides the base code for pytorch-lightning and weight and biases simultaneous integration.

Write your model faster with pytorch-lightning-wadb-code-backbone This repository provides the base code for pytorch-lightning and weight and biases s

Map Matching & Weight Completion service - Java (Springboot) & Python(Flask)

Map Matching service to match coordinates to roads using Java and Springboot. Weight Completion service to fill in missing weights in a graph, using Python and Flask.

K Closest Points and Maximum Clique Pruning for Efficient and Effective 3D Laser Scan Matching (To appear in RA-L 2022)

KCP The official implementation of KCP: k Closest Points and Maximum Clique Pruning for Efficient and Effective 3D Laser Scan Matching, accepted for p

Intel® Neural Compressor is an open-source Python library running on Intel CPUs and GPUs

Intel® Neural Compressor targeting to provide unified APIs for network compression technologies, such as low precision quantization, sparsity, pruning, knowledge distillation, across different deep learning frameworks to pursue optimal inference performance.

Weight estimation in CT by multi atlas techniques

maweight A Python package for multi-atlas based weight estimation for CT images, including segmentation by registration, feature extraction and model

Neural network pruning for finding a sparse computational model for controlling a biological motor task.

MothPruning Scientific Overview Originally inspired by biological nervous systems, deep neural networks (DNNs) are powerful computational tools for mo

Elastic weight consolidation technique for incremental learning.

Overcoming-Catastrophic-forgetting-in-Neural-Networks Elastic weight consolidation technique for incremental learning. About Use this API if you dont

TensorFlow implementation of Elastic Weight Consolidation

Elastic weight consolidation Introduction A TensorFlow implementation of elastic weight consolidation as presented in Overcoming catastrophic forgetti

A PyTorch implementation of the continual learning experiments with deep neural networks

Brain-Inspired Replay A PyTorch implementation of the continual learning experiments with deep neural networks described in the following paper: Brain

RLMeta is a light-weight flexible framework for Distributed Reinforcement Learning Research.

RLMeta rlmeta - a flexible lightweight research framework for Distributed Reinforcement Learning based on PyTorch and moolib Installation To build fro

🔖 Lemnos: A simple, light-weight command-line to-do list manager.

🔖 Lemnos: CLI To-do List Manager This is a simple program that allows one to manage a to-do list via the command-line. Example $ python3 todo.py add

PyTorch implementation of DCT fast weight RNNs

DCT based fast weights This repository contains the official code for the paper: Training and Generating Neural Networks in Compressed Weight Space. T

Discord-Lite - A light weight discord client written in Python, for developers, by developers.

Discord-Lite - A light weight discord client written in Python, for developers, by developers.

An end-to-end image translation model with weight-map for color constancy

CCUnet An end-to-end image translation model with weight-map for color constancy 1. Download the dataset (take Colorchecker_recommended dataset as an

A PyTorch-based model pruning toolkit for pre-trained language models

English | 中文说明 TextPruner是一个为预训练语言模型设计的模型裁剪工具包,通过轻量、快速的裁剪方法对模型进行结构化剪枝,从而实现压缩模型体积、提升模型速度。 其他相关资源: 知识蒸馏工具TextBrewer:https://github.com/airaria/TextBrewe

Pytorch implementation of our paper under review -- 1xN Pattern for Pruning Convolutional Neural Networks

1xN Pattern for Pruning Convolutional Neural Networks (paper) . This is Pytorch re-implementation of "1xN Pattern for Pruning Convolutional Neural Net

The code for the Subformer, from the EMNLP 2021 Findings paper: "Subformer: Exploring Weight Sharing for Parameter Efficiency in Generative Transformers", by Machel Reid, Edison Marrese-Taylor, and Yutaka Matsuo

Subformer This repository contains the code for the Subformer. To help overcome this we propose the Subformer, allowing us to retain performance while

DeLighT: Very Deep and Light-Weight Transformers

DeLighT: Very Deep and Light-weight Transformers This repository contains the source code of our work on building efficient sequence models: DeFINE (I

Reimplementation of NeurIPS'19: "Meta-Weight-Net: Learning an Explicit Mapping For Sample Weighting" by Shu et al.

[Re] Meta-Weight-Net: Learning an Explicit Mapping For Sample Weighting Reimplementation of NeurIPS'19: "Meta-Weight-Net: Learning an Explicit Mapping

i-SpaSP: Structured Neural Pruning via Sparse Signal Recovery

i-SpaSP: Structured Neural Pruning via Sparse Signal Recovery This is a public code repository for the publication: i-SpaSP: Structured Neural Pruning

NeurIPS'19: Meta-Weight-Net: Learning an Explicit Mapping For Sample Weighting (Pytorch implementation for noisy labels).

Meta-Weight-Net NeurIPS'19: Meta-Weight-Net: Learning an Explicit Mapping For Sample Weighting (Official Pytorch implementation for noisy labels). The

SHRIMP: Sparser Random Feature Models via Iterative Magnitude Pruning

SHRIMP: Sparser Random Feature Models via Iterative Magnitude Pruning This repository is the official implementation of "SHRIMP: Sparser Random Featur

A simple, light-weight and highly maintainable online judge system for secondary education

y³OJ a simple, light-weight and highly maintainable online judge system for secondary education 一个简单、轻量化、易于维护的、为中学信息技术学科课业教学设计的 Online Judge 系统。 Onlin

[NeurIPS'21 Spotlight] PyTorch code for our paper "Aligned Structured Sparsity Learning for Efficient Image Super-Resolution"

ASSL This repository is for a new network pruning method (Aligned Structured Sparsity Learning, ASSL) for efficient single image super-resolution (SR)

Inflated i3d network with inception backbone, weights transfered from tensorflow

I3D models transfered from Tensorflow to PyTorch This repo contains several scripts that allow to transfer the weights from the tensorflow implementat

A light weight data augmentation tool for training CNNs and Viola Jones detectors

hey-daug A light weight data augmentation tool for training CNNs and Viola Jones detectors (Haar Cascades). This tool inflates your data by up to six

This is the code for Compressing BERT: Studying the Effects of Weight Pruning on Transfer Learning

This is the code for Compressing BERT: Studying the Effects of Weight Pruning on Transfer Learning It includes /bert, which is the original BERT repos

Implementation of Continuous Sparsification, a method for pruning and ticket search in deep networks

Continuous Sparsification Implementation of Continuous Sparsification (CS), a method based on l_0 regularization to find sparse neural networks, propo

Super Tickets in Pre-Trained Language Models: From Model Compression to Improving Generalization (ACL 2021)

Structured Super Lottery Tickets in BERT This repo contains our codes for the paper "Super Tickets in Pre-Trained Language Models: From Model Compress

Codes for NeurIPS 2021 paper "Adversarial Neuron Pruning Purifies Backdoored Deep Models"

Adversarial Neuron Pruning Purifies Backdoored Deep Models Code for NeurIPS 2021 "Adversarial Neuron Pruning Purifies Backdoored Deep Models" by Dongx

This is the official repository for our paper: ''Pruning Self-attentions into Convolutional Layers in Single Path''.

Pruning Self-attentions into Convolutional Layers in Single Path This is the official repository for our paper: Pruning Self-attentions into Convoluti

This is the official repository for our paper: ''Pruning Self-attentions into Convolutional Layers in Single Path''.

Pruning Self-attentions into Convolutional Layers in Single Path This is the official repository for our paper: Pruning Self-attentions into Convoluti

Delve is a Python package for analyzing the inference dynamics of your PyTorch model.

Delve is a Python package for analyzing the inference dynamics of your PyTorch model.

FasterAI: A library to make smaller and faster models with FastAI.

Fasterai fasterai is a library created to make neural network smaller and faster. It essentially relies on common compression techniques for networks

Select, weight and analyze complex sample data

Sample Analytics In large-scale surveys, often complex random mechanisms are used to select samples. Estimates derived from such samples must reflect

DA2Lite is an automated model compression toolkit for PyTorch.

DA2Lite (Deep Architecture to Lite) is a toolkit to compress and accelerate deep network models. ⭐ Star us on GitHub — it helps!! Frameworks & Librari

🛠️ Tools for Transformers compression using Lightning ⚡

Bert-squeeze is a repository aiming to provide code to reduce the size of Transformer-based models or decrease their latency at inference time.

Iterative Training: Finding Binary Weight Deep Neural Networks with Layer Binarization

Iterative Training: Finding Binary Weight Deep Neural Networks with Layer Binarization This repository contains the source code for the paper (link wi

Convert weight file.pth to weight file.blob

CONVERT YOUR MODEL TO IR FORMAT INSTALLATION OpenVino Toolkit Download openvinotoolkit 2021.3 version : Link Instruction of installation : Link Pytorc

An implementation of Group Fisher Pruning for Practical Network Compression based on pytorch and mmcv

FisherPruning-Pytorch An implementation of Group Fisher Pruning for Practical Network Compression based on pytorch and mmcv Main Functions Pruning f

Hypersearch weight debugging and losses tutorial

tutorial Activate tensorboard option Running TensorBoard remotely When working on a remote server, you can use SSH tunneling to forward the port of th

(ICCV 2021) PyTorch implementation of Paper "Progressive Correspondence Pruning by Consensus Learning"

CLNet (ICCV 2021) PyTorch implementation of Paper "Progressive Correspondence Pruning by Consensus Learning" [project page] [paper] Citing CLNet If yo

RMNet: Equivalently Removing Residual Connection from Networks

RM Operation can equivalently convert ResNet to VGG, which is better for pruning; and can help RepVGG perform better when the depth is large.

RM Operation can equivalently convert ResNet to VGG, which is better for pruning; and can help RepVGG perform better when the depth is large.

RMNet: Equivalently Removing Residual Connection from Networks This repository is the official implementation of "RMNet: Equivalently Removing Residua

A Gomoku game GUI using pygame where the user can choose to play against another player or an AI using minimax with alpha-beta pruning

Gomoku A GUI based Gomoku game using pygame where the user can choose to play against another player or an AI using minimax with alpha-beta pruning. R

Light-weight network, depth estimation, knowledge distillation, real-time depth estimation, auxiliary data.

light-weight-depth-estimation Boosting Light-Weight Depth Estimation Via Knowledge Distillation, https://arxiv.org/abs/2105.06143 Junjie Hu, Chenyou F

YOLOv5 Series Multi-backbone, Pruning and quantization Compression Tool Box.

YOLOv5-Compression Update News Requirements 环境安装 pip install -r requirements.txt Evaluation metric Visdrone Model mAP mAP@50 Parameters(M) GFLOPs FPS@

wger Workout Manager is a free, open source web application that helps you manage your personal workouts, weight and diet plans and can also be used as a simple gym management utility.

wger (ˈvɛɡɐ) Workout Manager is a free, open source web application that helps you manage your personal workouts, weight and diet plans and can also be used as a simple gym management utility.

A light-weight open-source project CLI utility for showing services running on ports in a host

Portable Port Scanner (ppscanner) Portable Port Scanner (ppscanner) is a light-weight open-source CLI utility that leverages on nmap to make quick and

Code base for the paper "Scalable One-Pass Optimisation of High-Dimensional Weight-Update Hyperparameters by Implicit Differentiation"

This repository contains code for the paper Scalable One-Pass Optimisation of High-Dimensional Weight-Update Hyperparameters by Implicit Differentiati

Joint Channel and Weight Pruning for Model Acceleration on Mobile Devices

Joint Channel and Weight Pruning for Model Acceleration on Mobile Devices Abstract For practical deep neural network design on mobile devices, it is e

Hexagon game. Two players: AI and User. Implemented using Alpha-Beta pruning to find optimal solution for agent.

Hexagon game. Two players: AI and User. Implemented using Alpha-Beta pruning to find optimal solution for agent.

Chisel is a light-weight Python WSGI application framework built for creating well-documented, schema-validated JSON web APIs

chisel Chisel is a light-weight Python WSGI application framework built for creating well-documented, schema-validated JSON web APIs. Here are its fea

Unofficial PyTorch implementation of MobileViT based on paper "MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer".

MobileViT RegNet Unofficial PyTorch implementation of MobileViT based on paper MOBILEVIT: LIGHT-WEIGHT, GENERAL-PURPOSE, AND MOBILE-FRIENDLY VISION TR

a lite weight photo editor written in python for day to day photo editing!

GNU-PhotoShop A lite weight Photo editing Program (currently CLI only) written in python3 for day to day photo editing. Disclaimer : Currently we don'

Fast⚡, simple and light💡weight ASGI micro🔬 web🌏-framework for Python🐍.

NanoASGI Asynchronous Python Web Framework NanoASGI is a fast ⚡ , simple and light 💡 weight ASGI micro 🔬 web 🌏 -framework for Python 🐍 . It is dis

![A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]](https://user-images.githubusercontent.com/33194443/104581604-2592cb00-56a2-11eb-9610-5eaa0afb6e1f.png)

A repository that shares tuning results of trained models generated by TensorFlow / Keras. Post-training quantization (Weight Quantization, Integer Quantization, Full Integer Quantization, Float16 Quantization), Quantization-aware training. TensorFlow Lite. OpenVINO. CoreML. TensorFlow.js. TF-TRT. MediaPipe. ONNX. [.tflite,.h5,.pb,saved_model,tfjs,tftrt,mlmodel,.xml/.bin, .onnx]

PINTO_model_zoo Please read the contents of the LICENSE file located directly under each folder before using the model. My model conversion scripts ar

Network Pruning That Matters: A Case Study on Retraining Variants (ICLR 2021)

Network Pruning That Matters: A Case Study on Retraining Variants (ICLR 2021)

A Closer Look at Structured Pruning for Neural Network Compression

A Closer Look at Structured Pruning for Neural Network Compression Code used to reproduce experiments in https://arxiv.org/abs/1810.04622. To prune, w

PyTorch Implementation of [1611.06440] Pruning Convolutional Neural Networks for Resource Efficient Inference

PyTorch implementation of [1611.06440 Pruning Convolutional Neural Networks for Resource Efficient Inference] This demonstrates pruning a VGG16 based

Official implementations of EigenDamage: Structured Pruning in the Kronecker-Factored Eigenbasis.

EigenDamage: Structured Pruning in the Kronecker-Factored Eigenbasis This repo contains the official implementations of EigenDamage: Structured Prunin

Distiller is an open-source Python package for neural network compression research.

Wiki and tutorials | Documentation | Getting Started | Algorithms | Design | FAQ Distiller is an open-source Python package for neural network compres

TensorFlow implementation of "A Simple Baseline for Bayesian Uncertainty in Deep Learning"

TensorFlow implementation of "A Simple Baseline for Bayesian Uncertainty in Deep Learning"

Weight initialization schemes for PyTorch nn.Modules

nninit Weight initialization schemes for PyTorch nn.Modules. This is a port of the popular nninit for Torch7 by @kaixhin. ##Update This repo has been

Channel Pruning for Accelerating Very Deep Neural Networks (ICCV'17)

Channel Pruning for Accelerating Very Deep Neural Networks (ICCV'17)

skimpy is a light weight tool that provides summary statistics about variables in data frames within the console.

skimpy Welcome Welcome to skimpy! skimpy is a light weight tool that provides summary statistics about variables in data frames within the console. Th

code for paper "Not All Unlabeled Data are Equal: Learning to Weight Data in Semi-supervised Learning" by Zhongzheng Ren*, Raymond A. Yeh*, Alexander G. Schwing.

Not All Unlabeled Data are Equal: Learning to Weight Data in Semi-supervised Learning Overview This code is for paper: Not All Unlabeled Data are Equa

Code for "On the Effects of Batch and Weight Normalization in Generative Adversarial Networks"

Note: this repo has been discontinued, please check code for newer version of the paper here Weight Normalized GAN Code for the paper "On the Effects

PyTorch Implementation of [1611.06440] Pruning Convolutional Neural Networks for Resource Efficient Inference

PyTorch implementation of [1611.06440 Pruning Convolutional Neural Networks for Resource Efficient Inference] This demonstrates pruning a VGG16 based

Official code for paper "Demystifying Local Vision Transformer: Sparse Connectivity, Weight Sharing, and Dynamic Weight"

Demysitifing Local Vision Transformer, arxiv This is the official PyTorch implementation of our paper. We simply replace local self attention by (dyna

A Simple, Easy to use and light-weight Pyrogram Userbot

Nexa Userbot A Simple, Easy to use and light-weight Pyrogram Userbot Deploy With Heroku With VPS (Local) Clone Nexa-Userbot repository git clone https

Py_extract is a simple, light-weight python library to handle some extraction tasks using less lines of code

py_extract Py_extract is a simple, light-weight python library to handle some extraction tasks using less lines of code. Still in Development Stage! I

Open source implementation of AceNAS: Learning to Rank Ace Neural Architectures with Weak Supervision of Weight Sharing

AceNAS This repo is the experiment code of AceNAS, and is not considered as an official release. We are working on integrating AceNAS as a built-in st

Official code for "Simpler is Better: Few-shot Semantic Segmentation with Classifier Weight Transformer. ICCV2021".

Simpler is Better: Few-shot Semantic Segmentation with Classifier Weight Transformer. ICCV2021. Introduction We proposed a novel model training paradi

DeLighT: Very Deep and Light-Weight Transformers

DeLighT: Very Deep and Light-weight Transformers This repository contains the source code of our work on building efficient sequence models: DeFINE (I

A Python implementation of the Locality Preserving Matching (LPM) method for pruning outliers in image matching.

LPM_Python A Python implementation of the Locality Preserving Matching (LPM) method for pruning outliers in image matching. The code is established ac

A light-weight image labelling tool for Python designed for creating segmentation data sets.

An image labelling tool for creating segmentation data sets, for Django and Flask.

Learnable Motion Coherence for Correspondence Pruning

Learnable Motion Coherence for Correspondence Pruning Yuan Liu, Lingjie Liu, Cheng Lin, Zhen Dong, Wenping Wang Project Page Any questions or discussi

Code for PackNet: Adding Multiple Tasks to a Single Network by Iterative Pruning

PackNet: https://arxiv.org/abs/1711.05769 Pretrained models are available here: https://uofi.box.com/s/zap2p03tnst9dfisad4u0sfupc0y1fxt Datasets in Py

Learned Token Pruning for Transformers

LTP: Learned Token Pruning for Transformers Check our paper for more details. Installation We follow the same installation procedure as the original H

A Closer Look at Structured Pruning for Neural Network Compression

A Closer Look at Structured Pruning for Neural Network Compression Code used to reproduce experiments in https://arxiv.org/abs/1810.04622. To prune, w

This repo contains the official implementations of EigenDamage: Structured Pruning in the Kronecker-Factored Eigenbasis

EigenDamage: Structured Pruning in the Kronecker-Factored Eigenbasis This repo contains the official implementations of EigenDamage: Structured Prunin

![[Preprint]](https://github.com/VITA-Group/SViTE/raw/main/Figs/res.png)

[Preprint] "Chasing Sparsity in Vision Transformers: An End-to-End Exploration" by Tianlong Chen, Yu Cheng, Zhe Gan, Lu Yuan, Lei Zhang, Zhangyang Wang

Chasing Sparsity in Vision Transformers: An End-to-End Exploration Codes for [Preprint] Chasing Sparsity in Vision Transformers: An End-to-End Explora

Official repository for the paper "Going Beyond Linear Transformers with Recurrent Fast Weight Programmers"

Recurrent Fast Weight Programmers This is the official repository containing the code we used to produce the experimental results reported in the pape