250 Repositories

Python word-embeddings Libraries

LUKE -- Language Understanding with Knowledge-based Embeddings

LUKE (Language Understanding with Knowledge-based Embeddings) is a new pre-trained contextualized representation of words and entities based on transf

Phrase-BERT: Improved Phrase Embeddings from BERT with an Application to Corpus Exploration

Phrase-BERT: Improved Phrase Embeddings from BERT with an Application to Corpus Exploration This is the official repository for the EMNLP 2021 long pa

Code for this paper The Lottery Ticket Hypothesis for Pre-trained BERT Networks.

The Lottery Ticket Hypothesis for Pre-trained BERT Networks Code for this paper The Lottery Ticket Hypothesis for Pre-trained BERT Networks. [NeurIPS

Word and phrase lists in CSV

Word Lists Word and phrase lists in CSV, collected from different sources. Oxford Word Lists: oxford-5k.csv - Oxford 3000 and 5000 oxford-opal.csv - O

Official Implementation of "Learning Disentangled Behavior Embeddings"

DBE: Disentangled-Behavior-Embedding Official implementation of Learning Disentangled Behavior Embeddings (NeurIPS 2021). Environment requirement The

OpenL3: Open-source deep audio and image embeddings

OpenL3 OpenL3 is an open-source Python library for computing deep audio and image embeddings. Please refer to the documentation for detailed instructi

Generate text captions for images from their CLIP embeddings. Includes PyTorch model code and example training script.

clip-text-decoder Generate text captions for images from their CLIP embeddings. Includes PyTorch model code and example training script. Example Predi

Resources related to EMNLP 2021 paper "FAME: Feature-Based Adversarial Meta-Embeddings for Robust Input Representations"

FAME: Feature-based Adversarial Meta-Embeddings This is the companion code for the experiments reported in the paper "FAME: Feature-Based Adversarial

Switch spaces for knowledge graph embeddings

SwisE Switch spaces for knowledge graph embeddings. Requirements: python3 pytorch numpy tqdm Reproduce the results To reproduce the reported results,

Exploring dimension-reduced embeddings

sleepwalk Exploring dimension-reduced embeddings This is the code repository. See here for the Sleepwalk web page. License and disclaimer This program

A fast, efficient universal vector embedding utility package.

Magnitude: a fast, simple vector embedding utility library A feature-packed Python package and vector storage file format for utilizing vector embeddi

Repo for the paper "DiLBERT: Cheap Embeddings for Disease Related Medical NLP"

DiLBERT Repo for the paper "DiLBERT: Cheap Embeddings for Disease Related Medical NLP" Pretrained Model The pretrained model presented in the paper is

Code for the paper "A Simple but Tough-to-Beat Baseline for Sentence Embeddings".

Code for the paper "A Simple but Tough-to-Beat Baseline for Sentence Embeddings".

The best way to convert files on your computer, be it .pdf to .png, .pdf to .docx, .png to .ico, or anything you can imagine.

The best way to convert files on your computer, be it .pdf to .png, .pdf to .docx, .png to .ico, or anything you can imagine.

Official source for spanish Language Models and resources made @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish Language Models 💃🏻 A repository part of the MarIA project. Corpora 📃 Corpora Number of documents Number of tokens Size (GB) BNE 201,080,084

Python script to generate Vale linting rules from word usage guidance in the Red Hat Supplementary Style Guide

ssg-vale-rules-gen Python script to generate Vale linting rules from word usage guidance in the Red Hat Supplementary Style Guide. These rules are use

This is the code used in the paper "Entity Embeddings of Categorical Variables".

This is the code used in the paper "Entity Embeddings of Categorical Variables". If you want to get the original version of the code used for the Kagg

A socket script to obtain chinese phones-sequence for any english word

Foreign Pronunciation Generator (English-Chinese) We provide a simple socket script for acquiring Chinese pronunciation of English words (phones in ai

Text-to-Music Retrieval using Pre-defined/Data-driven Emotion Embeddings

Text2Music Emotion Embedding Text-to-Music Retrieval using Pre-defined/Data-driven Emotion Embeddings Reference Emotion Embedding Spaces for Matching

This Project is based on NLTK It generates a RANDOM WORD from a predefined list of words, From that random word it read out the word, its meaning with parts of speech , its antonyms, its synonyms

This Project is based on NLTK(Natural Language Toolkit) It generates a RANDOM WORD from a predefined list of words, From that random word it read out the word, its meaning with parts of speech , its antonyms, its synonyms

This project uses word frequency and Term Frequency-Inverse Document Frequency to summarize a text.

Text Summarizer This project uses word frequency and Term Frequency-Inverse Document Frequency to summarize a text. Team Members This mini-project was

This repo is the code release of EMNLP 2021 conference paper "Connect-the-Dots: Bridging Semantics between Words and Definitions via Aligning Word Sense Inventories".

Connect-the-Dots: Bridging Semantics between Words and Definitions via Aligning Word Sense Inventories This repo is the code release of EMNLP 2021 con

An open-source online reverse dictionary.

An open-source online reverse dictionary.

Rates how pog a word or user is. Not random and does have *some* kind of algorithm to it.

PogRater :D Rates how pogchamp a word is :D A fun project coded by JBYT27 using Python3 Have you ever wondered how pog a word is? Well, congrats, you

Code repository for EMNLP 2021 paper 'Adversarial Attacks on Knowledge Graph Embeddings via Instance Attribution Methods'

Adversarial Attacks on Knowledge Graph Embeddings via Instance Attribution Methods This is the code repository to accompany the EMNLP 2021 paper on ad

Simple Reddit bot that replies to comments containing a certain word.

reddit-replier-bot Small comment reply bot based on PRAW. This script will scan the comments of a subreddit as they come in and look for a trigger wor

🌸 fastText + Bloom embeddings for compact, full-coverage vectors with spaCy

floret: fastText + Bloom embeddings for compact, full-coverage vectors with spaCy floret is an extended version of fastText that can produce word repr

WORD: Revisiting Organs Segmentation in the Whole Abdominal Region

WORD: Revisiting Organs Segmentation in the Whole Abdominal Region. This repository provides the codebase and dataset for our work WORD: Revisiting Or

Interpretable-contrastive-word-mover-s-embedding

Interpretable-contrastive-word-mover-s-embedding Paper Datasets Here is a Dropbox link to the datasets used in the paper: https://www.dropbox.com/sh/n

gtfs2vec - Learning GTFS Embeddings for comparing PublicTransport Offer in Microregions

gtfs2vec This is a companion repository for a gtfs2vec - Learning GTFS Embeddings for comparing PublicTransport Offer in Microregions publication. Vis

Keywords : Streamlit, BertTokenizer, BertForMaskedLM, Pytorch

Next Word Prediction Keywords : Streamlit, BertTokenizer, BertForMaskedLM, Pytorch 🎬 Project Demo ✔ Application is hosted on Streamlit. You can see t

This code is for a bot which will find a Twitter user's most tweeted word and tweet that word, tagging said user

max_tweeted_word This code is for a bot which will find a Twitter user's most tweeted word and tweet that word, tagging said user The program uses twe

Japanese Long-Unit-Word Tokenizer with RemBertTokenizerFast of Transformers

Japanese-LUW-Tokenizer Japanese Long-Unit-Word (国語研長単位) Tokenizer for Transformers based on 青空文庫 Basic Usage from transformers import RemBertToken

The code of paper ConE: Cone Embeddings for Multi-Hop Reasoning over Knowledge Graphs. Zhanqiu Zhang, Jie Wang, Jiajun Chen, Shuiwang Ji, Feng Wu. NeurIPS 2021.

ConE: Cone Embeddings for Multi-Hop Reasoning over Knowledge Graphs This is the code of paper ConE: Cone Embeddings for Multi-Hop Reasoning over Knowl

A PyTorch implementation of unsupervised SimCSE

A PyTorch implementation of unsupervised SimCSE

ConE: Cone Embeddings for Multi-Hop Reasoning over Knowledge Graphs

ConE: Cone Embeddings for Multi-Hop Reasoning over Knowledge Graphs This is the code of paper ConE: Cone Embeddings for Multi-Hop Reasoning over Knowl

Towhee is a flexible machine learning framework currently focused on computing deep learning embeddings over unstructured data.

Towhee is a flexible machine learning framework currently focused on computing deep learning embeddings over unstructured data.

Count the frequency of letters or words in a text file and show a graph.

Word Counter By EBUS Coding Club Count the frequency of letters or words in a text file and show a graph. Requirements Python 3.9 or higher matplotlib

Language Models for the legal domain in Spanish done @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish legal domain Language Model ⚖️ This repository contains the page for two main resources for the Spanish legal domain: A RoBERTa model: https:/

Bootstrapped Unsupervised Sentence Representation Learning (ACL 2021)

Install first pip3 install -e . Training python3 training/unsupervised_tuning.py python3 training/supervised_tuning.py python3 training/multilingual_

Code for reproducing our paper: LMSOC: An Approach for Socially Sensitive Pretraining

LMSOC: An Approach for Socially Sensitive Pretraining Code for reproducing the paper LMSOC: An Approach for Socially Sensitive Pretraining to appear a

Recognition of 38 speech commands in russian. Based on Yandex Cup 2021 ML Challenge: ASR

Speech_38_ru_commands Recognition of 38 speech commands in russian. Based on Yandex Cup 2021 ML Challenge: ASR Программа умеет распознавать 38 ключевы

A Word Level Transformer layer based on PyTorch and 🤗 Transformers.

Transformer Embedder A Word Level Transformer layer based on PyTorch and 🤗 Transformers. How to use Install the library from PyPI: pip install transf

On-device wake word detection powered by deep learning.

Porcupine Made in Vancouver, Canada by Picovoice Porcupine is a highly-accurate and lightweight wake word engine. It enables building always-listening

Finetuner allows one to tune the weights of any deep neural network for better embeddings on search tasks

Finetuner allows one to tune the weights of any deep neural network for better embeddings on search tasks

Vector AI — A platform for building vector based applications. Encode, query and analyse data using vectors.

Vector AI is a framework designed to make the process of building production grade vector based applications as quickly and easily as possible. Create

EMNLP'2021: SimCSE: Simple Contrastive Learning of Sentence Embeddings

SimCSE: Simple Contrastive Learning of Sentence Embeddings This repository contains the code and pre-trained models for our paper SimCSE: Simple Contr

Code for ICML2019 Paper "Compositional Invariance Constraints for Graph Embeddings"

Dependencies NOTE: This code has been updated, if you were using this repo earlier and experienced issues that was due to an outaded codebase. Please

This repository contains the code for EMNLP-2021 paper "Word-Level Coreference Resolution"

Word-Level Coreference Resolution This is a repository with the code to reproduce the experiments described in the paper of the same name, which was a

Wikipedia WordCloud App generate Wikipedia word cloud art created using python's streamlit, matplotlib, wikipedia and wordcloud packages

Wikipedia WordCloud App Wikipedia WordCloud App generate Wikipedia word cloud art created using python's streamlit, matplotlib, wikipedia and wordclou

Using contrastive learning and OpenAI's CLIP to find good embeddings for images with lossy transformations

Creating Robust Representations from Pre-Trained Image Encoders using Contrastive Learning Sriram Ravula, Georgios Smyrnis This is the code for our pr

BPEmb is a collection of pre-trained subword embeddings in 275 languages, based on Byte-Pair Encoding (BPE) and trained on Wikipedia.

BPEmb is a collection of pre-trained subword embeddings in 275 languages, based on Byte-Pair Encoding (BPE) and trained on Wikipedia. Its intended use is as input for neural models in natural language processing.

An easy-to-use Python module that helps you to extract the BERT embeddings for a large text dataset (Bengali/English) efficiently.

An easy-to-use Python module that helps you to extract the BERT embeddings for a large text dataset (Bengali/English) efficiently.

Telegram Group Management Bot based on phython !!!

How to setup/deploy. For easiest way to deploy this Bot click on the below button Mᴀᴅᴇ Bʏ Sᴜᴘᴘᴏʀᴛ Sᴏᴜʀᴄᴇ Find This Bot on Telegram A modular Telegram

split Word file by chapter

split Word file by chapter we use the mircosoft word api to code this tool api url:https://docs.microsoft.com/zh-cn/dotnet/api/ if this tool is good f

Implementation of Neural Distance Embeddings for Biological Sequences (NeuroSEED) in PyTorch

Neural Distance Embeddings for Biological Sequences Official implementation of Neural Distance Embeddings for Biological Sequences (NeuroSEED) in PyTo

Code for ACL'2021 paper WARP 🌀 Word-level Adversarial ReProgramming

Code for ACL'2021 paper WARP 🌀 Word-level Adversarial ReProgramming. Outperforming `GPT-3` on SuperGLUE Few-Shot text classification.

A Pytorch implementation of "Splitter: Learning Node Representations that Capture Multiple Social Contexts" (WWW 2019).

Splitter ⠀⠀ A PyTorch implementation of Splitter: Learning Node Representations that Capture Multiple Social Contexts (WWW 2019). Abstract Recent inte

PyTorch Language Model for 1-Billion Word (LM1B / GBW) Dataset

PyTorch Large-Scale Language Model A Large-Scale PyTorch Language Model trained on the 1-Billion Word (LM1B) / (GBW) dataset Latest Results 39.98 Perp

A Structured Self-attentive Sentence Embedding

Structured Self-attentive sentence embeddings Implementation for the paper A Structured Self-Attentive Sentence Embedding, which was published in ICLR

Convolutional 2D Knowledge Graph Embeddings resources

ConvE Convolutional 2D Knowledge Graph Embeddings resources. Paper: Convolutional 2D Knowledge Graph Embeddings Used in the paper, but do not use thes

PyTorch implementation of the NIPS-17 paper "Poincaré Embeddings for Learning Hierarchical Representations"

Poincaré Embeddings for Learning Hierarchical Representations PyTorch implementation of Poincaré Embeddings for Learning Hierarchical Representations

Original implementation of the pooling method introduced in "Speaker embeddings by modeling channel-wise correlations"

Speaker-Embeddings-Correlation-Pooling This is the original implementation of the pooling method introduced in "Speaker embeddings by modeling channel

Accurately generate all possible forms of an English word e.g "election" -- "elect", "electoral", "electorate" etc.

Accurately generate all possible forms of an English word Word forms can accurately generate all possible forms of an English word. It can conjugate v

Applying "Load What You Need: Smaller Versions of Multilingual BERT" to LaBSE

smaller-LaBSE LaBSE(Language-agnostic BERT Sentence Embedding) is a very good method to get sentence embeddings across languages. But it is hard to fi

Embeddinghub is a database built for machine learning embeddings.

Embeddinghub is a database built for machine learning embeddings.

🍊 PAUSE (Positive and Annealed Unlabeled Sentence Embedding), accepted by EMNLP'2021 🌴

PAUSE: Positive and Annealed Unlabeled Sentence Embedding Sentence embedding refers to a set of effective and versatile techniques for converting raw

This repository contains the code for EMNLP-2021 paper "Word-Level Coreference Resolution"

Word-Level Coreference Resolution This is a repository with the code to reproduce the experiments described in the paper of the same name, which was a

MWPToolkit is a PyTorch-based toolkit for Math Word Problem (MWP) solving.

MWPToolkit is a PyTorch-based toolkit for Math Word Problem (MWP) solving. It is a comprehensive framework for research purpose that integrates popular MWP benchmark datasets and typical deep learning-based MWP algorithms.

Ptorch NLU, a Chinese text classification and sequence annotation toolkit, supports multi class and multi label classification tasks of Chinese long text and short text, and supports sequence annotation tasks such as Chinese named entity recognition, part of speech tagging and word segmentation.

Pytorch-NLU,一个中文文本分类、序列标注工具包,支持中文长文本、短文本的多类、多标签分类任务,支持中文命名实体识别、词性标注、分词等序列标注任务。 Ptorch NLU, a Chinese text classification and sequence annotation toolkit, supports multi class and multi label classification tasks of Chinese long text and short text, and supports sequence annotation tasks such as Chinese named entity recognition, part of speech tagging and word segmentation.

PyTorch Code for the paper "VSE++: Improving Visual-Semantic Embeddings with Hard Negatives"

Improving Visual-Semantic Embeddings with Hard Negatives Code for the image-caption retrieval methods from VSE++: Improving Visual-Semantic Embeddings

Korean Simple Contrastive Learning of Sentence Embeddings using SKT KoBERT and kakaobrain KorNLU dataset

KoSimCSE Korean Simple Contrastive Learning of Sentence Embeddings implementation using pytorch SimCSE Installation git clone https://github.com/BM-K/

从flomo导出的笔记中生成词云

flomo-word-cloud 从flomo导出的笔记中生成词云 如何使用? 将本项目克隆到你的电脑上,使用如下的命令,安装所需python库 pip install -r requirements.txt 在项目里新建一个file文件夹,把所有从flomo导出的html文件放入其中 运行main

Learning Compatible Embeddings, ICCV 2021

LCE Learning Compatible Embeddings, ICCV 2021 by Qiang Meng, Chixiang Zhang, Xiaoqiang Xu and Feng Zhou Paper: Arxiv We cannot release source codes pu

Large scale embeddings on a single machine.

Marius Marius is a system under active development for training embeddings for large-scale graphs on a single machine. Training on large scale graphs

Versatile Generative Language Model

Versatile Generative Language Model This is the implementation of the paper: Exploring Versatile Generative Language Model Via Parameter-Efficient Tra

PyTorch implementation of the NIPS-17 paper "Poincaré Embeddings for Learning Hierarchical Representations"

Poincaré Embeddings for Learning Hierarchical Representations PyTorch implementation of Poincaré Embeddings for Learning Hierarchical Representations

Convolutional 2D Knowledge Graph Embeddings resources

ConvE Convolutional 2D Knowledge Graph Embeddings resources. Paper: Convolutional 2D Knowledge Graph Embeddings Used in the paper, but do not use thes

A Structured Self-attentive Sentence Embedding

Structured Self-attentive sentence embeddings Implementation for the paper A Structured Self-Attentive Sentence Embedding, which was published in ICLR

REST API for sentence tokenization and embedding using Multilingual Universal Sentence Encoder.

MUSE stands for Multilingual Universal Sentence Encoder - multilingual extension (supports 16 languages) of Universal Sentence Encoder (USE).

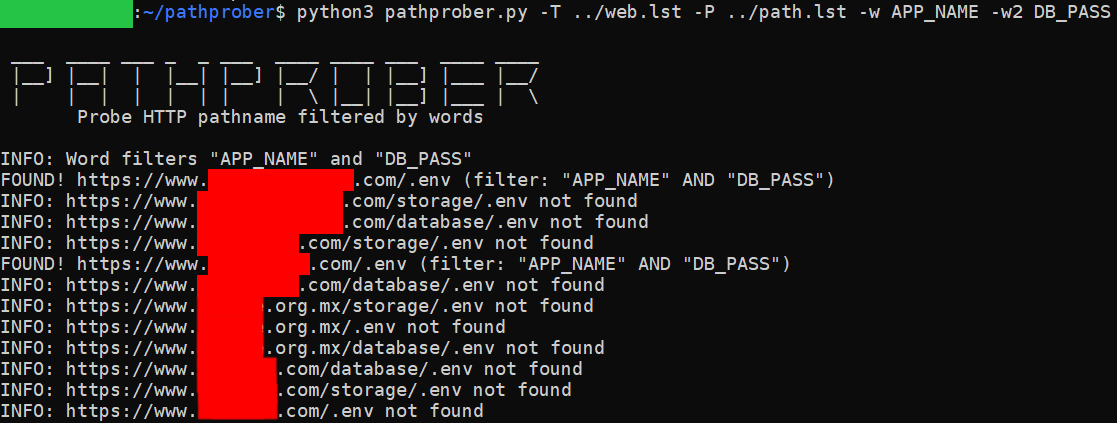

Probe and discover HTTP pathname using brute-force methodology and filtered by specific word or 2 words at once

pathprober Probe and discover HTTP pathname using brute-force methodology and filtered by specific word or 2 words at once. Purpose Brute-forcing webs

An implementation of DeepMind's Relational Recurrent Neural Networks in PyTorch.

relational-rnn-pytorch An implementation of DeepMind's Relational Recurrent Neural Networks (Santoro et al. 2018) in PyTorch. Relational Memory Core (

PyTorch Language Model for 1-Billion Word (LM1B / GBW) Dataset

PyTorch Large-Scale Language Model A Large-Scale PyTorch Language Model trained on the 1-Billion Word (LM1B) / (GBW) dataset Latest Results 39.98 Perp

A PyTorch Implementation of "Watch Your Step: Learning Node Embeddings via Graph Attention" (NeurIPS 2018).

Attention Walk ⠀⠀ A PyTorch Implementation of Watch Your Step: Learning Node Embeddings via Graph Attention (NIPS 2018). Abstract Graph embedding meth

A Pytorch implementation of "Splitter: Learning Node Representations that Capture Multiple Social Contexts" (WWW 2019).

Splitter ⠀⠀ A PyTorch implementation of Splitter: Learning Node Representations that Capture Multiple Social Contexts (WWW 2019). Abstract Recent inte

Official source for spanish Language Models and resources made @ BSC-TEMU within the "Plan de las Tecnologías del Lenguaje" (Plan-TL).

Spanish Language Models 💃🏻 Corpora 📃 Corpora Number of documents Size (GB) BNE 201,080,084 570GB Models 🤖 RoBERTa-base BNE: https://huggingface.co

DRIFT is a tool for Diachronic Analysis of Scientific Literature.

About DRIFT is a tool for Diachronic Analysis of Scientific Literature. The application offers user-friendly and customizable utilities for two modes:

Implementation of Rotary Embeddings, from the Roformer paper, in Pytorch

Rotary Embeddings - Pytorch A standalone library for adding rotary embeddings to transformers in Pytorch, following its success as relative positional

🤖 A Python library for learning and evaluating knowledge graph embeddings

PyKEEN PyKEEN (Python KnowlEdge EmbeddiNgs) is a Python package designed to train and evaluate knowledge graph embedding models (incorporating multi-m

This is my reading list for my PhD in AI, NLP, Deep Learning and more.

This is my reading list for my PhD in AI, NLP, Deep Learning and more.

Shared code for training sentence embeddings with Flax / JAX

flax-sentence-embeddings This repository will be used to share code for the Flax / JAX community event to train sentence embeddings on 1B+ training pa

中文无监督SimCSE Pytorch实现

A PyTorch implementation of unsupervised SimCSE SimCSE: Simple Contrastive Learning of Sentence Embeddings 1. 用法 无监督训练 python train_unsup.py ./data/ne

UmlsBERT: Clinical Domain Knowledge Augmentation of Contextual Embeddings Using the Unified Medical Language System Metathesaurus

UmlsBERT: Clinical Domain Knowledge Augmentation of Contextual Embeddings Using the Unified Medical Language System Metathesaurus General info This is

Reliable probability face embeddings

ProbFace, arxiv This is a demo code of training and testing [ProbFace] using Tensorflow. ProbFace is a reliable Probabilistic Face Embeddging (PFE) me

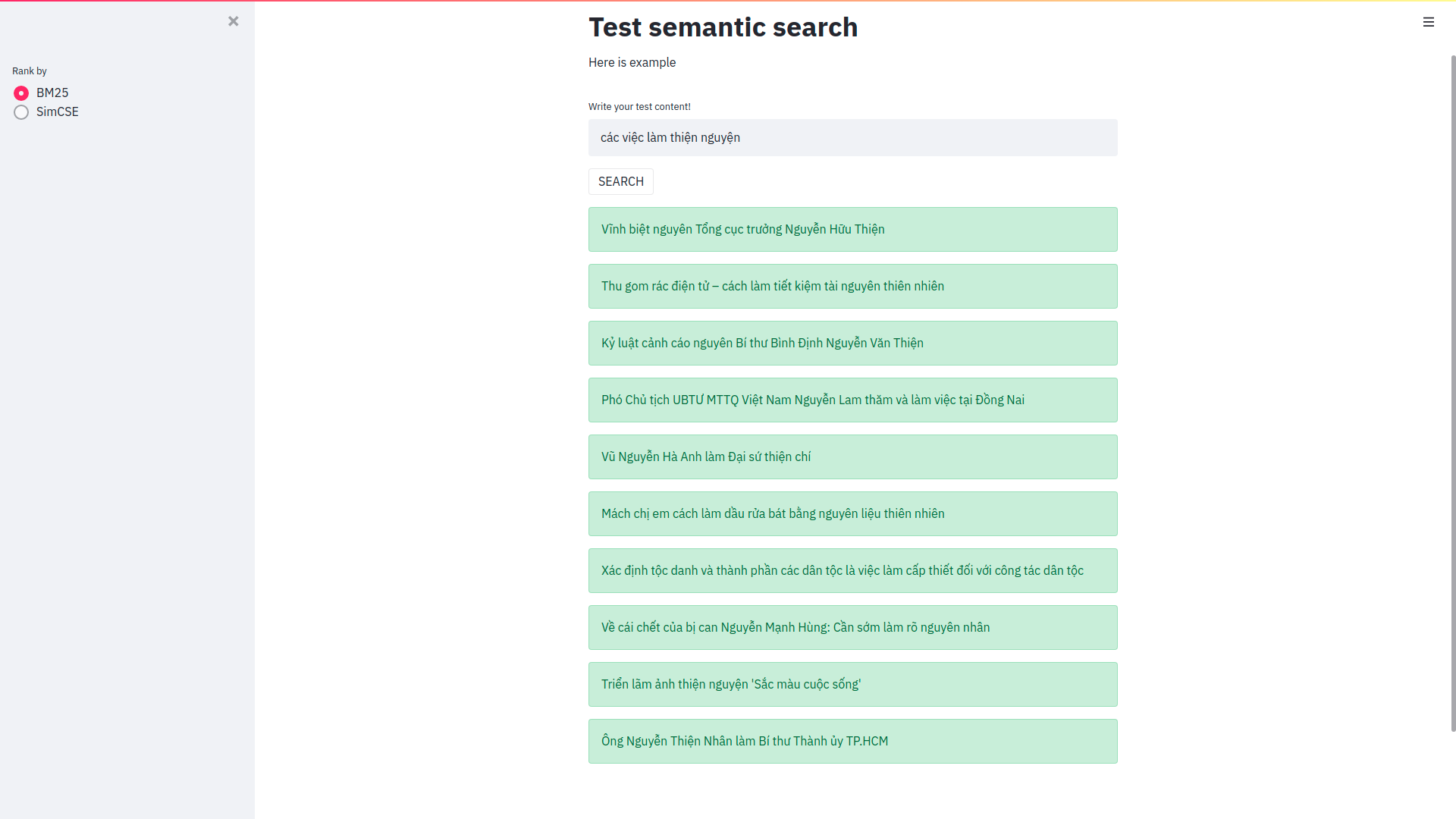

Cải thiện Elasticsearch trong bài toán semantic search sử dụng phương pháp Sentence Embeddings

Cải thiện Elasticsearch trong bài toán semantic search sử dụng phương pháp Sentence Embeddings Trong bài viết này mình sẽ sử dụng pretrain model SimCS

Code for the ACL2021 paper "Combining Static Word Embedding and Contextual Representations for Bilingual Lexicon Induction"

CSCBLI Code for our ACL Findings 2021 paper, "Combining Static Word Embedding and Contextual Representations for Bilingual Lexicon Induction". Require

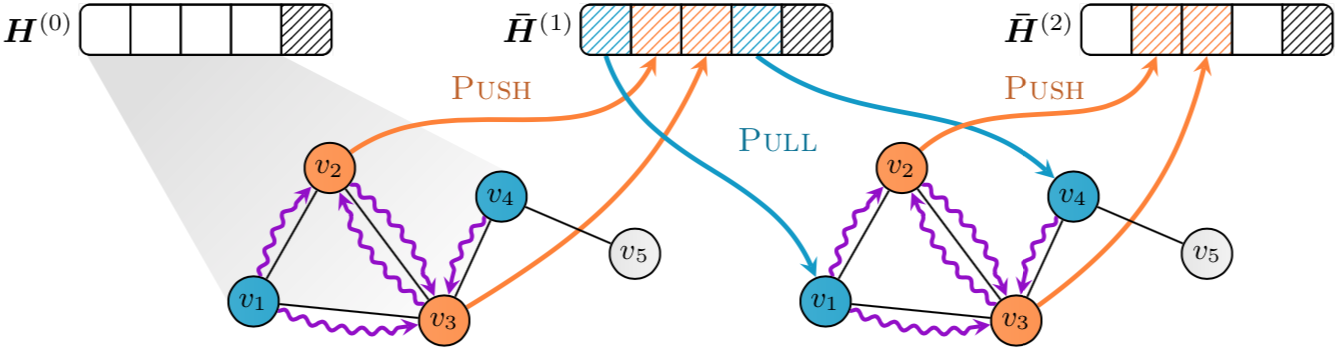

Implementation of "GNNAutoScale: Scalable and Expressive Graph Neural Networks via Historical Embeddings" in PyTorch

PyGAS: Auto-Scaling GNNs in PyG PyGAS is the practical realization of our G NN A uto S cale (GAS) framework, which scales arbitrary message-passing GN

Code for Graph-to-Tree Learning for Solving Math Word Problems (ACL 2020)

Graph-to-Tree Learning for Solving Math Word Problems PyTorch implementation of Graph based Math Word Problem solver described in our ACL 2020 paper G

Paddle implementation for "Cross-Lingual Word Embedding Refinement by ℓ1 Norm Optimisation" (NAACL 2021)

L1-Refinement Paddle implementation for "Cross-Lingual Word Embedding Refinement by ℓ1 Norm Optimisation" (NAACL 2021) 🙈 A more detailed readme is co