2335 Repositories

Python word-language-model Libraries

Phrase-BERT: Improved Phrase Embeddings from BERT with an Application to Corpus Exploration

Phrase-BERT: Improved Phrase Embeddings from BERT with an Application to Corpus Exploration This is the official repository for the EMNLP 2021 long pa

Code for the ACL 2021 paper "Structural Guidance for Transformer Language Models"

Structural Guidance for Transformer Language Models This repository accompanies the paper, Structural Guidance for Transformer Language Models, publis

A single model that parses Universal Dependencies across 75 languages.

A single model that parses Universal Dependencies across 75 languages. Given a sentence, jointly predicts part-of-speech tags, morphology tags, lemmas, and dependency trees.

Research code for the paper "How Good is Your Tokenizer? On the Monolingual Performance of Multilingual Language Models"

Introduction This repository contains research code for the ACL 2021 paper "How Good is Your Tokenizer? On the Monolingual Performance of Multilingual

Code for ICLR 2020 paper "VL-BERT: Pre-training of Generic Visual-Linguistic Representations".

VL-BERT By Weijie Su, Xizhou Zhu, Yue Cao, Bin Li, Lewei Lu, Furu Wei, Jifeng Dai. This repository is an official implementation of the paper VL-BERT:

Vision-Language Pre-training for Image Captioning and Question Answering

VLP This repo hosts the source code for our AAAI2020 work Vision-Language Pre-training (VLP). We have released the pre-trained model on Conceptual Cap

Research code for ECCV 2020 paper "UNITER: UNiversal Image-TExt Representation Learning"

UNITER: UNiversal Image-TExt Representation Learning This is the official repository of UNITER (ECCV 2020). This repository currently supports finetun

Oscar and VinVL

Oscar: Object-Semantics Aligned Pre-training for Vision-and-Language Tasks VinVL: Revisiting Visual Representations in Vision-Language Models Updates

Research Code for NeurIPS 2020 Spotlight paper "Large-Scale Adversarial Training for Vision-and-Language Representation Learning": UNITER adversarial training part

VILLA: Vision-and-Language Adversarial Training This is the official repository of VILLA (NeurIPS 2020 Spotlight). This repository currently supports

Contrastive Language-Image Pretraining

CLIP [Blog] [Paper] [Model Card] [Colab] CLIP (Contrastive Language-Image Pre-Training) is a neural network trained on a variety of (image, text) pair

![[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations](https://github.com/kdexd/virtex/raw/master/docs/_static/system_figure.jpg)

[CVPR 2021] VirTex: Learning Visual Representations from Textual Annotations

VirTex: Learning Visual Representations from Textual Annotations Karan Desai and Justin Johnson University of Michigan CVPR 2021 arxiv.org/abs/2006.06

OpenMMLab 3D Human Parametric Model Toolbox and Benchmark

Introduction English | 简体中文 MMHuman3D is an open source PyTorch-based codebase for the use of 3D human parametric models in computer vision and comput

DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting

DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting Created by Yongming Rao*, Wenliang Zhao*, Guangyi Chen, Yansong Tang, Zheng Z

Label-Free Model Evaluation with Semi-Structured Dataset Representations

Label-Free Model Evaluation with Semi-Structured Dataset Representations Prerequisites This code uses the following libraries Python 3.7 NumPy PyTorch

[NeurIPS'21 Spotlight] PyTorch code for our paper "Aligned Structured Sparsity Learning for Efficient Image Super-Resolution"

ASSL This repository is for a new network pruning method (Aligned Structured Sparsity Learning, ASSL) for efficient single image super-resolution (SR)

![Code and Data for the paper: Molecular Contrastive Learning with Chemical Element Knowledge Graph [AAAI 2022]](https://github.com/Fangyin1994/KCL/raw/main/fig/overview.png)

Code and Data for the paper: Molecular Contrastive Learning with Chemical Element Knowledge Graph [AAAI 2022]

Knowledge-enhanced Contrastive Learning (KCL) Molecular Contrastive Learning with Chemical Element Knowledge Graph [ AAAI 2022 ]. We construct a Chemi

Learn to code in any language. If

Learn to Code It is an intiiative undertaken by Student Ambassadors Club, Jamshoro for students who are absolute begineers in programming and want to

PyTorch trainer and model for Sequence Classification

PyTorch-trainer-and-model-for-Sequence-Classification After cloning the repository, modify your training data so that the training data is a .csv file

The worst and slowest programming language you have ever seen

VenumLang this is a complete joke EXAMPLE: fizzbuzz in venumlang x = 0

DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting

DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting Created by Yongming Rao*, Wenliang Zhao*, Guangyi Chen, Yansong Tang, Zheng Z

Advent of Code is an Advent calendar of small programming puzzles for a variety of skill sets and skill levels that can be solved in any programming language you like.

Advent Of Code 2021 - Python English Advent of Code is an Advent calendar of small programming puzzles for a variety of skill sets and skill levels th

Built on python (Mathematical straight fit line coordinates error predictor machine learning foundational model)

Sum-Square_Error-Business-Analytical-Tool- Built on python (Mathematical straight fit line coordinates error predictor machine learning foundational m

Modified GPT using average pooling to reduce the softmax attention memory constraints.

NLP-GPT-Upsampling This repository contains an implementation of Open AI's GPT Model. In particular, this implementation takes inspiration from the Ny

Core ML tools contain supporting tools for Core ML model conversion, editing, and validation.

Core ML Tools Use coremltools to convert machine learning models from third-party libraries to the Core ML format. The Python package contains the sup

Open source annotation tool for machine learning practitioners.

doccano doccano is an open source text annotation tool for humans. It provides annotation features for text classification, sequence labeling and sequ

Tools for curating biomedical training data for large-scale language modeling

Tools for curating biomedical training data for large-scale language modeling

Implementation of the paper 'Sentence Bottleneck Autoencoders from Transformer Language Models'

Introduction This repository contains the code for the paper Sentence Bottleneck Autoencoders from Transformer Language Models by Ivan Montero, Nikola

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network)

BERTAC (BERT-style transformer-based language model with Adversarially pretrained Convolutional neural network) BERTAC is a framework that combines a

Diagnostic tests for linguistic capacities in language models

LM diagnostics This repository contains the diagnostic datasets and experimental code for What BERT is not: Lessons from a new suite of psycholinguist

Language model Prompt And Query Archive

LPAQA: Language model Prompt And Query Archive This repository contains data and code for the paper How Can We Know What Language Models Know? Install

Code for Editing Factual Knowledge in Language Models

KnowledgeEditor Code for Editing Factual Knowledge in Language Models (https://arxiv.org/abs/2104.08164). @inproceedings{decao2021editing, title={Ed

Code for ACL2021 long paper: Knowledgeable or Educated Guess? Revisiting Language Models as Knowledge Bases

LANKA This is the source code for paper: Knowledgeable or Educated Guess? Revisiting Language Models as Knowledge Bases (ACL 2021, long paper) Referen

A Model for Natural Language Attack on Text Classification and Inference

TextFooler A Model for Natural Language Attack on Text Classification and Inference This is the source code for the paper: Jin, Di, et al. "Is BERT Re

The official implementation of "BERT is to NLP what AlexNet is to CV: Can Pre-Trained Language Models Identify Analogies?, ACL 2021 main conference"

BERT is to NLP what AlexNet is to CV This is the official implementation of BERT is to NLP what AlexNet is to CV: Can Pre-Trained Language Models Iden

DeepSpeed is a deep learning optimization library that makes distributed training easy, efficient, and effective.

DeepSpeed+Megatron trained the world's most powerful language model: MT-530B DeepSpeed is hiring, come join us! DeepSpeed is a deep learning optimizat

ALBERT: A Lite BERT for Self-supervised Learning of Language Representations

ALBERT ***************New March 28, 2020 *************** Add a colab tutorial to run fine-tuning for GLUE datasets. ***************New January 7, 2020

Super Tickets in Pre-Trained Language Models: From Model Compression to Improving Generalization (ACL 2021)

Structured Super Lottery Tickets in BERT This repo contains our codes for the paper "Super Tickets in Pre-Trained Language Models: From Model Compress

Pretrained language model and its related optimization techniques developed by Huawei Noah's Ark Lab.

Pretrained Language Model This repository provides the latest pretrained language models and its related optimization techniques developed by Huawei N

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators

Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators This is our Pytorch implementation for t

Code associated with the Don't Stop Pretraining ACL 2020 paper

dont-stop-pretraining Code associated with the Don't Stop Pretraining ACL 2020 paper Citation @inproceedings{dontstoppretraining2020, author = {Suchi

Multi-Task Deep Neural Networks for Natural Language Understanding

New Release We released Adversarial training for both LM pre-training/finetuning and f-divergence. Large-scale Adversarial training for LMs: ALUM code

Training and evaluation codes for the BertGen paper (ACL-IJCNLP 2021)

BERTGEN This repository is the implementation of the paper "BERTGEN: Multi-task Generation through BERT" (https://arxiv.org/abs/2106.03484). The codeb

This repository contains the code for "Exploiting Cloze Questions for Few-Shot Text Classification and Natural Language Inference"

Pattern-Exploiting Training (PET) This repository contains the code for Exploiting Cloze Questions for Few-Shot Text Classification and Natural Langua

ACL'2021: LM-BFF: Better Few-shot Fine-tuning of Language Models

LM-BFF (Better Few-shot Fine-tuning of Language Models) This is the implementation of the paper Making Pre-trained Language Models Better Few-shot Lea

Revisiting Self-Training for Few-Shot Learning of Language Model.

SFLM This is the implementation of the paper Revisiting Self-Training for Few-Shot Learning of Language Model. SFLM is short for self-training for few

PyTorch source code of NAACL 2019 paper "An Embarrassingly Simple Approach for Transfer Learning from Pretrained Language Models"

This repository contains source code for NAACL 2019 paper "An Embarrassingly Simple Approach for Transfer Learning from Pretrained Language Models" (P

Source code for the ACL-IJCNLP 2021 paper entitled "T-DNA: Taming Pre-trained Language Models with N-gram Representations for Low-Resource Domain Adaptation" by Shizhe Diao et al.

T-DNA Source code for the ACL-IJCNLP 2021 paper entitled Taming Pre-trained Language Models with N-gram Representations for Low-Resource Domain Adapta

Information Gain Filtration (IGF) is a method for filtering domain-specific data during language model finetuning. IGF shows significant improvements over baseline fine-tuning without data filtration.

Information Gain Filtration Information Gain Filtration (IGF) is a method for filtering domain-specific data during language model finetuning. IGF sho

Sodium is a general purpose programming language which is instruction-oriented (a new programming concept that we are developing and devising) [Still developing...]

Sodium Programming Language Sodium is a general purpose programming language which is instruction-oriented (a new programming concept that we are deve

DANeS is an open-source E-newspaper dataset by collaboration between DATASET JSC (dataset.vn) and AIV Group (aivgroup.vn)

DANeS - Open-source E-newspaper dataset Source: Technology vector created by macrovector - www.freepik.com. DANeS is an open-source E-newspaper datase

Code for paper "Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs"

This is the codebase for the paper: Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs Directory Structur

Nateve transpiler developed with python.

Adam Adam is a Nateve Programming Language transpiler developed using Python. Nateve Nateve is a new general domain programming language open source i

A kedro-plugin to serve Kedro Pipelines as API

General informations Software repository Latest release Total downloads Pypi Code health Branch Tests Coverage Links Documentation Deployment Activity

TensorFlow (Python) implementation of DeepTCN model for multivariate time series forecasting.

DeepTCN TensorFlow TensorFlow (Python) implementation of multivariate time series forecasting model introduced in Chen, Y., Kang, Y., Chen, Y., & Wang

DeepFill v1/v2 with Contextual Attention and Gated Convolution, CVPR 2018, and ICCV 2019 Oral

Generative Image Inpainting An open source framework for generative image inpainting task, with the support of Contextual Attention (CVPR 2018) and Ga

Label-Free Model Evaluation with Semi-Structured Dataset Representations

Label-Free Model Evaluation with Semi-Structured Dataset Representations Prerequisites This code uses the following libraries Python 3.7 NumPy PyTorch

A simple Programming Language

R.S.O.C. A custom built programming language About The Project R.S.O.C. is a custom built programming language very similar to a low-level 8085 progra

Word and phrase lists in CSV

Word Lists Word and phrase lists in CSV, collected from different sources. Oxford Word Lists: oxford-5k.csv - Oxford 3000 and 5000 oxford-opal.csv - O

Train the HRNet model on ImageNet

High-resolution networks (HRNets) for Image classification News [2021/01/20] Add some stronger ImageNet pretrained models, e.g., the HRNet_W48_C_ssld_

This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

BALLAD This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model. Requirements Python3 Pytorch(1.7.

EdiBERT, a generative model for image editing

EdiBERT, a generative model for image editing EdiBERT is a generative model based on a bi-directional transformer, suited for image manipulation. The

CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer

CycleTransGAN-EVC CycleTransGAN-EVC: A CycleGAN-based Emotional Voice Conversion Model with Transformer Demo emotion CycleTransGAN CycleTransGAN Cycle

PyContinual (An Easy and Extendible Framework for Continual Learning)

PyContinual (An Easy and Extendible Framework for Continual Learning) Easy to Use You can sumply change the baseline, backbone and task, and then read

This repository is for the preprint "A generative nonparametric Bayesian model for whole genomes"

BEAR Overview This repository contains code associated with the preprint A generative nonparametric Bayesian model for whole genomes (2021), which pro

Code for "On Memorization in Probabilistic Deep Generative Models"

On Memorization in Probabilistic Deep Generative Models This repository contains the code necessary to reproduce the experiments in On Memorization in

Toolbox to analyze temporal context invariance of deep neural networks

PyTCI A toolbox that estimates the integration window of a sensory response using the "Temporal Context Invariance" paradigm (TCI). The TCI method Int

Think Big, Teach Small: Do Language Models Distil Occam’s Razor?

Think Big, Teach Small: Do Language Models Distil Occam’s Razor? Software related to the paper "Think Big, Teach Small: Do Language Models Distil Occa

Code for models used in Bashiri et al., "A Flow-based latent state generative model of neural population responses to natural images".

A Flow-based latent state generative model of neural population responses to natural images Code for "A Flow-based latent state generative model of ne

Evolutionary Scale Modeling (esm): Pretrained language models for proteins

Evolutionary Scale Modeling This repository contains code and pre-trained weights for Transformer protein language models from Facebook AI Research, i

[NeurIPS 2021] "G-PATE: Scalable Differentially Private Data Generator via Private Aggregation of Teacher Discriminators"

G-PATE This is the official code base for our NeurIPS 2021 paper: "G-PATE: Scalable Differentially Private Data Generator via Private Aggregation of T

Mouse Brain in the Model Zoo

Deep Neural Mouse Brain Modeling This is the repository for the ongoing deep neural mouse modeling project, an attempt to characterize the representat

Official Implementation for Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation

Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation We present a generic image-to-image translation framework, pixel2style2pixel (pSp

A Simple Long-Tailed Rocognition Baseline via Vision-Language Model

BALLAD This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model. Requirements Python3 Pytorch(1.7.

Official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

This is the official code repository for A Simple Long-Tailed Rocognition Baseline via Vision-Language Model.

A paper list of pre-trained language models (PLMs).

Large-scale pre-trained language models (PLMs) such as BERT and GPT have achieved great success and become a milestone in NLP.

Various Algorithms for Short Text Mining

Short Text Mining in Python Introduction This package shorttext is a Python package that facilitates supervised and unsupervised learning for short te

A lightweight interface for reading in output from the Weather Research and Forecasting (WRF) model into xarray Dataset

xwrf A lightweight interface for reading in output from the Weather Research and Forecasting (WRF) model into xarray Dataset. The primary objective of

Project to deploy a machine learning model based on Titanic dataset from Kaggle

kaggle_titanic_deploy Project to deploy a machine learning model based on Titanic dataset from Kaggle In this project we used the Titanic dataset from

This repository contains the implementation of the paper: Federated Distillation of Natural Language Understanding with Confident Sinkhorns

Federated Distillation of Natural Language Understanding with Confident Sinkhorns This repository provides an alternative method for ensembled distill

WinPython is a portable distribution of the Python programming language for Windows

WinPython tools Copyright © 2012-2013 Pierre Raybaut Copyright © 2014-2019+ The Winpython development team https://github.com/winpython/ Licensed unde

Official Implementation of SWAGAN: A Style-based Wavelet-driven Generative Model

Official Implementation of SWAGAN: A Style-based Wavelet-driven Generative Model SWAGAN: A Style-based Wavelet-driven Generative Model Rinon Gal, Dana

Official implementation of VQ-Diffusion: Vector Quantized Diffusion Model for Text-to-Image Synthesis

Official implementation of VQ-Diffusion: Vector Quantized Diffusion Model for Text-to-Image Synthesis

![[NeurIPS 2021] Deceive D: Adaptive Pseudo Augmentation for GAN Training with Limited Data](https://github.com/EndlessSora/DeceiveD/raw/main/resources/teaser.jpg)

[NeurIPS 2021] Deceive D: Adaptive Pseudo Augmentation for GAN Training with Limited Data

Deceive D: Adaptive Pseudo Augmentation for GAN Training with Limited Data (NeurIPS 2021) This repository provides the official PyTorch implementation

Code for paper "Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs"

This is the codebase for the paper: Do Language Models Have Beliefs? Methods for Detecting, Updating, and Visualizing Model Beliefs Directory Structur

Code for unmixing audio signals in four different stems "drums, bass, vocals, others". The code is adapted from "Jukebox: A Generative Model for Music"

Status: Archive (code is provided as-is, no updates expected) Disclaimer This code is a based on "Jukebox: A Generative Model for Music" Paper We adju

Anomaly Localization in Model Gradients Under Backdoor Attacks Against Federated Learning

Federated_Learning This repo provides a federated learning framework that allows to carry out backdoor attacks under varying conditions. This is a ker

Source Code and data for my paper titled Linguistic Knowledge in Data Augmentation for Natural Language Processing: An Example on Chinese Question Matching

Description The source code and data for my paper titled Linguistic Knowledge in Data Augmentation for Natural Language Processing: An Example on Chin

A simple rest api serving a deep learning model that classifies human gender based on their faces. (vgg16 transfare learning)

this is a simple rest api serving a deep learning model that classifies human gender based on their faces. (vgg16 transfare learning)

Train an imgs.ai model on your own dataset

imgs.ai is a fast, dataset-agnostic, deep visual search engine for digital art history based on neural network embeddings.

LAnguage Model Analysis

LAMA: LAnguage Model Analysis LAMA is a probe for analyzing the factual and commonsense knowledge contained in pretrained language models. The dataset

Data and evaluation code for the paper WikiNEuRal: Combined Neural and Knowledge-based Silver Data Creation for Multilingual NER (EMNLP 2021).

Data and evaluation code for the paper WikiNEuRal: Combined Neural and Knowledge-based Silver Data Creation for Multilingual NER. @inproceedings{tedes

Count the MACs / FLOPs of your PyTorch model.

THOP: PyTorch-OpCounter How to install pip install thop (now continously intergrated on Github actions) OR pip install --upgrade git+https://github.co

Pre-trained Deep Learning models and demos (high quality and extremely fast)

OpenVINO™ Toolkit - Open Model Zoo repository This repository includes optimized deep learning models and a set of demos to expedite development of hi

Selenium Page Object Model with Python

Page-object-model (POM) is a pattern that you can apply it to develop efficient automation framework.

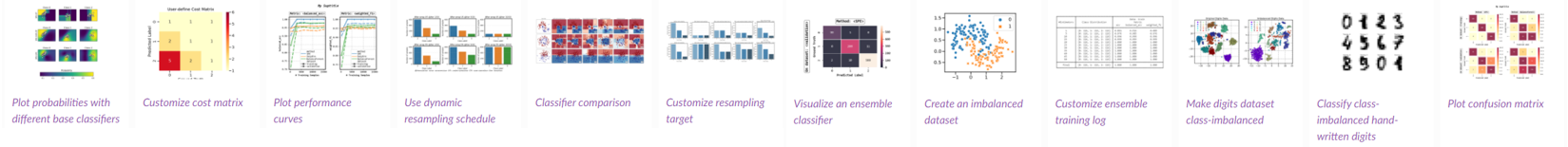

IMBENS: class-imbalanced ensemble learning in Python.

IMBENS: class-imbalanced ensemble learning in Python. Links: [Documentation] [Gallery] [PyPI] [Changelog] [Source] [Download] [知乎/Zhihu] [中文README] [a

Research code for the paper "Variational Gibbs inference for statistical estimation from incomplete data".

Variational Gibbs inference (VGI) This repository contains the research code for Simkus, V., Rhodes, B., Gutmann, M. U., 2021. Variational Gibbs infer

Codebase for Amodal Segmentation through Out-of-Task andOut-of-Distribution Generalization with a Bayesian Model

Codebase for Amodal Segmentation through Out-of-Task andOut-of-Distribution Generalization with a Bayesian Model

Simple programming language built on Python.

Serial Another programming language. Built on Python. Building and running program In order to run the program on serial, unfortunately you still need

An optimized prompt tuning strategy comparable to fine-tuning across model scales and tasks.

P-tuning v2 P-Tuning v2: Prompt Tuning Can Be Comparable to Finetuning Universally Across Scales and Tasks An optimized prompt tuning strategy achievi

Redis OM Python makes it easy to model Redis data in your Python applications.

Object mapping, and more, for Redis and Python Redis OM Python makes it easy to model Redis data in your Python applications. Redis OM Python | Redis