1182 Repositories

Python AI-DAY-WORKSHOP-NLP-TRANSFORMERS Libraries

Research code for CVPR 2021 paper "End-to-End Human Pose and Mesh Reconstruction with Transformers"

MeshTransformer ✨ This is our research code of End-to-End Human Pose and Mesh Reconstruction with Transformers. MEsh TRansfOrmer is a simple yet effec

An IVR Chatbot which can exponentially reduce the burden of companies as well as can improve the consumer/end user experience.

IVR-Chatbot Achievements 🏆 Team Uhtred won the Maverick 2.0 Bot-a-thon 2021 organized by AbInbev India. ❓ Problem Statement As we all know that, lot

Medical Image Segmentation using Squeeze-and-Expansion Transformers

Medical Image Segmentation using Squeeze-and-Expansion Transformers Introduction This repository contains the code of the IJCAI'2021 paper 'Medical Im

Microsoft contributing libraries, tools, recipes, sample codes and workshop contents for machine learning & deep learning.

Microsoft contributing libraries, tools, recipes, sample codes and workshop contents for machine learning & deep learning.

(L2ID@CVPR2021) Boosting Co-teaching with Compression Regularization for Label Noise

Nested-Co-teaching (L2ID@CVPR2021) Pytorch implementation of paper "Boosting Co-teaching with Compression Regularization for Label Noise" [PDF] If our

Pytorch code for ICRA'21 paper: "Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation"

Hierarchical Cross-Modal Agent for Robotics Vision-and-Language Navigation This repository is the pytorch implementation of our paper: Hierarchical Cr

Contains code for the paper "Vision Transformers are Robust Learners".

Vision Transformers are Robust Learners This repository contains the code for the paper Vision Transformers are Robust Learners by Sayak Paul* and Pin

Train 🤗transformers with DeepSpeed: ZeRO-2, ZeRO-3

Fork from https://github.com/huggingface/transformers/tree/86d5fb0b360e68de46d40265e7c707fe68c8015b/examples/pytorch/language-modeling at 2021.05.17.

XtremeDistil framework for distilling/compressing massive multilingual neural network models to tiny and efficient models for AI at scale

XtremeDistilTransformers for Distilling Massive Multilingual Neural Networks ACL 2020 Microsoft Research [Paper] [Video] Releasing [XtremeDistilTransf

Implementation of gMLP, an all-MLP replacement for Transformers, in Pytorch

Implementation of gMLP, an all-MLP replacement for Transformers, in Pytorch

This repository contains PyTorch code for Robust Vision Transformers.

This repository contains PyTorch code for Robust Vision Transformers.

![[NAACL & ACL 2021] SapBERT: Self-alignment pretraining for BERT.](https://github.com/cambridgeltl/sapbert/raw/main/sapbert_front_graphs_v6.png?raw=true)

[NAACL & ACL 2021] SapBERT: Self-alignment pretraining for BERT.

SapBERT: Self-alignment pretraining for BERT This repo holds code for the SapBERT model presented in our NAACL 2021 paper: Self-Alignment Pretraining

MILES is a multilingual text simplifier inspired by LSBert - A BERT-based lexical simplification approach proposed in 2018. Unlike LSBert, MILES uses the bert-base-multilingual-uncased model, as well as simple language-agnostic approaches to complex word identification (CWI) and candidate ranking.

MILES Multilingual Lexical Simplifier Explore the docs » Read LSBert Paper · Report Bug · Request Feature About The Project MILES is a multilingual te

TrackFormer: Multi-Object Tracking with Transformers

TrackFormer: Multi-Object Tracking with Transformers This repository provides the official implementation of the TrackFormer: Multi-Object Tracking wi

Code for "ShineOn: Illuminating Design Choices for Practical Video-based Virtual Clothing Try-on", accepted at WACV 2021 Generation of Human Behavior Workshop.

ShineOn: Illuminating Design Choices for Practical Video-based Virtual Clothing Try-on [ Paper ] [ Project Page ] This repository contains the code fo

Exploring whether attention is necessary for vision transformers

Do You Even Need Attention? A Stack of Feed-Forward Layers Does Surprisingly Well on ImageNet Paper/Report TL;DR We replace the attention layer in a v

:hot_pepper: R²SQL: "Dynamic Hybrid Relation Network for Cross-Domain Context-Dependent Semantic Parsing." (AAAI 2021)

R²SQL The PyTorch implementation of paper Dynamic Hybrid Relation Network for Cross-Domain Context-Dependent Semantic Parsing. (AAAI 2021) Requirement

Reformer, the efficient Transformer, in Pytorch

Reformer, the Efficient Transformer, in Pytorch This is a Pytorch implementation of Reformer https://openreview.net/pdf?id=rkgNKkHtvB It includes LSH

Google AI 2018 BERT pytorch implementation

BERT-pytorch Pytorch implementation of Google AI's 2018 BERT, with simple annotation BERT 2018 BERT: Pre-training of Deep Bidirectional Transformers f

jiant is an NLP toolkit

jiant is an NLP toolkit The multitask and transfer learning toolkit for natural language processing research Why should I use jiant? jiant supports mu

Pytorch NLP library based on FastAI

Quick NLP Quick NLP is a deep learning nlp library inspired by the fast.ai library It follows the same api as fastai and extends it allowing for quick

Interpretable Models for NLP using PyTorch

This repo is deprecated. Please find the updated package here. https://github.com/EdGENetworks/anuvada Anuvada: Interpretable Models for NLP using PyT

keras implement of transformers for humans

keras implement of transformers for humans

An easier way to build neural search on the cloud

Jina is geared towards building search systems for any kind of data, including text, images, audio, video and many more. With the modular design & multi-layer abstraction, you can leverage the efficient patterns to build the system by parts, or chaining them into a Flow for an end-to-end experience.

[EMNLP 2020] Keep CALM and Explore: Language Models for Action Generation in Text-based Games

Contextual Action Language Model (CALM) and the ClubFloyd Dataset Code and data for paper Keep CALM and Explore: Language Models for Action Generation

ISTR: End-to-End Instance Segmentation with Transformers (https://arxiv.org/abs/2105.00637)

This is the project page for the paper: ISTR: End-to-End Instance Segmentation via Transformers, Jie Hu, Liujuan Cao, Yao Lu, ShengChuan Zhang, Yan Wa

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

QuickAI is a Python library that makes it extremely easy to experiment with state-of-the-art Machine Learning models.

COVID-19 Related NLP Papers

COVID-19 outbreak has become a global pandemic. NLP researchers are fighting the epidemic in their own way.

PyTorch code for Vision Transformers training with the Self-Supervised learning method DINO

Self-Supervised Vision Transformers with DINO PyTorch implementation and pretrained models for DINO. For details, see Emerging Properties in Self-Supe

Geometry-Free View Synthesis: Transformers and no 3D Priors

Geometry-Free View Synthesis: Transformers and no 3D Priors Geometry-Free View Synthesis: Transformers and no 3D Priors Robin Rombach*, Patrick Esser*

Twins: Revisiting the Design of Spatial Attention in Vision Transformers

Twins: Revisiting the Design of Spatial Attention in Vision Transformers Very recently, a variety of vision transformer architectures for dense predic

![NAACL'2021: Factual Probing Is [MASK]: Learning vs. Learning to Recall](https://github.com/princeton-nlp/OptiPrompt/raw/main/figure/optiprompt.png)

NAACL'2021: Factual Probing Is [MASK]: Learning vs. Learning to Recall

OptiPrompt This is the PyTorch implementation of the paper Factual Probing Is [MASK]: Learning vs. Learning to Recall. We propose OptiPrompt, a simple

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

🤗 The largest hub of ready-to-use NLP datasets for ML models with fast, easy-to-use and efficient data manipulation tools

NLPretext packages in a unique library all the text preprocessing functions you need to ease your NLP project.

NLPretext packages in a unique library all the text preprocessing functions you need to ease your NLP project.

The 1st place solution of track2 (Vehicle Re-Identification) in the NVIDIA AI City Challenge at CVPR 2021 Workshop.

AICITY2021_Track2_DMT The 1st place solution of track2 (Vehicle Re-Identification) in the NVIDIA AI City Challenge at CVPR 2021 Workshop. Introduction

An Explainable Leaderboard for NLP

ExplainaBoard: An Explainable Leaderboard for NLP Introduction | Website | Download | Backend | Paper | Video | Bib Introduction ExplainaBoard is an i

PyTorch implementation of the end-to-end coreference resolution model with different higher-order inference methods.

End-to-End Coreference Resolution with Different Higher-Order Inference Methods This repository contains the implementation of the paper: Revealing th

Generate indoor scenes with Transformers

SceneFormer: Indoor Scene Generation with Transformers Initial code release for the Sceneformer paper, contains models, train and test scripts for the

SimCSE: Simple Contrastive Learning of Sentence Embeddings

SimCSE: Simple Contrastive Learning of Sentence Embeddings This repository contains the code and pre-trained models for our paper SimCSE: Simple Contr

CoaT: Co-Scale Conv-Attentional Image Transformers

CoaT: Co-Scale Conv-Attentional Image Transformers Introduction This repository contains the official code and pretrained models for CoaT: Co-Scale Co

SpikeX - SpaCy Pipes for Knowledge Extraction

SpikeX is a collection of pipes ready to be plugged in a spaCy pipeline. It aims to help in building knowledge extraction tools with almost-zero effort.

The repo contains the code of the ACL2020 paper `Dice Loss for Data-imbalanced NLP Tasks`

Dice Loss for NLP Tasks This repository contains code for Dice Loss for Data-imbalanced NLP Tasks at ACL2020. Setup Install Package Dependencies The c

VideoGPT: Video Generation using VQ-VAE and Transformers

VideoGPT: Video Generation using VQ-VAE and Transformers [Paper][Website][Colab][Gradio Demo] We present VideoGPT: a conceptually simple architecture

Official implementation of GraphMask as presented in our paper Interpreting Graph Neural Networks for NLP With Differentiable Edge Masking.

GraphMask This repository contains an implementation of GraphMask, the interpretability technique for graph neural networks presented in our ICLR 2021

skweak: A software toolkit for weak supervision applied to NLP tasks

Labelled data remains a scarce resource in many practical NLP scenarios. This is especially the case when working with resource-poor languages (or text domains), or when using task-specific labels without pre-existing datasets. The only available option is often to collect and annotate texts by hand, which is expensive and time-consuming.

![[Preprint] Escaping the Big Data Paradigm with Compact Transformers, 2021](https://github.com/SHI-Labs/Compact-Transformers/raw/main/images/model_sym.png)

[Preprint] Escaping the Big Data Paradigm with Compact Transformers, 2021

Compact Transformers Preprint Link: Escaping the Big Data Paradigm with Compact Transformers By Ali Hassani[1]*, Steven Walton[1]*, Nikhil Shah[1], Ab

![[CVPR'21] Multi-Modal Fusion Transformer for End-to-End Autonomous Driving](https://github.com/autonomousvision/transfuser/raw/main/transfuser/assets/teaser.png)

[CVPR'21] Multi-Modal Fusion Transformer for End-to-End Autonomous Driving

TransFuser This repository contains the code for the CVPR 2021 paper Multi-Modal Fusion Transformer for End-to-End Autonomous Driving. If you find our

Code for producing Japanese GPT-2 provided by rinna Co., Ltd.

japanese-gpt2 This repository provides the code for training Japanese GPT-2 models. This code has been used for producing japanese-gpt2-medium release

FedNLP: A Benchmarking Framework for Federated Learning in Natural Language Processing

FedNLP is a research-oriented benchmarking framework for advancing federated learning (FL) in natural language processing (NLP). It uses FedML repository as the git submodule. In other words, FedNLP only focuses on adavanced models and dataset, while FedML supports various federated optimizers (e.g., FedAvg) and platforms (Distributed Computing, IoT/Mobile, Standalone).

Implementation of Perceiver, General Perception with Iterative Attention in TensorFlow

Perceiver This Python package implements Perceiver: General Perception with Iterative Attention by Andrew Jaegle in TensorFlow. This model builds on t

nlp基础任务

NLP算法 说明 此算法仓库包括文本分类、序列标注、关系抽取、文本匹配、文本相似度匹配这五个主流NLP任务,涉及到22个相关的模型算法。 框架结构 文件结构 all_models ├── Base_line │ ├── __init__.py │ ├── base_data_process.

Tool which allow you to detect and translate text.

Text detection and recognition This repository contains tool which allow to detect region with text and translate it one by one. Description Two pretr

Awesome-NLP-Research (ANLP)

Awesome-NLP-Research (ANLP)

PRTR: Pose Recognition with Cascade Transformers

PRTR: Pose Recognition with Cascade Transformers Introduction This repository is the official implementation for Pose Recognition with Cascade Transfo

Changing the Mind of Transformers for Topically-Controllable Language Generation

We will first introduce the how to run the IPython notebook demo by downloading our pretrained models. Then, we will introduce how to run our training and evaluation code.

Implementation of Cross Transformer for spatially-aware few-shot transfer, in Pytorch

Cross Transformers - Pytorch (wip) Implementation of Cross Transformer for spatially-aware few-shot transfer, in Pytorch Install $ pip install cross-t

A fast and easy implementation of Transformer with PyTorch.

FasySeq FasySeq is a shorthand as a Fast and easy sequential modeling toolkit. It aims to provide a seq2seq model to researchers and developers, which

中文空间语义理解评测

中文空间语义理解评测 最新消息 2021-04-10 🚩 排行榜发布: Leaderboard 2021-04-05 基线系统发布: SpaCE2021-Baseline 2021-04-05 开放数据提交: 提交结果 2021-04-01 开放报名: 我要报名 2021-04-01 数据集 pa

Python bindings to libpostal for fast international address parsing/normalization

pypostal These are the official Python bindings to https://github.com/openvenues/libpostal, a fast statistical parser/normalizer for street addresses

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Tool for visualizing attention in the Transformer model (BERT, GPT-2, Albert, XLNet, RoBERTa, CTRL, etc.)

Hands-on machine learning workshop

emb-ntua-workshop This workshop discusses introductory concepts of machine learning and data mining following a hands-on approach using popular tools

Implementation of TransGanFormer, an all-attention GAN that combines the finding from the recent GanFormer and TransGan paper

TransGanFormer (wip) Implementation of TransGanFormer, an all-attention GAN that combines the finding from the recent GansFormer and TransGan paper. I

The source code for the Cutoff data augmentation approach proposed in this paper: "A Simple but Tough-to-Beat Data Augmentation Approach for Natural Language Understanding and Generation".

Cutoff: A Simple Data Augmentation Approach for Natural Language This repository contains source code necessary to reproduce the results presented in

Source code for the GPT-2 story generation models in the EMNLP 2020 paper "STORIUM: A Dataset and Evaluation Platform for Human-in-the-Loop Story Generation"

Storium GPT-2 Models This is the official repository for the GPT-2 models described in the EMNLP 2020 paper [STORIUM: A Dataset and Evaluation Platfor

Official repository for "PAIR: Planning and Iterative Refinement in Pre-trained Transformers for Long Text Generation"

pair-emnlp2020 Official repository for the paper: Xinyu Hua and Lu Wang: PAIR: Planning and Iterative Refinement in Pre-trained Transformers for Long

UA-GEC: Grammatical Error Correction and Fluency Corpus for the Ukrainian Language

UA-GEC: Grammatical Error Correction and Fluency Corpus for the Ukrainian Language This repository contains UA-GEC data and an accompanying Python lib

Implementation of various Vision Transformers I found interesting

Implementation of various Vision Transformers I found interesting

Code for "LoFTR: Detector-Free Local Feature Matching with Transformers", CVPR 2021

LoFTR: Detector-Free Local Feature Matching with Transformers Project Page | Paper LoFTR: Detector-Free Local Feature Matching with Transformers Jiami

![[CVPR 2021] Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers](https://github.com/fudan-zvg/SETR/raw/main/fig/image.png)

[CVPR 2021] Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

[CVPR 2021] Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers

Top2Vec is an algorithm for topic modeling and semantic search.

Top2Vec is an algorithm for topic modeling and semantic search. It automatically detects topics present in text and generates jointly embedded topic, document and word vectors.

《LXMERT: Learning Cross-Modality Encoder Representations from Transformers》(EMNLP 2020)

The Most Important Thing. Our code is developed based on: LXMERT: Learning Cross-Modality Encoder Representations from Transformers

Group-Free 3D Object Detection via Transformers

Group-Free 3D Object Detection via Transformers By Ze Liu, Zheng Zhang, Yue Cao, Han Hu, Xin Tong. This repo is the official implementation of "Group-

Examples and code for the Practical Machine Learning workshop series

Practical Machine Learning Workshop Series Practical Machine Learning for Quantitative Finance Post conference workshop at the WBS Spring Conference D

source code and pre-trained/fine-tuned checkpoint for NAACL 2021 paper LightningDOT

LightningDOT: Pre-training Visual-Semantic Embeddings for Real-Time Image-Text Retrieval This repository contains source code and pre-trained/fine-tun

Code for CVPR 2021 paper: Revamping Cross-Modal Recipe Retrieval with Hierarchical Transformers and Self-supervised Learning

Revamping Cross-Modal Recipe Retrieval with Hierarchical Transformers and Self-supervised Learning This is the PyTorch companion code for the paper: A

Learning Dense Representations of Phrases at Scale (Lee et al., 2020)

DensePhrases DensePhrases provides answers to your natural language questions from the entire Wikipedia in real-time. While it efficiently searches th

Official PyTorch implementation for Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers, a novel method to visualize any Transformer-based network. Including examples for DETR, VQA.

PyTorch Implementation of Generic Attention-model Explainability for Interpreting Bi-Modal and Encoder-Decoder Transformers 1 Using Colab Please notic

Implementation of the paper "Language-agnostic representation learning of source code from structure and context".

Code Transformer This is an official PyTorch implementation of the CodeTransformer model proposed in: D. Zügner, T. Kirschstein, M. Catasta, J. Leskov

PyTorch Implementation of CvT: Introducing Convolutions to Vision Transformers

CvT: Introducing Convolutions to Vision Transformers Pytorch implementation of CvT: Introducing Convolutions to Vision Transformers Usage: img = torch

Implementation of 'lightweight' GAN, proposed in ICLR 2021, in Pytorch. High resolution image generations that can be trained within a day or two

512x512 flowers after 12 hours of training, 1 gpu 256x256 flowers after 12 hours of training, 1 gpu Pizza 'Lightweight' GAN Implementation of 'lightwe

Guide: Finetune GPT2-XL (1.5 Billion Parameters) and GPT-NEO (2.7 B) on a single 16 GB VRAM V100 Google Cloud instance with Huggingface Transformers using DeepSpeed

Guide: Finetune GPT2-XL (1.5 Billion Parameters) and GPT-NEO (2.7 Billion Parameters) on a single 16 GB VRAM V100 Google Cloud instance with Huggingfa

Implementation of STAM (Space Time Attention Model), a pure and simple attention model that reaches SOTA for video classification

STAM - Pytorch Implementation of STAM (Space Time Attention Model), yet another pure and simple SOTA attention model that bests all previous models in

The project is an official implementation of our paper "3D Human Pose Estimation with Spatial and Temporal Transformers".

3D Human Pose Estimation with Spatial and Temporal Transformers This repo is the official implementation for 3D Human Pose Estimation with Spatial and

Code for Transformers Solve Limited Receptive Field for Monocular Depth Prediction

Official PyTorch code for Transformers Solve Limited Receptive Field for Monocular Depth Prediction. Guanglei Yang, Hao Tang, Mingli Ding, Nicu Sebe,

Tracking Progress in Natural Language Processing

Repository to track the progress in Natural Language Processing (NLP), including the datasets and the current state-of-the-art for the most common NLP tasks.

An implementation of Performer, a linear attention-based transformer, in Pytorch

Performer - Pytorch An implementation of Performer, a linear attention-based transformer variant with a Fast Attention Via positive Orthogonal Random

Reformer, the efficient Transformer, in Pytorch

Reformer, the Efficient Transformer, in Pytorch This is a Pytorch implementation of Reformer https://openreview.net/pdf?id=rkgNKkHtvB It includes LSH

Training RNNs as Fast as CNNs (https://arxiv.org/abs/1709.02755)

News SRU++, a new SRU variant, is released. [tech report] [blog] The experimental code and SRU++ implementation are available on the dev branch which

Library for faster pinned CPU - GPU transfer in Pytorch

SpeedTorch Faster pinned CPU tensor - GPU Pytorch variabe transfer and GPU tensor - GPU Pytorch variable transfer, in certain cases. Update 9-29-1

An easier way to build neural search on the cloud

An easier way to build neural search on the cloud Jina is a deep learning-powered search framework for building cross-/multi-modal search systems (e.g

Release of SPLASH: Dataset for semantic parse correction with natural language feedback in the context of text-to-SQL parsing

SPLASH: Semantic Parsing with Language Assistance from Humans SPLASH is dataset for the task of semantic parse correction with natural language feedba

Dense Prediction Transformers

Vision Transformers for Dense Prediction This repository contains code and models for our paper: Vision Transformers for Dense Prediction René Ranftl,

BossNAS: Exploring Hybrid CNN-transformers with Block-wisely Self-supervised Neural Architecture Search

BossNAS This repository contains PyTorch evaluation code, retraining code and pretrained models of our paper: BossNAS: Exploring Hybrid CNN-transforme

⚡ boost inference speed of T5 models by 5x & reduce the model size by 3x using fastT5.

Reduce T5 model size by 3X and increase the inference speed up to 5X. Install Usage Details Functionalities Benchmarks Onnx model Quantized onnx model

Repository to hold code for the cap-bot varient that is being presented at the SIIC Defence Hackathon 2021.

capbot-siic Repository to hold code for the cap-bot varient that is being presented at the SIIC Defence Hackathon 2021. Problem Inspiration A plethora

Package for controllable summarization

summarizers summarizers is package for controllable summarization based CTRLsum. currently, we only supports English. It doesn't work in other languag

a Deep Learning Framework for Text

DeLFT DeLFT (Deep Learning Framework for Text) is a Keras and TensorFlow framework for text processing, focusing on sequence labelling (e.g. named ent

Natural language detection

Detect the language of text. What’s so cool about franc? franc can support more languages(†) than any other library franc is packaged with support for

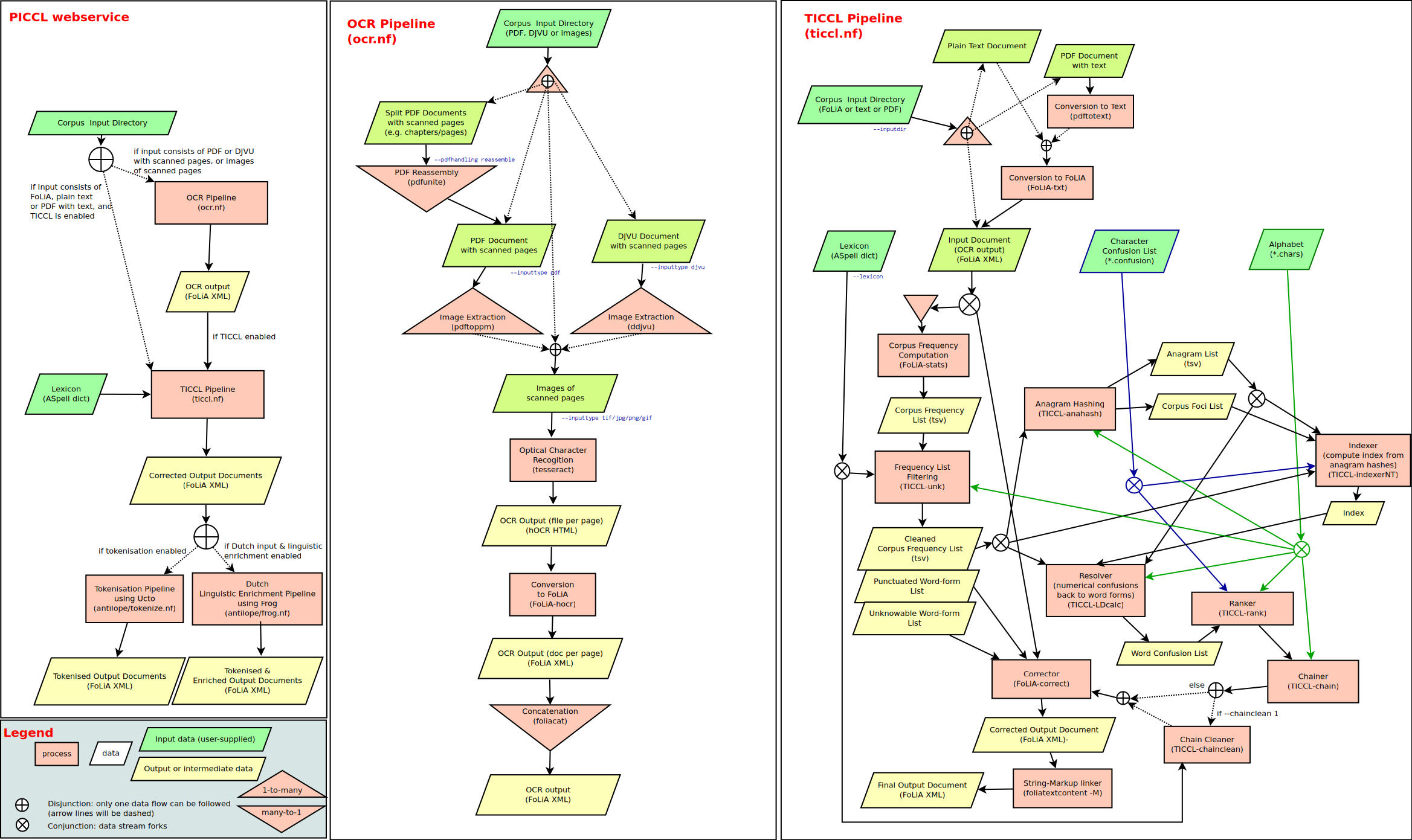

A set of workflows for corpus building through OCR, post-correction and normalisation

PICCL: Philosophical Integrator of Computational and Corpus Libraries PICCL offers a workflow for corpus building and builds on a variety of tools. Th