916 Repositories

Python Non-Local-Sparse-Attention Libraries

Adversarial Framework for (non-) Parametric Image Stylisation Mosaics

Fully Adversarial Mosaics (FAMOS) Pytorch implementation of the paper "Copy the Old or Paint Anew? An Adversarial Framework for (non-) Parametric Imag

Pervasive Attention: 2D Convolutional Networks for Sequence-to-Sequence Prediction

This is a fork of Fairseq(-py) with implementations of the following models: Pervasive Attention - 2D Convolutional Neural Networks for Sequence-to-Se

A Structured Self-attentive Sentence Embedding

Structured Self-attentive sentence embeddings Implementation for the paper A Structured Self-Attentive Sentence Embedding, which was published in ICLR

PyTorch Implementation of "Non-Autoregressive Neural Machine Translation"

Non-Autoregressive Transformer Code release for Non-Autoregressive Neural Machine Translation by Jiatao Gu, James Bradbury, Caiming Xiong, Victor O.K.

pytorch implementation of Attention is all you need

A Pytorch Implementation of the Transformer: Attention Is All You Need Our implementation is largely based on Tensorflow implementation Requirements N

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

Intent parsing and slot filling in PyTorch with seq2seq + attention

PyTorch Seq2Seq Intent Parsing Reframing intent parsing as a human - machine translation task. Work in progress successor to torch-seq2seq-intent-pars

Improving Convolutional Networks via Attention Transfer (ICLR 2017)

Attention Transfer PyTorch code for "Paying More Attention to Attention: Improving the Performance of Convolutional Neural Networks via Attention Tran

Official implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification

CrossViT This repository is the official implementation of CrossViT: Cross-Attention Multi-Scale Vision Transformer for Image Classification. ArXiv If

Hierarchical unsupervised and semi-supervised topic models for sparse count data with CorEx

Anchored CorEx: Hierarchical Topic Modeling with Minimal Domain Knowledge Correlation Explanation (CorEx) is a topic model that yields rich topics tha

Django helper application to easily and non-destructively crop arbitrarily large images in admin and frontend.

django-image-cropping django-image-cropping is an app for cropping uploaded images via Django's admin backend using Jcrop. Screenshot: django-image-cr

Efficient Sparse Attacks on Videos using Reinforcement Learning

EARL This repository provides a simple implementation of the work "Efficient Sparse Attacks on Videos using Reinforcement Learning" Example: Demo: Her

Learnable Multi-level Frequency Decomposition and Hierarchical Attention Mechanism for Generalized Face Presentation Attack Detection

LMFD-PAD Note This is the official repository of the paper: LMFD-PAD: Learnable Multi-level Frequency Decomposition and Hierarchical Attention Mechani

Learning based AI for playing multi-round Koi-Koi hanafuda card games. Have fun.

Koi-Koi AI Learning based AI for playing multi-round Koi-Koi hanafuda card games. Platform Python PyTorch PySimpleGUI (for the interface playing vs AI

Implementation of a Transformer, but completely in Triton

Transformer in Triton (wip) Implementation of a Transformer, but completely in Triton. I'm completely new to lower-level neural net code, so this repo

Demo for the paper "Overlap-aware low-latency online speaker diarization based on end-to-end local segmentation"

Streaming speaker diarization Overlap-aware low-latency online speaker diarization based on end-to-end local segmentation by Juan Manuel Coria, Hervé

Implementation of Hierarchical Transformer Memory (HTM) for Pytorch

Hierarchical Transformer Memory (HTM) - Pytorch Implementation of Hierarchical Transformer Memory (HTM) for Pytorch. This Deepmind paper proposes a si

Demo for the paper "Overlap-aware low-latency online speaker diarization based on end-to-end local segmentation"

Streaming speaker diarization Overlap-aware low-latency online speaker diarization based on end-to-end local segmentation by Juan Manuel Coria, Hervé

Keras attention models including botnet,CoaT,CoAtNet,CMT,cotnet,halonet,resnest,resnext,resnetd,volo,mlp-mixer,resmlp,gmlp,levit

Keras_cv_attention_models Keras_cv_attention_models Usage Basic Usage Layers Model surgery AotNet ResNetD ResNeXt ResNetQ BotNet VOLO ResNeSt HaloNet

Curvlearn, a Tensorflow based non-Euclidean deep learning framework.

English | 简体中文 Why Non-Euclidean Geometry Considering these simple graph structures shown below. Nodes with same color has 2-hop distance whereas 1-ho

![[ICCV 2021] Counterfactual Attention Learning for Fine-Grained Visual Categorization and Re-identification](https://github.com/raoyongming/CAL/raw/master/figs/intro.png)

[ICCV 2021] Counterfactual Attention Learning for Fine-Grained Visual Categorization and Re-identification

Counterfactual Attention Learning Created by Yongming Rao*, Guangyi Chen*, Jiwen Lu, Jie Zhou This repository contains PyTorch implementation for ICCV

OptaPlanner wrappers for Python. Currently significantly slower than OptaPlanner in Java or Kotlin.

OptaPy is an AI constraint solver for Python to optimize the Vehicle Routing Problem, Employee Rostering, Maintenance Scheduling, Task Assignment, School Timetabling, Cloud Optimization, Conference Scheduling, Job Shop Scheduling, Bin Packing and many more planning problems.

It's a Discord bot to control your PC using your Discord Channel or using Reco: Discord PC Remote Controller App.

Reco PC Server Reco PC Server is a cross platform PC Controller Discord Bot which is a modified and improved version of Chimera for Reco-Discord PC Re

【Arxiv】Exploring Separable Attention for Multi-Contrast MR Image Super-Resolution

SANet Exploring Separable Attention for Multi-Contrast MR Image Super-Resolution Dependencies numpy==1.18.5 scikit_image==0.16.2 torchvision==0.8.1 to

Normal Learning in Videos with Attention Prototype Network

Codes_APN Official codes of CVPR21 paper: Normal Learning in Videos with Attention Prototype Network (https://arxiv.org/abs/2108.11055) Overview of ou

Variational Attention: Propagating Domain-Specific Knowledge for Multi-Domain Learning in Crowd Counting (ICCV, 2021)

DKPNet ICCV 2021 Variational Attention: Propagating Domain-Specific Knowledge for Multi-Domain Learning in Crowd Counting Baseline of DKPNet is availa

![[ICCV 2021] Counterfactual Attention Learning for Fine-Grained Visual Categorization and Re-identification](https://github.com/raoyongming/CAL/raw/master/figs/intro.png)

[ICCV 2021] Counterfactual Attention Learning for Fine-Grained Visual Categorization and Re-identification

Counterfactual Attention Learning Created by Yongming Rao*, Guangyi Chen*, Jiwen Lu, Jie Zhou This repository contains PyTorch implementation for ICCV

Asynchronous and also synchronous non-official QvaPay client for asyncio and Python language.

Asynchronous and also synchronous non-official QvaPay client for asyncio and Python language. This library is still under development, the interface could be changed.

Efficient Conformer: Progressive Downsampling and Grouped Attention for Automatic Speech Recognition

Efficient Conformer: Progressive Downsampling and Grouped Attention for Automatic Speech Recognition Official implementation of the Efficient Conforme

Mysterium the first tool which permits you to retrieve the most part of a Python code even the .py or .pyc was extracted from an executable file, even it is encrypted with every existing encryptage. Mysterium don't make any difference between encrypted and non encrypted files, it can retrieve code from Pyarmor or .pyc files.

Mysterium the first tool which permits you to retrieve the most part of a Python code even the .py or .pyc was extracted from an executable file, even it is encrypted with every existing encryptage. Mysterium don't make any difference between encrypted and non encrypted files, it can retrieve code from Pyarmor or .pyc files.

git-partial-submodule is a command-line script for setting up and working with submodules while enabling them to use git's partial clone and sparse checkout features.

Partial Submodules for Git git-partial-submodule is a command-line script for setting up and working with submodules while enabling them to use git's

Implementation for our ICCV 2021 paper: Dual-Camera Super-Resolution with Aligned Attention Modules

DCSR: Dual Camera Super-Resolution Implementation for our ICCV 2021 oral paper: Dual-Camera Super-Resolution with Aligned Attention Modules paper | pr

Decentralized Reinforcment Learning: Global Decision-Making via Local Economic Transactions (ICML 2020)

Decentralized Reinforcement Learning This is the code complementing the paper Decentralized Reinforcment Learning: Global Decision-Making via Local Ec

![[ICCV 2021 (oral)] Planar Surface Reconstruction from Sparse Views](https://github.com/jinlinyi/SparsePlanes/raw/main/docs/static/architecture.jpg)

[ICCV 2021 (oral)] Planar Surface Reconstruction from Sparse Views

Planar Surface Reconstruction From Sparse Views Linyi Jin, Shengyi Qian, Andrew Owens, David F. Fouhey University of Michigan ICCV 2021 (Oral) This re

![[ICCV 2021] Encoder-decoder with Multi-level Attention for 3D Human Shape and Pose Estimation](https://github.com/ziniuwan/maed/raw/master/doc/figs/net_arch.png)

[ICCV 2021] Encoder-decoder with Multi-level Attention for 3D Human Shape and Pose Estimation

MAED: Encoder-decoder with Multi-level Attention for 3D Human Shape and Pose Estimation Getting Started Our codes are implemented and tested with pyth

Adaptive Pyramid Context Network for Semantic Segmentation (APCNet CVPR'2019)

Adaptive Pyramid Context Network for Semantic Segmentation (APCNet CVPR'2019) Introduction Official implementation of Adaptive Pyramid Context Network

multi-label,classifier,text classification,多标签文本分类,文本分类,BERT,ALBERT,multi-label-classification,seq2seq,attention,beam search

multi-label,classifier,text classification,多标签文本分类,文本分类,BERT,ALBERT,multi-label-classification,seq2seq,attention,beam search

.](https://github.com/ypeleg/Fastformer-Keras/raw/master/model_arch.jpg)

Unofficial Tensorflow-Keras implementation of Fastformer based on paper [Fastformer: Additive Attention Can Be All You Need](https://arxiv.org/abs/2108.09084).

Fastformer-Keras Unofficial Tensorflow-Keras implementation of Fastformer based on paper Fastformer: Additive Attention Can Be All You Need. Tensorflo

Implementation for our ICCV 2021 paper: Dual-Camera Super-Resolution with Aligned Attention Modules

DCSR: Dual Camera Super-Resolution Implementation for our ICCV 2021 oral paper: Dual-Camera Super-Resolution with Aligned Attention Modules paper | pr

An implementation of Fastformer: Additive Attention Can Be All You Need in TensorFlow

Fast Transformer This repo implements Fastformer: Additive Attention Can Be All You Need by Wu et al. in TensorFlow. Fast Transformer is a Transformer

ColabFold / AlphaFold2_advanced on your local PC (or macOS)

LocalColabFold ColabFold / AlphaFold2_advanced on your local PC (or macOS) Installation For Linux Make sure curl and wget commands are already install

[ICCV 2021] Released code for Causal Attention for Unbiased Visual Recognition

CaaM This repo contains the codes of training our CaaM on NICO/ImageNet9 dataset. Due to my recent limited bandwidth, this codebase is still messy, wh

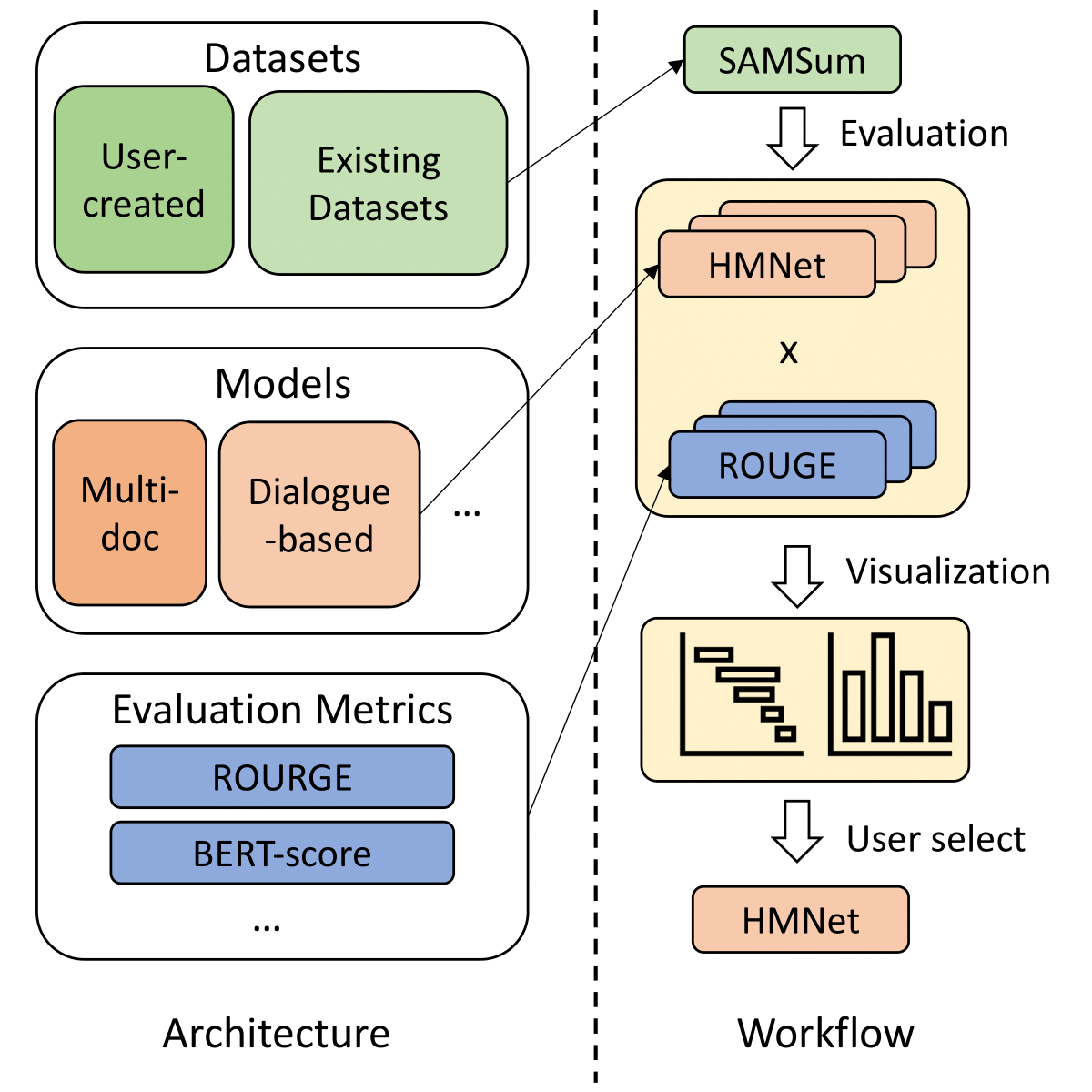

SummerTime - Text Summarization Toolkit for Non-experts

A library to help users choose appropriate summarization tools based on their specific tasks or needs. Includes models, evaluation metrics, and datasets.

Official code of ICCV2021 paper "Residual Attention: A Simple but Effective Method for Multi-Label Recognition"

CSRA This is the official code of ICCV 2021 paper: Residual Attention: A Simple But Effective Method for Multi-Label Recoginition Demo, Train and Vali

🌈 PyTorch Implementation for EMNLP'21 Findings "Reasoning Visual Dialog with Sparse Graph Learning and Knowledge Transfer"

SGLKT-VisDial Pytorch Implementation for the paper: Reasoning Visual Dialog with Sparse Graph Learning and Knowledge Transfer Gi-Cheon Kang, Junseok P

Unofficial PyTorch implementation of Fastformer based on paper "Fastformer: Additive Attention Can Be All You Need"."

Fastformer-PyTorch Unofficial PyTorch implementation of Fastformer based on paper Fastformer: Additive Attention Can Be All You Need. Usage : import t

Unofficial implementation of Perceiver IO: A General Architecture for Structured Inputs & Outputs

Perceiver IO Unofficial implementation of Perceiver IO: A General Architecture for Structured Inputs & Outputs Usage import torch from src.perceiver.

PrimitiveNet: Primitive Instance Segmentation with Local Primitive Embedding under Adversarial Metric (ICCV 2021)

PrimitiveNet Source code for the paper: Jingwei Huang, Yanfeng Zhang, Mingwei Sun. [PrimitiveNet: Primitive Instance Segmentation with Local Primitive

Sink is a CLI tool that allows users to synchronize their local folders to their Google Drives. It is similar to the Git CLI and allows fast and reliable syncs with the drive.

Sink is a CLI synchronisation tool that enables a user to synchronise local system files and folders with their Google Drives. It follows a git C

Sparse R-CNN: End-to-End Object Detection with Learnable Proposals, CVPR2021

End-to-End Object Detection with Learnable Proposal, CVPR2021

[ICCV 2021] Official Pytorch implementation for Discriminative Region-based Multi-Label Zero-Shot Learning SOTA results on NUS-WIDE and OpenImages

Discriminative Region-based Multi-Label Zero-Shot Learning (ICCV 2021) [arXiv][Project page coming soon] Sanath Narayan*, Akshita Gupta*, Salman Kh

Implementation of Fast Transformer in Pytorch

Fast Transformer - Pytorch Implementation of Fast Transformer in Pytorch. This only work as an encoder. Yannic video AI Epiphany Install $ pip install

StarGANv2-VC: A Diverse, Unsupervised, Non-parallel Framework for Natural-Sounding Voice Conversion

StarGANv2-VC: A Diverse, Unsupervised, Non-parallel Framework for Natural-Sounding Voice Conversion Yinghao Aaron Li, Ali Zare, Nima Mesgarani We pres

[ICCV 2021] Official Pytorch implementation for Discriminative Region-based Multi-Label Zero-Shot Learning SOTA results on NUS-WIDE and OpenImages

Discriminative Region-based Multi-Label Zero-Shot Learning (ICCV 2021) [arXiv][Project page coming soon] Sanath Narayan*, Akshita Gupta*, Salman Kh

Intent parsing and slot filling in PyTorch with seq2seq + attention

PyTorch Seq2Seq Intent Parsing Reframing intent parsing as a human - machine translation task. Work in progress successor to torch-seq2seq-intent-pars

A PyTorch implementation of the Transformer model in "Attention is All You Need".

Attention is all you need: A Pytorch Implementation This is a PyTorch implementation of the Transformer model in "Attention is All You Need" (Ashish V

Implementation of Fast Transformer in Pytorch

Fast Transformer - Pytorch Implementation of Fast Transformer in Pytorch. This only work as an encoder. Yannic video AI Epiphany Install $ pip install

Generate YARA rules for OOXML documents using ZIP local header metadata.

apooxml Generate YARA rules for OOXML documents using ZIP local header metadata. To learn more about this tool and the methodology behind it, check ou

Public API client for GETTR, a "non-bias [sic] social network," designed for data archival and analysis.

GoGettr GoGettr is an API client for GETTR, a "non-bias [sic] social network." (We will not reward their domain with a hyperlink.) GoGettr is built an

Official code for paper "Demystifying Local Vision Transformer: Sparse Connectivity, Weight Sharing, and Dynamic Weight"

Demysitifing Local Vision Transformer, arxiv This is the official PyTorch implementation of our paper. We simply replace local self attention by (dyna

Official code for "Focal Self-attention for Local-Global Interactions in Vision Transformers"

Focal Transformer This is the official implementation of our Focal Transformer -- "Focal Self-attention for Local-Global Interactions in Vision Transf

[ICCV'21] NEAT: Neural Attention Fields for End-to-End Autonomous Driving

NEAT: Neural Attention Fields for End-to-End Autonomous Driving Paper | Supplementary | Video | Poster | Blog This repository is for the ICCV 2021 pap

Local trajectory planner based on a multilayer graph framework for autonomous race vehicles.

Graph-Based Local Trajectory Planner The graph-based local trajectory planner is python-based and comes with open interfaces as well as debug, visuali

Context Axial Reverse Attention Network for Small Medical Objects Segmentation

CaraNet: Context Axial Reverse Attention Network for Small Medical Objects Segmentation This repository contains the implementation of a novel attenti

PyTorch code for our ECCV 2018 paper "Image Super-Resolution Using Very Deep Residual Channel Attention Networks"

PyTorch code for our ECCV 2018 paper "Image Super-Resolution Using Very Deep Residual Channel Attention Networks"

Compute descriptors for 3D point cloud registration using a multi scale sparse voxel architecture

MS-SVConv : 3D Point Cloud Registration with Multi-Scale Architecture and Self-supervised Fine-tuning Compute features for 3D point cloud registration

LONG-TERM SERIES FORECASTING WITH QUERYSELECTOR – EFFICIENT MODEL OF SPARSEATTENTION

Query Selector Here you can find code and data loaders for the paper https://arxiv.org/pdf/2107.08687v1.pdf . Query Selector is a novel approach to sp

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

The tl;dr on a few notable transformer/language model papers + other papers (alignment, memorization, etc).

Stacked Hourglass Network with a Multi-level Attention Mechanism: Where to Look for Intervertebral Disc Labeling

⚠️ A more recent and actively-maintained version of this code is available in ivadomed Stacked Hourglass Network with a Multi-level Attention Mech

Official code for "Stereo Waterdrop Removal with Row-wise Dilated Attention (IROS2021)"

Stereo-Waterdrop-Removal-with-Row-wise-Dilated-Attention This repository includes official codes for "Stereo Waterdrop Removal with Row-wise Dilated A

Automatic Calibration for Non-repetitive Scanning Solid-State LiDAR and Camera Systems

ACSC Automatic extrinsic calibration for non-repetitive scanning solid-state LiDAR and camera systems. System Architecture 1. Dependency Tested with U

SlotRefine: A Fast Non-Autoregressive Model forJoint Intent Detection and Slot Filling

SlotRefine: A Fast Non-Autoregressive Model for Joint Intent Detection and Slot Filling Reference Main paper to be cited (Di Wu et al., 2020) @article

PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis

Daft-Exprt - PyTorch Implementation PyTorch Implementation of Daft-Exprt: Robust Prosody Transfer Across Speakers for Expressive Speech Synthesis The

Alternative firmware for ESP8266 with easy configuration using webUI, OTA updates, automation using timers or rules, expandability and entirely local control over MQTT, HTTP, Serial or KNX. Full documentation at

Alternative firmware for ESP8266/ESP32 based devices with easy configuration using webUI, OTA updates, automation using timers or rules, expandability

PyTorch implementation of paper: AdaAttN: Revisit Attention Mechanism in Arbitrary Neural Style Transfer, ICCV 2021.

AdaAttN: Revisit Attention Mechanism in Arbitrary Neural Style Transfer [Paper] [PyTorch Implementation] [Paddle Implementation] Overview This reposit

Docker container to expose a local RTMP, RTSP, and HLS stream for all your Wyze cameras including v3

Docker container to expose a local RTMP, RTSP, and HLS stream for all your Wyze cameras including v3. No Third-party or special firmware required.

Investigating Attention Mechanism in 3D Point Cloud Object Detection (arXiv 2021)

Investigating Attention Mechanism in 3D Point Cloud Object Detection (arXiv 2021) This repository is for the following paper: "Investigating Attention

Scenic: A Jax Library for Computer Vision and Beyond

Scenic Scenic is a codebase with a focus on research around attention-based models for computer vision. Scenic has been successfully used to develop c

Code for paper "ASAP-Net: Attention and Structure Aware Point Cloud Sequence Segmentation"

ASAP-Net This project implements ASAP-Net of paper ASAP-Net: Attention and Structure Aware Point Cloud Sequence Segmentation (BMVC2020). Overview We i

Differentiable Neural Computers, Sparse Access Memory and Sparse Differentiable Neural Computers, for Pytorch

Differentiable Neural Computers and family, for Pytorch Includes: Differentiable Neural Computers (DNC) Sparse Access Memory (SAM) Sparse Differentiab

A non-custodial oracle and escrow system for the lightning network. Make LN contracts more expressive.

Hodl contracts A non-custodial oracle and escrow system for the lightning network. Make LN contracts more expressive. If you fire it up, be aware: (1)

The code for our paper CrossFormer: A Versatile Vision Transformer Based on Cross-scale Attention.

CrossFormer This repository is the code for our paper CrossFormer: A Versatile Vision Transformer Based on Cross-scale Attention. Introduction Existin

MPV remote controller is a program for remote controlling mpv player with device in your local network through web browser.

MPV remote controller is a program for remote controlling mpv player with device in your local network through web browser.

An efficient PyTorch implementation of the winning entry of the 2017 VQA Challenge.

Bottom-Up and Top-Down Attention for Visual Question Answering An efficient PyTorch implementation of the winning entry of the 2017 VQA Challenge. The

pytorch implementation of Attention is all you need

A Pytorch Implementation of the Transformer: Attention Is All You Need Our implementation is largely based on Tensorflow implementation Requirements N

Train an RL agent to execute natural language instructions in a 3D Environment (PyTorch)

Gated-Attention Architectures for Task-Oriented Language Grounding This is a PyTorch implementation of the AAAI-18 paper: Gated-Attention Architecture

PyTorch Implementation of "Non-Autoregressive Neural Machine Translation"

Non-Autoregressive Transformer Code release for Non-Autoregressive Neural Machine Translation by Jiatao Gu, James Bradbury, Caiming Xiong, Victor O.K.

A Structured Self-attentive Sentence Embedding

Structured Self-attentive sentence embeddings Implementation for the paper A Structured Self-Attentive Sentence Embedding, which was published in ICLR

Bilinear attention networks for visual question answering

Bilinear Attention Networks This repository is the implementation of Bilinear Attention Networks for the visual question answering and Flickr30k Entit

This project is a re-implementation of MASTER: Multi-Aspect Non-local Network for Scene Text Recognition by MMOCR

This project is a re-implementation of MASTER: Multi-Aspect Non-local Network for Scene Text Recognition by MMOCR,which is an open-source toolbox based on PyTorch. The overall architecture will be shown below.

A small Blender addon for changing an object's local orientation while in edit mode

A small Blender addon for changing an object's local orientation while in edit mode.

A PyTorch Implementation of the Luna: Linear Unified Nested Attention

Unofficial PyTorch implementation of Luna: Linear Unified Nested Attention The quadratic computational and memory complexities of the Transformer’s at

Implementation of H-Transformer-1D, Hierarchical Attention for Sequence Learning

H-Transformer-1D Implementation of H-Transformer-1D, Transformer using hierarchical Attention for sequence learning with subquadratic costs. For now,

🍀 Pytorch implementation of various Attention Mechanisms, MLP, Re-parameter, Convolution, which is helpful to further understand papers.⭐⭐⭐

🍀 Pytorch implementation of various Attention Mechanisms, MLP, Re-parameter, Convolution, which is helpful to further understand papers.⭐⭐⭐

An all-in-one application to visualize multiple different local path planning algorithms

Table of Contents Table of Contents Local Planner Visualization Project (LPVP) Features Installation/Usage Local Planners Probabilistic Roadmap (PRM)

Vericopy - This Python script provides various usage modes for secure local file copying and hashing.

Vericopy This Python script provides various usage modes for secure local file copying and hashing. Hash data is captured and logged for paths before

This is an official implementation of "Polarized Self-Attention: Towards High-quality Pixel-wise Regression"

Polarized Self-Attention: Towards High-quality Pixel-wise Regression This is an official implementation of: Huajun Liu, Fuqiang Liu, Xinyi Fan and Don

PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

VAENAR-TTS - PyTorch Implementation PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

Locally Enhanced Self-Attention: Rethinking Self-Attention as Local and Context Terms

LESA Introduction This repository contains the official implementation of Locally Enhanced Self-Attention: Rethinking Self-Attention as Local and Cont

Install alphafold on the local machine, get out of docker.

AlphaFold This package provides an implementation of the inference pipeline of AlphaFold v2.0. This is a completely new model that was entered in CASP